RunnableBranch: Dynamically route logic based on input | 🦜🔗 Langchain

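Routing logic dynamically based on input means the output of the previous step selects the next step, which makes it possible to build non-deterministic chains. Routing helps keep the interaction with the LLM structured and consistent across the different routes.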

There are two ways to perform routing:

1. Conditionally return a runnable from a RunnableLambda (recommended)

2. Use a RunnableBranch

from langchain_community.chat_models import ChatOpenAI
from langchain_core.output_parsers import StrOutputParser
from langchain_core.prompts import PromptTemplate

model = ChatOpenAI(model="gpt-3.5-turbo", temperature=0)

chain = (PromptTemplate.from_template("""根據用戶的問題, 把它歸為關于 `LangChain`, `OpenAI`, or `Other`.不要回答其他字

<question>

{question}

</question>

歸為:

""")| model| StrOutputParser()

)response=chain.invoke({"question": "怎么調用ChatOpenAI"})

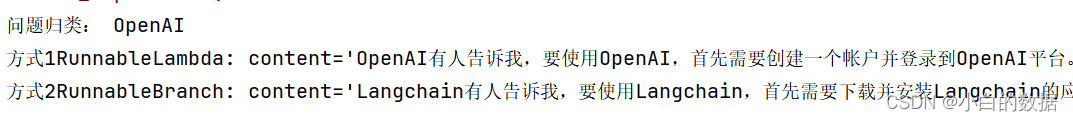

print('問題歸類:',response)
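The classifier returns free text, so the label can arrive with extra whitespace, backticks, or a trailing period. A minimal sketch of normalizing it before routing, assuming the three labels used in the prompt above. `normalize_topic` and `KNOWN_TOPICS` are hypothetical names for illustration, not part of LangChain.

```python
# Hypothetical helper (not a LangChain API): map raw classifier text to a known label.
KNOWN_TOPICS = ("openai", "langchain")


def normalize_topic(raw: str) -> str:
    """Return 'openai', 'langchain', or 'other' for a raw classifier reply."""
    # Drop surrounding whitespace, then stray quotes, backticks, periods, and spaces.
    cleaned = raw.strip().strip("`'\". ").lower()
    for topic in KNOWN_TOPICS:
        if topic in cleaned:
            return topic
    # Anything unrecognized falls back to the general route.
    return "other"


if __name__ == "__main__":
    print(normalize_topic(" `OpenAI`.\n"))
```

Passing the normalized label to the router keeps the substring checks predictable even when the model adds punctuation around its answer.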

# Define one chain per topic
langchain_chain = (
    PromptTemplate.from_template(
        """You are an expert in LangChain. \
Always begin your answer with "Someone at LangChain told me". \
Question: {question}
Answer:"""
    )
    | model
)

openai_chain = (
    PromptTemplate.from_template(
        """You are an expert in OpenAI. \
Always begin your answer with "Someone at OpenAI told me". \
Question: {question}
Answer:"""
    )
    | model
)

general_chain = (
    PromptTemplate.from_template(
        """Answer the following question:
Question: {question}
Answer:"""
    )
    | model
)

def route(info):
    # Pick the chain that matches the classified topic (case-insensitive)
    if "openai" in info["topic"].lower():
        return openai_chain
    elif "langchain" in info["topic"].lower():
        return langchain_chain
    else:
        return general_chain

# Method 1: RunnableLambda
from langchain_core.runnables import RunnableLambda

full_chain = {"topic": chain, "question": lambda x: x["question"]} | RunnableLambda(route)

response = full_chain.invoke({"question": "How do I use openAi?"})
print('Method 1 (RunnableLambda):', response)
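In the RunnableLambda approach, `route` returns a chain rather than a final answer. When a function wrapped in `RunnableLambda` returns a runnable, that runnable is then invoked with the same input. The plain-Python sketch below imitates this dispatch without any LangChain dependency. `invoke_with_routing`, `route_stub`, and the three stub handlers are hypothetical stand-ins for `RunnableLambda`, `route`, and the three chains.

```python
from typing import Any, Callable, Dict

Info = Dict[str, str]


# Stub handlers standing in for openai_chain, langchain_chain, and general_chain.
def openai_stub(info: Info) -> str:
    return f"[openai] {info['question']}"


def langchain_stub(info: Info) -> str:
    return f"[langchain] {info['question']}"


def general_stub(info: Info) -> str:
    return f"[general] {info['question']}"


def route_stub(info: Info) -> Callable[[Info], str]:
    """Same decision logic as route, but returning the stub handlers."""
    topic = info["topic"].lower()
    if "openai" in topic:
        return openai_stub
    if "langchain" in topic:
        return langchain_stub
    return general_stub


def invoke_with_routing(func: Callable[[Info], Any], info: Info) -> Any:
    """Call func; if it returns another callable step, run that step on the same input."""
    result = func(info)
    return result(info) if callable(result) else result


if __name__ == "__main__":
    print(invoke_with_routing(route_stub, {"topic": "OpenAI", "question": "How do I use it?"}))
```

Because the router only decides which step runs next, it can be exercised with stubs like these before any model call is involved.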

# Method 2: RunnableBranch
from langchain_core.runnables import RunnableBranch

# Each (condition, runnable) pair is checked in order; the last argument is the default
branch = RunnableBranch(
    (lambda x: "openai" in x["topic"].lower(), openai_chain),
    (lambda x: "langchain" in x["topic"].lower(), langchain_chain),
    general_chain,
)

full_chain = {"topic": chain, "question": lambda x: x["question"]} | branch

response = full_chain.invoke({"question": "How do I use lanGchaiN?"})
print('Method 2 (RunnableBranch):', response)
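`RunnableBranch` takes a list of (condition, runnable) pairs plus a default, and runs the runnable paired with the first condition that evaluates to true. The sketch below shows that selection rule in plain Python. `run_branch` is a hypothetical stand-in written for illustration, not the library's implementation.

```python
from typing import Any, Callable, Dict, Sequence, Tuple

Info = Dict[str, str]
Condition = Callable[[Info], bool]
Handler = Callable[[Info], Any]


def run_branch(
    branches: Sequence[Tuple[Condition, Handler]],
    default: Handler,
    value: Info,
) -> Any:
    """Run the handler of the first matching condition, else the default."""
    for condition, handler in branches:
        if condition(value):
            return handler(value)
    return default(value)


# Illustrative branches mirroring the topic checks used for routing.
branches = [
    (lambda x: "openai" in x["topic"].lower(), lambda x: "openai route"),
    (lambda x: "langchain" in x["topic"].lower(), lambda x: "langchain route"),
]


def default_handler(x: Info) -> str:
    return "general route"


if __name__ == "__main__":
    print(run_branch(branches, default_handler, {"topic": "LangChain"}))
```

Order matters under this rule: a topic string that satisfies more than one condition is handled by whichever pair comes first.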
