進行數據處理之后,我們得到了x_train和y_train,我們就可以用來進行回歸或分類模型訓練啦~

一、模型選擇

我們這里可能使用的是回歸模型(Regression),值得注意的是,回歸和分類不分家。分類是預測離散值,回歸是預測連續值,差不多。

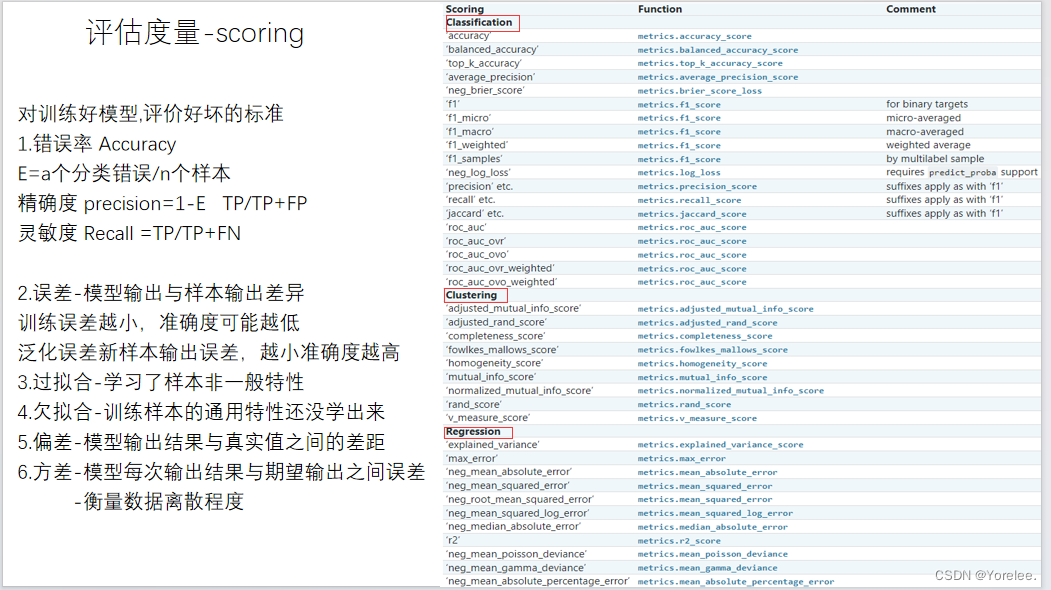

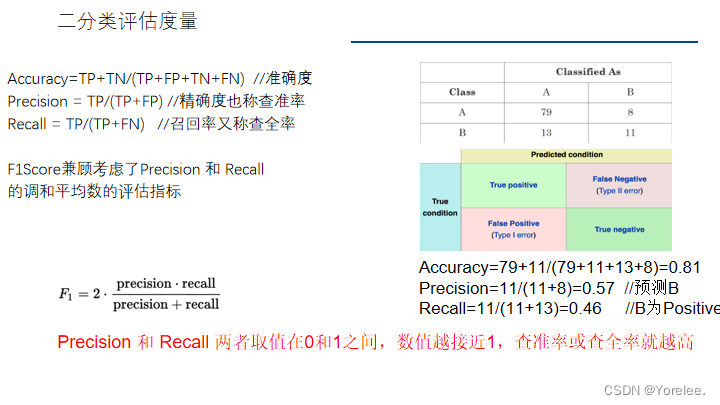

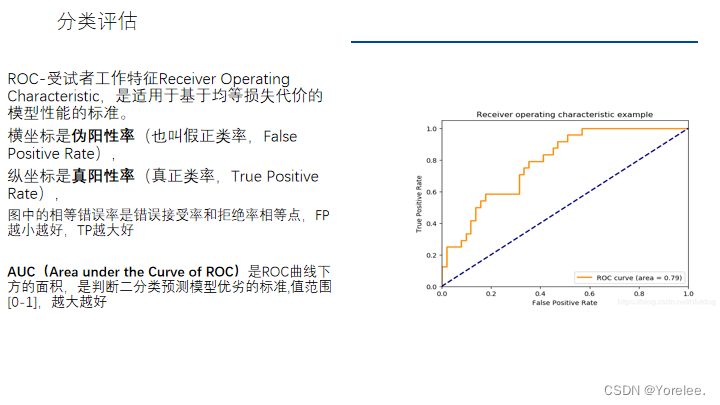

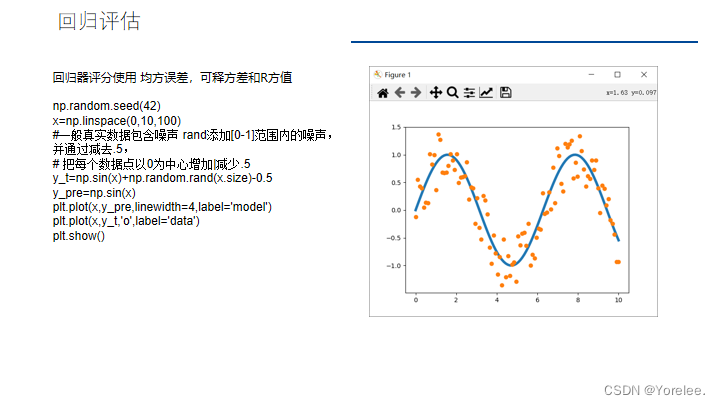

展示這些是為了看到,不同的任務,可能需要不用的評分標準,評分時別亂套用。

評估度量

一、默認參數模型對比

一、無標準化

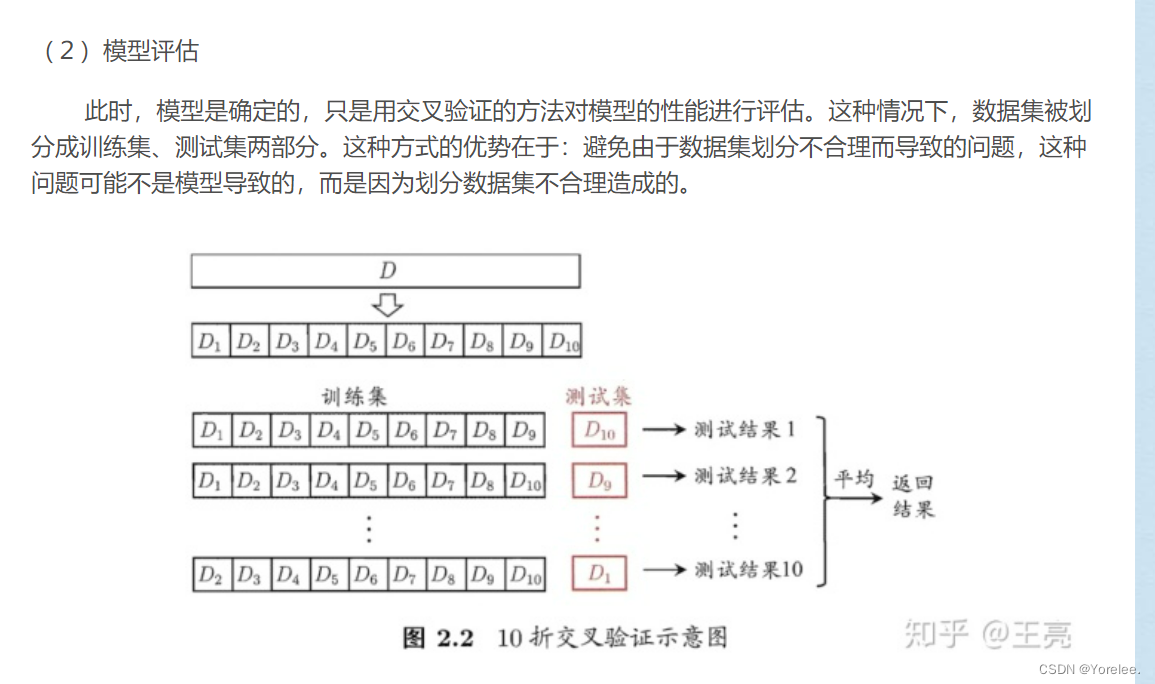

①K折交叉驗證? 模型評估

K折交叉驗證?模型評估,不重復抽樣將原始數據隨機分為 k 份。每一次挑選其中 1 份作為訓練集,剩余 k-1 份作為訓練集用于模型訓練。計算測試集上的得分。在這種情況下它只是用來計算模型得分的。

(這里說的測試集,嚴格上來講屬于整個機器學習過程中的驗證集)

回歸任務:

from sklearn.linear_model import LinearRegression

from sklearn.linear_model import Lasso

from sklearn.linear_model import ElasticNet

from sklearn.tree import DecisionTreeRegressor

from sklearn.neighbors import KNeighborsRegressor

from sklearn.svm import SVR

from sklearn.metrics import mean_squared_error #MSE

from sklearn.model_selection import cross_val_scoremodels = {} #dict={k:v,k1:v1} list=[1,2,3,4] set=(1,2,3,4)

models['LR'] = LinearRegression()

models['LASSO'] = Lasso()

models['EN'] = ElasticNet()

models['KNN'] = KNeighborsRegressor()

models['CART'] = DecisionTreeRegressor()

models['SVM'] = SVR()scoring='neg_mean_squared_error'

#回歸模型,這里用均方誤差得分作為模型的優化標準,模型會盡量使得該指標最好#比較均方差

results=[]

for key in models:cv_result=cross_val_score(models[key],x_train,y_train,cv=10,scoring=scoring)

#cv指定的是交叉驗證的折數,k折交叉驗證會將訓練集分成k部分,然后每個部分計算一個分數。results.append(cv_result) #list.append(t),這行語句的目的是為了以后畫圖用,但實際上print就能看到分數啦print('%s: %f (%f)'%(key,cv_result.mean(),cv_result.std()))#model = RandomForestClassifier(n_estimators= n_estimators)

#model.fit(X_train,y_train)

#mse = mean_squared_error(y_test,model.predict(X_test))分類任務:(XGB和LGB是集成模型)

from lightgbm import LGBMClassifier

from xgboost import XGBClassifier

from sklearn.ensemble import RandomForestClassifier

from sklearn.model_selection import cross_val_scoremodels = {} #dict={k:v,k1:v1} list=[1,2,3,4] set=(1,2,3,4)

models['RFC'] = RandomForestClassifier()

models['XGB'] = XGBClassifier()

models['LGB'] = LGBMClassifier(verbose= -1)scoring='roc_auc'

#使用AUC評估#比較均方差

results=[]

for key in models:cv_result=cross_val_score(models[key],x_train,y_train,cv=10,scoring=scoring)

#cv指定的是交叉驗證的折數,k折交叉驗證會將訓練集分成k部分,然后每個部分計算一個分數。print('%s: %f (%f)'%(key,cv_result.mean(),cv_result.std()))②先對模型進行訓練,然后計算得分

分類問題:

from lightgbm import LGBMClassifier

model = LGBMClassifier(verbose= -1)

model.fit(X_train,y_train)

#具體算什么得分,就靠你自己選了

#model.score() 函數通常計算的是模型的準確度(accuracy)

print('LGBM的訓練集得分:{}'.format(model.score(X_train,y_train)))

print('LGBM的測試集得分:{}'.format(model.score(X_test,y_test)))from sklearn.metrics import roc_auc_score# 預測概率

y_train_proba = model.predict_proba(X_train)[:, 1]

y_test_proba = model.predict_proba(X_test)[:, 1]# 計算訓練集和測試集的AUC

train_auc = roc_auc_score(y_train, y_train_proba)

test_auc = roc_auc_score(y_test, y_test_proba)print('LGBM的訓練集AUC:{:.4f}'.format(train_auc))

print('LGBM的測試集AUC:{:.4f}'.format(test_auc))

回歸問題:

model = RandomForestRegressor(n_estimators= n_estimators)

model.fit(X_train,y_train)

mse = mean_squared_error(y_test,model.predict(X_test))

print('RandomForest_Regressor的訓練集得分:{}'.format(model.score(X_train,y_train)))

print('RandomForest_Regressor的測試集得分:{}'.format(model.score(X_test,y_test)))

print('RandomForest_Regressor的mse得分:{}'.format(model.score(X_test,y_test)))二、pipeline正態化再訓練

Pipeline類似于一個管道,輸入的數據會從管道起始位置輸入,然后依次經過管道中的每一個部分最后輸出。沒啥區別其實()

from sklearn.pipeline import Pipeline

pipelines={}

pipelines['ScalerLR']=Pipeline([('Scaler',StandardScaler()),('LR',LinearRegression())])

pipelines['ScalerLASSO']=Pipeline([('Scaler',StandardScaler()),('LASSO',Lasso())])

pipelines['ScalerEN'] = Pipeline([('Scaler', StandardScaler()), ('EN', ElasticNet())])

pipelines['ScalerKNN'] = Pipeline([('Scaler', StandardScaler()), ('KNN', KNeighborsRegressor())])

pipelines['ScalerCART'] = Pipeline([('Scaler', StandardScaler()), ('CART', DecisionTreeRegressor())])

pipelines['ScalerSVM'] = Pipeline([('Scaler', StandardScaler()), ('SVM', SVR())])

#這個pipeline包含一個正態化~scoring='neg_mean_squared_error'results=[]

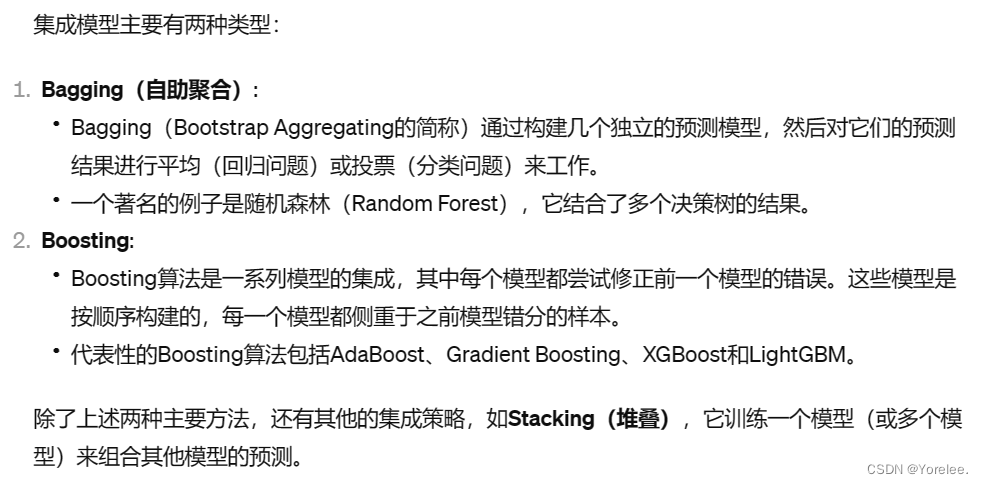

for key in pipelines:kfold=KFold(n_splits=num_flods,random_state=feed)cv_result=cross_val_score(pipelines[key],x_train,y_train,cv=kfold,scoring=scoring)results.append(cv_result)print('pipeline %s: %f (%f)'%(key,cv_result.mean(),cv_result.std()))二、集成模型

#調參外,提高模型準確度是使用集成算法。

import numpy as np

from sklearn.model_selection import KFold, cross_val_score

from sklearn.pipeline import Pipeline

from sklearn.preprocessing import StandardScaler

from sklearn.ensemble import AdaBoostRegressor, RandomForestRegressor, GradientBoostingRegressor, ExtraTreesRegressor

from sklearn.neighbors import KNeighborsRegressor

from sklearn.linear_model import LinearRegressionnum_folds = 10

seed = 7

scoring = 'neg_mean_squared_error'ensembles = {}

ensembles['ScaledAB'] = Pipeline([('Scaler', StandardScaler()), ('AB', AdaBoostRegressor())])

ensembles['ScaledAB-KNN'] = Pipeline([('Scaler', StandardScaler()),('ABKNN', AdaBoostRegressor(base_estimator=KNeighborsRegressor(n_neighbors=3)))])

ensembles['ScaledAB-LR'] = Pipeline([('Scaler', StandardScaler()), ('ABLR', AdaBoostRegressor(LinearRegression()))])

ensembles['ScaledRFR'] = Pipeline([('Scaler', StandardScaler()), ('RFR', RandomForestRegressor())])

ensembles['ScaledETR'] = Pipeline([('Scaler', StandardScaler()), ('ETR', ExtraTreesRegressor())])

ensembles['ScaledGBR'] = Pipeline([('Scaler', StandardScaler()), ('RBR', GradientBoostingRegressor())])results = []

for key in ensembles:kfold = KFold(n_splits=num_flods, random_state=feed)cv_result = cross_val_score(ensembles[key], x_train, y_train, cv=kfold, scoring=scoring)results.append(cv_result)print('%s: %f (%f)' % (key, cv_result.mean(), cv_result.std()))二、模型優化(網格搜索,隨便寫寫)

使用GridSearchCV進行網格搜索

一、普通模型網格搜索——隨機森林

from sklearn.model_selection import GridSearchCV

from sklearn.ensemble import RandomForestRegressorparam_grid= {'n_estimators':[1,5,10,15,20,25,30,35,40,45,50,55,60,65,70,75,80,85,90,95,100,125,150,200],'max_features':('auto','sqrt','log2')}

#最佳迭代次數

#最大特征數

m = GridSearchCV(RandomForestRegressor(random_state=827),param_grid)

m = m.fit(X_train,y_train)

mse = mean_squared_error(y_test,m.predict(X_test))

print("該參數下得到的MSE值為:{}".format(mse))

print("該參數下得到的最佳得分為:{}".format(m.best_score_))

print("最佳參數為:{}".format(m.best_params_))二、集成模型網格搜索——XGB

%%time

from xgboost import XGBClassifier

from sklearn.model_selection import GridSearchCV

# xgb 網格搜索,參數調優

# c初始參數

params = {'learning_rate': 0.1, 'n_estimators': 500, 'max_depth': 5, 'min_child_weight': 1, 'seed': 0,'subsample': 0.8, 'colsample_bytree': 0.8, 'gamma': 0, 'reg_alpha': 0, 'reg_lambda': 1}

XGB_Regressor_Then_params = {'learning_rate': 0.1, 'n_estimators': 200, 'max_depth': 6, 'min_child_weight': 9, 'seed': 0,'subsample': 0.8, 'colsample_bytree': 0.8, 'gamma': 0.3, 'reg_alpha': 0, 'reg_lambda': 1}

# 最佳迭代次數:n_estimators、min_child_weight 、最大深度 max_depth、后剪枝參數 gamma、樣本采樣subsample 、 列采樣colsample_bytree

# L1正則項參數reg_alpha 、 L2正則項參數reg_lambda、學習率learning_rate

param_grid= {'n_estimators':[50,100,150,200,250,300,350,400,450,500,550,600,650,700,750,800,850,900,950,1000,1250,1500,1750,2000],}

m = GridSearchCV(XGBClassifier(objective ='reg:squarederror',**params),param_grid,scoring='roc_auc')

m = m.fit(X_train,y_train)

mse = mean_squared_error(y_test, m.predict(X_test))

print('該參數下得到的最佳AUC為:{}'.format(m.best_score_))

print('最佳參數為:{}'.format(m.best_params_))print('XGB的AUC圖:')

lr_fpr, lr_tpr, lr_thresholds = roc_curve(y_test,m.predict_proba(X_test)[:,1])

lr_roc_auc = metrics.auc(lr_fpr, lr_tpr)

plt.figure(figsize=(8, 5))

plt.plot([0, 1], [0, 1],'--', color='r')

plt.plot(lr_fpr, lr_tpr, label='XGB(area = %0.2f)' % lr_roc_auc)

plt.xlim([0.0, 1.0])

plt.ylim([0.0, 1.0])

plt.show()

node節點加入master主節點(3))

)

)

之 緩存)

Dropout抑制過擬合與超參數的選擇--九五小龐)

(一))