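0. Preface:

This article draws on: Python Web Scraping in Practice (5): Batch-downloading Baidu images to local disk by keyword (full source code included), CSDN blog.

The original author's article explains how to obtain the API, so I won't repeat that here.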

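1. Goal:

Scrape images from Baidu and download them in batches through proxy IPs.

2. Result:

Images matching a given keyword are downloaded in bulk and saved to a specified folder:

3. Implementation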

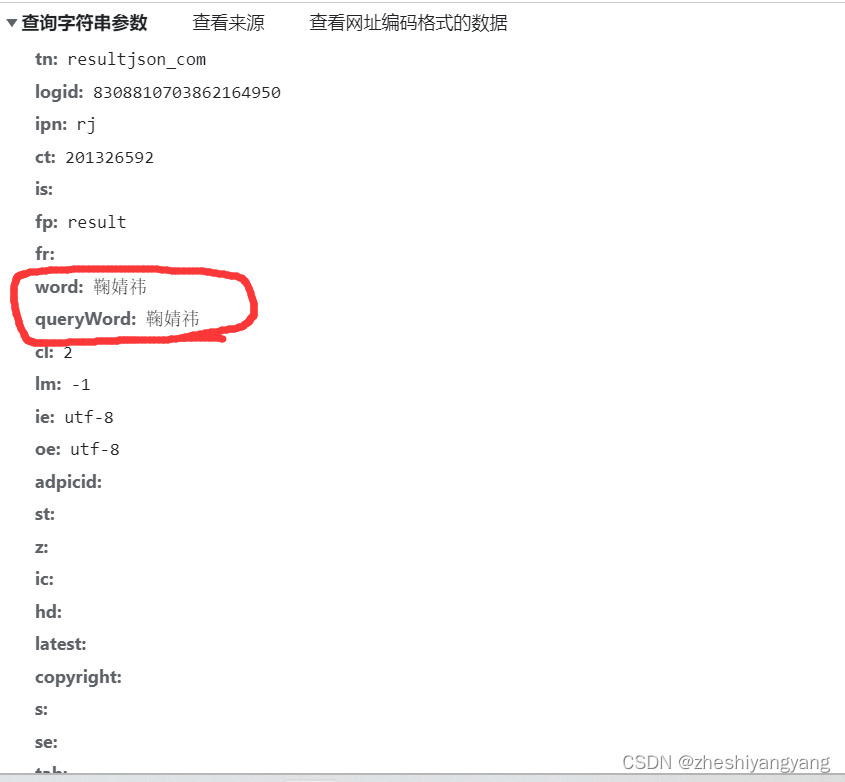

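3.1 Analyzing the page

Right-click the page and choose Inspect to open Chrome DevTools.

In the Network panel, find the request we need by looking through each request's payload (its query parameters).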
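Once the request is found, its full URL can be copied (right-click the request, then Copy > Copy URL) and the query string turned into a params dict mechanically instead of retyping every field. A minimal sketch using only the standard library; query_to_params is a hypothetical helper and sample_url is a shortened, made-up URL, not a real captured request.

```python
from urllib.parse import urlsplit, parse_qsl


def query_to_params(request_url):
    """Turn a URL copied from DevTools into a params dict for requests.get()."""
    query = urlsplit(request_url).query
    # keep_blank_values=True keeps empty fields such as "is=" in the dict
    return dict(parse_qsl(query, keep_blank_values=True))


# Made-up, shortened example URL
sample_url = ("https://image.baidu.com/search/acjson"
              "?tn=resultjson_com&ipn=rj&is=&word=cat&pn=30&rn=30")
params = query_to_params(sample_url)
print(params)
```

If a parameter appears more than once in the query string, dict() keeps only its last value, which is fine for a payload like this one.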

Next, all we need to do is build our payload:

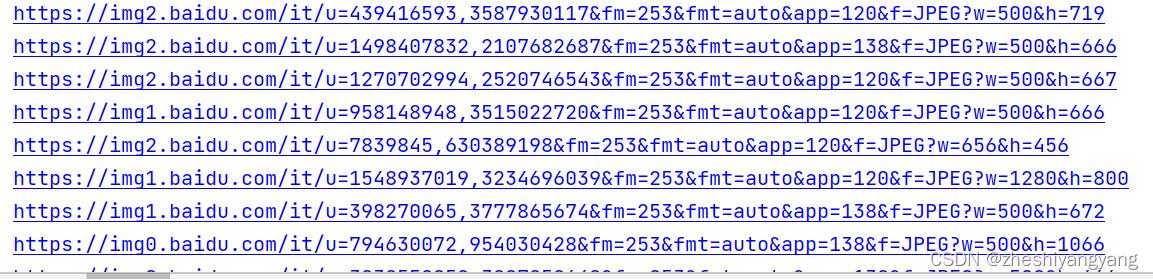
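When more than one page of results is needed, the same payload can be reused with only the paging fields changed. This sketch assumes pn is the offset of the first result and rn is the number of results per page; that reading is not confirmed in this article, and page_params and the page size of 30 are hypothetical choices.

```python
from urllib.parse import urlencode


def page_params(base_params, page, per_page=30):
    """Return a copy of base_params for result page `page` (0-based).

    Assumption: "pn" is the offset of the first result, "rn" the page size.
    """
    params = dict(base_params)  # copy so the template dict is not mutated
    params["pn"] = str(page * per_page)
    params["rn"] = str(per_page)
    return params


base = {"tn": "resultjson_com", "ipn": "rj", "word": "cat", "queryWord": "cat"}
for page in range(3):
    # Print the URL each page request would hit
    print("https://image.baidu.com/search/acjson?" + urlencode(page_params(base, page)))
```

Copying the template on every call keeps each page's parameters independent, so the loop can later be moved into worker threads without shared state.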

3.2 Getting the image URLs

```python
import requests


def get_img_url(keyword):
    # Endpoint that returns the image search results as JSON
    url = "https://image.baidu.com/search/acjson"
    # Request headers: pretend to be a desktop browser
    header = {"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/122.0.0.0 Safari/537.36"}
    # Query parameters (payload) copied from DevTools
    params = {"tn": "resultjson_com", "logid": "7831763171415538646", "ipn": "rj", "ct": "201326592",
              "is": "", "fp": "result", "fr": "", "word": keyword, "queryWord": keyword, "cl": "2",
              "lm": "-1", "ie": "utf-8", "oe": "utf-8", "adpicid": "", "st": "", "z": "", "ic": "",
              "hd": "", "latest": "", "copyright": "", "s": "", "se": "", "tab": "", "width": "",
              "height": "", "face": "", "istype": "", "qc": "", "nc": "1", "expermode": "", "nojc": "",
              "isAsync": "", "pn": "1", "rn": "100", "gsm": "78", "1709030173834": ""}
    # Send the GET request
    r = requests.get(url=url, params=params, headers=header, timeout=10)
    # Decode the response body as UTF-8
    r.encoding = "utf-8"
    json_dict = r.json()
    # The results live under the "data" key
    data_list = json_dict["data"]
    # Collect the thumbnail links, skipping empty entries
    url_list = []
    for i in data_list:
        if i and "thumbURL" in i:
            url_list.append(i["thumbURL"])
    return url_list
```
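Separating the parsing from the HTTP request makes the extraction step testable offline. A minimal sketch: extract_thumb_urls is a hypothetical helper, the sample dict is made up and shaped only after the data/thumbURL fields read above, and the deduplication is an addition that the original code does not do.

```python
def extract_thumb_urls(json_dict):
    """Collect thumbnail URLs from a parsed acjson response.

    Skips empty entries and entries without "thumbURL", and drops
    duplicate URLs while keeping the original order.
    """
    seen = set()
    urls = []
    for item in json_dict.get("data", []):
        url = item.get("thumbURL") if item else None
        if url and url not in seen:
            seen.add(url)
            urls.append(url)
    return urls


# Made-up sample shaped like the response handled above
sample = {"data": [
    {"thumbURL": "https://example.com/a.jpg"},
    {"thumbURL": "https://example.com/b.jpg"},
    {"thumbURL": "https://example.com/a.jpg"},
    {},
]}
print(extract_thumb_urls(sample))
```

Inside get_img_url, the loop could then be replaced by return extract_thumb_urls(r.json()).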

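3.3 Implementing the proxy

```python
import time

import requests


def get_ip():
    # Proxy API: replace with your provider's extraction URL
    url = "your proxy API URL"
    while True:
        try:
            r = requests.get(url, timeout=10)
        except requests.RequestException:
            # Network error: wait a moment, then try again
            time.sleep(1)
            continue
        ip = r.text.strip()
        # Message the proxy API returns when called too often ("requests too frequent")
        if "請求過于頻繁" in ip:
            print("Proxy API rate limit hit, retrying")
            time.sleep(1)
            continue
        break
    # Assumes the API returns a single "ip:port" line; route http and https through it
    proxies = {"http": f"http://{ip}", "https": f"http://{ip}"}
    return proxies
```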
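The loop above trusts whatever text the API returns and never gives up. A minimal sketch of a stricter variant, assuming the provider returns one IPv4 "ip:port" pair per call; parse_proxy and get_proxies are hypothetical names, and the attempt limit and delay are arbitrary. The HTTP call is injected as a function so the logic can be checked without a network.

```python
import ipaddress
import time


def parse_proxy(text):
    """Validate an "ip:port" string and return a proxies dict for requests.

    Returns None for anything else, e.g. an error message from the API.
    """
    host, sep, port = text.strip().partition(":")
    if not sep or not port.isdigit() or not 0 < int(port) < 65536:
        return None
    try:
        ipaddress.IPv4Address(host)
    except ValueError:
        return None
    proxy = f"http://{host}:{port}"
    return {"http": proxy, "https": proxy}


def get_proxies(fetch_text, max_attempts=5, delay=1.0):
    """Call fetch_text() until it yields a valid proxy, at most max_attempts times."""
    for _ in range(max_attempts):
        try:
            proxies = parse_proxy(fetch_text())
        except OSError:  # requests' exceptions subclass OSError
            proxies = None
        if proxies:
            return proxies
        time.sleep(delay)
    raise RuntimeError(f"no usable proxy after {max_attempts} attempts")
```

Usage with requests would look like get_proxies(lambda: requests.get(PROXY_API_URL, timeout=10).text), where PROXY_API_URL is a placeholder for your provider's URL. Rate-limit messages need no special case here: they fail validation and are retried.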


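3.4 Implementing the downloader

```python
import os

import requests


def get_down_img(img_url_list, folder="鞠婧祎", max_retries=3):
    # Create the output folder if it does not exist yet
    os.makedirs(folder, exist_ok=True)
    header = {"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/122.0.0.0 Safari/537.36"}
    # Image number, used as the file name
    n = 0
    for img_url in img_url_list:
        for attempt in range(max_retries):
            # Get a fresh proxy IP for every attempt
            proxies = get_ip()
            try:
                img_data = requests.get(url=img_url, headers=header, proxies=proxies, timeout=2)
                img_data.raise_for_status()
            except requests.RequestException as e:
                print(e)
                continue
            # Build the file path and write the image bytes
            img_path = os.path.join(folder, f"{n}.jpg")
            with open(img_path, "wb") as f:
                f.write(img_data.content)
            n += 1
            break
        else:
            # Every attempt failed: skip this URL instead of retrying forever
            print(f"Giving up on {img_url}")
```

4. Optimization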

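The code above achieves the basic goal of batch-scraping images. In practice, however, poor-quality proxy IPs and network problems can make it slow. Here are a few areas for improvement:

1. Set a timeout (in seconds).

2. Use the requests.Session class: create a Session object and configure how many times a failed connection is retried.

3. Use multiple threads to download in batches.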
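Items 1 and 2 can be sketched with requests and urllib3's Retry class. This is a minimal sketch under assumptions, not the author's forthcoming implementation: make_session and fetch_image are hypothetical names, and the retry count, backoff factor, and status codes are arbitrary choices.

```python
import requests
from requests.adapters import HTTPAdapter
from urllib3.util.retry import Retry


def make_session(total_retries=3, backoff=0.5):
    """Build a Session that retries failed connections and 429/5xx responses."""
    retry = Retry(
        total=total_retries,
        backoff_factor=backoff,  # wait time grows exponentially between retries
        status_forcelist=(429, 500, 502, 503, 504),
    )
    adapter = HTTPAdapter(max_retries=retry)
    session = requests.Session()
    # Apply the retrying adapter to both schemes
    session.mount("http://", adapter)
    session.mount("https://", adapter)
    session.headers["User-Agent"] = (
        "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
        "(KHTML, like Gecko) Chrome/122.0.0.0 Safari/537.36"
    )
    return session


def fetch_image(session, url, proxies=None, timeout=(3, 10)):
    """Download one image; timeout is (connect, read) seconds, since Session has no default."""
    resp = session.get(url, proxies=proxies, timeout=timeout)
    resp.raise_for_status()
    return resp.content
```

A Session also reuses TCP connections to the same host, which helps when many thumbnails come from the same image servers.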

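I'll cover the concrete implementations in later updates. Consider this a hole I've dug for myself, and feel free to remind me...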

5. Full code

```python
import os
import time

import requests


def get_img_url(keyword):
    # Endpoint that returns the image search results as JSON
    url = "https://image.baidu.com/search/acjson"
    # Request headers: pretend to be a desktop browser
    header = {"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/122.0.0.0 Safari/537.36"}
    # Query parameters (payload) copied from DevTools
    params = {"tn": "resultjson_com", "logid": "7831763171415538646", "ipn": "rj", "ct": "201326592",
              "is": "", "fp": "result", "fr": "", "word": keyword, "queryWord": keyword, "cl": "2",
              "lm": "-1", "ie": "utf-8", "oe": "utf-8", "adpicid": "", "st": "", "z": "", "ic": "",
              "hd": "", "latest": "", "copyright": "", "s": "", "se": "", "tab": "", "width": "",
              "height": "", "face": "", "istype": "", "qc": "", "nc": "1", "expermode": "", "nojc": "",
              "isAsync": "", "pn": "1", "rn": "100", "gsm": "78", "1709030173834": ""}
    r = requests.get(url=url, params=params, headers=header, timeout=10)
    r.encoding = "utf-8"
    data_list = r.json()["data"]
    # Collect the thumbnail links, skipping empty entries
    url_list = []
    for i in data_list:
        if i and "thumbURL" in i:
            u = i["thumbURL"]
            url_list.append(u)
            print(u)
    return url_list


def get_ip():
    # Proxy API: replace with your provider's extraction URL
    url = "your proxy API URL"
    while True:
        try:
            r = requests.get(url, timeout=10)
        except requests.RequestException:
            time.sleep(1)
            continue
        ip = r.text.strip()
        # Message the proxy API returns when called too often ("requests too frequent")
        if "請求過于頻繁" in ip:
            print("Proxy API rate limit hit, retrying")
            time.sleep(1)
            continue
        break
    # Assumes the API returns a single "ip:port" line
    return {"http": f"http://{ip}", "https": f"http://{ip}"}


def get_down_img(img_url_list, folder="鞠婧祎", max_retries=3):
    os.makedirs(folder, exist_ok=True)
    header = {"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/122.0.0.0 Safari/537.36"}
    n = 0  # image number, used as the file name
    for img_url in img_url_list:
        for attempt in range(max_retries):
            proxies = get_ip()  # fresh proxy IP for every attempt
            try:
                img_data = requests.get(url=img_url, headers=header, proxies=proxies, timeout=2)
                img_data.raise_for_status()
            except requests.RequestException as e:
                print(e)
                continue
            with open(os.path.join(folder, f"{n}.jpg"), "wb") as f:
                f.write(img_data.content)
            n += 1
            break
        else:
            # Every attempt failed: skip this URL instead of retrying forever
            print(f"Giving up on {img_url}")


if __name__ == "__main__":
    url_list = get_img_url("鞠婧祎")
    get_down_img(url_list)
```

6. Prerequisite articles

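Some readers may not be familiar with web scraping basics, so here are a few of my earlier articles to help:

A Brief Discussion of Cookies (CSDN blog)

An Introduction to JSON and How to Use It in Python (CSDN blog)

Python Web Scraping in Practice, Example 1 [Part 1] (CSDN blog)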
