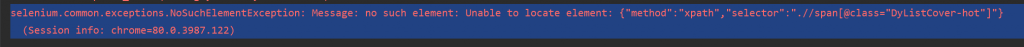

Without a delay, the script fails with selenium.common.exceptions.NoSuchElementException: Message: no such element: Unable to locate element: {"method":"xpath","selector":".//span[@class="DyListCover-hot"]"}

(Session info: chrome=80.0.3987.122)

At first I suspected a version problem, but that seemed unlikely, so I checked the versions:


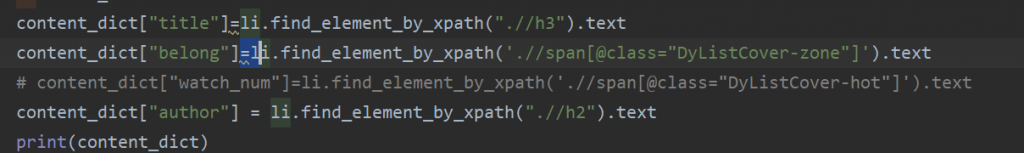

Then I commented out this part:

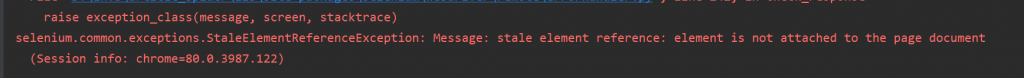

After that, it reported Message: stale element reference: element is not attached to the page document

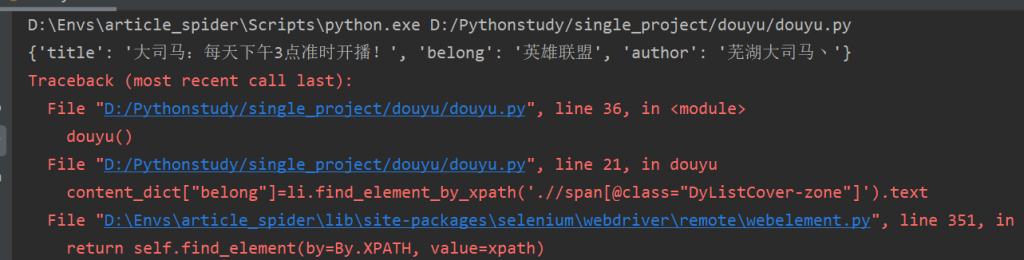

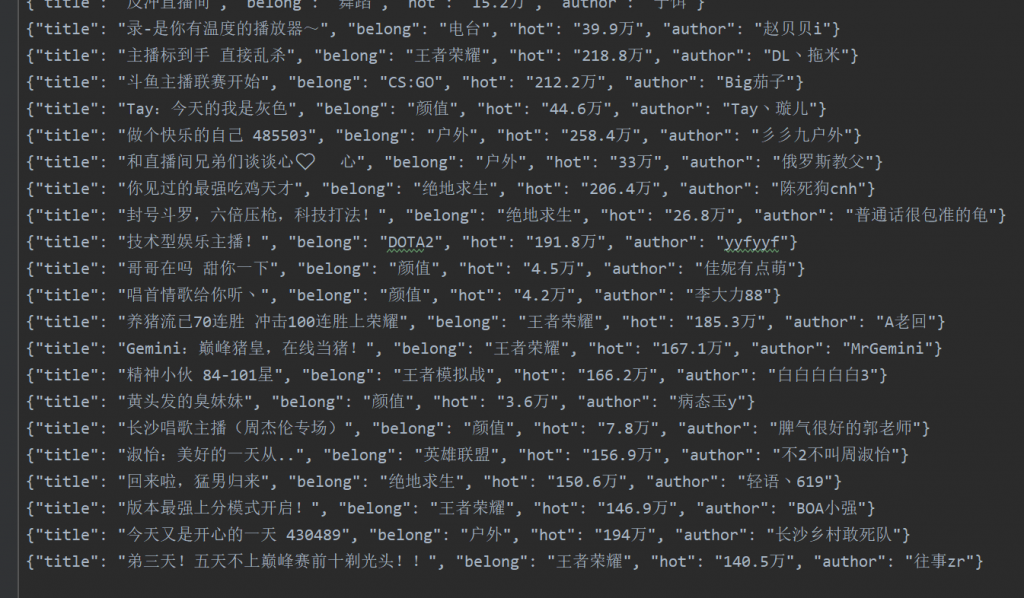

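Even so, it did manage to scrape one record.

That suggests the elements exist but are not ready when the script looks for them, so the problem is probably timing. I added a delay at the start of the function and it worked.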
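
A fixed delay works but is a guess: too short and the error comes back, too long and every page wastes time. Selenium ships `WebDriverWait` for this kind of explicit wait. The idea behind it can be sketched with the standard library alone, as below. `wait_until` and `fake_find_items` are hypothetical names made up for illustration, and the fake lookup only stands in for a page whose list items show up late.

```python
import time


def wait_until(condition, timeout=10.0, poll_interval=0.5):
    """Call `condition` repeatedly until it returns a truthy value.

    Returns that value, or raises TimeoutError once `timeout` seconds pass.
    """
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result:
            return result
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout} seconds")
        time.sleep(poll_interval)


# Stand-in for a page whose list items only appear on the third lookup.
attempts = {"count": 0}


def fake_find_items():
    attempts["count"] += 1
    return ["li-1", "li-2"] if attempts["count"] >= 3 else []


items = wait_until(fake_find_items, timeout=2.0, poll_interval=0.01)
print(items)
```

In the scraper, the condition would presumably be a lambda that runs the `//*[@id="listAll"]/section[2]/div[2]/ul/li` lookup, which returns an empty list until the items have rendered.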

Here is the source code:

    import json
    import time

    from selenium import webdriver
    from selenium.webdriver.common.by import By

    driver = webdriver.Chrome()
    driver.get("https://www.douyu.com/directory/all")


    def douyu():
        # A delay is needed here; without it the elements cannot be located.
        time.sleep(5)
        li_list = driver.find_elements(By.XPATH, '//*[@id="listAll"]/section[2]/div[2]/ul/li')
        for li in li_list:
            content_dict = {}
            content_dict["title"] = li.find_element(By.XPATH, ".//h3").text
            content_dict["belong"] = li.find_element(By.XPATH, './/span[@class="DyListCover-zone"]').text
            content_dict["hot"] = li.find_element(By.XPATH, './/span[@class="DyListCover-hot"]').text
            content_dict["author"] = li.find_element(By.XPATH, ".//h2").text
            print(content_dict)
            # Convert the dict to a string so it is easy to store.
            # json.dumps escapes non-ASCII characters by default, hence ensure_ascii=False.
            s = json.dumps(content_dict, ensure_ascii=False)
            with open("douyu.txt", "a", encoding="utf-8") as f:
                f.write(s + "\n")
        next_url = driver.find_elements(By.XPATH, '//li[@title="下一頁"]/span[@class="dy-Pagination-item-custom"]')
        # Conditional expression: first match, or None when there is no next page.
        next_url = next_url[0] if len(next_url) > 0 else None
        # `if`, not `while`: the recursive call below already handles the remaining pages.
        if next_url is not None:
            next_url.click()
            time.sleep(3)
            # The function calls itself: after clicking "next page", the delay
            # at the top of douyu() pauses 5 s before scraping continues.
            douyu()


    douyu()
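
Because the function calls itself once per page, the call stack grows by one frame for every page, and Python's default recursion limit is 1000. A plain loop avoids that. The sketch below shows the control flow only: `scrape_all_pages`, `scrape_page`, and `go_to_next_page` are hypothetical names, and the three-page list is a stand-in for the real site, not data from it.

```python
def scrape_all_pages(scrape_page, go_to_next_page, max_pages=1000):
    """Scrape page after page in a loop rather than by recursion.

    scrape_page() returns the records on the current page;
    go_to_next_page() returns False when there is no next page.
    Both are hypothetical callables supplied by the caller.
    """
    records = []
    for _ in range(max_pages):
        records.extend(scrape_page())
        if not go_to_next_page():
            break
    return records


# Stand-in "site" with three pages, used only to exercise the loop.
pages = [["a", "b"], ["c"], ["d", "e"]]
state = {"index": 0}


def scrape_page():
    return list(pages[state["index"]])


def go_to_next_page():
    if state["index"] + 1 < len(pages):
        state["index"] += 1
        return True
    return False


all_records = scrape_all_pages(scrape_page, go_to_next_page)
print(all_records)
```

In the scraper, `scrape_page` would correspond to the `for li in li_list` part and `go_to_next_page` to locating and clicking the next-page element.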
