Reference

- Lei Xiaohua (雷霄驊), "A Simple Analysis of FFmpeg Source Code: avformat_find_stream_info()", CSDN blog

avformat_find_stream_info()

- This function reads part of the audio/video data and derives information about the streams from it.

- avformat_find_stream_info() is declared in libavformat/avformat.h as follows:

```c
/**
 * Read packets of a media file to get stream information. This
 * is useful for file formats with no headers such as MPEG. This
 * function also computes the real framerate in case of MPEG-2 repeat
 * frame mode.
 * The logical file position is not changed by this function;
 * examined packets may be buffered for later processing.
 *
 * @param ic media file handle
 * @param options  If non-NULL, an ic.nb_streams long array of pointers to
 *                 dictionaries, where i-th member contains options for
 *                 codec corresponding to i-th stream.
 *                 On return each dictionary will be filled with options that were not found.
 * @return >=0 if OK, AVERROR_xxx on error
 *
 * @note this function isn't guaranteed to open all the codecs, so
 *       options being non-empty at return is a perfectly normal behavior.
 *
 * @todo Let the user decide somehow what information is needed so that
 *       we do not waste time getting stuff the user does not need.
 */
int avformat_find_stream_info(AVFormatContext *ic, AVDictionary **options);
```

- A brief explanation of its parameters:

- ic: the input AVFormatContext.

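- options: extra per-stream options. According to the doc comment above, when non-NULL it is an array of ic->nb_streams dictionaries holding codec options for the corresponding streams, and on return each dictionary keeps the options that were not used. I have not studied it in depth yet.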

- On success the function returns a value >= 0; on error it returns a negative AVERROR code.

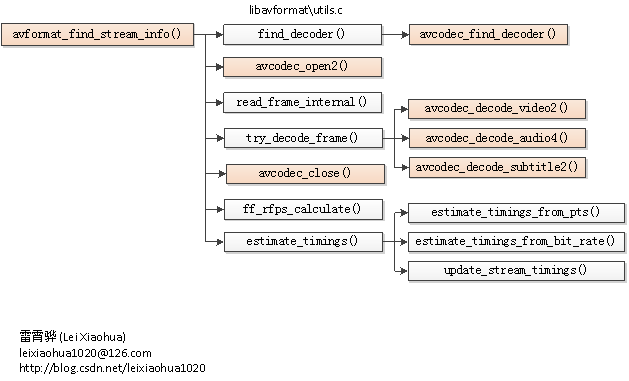

Function call graph


Code

```c
int avformat_find_stream_info(AVFormatContext *ic, AVDictionary **options)
{
    FFFormatContext *const si = ffformatcontext(ic);
    int count = 0, ret = 0;
    int64_t read_size;
    AVPacket *pkt1 = si->pkt;
    int64_t old_offset = avio_tell(ic->pb);
    // new streams might appear, no options for those
    int orig_nb_streams = ic->nb_streams;
    int flush_codecs;
    int64_t max_analyze_duration = ic->max_analyze_duration;
    int64_t max_stream_analyze_duration;
    int64_t max_subtitle_analyze_duration;
    int64_t probesize = ic->probesize;
    int eof_reached = 0;
    int *missing_streams = av_opt_ptr(ic->iformat->priv_class, ic->priv_data, "missing_streams");

    flush_codecs = probesize > 0;

    av_opt_set_int(ic, "skip_clear", 1, AV_OPT_SEARCH_CHILDREN);

    max_stream_analyze_duration = max_analyze_duration;
    max_subtitle_analyze_duration = max_analyze_duration;
    if (!max_analyze_duration) {
        max_stream_analyze_duration =
        max_analyze_duration        = 5*AV_TIME_BASE;
        max_subtitle_analyze_duration = 30*AV_TIME_BASE;
        if (!strcmp(ic->iformat->name, "flv"))
            max_stream_analyze_duration = 90*AV_TIME_BASE;
        if (!strcmp(ic->iformat->name, "mpeg") || !strcmp(ic->iformat->name, "mpegts"))
            max_stream_analyze_duration = 7*AV_TIME_BASE;
    }

    if (ic->pb) {
        FFIOContext *const ctx = ffiocontext(ic->pb);
        av_log(ic, AV_LOG_DEBUG, "Before avformat_find_stream_info() pos: %"PRId64" bytes read:%"PRId64" seeks:%d nb_streams:%d\n",
               avio_tell(ic->pb), ctx->bytes_read, ctx->seek_count, ic->nb_streams);
    }

    for (unsigned i = 0; i < ic->nb_streams; i++) {
        const AVCodec *codec;
        AVDictionary *thread_opt = NULL;
        AVStream *const st  = ic->streams[i];
        FFStream *const sti = ffstream(st);
        AVCodecContext *const avctx = sti->avctx;

        if (st->codecpar->codec_type == AVMEDIA_TYPE_VIDEO ||
            st->codecpar->codec_type == AVMEDIA_TYPE_SUBTITLE) {
/*            if (!st->time_base.num)
                st->time_base = */
            if (!avctx->time_base.num)
                avctx->time_base = st->time_base;
        }

        /* check if the caller has overridden the codec id */
        // only for the split stuff
        if (!sti->parser && !(ic->flags & AVFMT_FLAG_NOPARSE) && sti->request_probe <= 0) {
            sti->parser = av_parser_init(st->codecpar->codec_id);
            if (sti->parser) {
                if (sti->need_parsing == AVSTREAM_PARSE_HEADERS) {
                    sti->parser->flags |= PARSER_FLAG_COMPLETE_FRAMES;
                } else if (sti->need_parsing == AVSTREAM_PARSE_FULL_RAW) {
                    sti->parser->flags |= PARSER_FLAG_USE_CODEC_TS;
                }
            } else if (sti->need_parsing) {
                av_log(ic, AV_LOG_VERBOSE, "parser not found for codec "
                       "%s, packets or times may be invalid.\n",
                       avcodec_get_name(st->codecpar->codec_id));
            }
        }

        ret = avcodec_parameters_to_context(avctx, st->codecpar);
        if (ret < 0)
            goto find_stream_info_err;
        if (sti->request_probe <= 0)
            sti->avctx_inited = 1;

        codec = find_probe_decoder(ic, st, st->codecpar->codec_id);

        /* Force thread count to 1 since the H.264 decoder will not extract
         * SPS and PPS to extradata during multi-threaded decoding. */
        av_dict_set(options ? &options[i] : &thread_opt, "threads", "1", 0);
        /* Force lowres to 0. The decoder might reduce the video size by the
         * lowres factor, and we don't want that propagated to the stream's
         * codecpar */
        av_dict_set(options ? &options[i] : &thread_opt, "lowres", "0", 0);

        if (ic->codec_whitelist)
            av_dict_set(options ? &options[i] : &thread_opt, "codec_whitelist", ic->codec_whitelist, 0);

        // Try to just open decoders, in case this is enough to get parameters.
        // Also ensure that subtitle_header is properly set.
        if (!has_codec_parameters(st, NULL) && sti->request_probe <= 0 ||
            st->codecpar->codec_type == AVMEDIA_TYPE_SUBTITLE) {
            if (codec && !avctx->codec)
                if (avcodec_open2(avctx, codec, options ? &options[i] : &thread_opt) < 0)
                    av_log(ic, AV_LOG_WARNING,
                           "Failed to open codec in %s\n", __FUNCTION__);
        }
        if (!options)
            av_dict_free(&thread_opt);
    }

    read_size = 0;
    for (;;) {
        const AVPacket *pkt;
        AVStream *st;
        FFStream *sti;
        AVCodecContext *avctx;
        int analyzed_all_streams;
        unsigned i;
        if (ff_check_interrupt(&ic->interrupt_callback)) {
            ret = AVERROR_EXIT;
            av_log(ic, AV_LOG_DEBUG, "interrupted\n");
            break;
        }

        /* check if one codec still needs to be handled */
        for (i = 0; i < ic->nb_streams; i++) {
            AVStream *const st  = ic->streams[i];
            FFStream *const sti = ffstream(st);
            int fps_analyze_framecount = 20;
            int count;

            if (!has_codec_parameters(st, NULL))
                break;
            /* If the timebase is coarse (like the usual millisecond precision
             * of mkv), we need to analyze more frames to reliably arrive at
             * the correct fps. */
            if (av_q2d(st->time_base) > 0.0005)
                fps_analyze_framecount *= 2;
            if (!tb_unreliable(sti->avctx))
                fps_analyze_framecount = 0;
            if (ic->fps_probe_size >= 0)
                fps_analyze_framecount = ic->fps_probe_size;
            if (st->disposition & AV_DISPOSITION_ATTACHED_PIC)
                fps_analyze_framecount = 0;
            /* variable fps and no guess at the real fps */
            count = (ic->iformat->flags & AVFMT_NOTIMESTAMPS) ?
                       sti->info->codec_info_duration_fields/2 :
                       sti->info->duration_count;
            if (!(st->r_frame_rate.num && st->avg_frame_rate.num) &&
                st->codecpar->codec_type == AVMEDIA_TYPE_VIDEO) {
                if (count < fps_analyze_framecount)
                    break;
            }
            // Look at the first 3 frames if there is evidence of frame delay
            // but the decoder delay is not set.
            if (sti->info->frame_delay_evidence && count < 2 && sti->avctx->has_b_frames == 0)
                break;
            if (!sti->avctx->extradata &&
                (!sti->extract_extradata.inited || sti->extract_extradata.bsf) &&
                extract_extradata_check(st))
                break;
            if (sti->first_dts == AV_NOPTS_VALUE &&
                !(ic->iformat->flags & AVFMT_NOTIMESTAMPS) &&
                sti->codec_info_nb_frames < ((st->disposition & AV_DISPOSITION_ATTACHED_PIC) ? 1 : ic->max_ts_probe) &&
                (st->codecpar->codec_type == AVMEDIA_TYPE_VIDEO ||
                 st->codecpar->codec_type == AVMEDIA_TYPE_AUDIO))
                break;
        }
        analyzed_all_streams = 0;
        if (!missing_streams || !*missing_streams)
            if (i == ic->nb_streams) {
                analyzed_all_streams = 1;
                /* NOTE: If the format has no header, then we need to read some
                 * packets to get most of the streams, so we cannot stop here. */
                if (!(ic->ctx_flags & AVFMTCTX_NOHEADER)) {
                    /* If we found the info for all the codecs, we can stop. */
                    ret = count;
                    av_log(ic, AV_LOG_DEBUG, "All info found\n");
                    flush_codecs = 0;
                    break;
                }
            }
        /* We did not get all the codec info, but we read too much data. */
        if (read_size >= probesize) {
            ret = count;
            av_log(ic, AV_LOG_DEBUG,
                   "Probe buffer size limit of %"PRId64" bytes reached\n", probesize);
            for (unsigned i = 0; i < ic->nb_streams; i++) {
                AVStream *const st  = ic->streams[i];
                FFStream *const sti = ffstream(st);
                if (!st->r_frame_rate.num &&
                    sti->info->duration_count <= 1 &&
                    st->codecpar->codec_type == AVMEDIA_TYPE_VIDEO &&
                    strcmp(ic->iformat->name, "image2"))
                    av_log(ic, AV_LOG_WARNING,
                           "Stream #%d: not enough frames to estimate rate; "
                           "consider increasing probesize\n", i);
            }
            break;
        }

        /* NOTE: A new stream can be added there if no header in file
         * (AVFMTCTX_NOHEADER). */
        ret = read_frame_internal(ic, pkt1);
        if (ret == AVERROR(EAGAIN))
            continue;

        if (ret < 0) {
            /* EOF or error*/
            eof_reached = 1;
            break;
        }

        if (!(ic->flags & AVFMT_FLAG_NOBUFFER)) {
            ret = avpriv_packet_list_put(&si->packet_buffer,
                                         pkt1, NULL, 0);
            if (ret < 0)
                goto unref_then_goto_end;

            pkt = &si->packet_buffer.tail->pkt;
        } else {
            pkt = pkt1;
        }

        st  = ic->streams[pkt->stream_index];
        sti = ffstream(st);
        if (!(st->disposition & AV_DISPOSITION_ATTACHED_PIC))
            read_size += pkt->size;

        avctx = sti->avctx;
        if (!sti->avctx_inited) {
            ret = avcodec_parameters_to_context(avctx, st->codecpar);
            if (ret < 0)
                goto unref_then_goto_end;
            sti->avctx_inited = 1;
        }

        if (pkt->dts != AV_NOPTS_VALUE && sti->codec_info_nb_frames > 1) {
            /* check for non-increasing dts */
            if (sti->info->fps_last_dts != AV_NOPTS_VALUE &&
                sti->info->fps_last_dts >= pkt->dts) {
                av_log(ic, AV_LOG_DEBUG,
                       "Non-increasing DTS in stream %d: packet %d with DTS "
                       "%"PRId64", packet %d with DTS %"PRId64"\n",
                       st->index, sti->info->fps_last_dts_idx,
                       sti->info->fps_last_dts, sti->codec_info_nb_frames,
                       pkt->dts);
                sti->info->fps_first_dts =
                sti->info->fps_last_dts  = AV_NOPTS_VALUE;
            }
            /* Check for a discontinuity in dts. If the difference in dts
             * is more than 1000 times the average packet duration in the
             * sequence, we treat it as a discontinuity. */
            if (sti->info->fps_last_dts != AV_NOPTS_VALUE &&
                sti->info->fps_last_dts_idx > sti->info->fps_first_dts_idx &&
                (pkt->dts - (uint64_t)sti->info->fps_last_dts) / 1000 >
                (sti->info->fps_last_dts     - (uint64_t)sti->info->fps_first_dts) /
                (sti->info->fps_last_dts_idx - sti->info->fps_first_dts_idx)) {
                av_log(ic, AV_LOG_WARNING,
                       "DTS discontinuity in stream %d: packet %d with DTS "
                       "%"PRId64", packet %d with DTS %"PRId64"\n",
                       st->index, sti->info->fps_last_dts_idx,
                       sti->info->fps_last_dts, sti->codec_info_nb_frames,
                       pkt->dts);
                sti->info->fps_first_dts =
                sti->info->fps_last_dts  = AV_NOPTS_VALUE;
            }

            /* update stored dts values */
            if (sti->info->fps_first_dts == AV_NOPTS_VALUE) {
                sti->info->fps_first_dts     = pkt->dts;
                sti->info->fps_first_dts_idx = sti->codec_info_nb_frames;
            }
            sti->info->fps_last_dts     = pkt->dts;
            sti->info->fps_last_dts_idx = sti->codec_info_nb_frames;
        }
        if (sti->codec_info_nb_frames > 1) {
            int64_t t = 0;
            int64_t limit;

            if (st->time_base.den > 0)
                t = av_rescale_q(sti->info->codec_info_duration, st->time_base, AV_TIME_BASE_Q);
            if (st->avg_frame_rate.num > 0)
                t = FFMAX(t, av_rescale_q(sti->codec_info_nb_frames, av_inv_q(st->avg_frame_rate), AV_TIME_BASE_Q));

            if (   t == 0
                && sti->codec_info_nb_frames > 30
                && sti->info->fps_first_dts != AV_NOPTS_VALUE
                && sti->info->fps_last_dts  != AV_NOPTS_VALUE) {
                int64_t dur = av_sat_sub64(sti->info->fps_last_dts, sti->info->fps_first_dts);
                t = FFMAX(t, av_rescale_q(dur, st->time_base, AV_TIME_BASE_Q));
            }

            if (analyzed_all_streams)                                limit = max_analyze_duration;
            else if (avctx->codec_type == AVMEDIA_TYPE_SUBTITLE) limit = max_subtitle_analyze_duration;
            else                                                     limit = max_stream_analyze_duration;

            if (t >= limit) {
                av_log(ic, AV_LOG_VERBOSE, "max_analyze_duration %"PRId64" reached at %"PRId64" microseconds st:%d\n",
                       limit,
                       t, pkt->stream_index);
                if (ic->flags & AVFMT_FLAG_NOBUFFER)
                    av_packet_unref(pkt1);
                break;
            }
            if (pkt->duration > 0) {
                if (avctx->codec_type == AVMEDIA_TYPE_SUBTITLE && pkt->pts != AV_NOPTS_VALUE && st->start_time != AV_NOPTS_VALUE && pkt->pts >= st->start_time
                    && (uint64_t)pkt->pts - st->start_time < INT64_MAX
                ) {
                    sti->info->codec_info_duration = FFMIN(pkt->pts - st->start_time, sti->info->codec_info_duration + pkt->duration);
                } else
                    sti->info->codec_info_duration += pkt->duration;
                sti->info->codec_info_duration_fields += sti->parser && sti->need_parsing && avctx->ticks_per_frame == 2
                                                         ? sti->parser->repeat_pict + 1 : 2;
            }
        }
        if (st->codecpar->codec_type == AVMEDIA_TYPE_VIDEO) {
#if FF_API_R_FRAME_RATE
            ff_rfps_add_frame(ic, st, pkt->dts);
#endif
            if (pkt->dts != pkt->pts && pkt->dts != AV_NOPTS_VALUE && pkt->pts != AV_NOPTS_VALUE)
                sti->info->frame_delay_evidence = 1;
        }
        if (!sti->avctx->extradata) {
            ret = extract_extradata(si, st, pkt);
            if (ret < 0)
                goto unref_then_goto_end;
        }

        /* If still no information, we try to open the codec and to
         * decompress the frame. We try to avoid that in most cases as
         * it takes longer and uses more memory. For MPEG-4, we need to
         * decompress for QuickTime.
         *
         * If AV_CODEC_CAP_CHANNEL_CONF is set this will force decoding of at
         * least one frame of codec data, this makes sure the codec initializes
         * the channel configuration and does not only trust the values from
         * the container. */
        try_decode_frame(ic, st, pkt,
                         (options && i < orig_nb_streams) ? &options[i] : NULL);

        if (ic->flags & AVFMT_FLAG_NOBUFFER)
            av_packet_unref(pkt1);

        sti->codec_info_nb_frames++;
        count++;
    }

    if (eof_reached) {
        for (unsigned stream_index = 0; stream_index < ic->nb_streams; stream_index++) {
            AVStream *const st = ic->streams[stream_index];
            AVCodecContext *const avctx = ffstream(st)->avctx;
            if (!has_codec_parameters(st, NULL)) {
                const AVCodec *codec = find_probe_decoder(ic, st, st->codecpar->codec_id);
                if (codec && !avctx->codec) {
                    AVDictionary *opts = NULL;
                    if (ic->codec_whitelist)
                        av_dict_set(&opts, "codec_whitelist", ic->codec_whitelist, 0);
                    if (avcodec_open2(avctx, codec, (options && stream_index < orig_nb_streams) ? &options[stream_index] : &opts) < 0)
                        av_log(ic, AV_LOG_WARNING,
                               "Failed to open codec in %s\n", __FUNCTION__);
                    av_dict_free(&opts);
                }
            }

            // EOF already reached while reading the stream above.
            // So continue with reoordering DTS with whatever delay we have.
            if (si->packet_buffer.head && !has_decode_delay_been_guessed(st)) {
                update_dts_from_pts(ic, stream_index, si->packet_buffer.head);
            }
        }
    }

    if (flush_codecs) {
        AVPacket *empty_pkt = si->pkt;
        int err = 0;
        av_packet_unref(empty_pkt);

        for (unsigned i = 0; i < ic->nb_streams; i++) {
            AVStream *const st  = ic->streams[i];
            FFStream *const sti = ffstream(st);

            /* flush the decoders */
            if (sti->info->found_decoder == 1) {
                err = try_decode_frame(ic, st, empty_pkt,
                                       (options && i < orig_nb_streams)
                                       ? &options[i] : NULL);

                if (err < 0) {
                    av_log(ic, AV_LOG_INFO,
                           "decoding for stream %d failed\n", st->index);
                }
            }
        }
    }

    ff_rfps_calculate(ic);

    for (unsigned i = 0; i < ic->nb_streams; i++) {
        AVStream *const st  = ic->streams[i];
        FFStream *const sti = ffstream(st);
        AVCodecContext *const avctx = sti->avctx;

        if (avctx->codec_type == AVMEDIA_TYPE_VIDEO) {
            if (avctx->codec_id == AV_CODEC_ID_RAWVIDEO && !avctx->codec_tag && !avctx->bits_per_coded_sample) {
                uint32_t tag= avcodec_pix_fmt_to_codec_tag(avctx->pix_fmt);
                if (avpriv_pix_fmt_find(PIX_FMT_LIST_RAW, tag) == avctx->pix_fmt)
                    avctx->codec_tag= tag;
            }

            /* estimate average framerate if not set by demuxer */
            if (sti->info->codec_info_duration_fields &&
                !st->avg_frame_rate.num &&
                sti->info->codec_info_duration) {
                int best_fps      = 0;
                double best_error = 0.01;
                AVRational codec_frame_rate = avctx->framerate;

                if (sti->info->codec_info_duration        >= INT64_MAX / st->time_base.num / 2||
                    sti->info->codec_info_duration_fields >= INT64_MAX / st->time_base.den ||
                    sti->info->codec_info_duration        < 0)
                    continue;
                av_reduce(&st->avg_frame_rate.num, &st->avg_frame_rate.den,
                          sti->info->codec_info_duration_fields * (int64_t) st->time_base.den,
                          sti->info->codec_info_duration * 2 * (int64_t) st->time_base.num, 60000);

                /* Round guessed framerate to a "standard" framerate if it's
                 * within 1% of the original estimate. */
                for (int j = 0; j < MAX_STD_TIMEBASES; j++) {
                    AVRational std_fps = { get_std_framerate(j), 12 * 1001 };
                    double error       = fabs(av_q2d(st->avg_frame_rate) /
                                              av_q2d(std_fps) - 1);

                    if (error < best_error) {
                        best_error = error;
                        best_fps   = std_fps.num;
                    }

                    if (si->prefer_codec_framerate && codec_frame_rate.num > 0 && codec_frame_rate.den > 0) {
                        error       = fabs(av_q2d(codec_frame_rate) /
                                           av_q2d(std_fps) - 1);
                        if (error < best_error) {
                            best_error = error;
                            best_fps   = std_fps.num;
                        }
                    }
                }
                if (best_fps)
                    av_reduce(&st->avg_frame_rate.num, &st->avg_frame_rate.den,
                              best_fps, 12 * 1001, INT_MAX);
            }

            if (!st->r_frame_rate.num) {
                if (    avctx->time_base.den * (int64_t) st->time_base.num
                    <= avctx->time_base.num * (uint64_t)avctx->ticks_per_frame * st->time_base.den) {
                    av_reduce(&st->r_frame_rate.num, &st->r_frame_rate.den,
                              avctx->time_base.den, (int64_t)avctx->time_base.num * avctx->ticks_per_frame, INT_MAX);
                } else {
                    st->r_frame_rate.num = st->time_base.den;
                    st->r_frame_rate.den = st->time_base.num;
                }
            }
            if (sti->display_aspect_ratio.num && sti->display_aspect_ratio.den) {
                AVRational hw_ratio = { avctx->height, avctx->width };
                st->sample_aspect_ratio = av_mul_q(sti->display_aspect_ratio,
                                                   hw_ratio);
            }
        } else if (avctx->codec_type == AVMEDIA_TYPE_AUDIO) {
            if (!avctx->bits_per_coded_sample)
                avctx->bits_per_coded_sample =
                    av_get_bits_per_sample(avctx->codec_id);
            // set stream disposition based on audio service type
            switch (avctx->audio_service_type) {
            case AV_AUDIO_SERVICE_TYPE_EFFECTS:
                st->disposition = AV_DISPOSITION_CLEAN_EFFECTS;
                break;
            case AV_AUDIO_SERVICE_TYPE_VISUALLY_IMPAIRED:
                st->disposition = AV_DISPOSITION_VISUAL_IMPAIRED;
                break;
            case AV_AUDIO_SERVICE_TYPE_HEARING_IMPAIRED:
                st->disposition = AV_DISPOSITION_HEARING_IMPAIRED;
                break;
            case AV_AUDIO_SERVICE_TYPE_COMMENTARY:
                st->disposition = AV_DISPOSITION_COMMENT;
                break;
            case AV_AUDIO_SERVICE_TYPE_KARAOKE:
                st->disposition = AV_DISPOSITION_KARAOKE;
                break;
            }
        }
    }

    if (probesize)
        estimate_timings(ic, old_offset);

    av_opt_set_int(ic, "skip_clear", 0, AV_OPT_SEARCH_CHILDREN);

    if (ret >= 0 && ic->nb_streams)
        /* We could not have all the codec parameters before EOF. */
        ret = -1;
    for (unsigned i = 0; i < ic->nb_streams; i++) {
        AVStream *const st  = ic->streams[i];
        FFStream *const sti = ffstream(st);
        const char *errmsg;

        /* if no packet was ever seen, update context now for has_codec_parameters */
        if (!sti->avctx_inited) {
            if (st->codecpar->codec_type == AVMEDIA_TYPE_AUDIO &&
                st->codecpar->format == AV_SAMPLE_FMT_NONE)
                st->codecpar->format = sti->avctx->sample_fmt;
            ret = avcodec_parameters_to_context(sti->avctx, st->codecpar);
            if (ret < 0)
                goto find_stream_info_err;
        }
        if (!has_codec_parameters(st, &errmsg)) {
            char buf[256];
            avcodec_string(buf, sizeof(buf), sti->avctx, 0);
            av_log(ic, AV_LOG_WARNING,
                   "Could not find codec parameters for stream %d (%s): %s\n"
                   "Consider increasing the value for the 'analyzeduration' (%"PRId64") and 'probesize' (%"PRId64") options\n",
                   i, buf, errmsg, ic->max_analyze_duration, ic->probesize);
        } else {
            ret = 0;
        }
    }

    ret = compute_chapters_end(ic);
    if (ret < 0)
        goto find_stream_info_err;

    /* update the stream parameters from the internal codec contexts */
    for (unsigned i = 0; i < ic->nb_streams; i++) {
        AVStream *const st  = ic->streams[i];
        FFStream *const sti = ffstream(st);

        if (sti->avctx_inited) {
            ret = avcodec_parameters_from_context(st->codecpar, sti->avctx);
            if (ret < 0)
                goto find_stream_info_err;
            ret = add_coded_side_data(st, sti->avctx);
            if (ret < 0)
                goto find_stream_info_err;
        }

        sti->avctx_inited = 0;
    }

find_stream_info_err:
    for (unsigned i = 0; i < ic->nb_streams; i++) {
        AVStream *const st  = ic->streams[i];
        FFStream *const sti = ffstream(st);
        if (sti->info) {
            av_freep(&sti->info->duration_error);
            av_freep(&sti->info);
        }
        avcodec_close(sti->avctx);
        // FIXME: avcodec_close() frees AVOption settable fields which includes ch_layout,
        //        so we need to restore it.
        av_channel_layout_copy(&sti->avctx->ch_layout, &st->codecpar->ch_layout);
        av_bsf_free(&sti->extract_extradata.bsf);
    }
    if (ic->pb) {
        FFIOContext *const ctx = ffiocontext(ic->pb);
        av_log(ic, AV_LOG_DEBUG, "After avformat_find_stream_info() pos: %"PRId64" bytes read:%"PRId64" seeks:%d frames:%d\n",
               avio_tell(ic->pb), ctx->bytes_read, ctx->seek_count, count);
    }
    return ret;

unref_then_goto_end:
    av_packet_unref(pkt1);
    goto find_stream_info_err;
}
```

- Because avformat_find_stream_info() is long and hard to analyze in full, only its key points are recorded here.

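- Its main job is to fill in the AVStream structure of each media stream (audio/video).

- Skimming the code shows that it already performs decoder lookup, decoder opening, reading of audio/video frames and decoding of audio/video frames.

- In other words, the function effectively runs through the whole decoding pipeline once.

- Apart from assigning member variables, its key steps are:

- 1. Find a decoder: find_decoder(). That function no longer exists in newer FFmpeg versions; the code above calls find_probe_decoder() instead.

- 2. Open the decoder: avcodec_open2()

- 3. Read one complete frame of compressed data: read_frame_internal(). Note: av_read_frame() actually calls read_frame_internal() internally.

- 4. Decode some of the compressed data: try_decode_frame()

- Below is a brief look at the code of several key functions used in these steps.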
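The main read loop above stops as soon as one of several conditions holds: all stream parameters are known (for formats that have a header), read_size reaches probesize, read_frame_internal() returns EOF or an error, or a stream's analyzed duration t reaches its limit. How that limit is chosen at the top of the function can be restated on its own. The following is a standalone model, not FFmpeg code: SKETCH_TIME_BASE stands in for AV_TIME_BASE, and AnalyzeLimits, sketch_analyze_limits() and sketch_pick_limit() are hypothetical names.

```c
#include <stdint.h>
#include <string.h>

/* Stand-in for FFmpeg's AV_TIME_BASE: the limits are in microseconds. */
#define SKETCH_TIME_BASE 1000000LL

/* Hypothetical grouping of the three limits used by the read loop. */
typedef struct {
    int64_t all_streams; /* max_analyze_duration: once every stream is analyzed */
    int64_t stream;      /* max_stream_analyze_duration: audio/video otherwise */
    int64_t subtitle;    /* max_subtitle_analyze_duration */
} AnalyzeLimits;

/* Mirrors the default selection done when analyzeduration is left at 0. */
static AnalyzeLimits sketch_analyze_limits(const char *format_name,
                                           int64_t max_analyze_duration)
{
    AnalyzeLimits l = { max_analyze_duration, max_analyze_duration,
                        max_analyze_duration };
    if (max_analyze_duration == 0) {
        l.all_streams = l.stream = 5 * SKETCH_TIME_BASE;
        l.subtitle = 30 * SKETCH_TIME_BASE;
        if (!strcmp(format_name, "flv"))
            l.stream = 90 * SKETCH_TIME_BASE;
        if (!strcmp(format_name, "mpeg") || !strcmp(format_name, "mpegts"))
            l.stream = 7 * SKETCH_TIME_BASE;
    }
    return l;
}

/* Picks the limit compared against t, as in the "t >= limit" check. */
static int64_t sketch_pick_limit(const AnalyzeLimits *l,
                                 int analyzed_all_streams, int is_subtitle)
{
    if (analyzed_all_streams)
        return l->all_streams;
    if (is_subtitle)
        return l->subtitle;
    return l->stream;
}
```

With analyzeduration left at 0, analysis of an FLV stream may therefore continue until 90 seconds of media have been examined (unless probesize is reached first), whereas a user-supplied value replaces all three limits.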

find_probe_decoder

```c
static const AVCodec *find_probe_decoder(AVFormatContext *s, const AVStream *st, enum AVCodecID codec_id)
{
    const AVCodec *codec;

#if CONFIG_H264_DECODER
    /* Other parts of the code assume this decoder to be used for h264,
     * so force it if possible. */
    if (codec_id == AV_CODEC_ID_H264)
        return avcodec_find_decoder_by_name("h264");
#endif

    codec = ff_find_decoder(s, st, codec_id);
    if (!codec)
        return NULL;

    if (codec->capabilities & AV_CODEC_CAP_AVOID_PROBING) {
        const AVCodec *probe_codec = NULL;
        void *iter = NULL;
        while ((probe_codec = av_codec_iterate(&iter))) {
            if (probe_codec->id == codec->id &&
                    av_codec_is_decoder(probe_codec) &&
                    !(probe_codec->capabilities & (AV_CODEC_CAP_AVOID_PROBING | AV_CODEC_CAP_EXPERIMENTAL))) {
                return probe_codec;
            }
        }
    }

    return codec;
}
```
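The interesting part of find_probe_decoder() is the fallback: if the normally chosen decoder is flagged AV_CODEC_CAP_AVOID_PROBING, it scans all codecs for another decoder with the same codec ID that is neither "avoid probing" nor experimental. The sketch below models only that fallback (the H.264 special case is left out) over a plain array instead of av_codec_iterate(). SketchCodec, the SKETCH_CAP_* bits and sketch_probe_decoder() are hypothetical stand-ins, not FFmpeg API.

```c
#include <stddef.h>

/* Hypothetical capability bits standing in for AV_CODEC_CAP_AVOID_PROBING
 * and AV_CODEC_CAP_EXPERIMENTAL. */
#define SKETCH_CAP_AVOID_PROBING 0x1u
#define SKETCH_CAP_EXPERIMENTAL  0x2u

typedef struct {
    const char *name;
    int id;          /* codec ID shared by all implementations of a format */
    int is_decoder;
    unsigned caps;
} SketchCodec;

/* Returns a probe-friendly decoder with the same ID when the chosen one
 * asks to be avoided during probing; otherwise returns the chosen one. */
static const SketchCodec *sketch_probe_decoder(const SketchCodec *registry, size_t n,
                                               const SketchCodec *chosen)
{
    if (!chosen)
        return NULL;
    if (chosen->caps & SKETCH_CAP_AVOID_PROBING) {
        for (size_t i = 0; i < n; i++) {
            const SketchCodec *c = &registry[i];
            if (c->id == chosen->id && c->is_decoder &&
                !(c->caps & (SKETCH_CAP_AVOID_PROBING | SKETCH_CAP_EXPERIMENTAL)))
                return c;
        }
    }
    return chosen; /* no better candidate: keep the original choice */
}
```

Note that if no alternative exists, the "avoid probing" decoder is still used, so probing degrades gracefully rather than failing.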

ff_find_decoder()

- ff_find_decoder() is similar to the older find_decoder() and is used to find a suitable decoder. Its definition is as follows:

```c
const AVCodec *ff_find_decoder(AVFormatContext *s, const AVStream *st,
                               enum AVCodecID codec_id)
{
    switch (st->codecpar->codec_type) {
    case AVMEDIA_TYPE_VIDEO:
        if (s->video_codec)    return s->video_codec;
        break;
    case AVMEDIA_TYPE_AUDIO:
        if (s->audio_codec)    return s->audio_codec;
        break;
    case AVMEDIA_TYPE_SUBTITLE:
        if (s->subtitle_codec) return s->subtitle_codec;
        break;
    }

    return avcodec_find_decoder(codec_id);
}
```

- As the code shows, if the caller has forced a decoder for this media type on the AVFormatContext (video_codec, audio_codec or subtitle_codec), that decoder is returned directly.

- Otherwise it calls avcodec_find_decoder() to look up a decoder by codec ID.

- avcodec_find_decoder() is a public FFmpeg API function and is not analyzed in detail here.
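ff_find_decoder() therefore resolves a decoder in two tiers: a decoder forced by the caller for the stream's media type wins, otherwise the lookup is by codec ID. The sketch below models both tiers with hypothetical types (SketchDecoder, SketchForced and sketch_find_decoder() are not FFmpeg API). The by-ID tier follows my reading of the internal find_codec() helper that avcodec_find_decoder() calls, namely that a non-experimental implementation is preferred and an experimental one is only a fallback; treat that detail as an assumption.

```c
#include <stddef.h>

typedef enum { SK_VIDEO, SK_AUDIO, SK_SUBTITLE, SK_DATA } SketchMediaType;

typedef struct {
    const char *name;
    int id;
    int experimental;
} SketchDecoder;

/* Hypothetical stand-in for the video_codec / audio_codec / subtitle_codec
 * fields a caller may set on AVFormatContext. */
typedef struct {
    const SketchDecoder *video, *audio, *subtitle;
} SketchForced;

/* Lookup by ID; prefers a non-experimental match (assumed behavior). */
static const SketchDecoder *sketch_find_by_id(const SketchDecoder *reg, size_t n, int id)
{
    const SketchDecoder *experimental = NULL;
    for (size_t i = 0; i < n; i++) {
        if (reg[i].id != id)
            continue;
        if (!reg[i].experimental)
            return &reg[i];
        if (!experimental)
            experimental = &reg[i]; /* remember as a fallback */
    }
    return experimental;
}

/* Tier 1: forced decoder for the media type; tier 2: lookup by codec ID. */
static const SketchDecoder *sketch_find_decoder(const SketchForced *forced,
                                                SketchMediaType type,
                                                const SketchDecoder *reg, size_t n, int id)
{
    switch (type) {
    case SK_VIDEO:    if (forced->video)    return forced->video;    break;
    case SK_AUDIO:    if (forced->audio)    return forced->audio;    break;
    case SK_SUBTITLE: if (forced->subtitle) return forced->subtitle; break;
    default:          break;
    }
    return sketch_find_by_id(reg, n, id);
}
```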

avcodec_find_decoder()

```c
const AVCodec *avcodec_find_decoder(enum AVCodecID id)
{
    return find_codec(id, av_codec_is_decoder);
}
```

read_frame_internal()

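- read_frame_internal() reads one frame of compressed bitstream data.

- The FFmpeg API function av_read_frame() calls read_frame_internal() internally.

- Therefore read_frame_internal() and av_read_frame() can be regarded as essentially equivalent; av_read_frame() mainly adds packet buffering on top, for example returning the packets that avformat_find_stream_info() stored in packet_buffer, or handling AVFMT_FLAG_GENPTS.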

```c
static int read_frame_internal(AVFormatContext *s, AVPacket *pkt)
{
    FFFormatContext *const si = ffformatcontext(s);
    int ret, got_packet = 0;
    AVDictionary *metadata = NULL;

    while (!got_packet && !si->parse_queue.head) {
        AVStream *st;
        FFStream *sti;

        /* read next packet */
        ret = ff_read_packet(s, pkt);
        if (ret < 0) {
            if (ret == AVERROR(EAGAIN))
                return ret;
            /* flush the parsers */
            for (unsigned i = 0; i < s->nb_streams; i++) {
                AVStream *const st  = s->streams[i];
                FFStream *const sti = ffstream(st);
                if (sti->parser && sti->need_parsing)
                    parse_packet(s, pkt, st->index, 1);
            }
            /* all remaining packets are now in parse_queue =>
             * really terminate parsing */
            break;
        }
        ret = 0;
        st  = s->streams[pkt->stream_index];
        sti = ffstream(st);

        st->event_flags |= AVSTREAM_EVENT_FLAG_NEW_PACKETS;

        /* update context if required */
        if (sti->need_context_update) {
            if (avcodec_is_open(sti->avctx)) {
                av_log(s, AV_LOG_DEBUG, "Demuxer context update while decoder is open, closing and trying to re-open\n");
                avcodec_close(sti->avctx);
                sti->info->found_decoder = 0;
            }

            /* close parser, because it depends on the codec */
            if (sti->parser && sti->avctx->codec_id != st->codecpar->codec_id) {
                av_parser_close(sti->parser);
                sti->parser = NULL;
            }

            ret = avcodec_parameters_to_context(sti->avctx, st->codecpar);
            if (ret < 0) {
                av_packet_unref(pkt);
                return ret;
            }

            sti->need_context_update = 0;
        }

        if (pkt->pts != AV_NOPTS_VALUE &&
            pkt->dts != AV_NOPTS_VALUE &&
            pkt->pts < pkt->dts) {
            av_log(s, AV_LOG_WARNING,
                   "Invalid timestamps stream=%d, pts=%s, dts=%s, size=%d\n",
                   pkt->stream_index,
                   av_ts2str(pkt->pts),
                   av_ts2str(pkt->dts),
                   pkt->size);
        }
        if (s->debug & FF_FDEBUG_TS)
            av_log(s, AV_LOG_DEBUG,
                   "ff_read_packet stream=%d, pts=%s, dts=%s, size=%d, duration=%"PRId64", flags=%d\n",
                   pkt->stream_index,
                   av_ts2str(pkt->pts),
                   av_ts2str(pkt->dts),
                   pkt->size, pkt->duration, pkt->flags);

        if (sti->need_parsing && !sti->parser && !(s->flags & AVFMT_FLAG_NOPARSE)) {
            sti->parser = av_parser_init(st->codecpar->codec_id);
            if (!sti->parser) {
                av_log(s, AV_LOG_VERBOSE, "parser not found for codec "
                       "%s, packets or times may be invalid.\n",
                       avcodec_get_name(st->codecpar->codec_id));
                /* no parser available: just output the raw packets */
                sti->need_parsing = AVSTREAM_PARSE_NONE;
            } else if (sti->need_parsing == AVSTREAM_PARSE_HEADERS)
                sti->parser->flags |= PARSER_FLAG_COMPLETE_FRAMES;
            else if (sti->need_parsing == AVSTREAM_PARSE_FULL_ONCE)
                sti->parser->flags |= PARSER_FLAG_ONCE;
            else if (sti->need_parsing == AVSTREAM_PARSE_FULL_RAW)
                sti->parser->flags |= PARSER_FLAG_USE_CODEC_TS;
        }

        if (!sti->need_parsing || !sti->parser) {
            /* no parsing needed: we just output the packet as is */
            compute_pkt_fields(s, st, NULL, pkt, AV_NOPTS_VALUE, AV_NOPTS_VALUE);
            if ((s->iformat->flags & AVFMT_GENERIC_INDEX) &&
                (pkt->flags & AV_PKT_FLAG_KEY) && pkt->dts != AV_NOPTS_VALUE) {
                ff_reduce_index(s, st->index);
                av_add_index_entry(st, pkt->pos, pkt->dts,
                                   0, 0, AVINDEX_KEYFRAME);
            }
            got_packet = 1;
        } else if (st->discard < AVDISCARD_ALL) {
            if ((ret = parse_packet(s, pkt, pkt->stream_index, 0)) < 0)
                return ret;
            st->codecpar->sample_rate = sti->avctx->sample_rate;
            st->codecpar->bit_rate = sti->avctx->bit_rate;
#if FF_API_OLD_CHANNEL_LAYOUT
FF_DISABLE_DEPRECATION_WARNINGS
            st->codecpar->channels = sti->avctx->ch_layout.nb_channels;
            st->codecpar->channel_layout = sti->avctx->ch_layout.order == AV_CHANNEL_ORDER_NATIVE ?
                                           sti->avctx->ch_layout.u.mask : 0;
FF_ENABLE_DEPRECATION_WARNINGS
#endif
            ret = av_channel_layout_copy(&st->codecpar->ch_layout, &sti->avctx->ch_layout);
            if (ret < 0)
                return ret;
            st->codecpar->codec_id = sti->avctx->codec_id;
        } else {
            /* free packet */
            av_packet_unref(pkt);
        }
        if (pkt->flags & AV_PKT_FLAG_KEY)
            sti->skip_to_keyframe = 0;
        if (sti->skip_to_keyframe) {
            av_packet_unref(pkt);
            got_packet = 0;
        }
    }

    if (!got_packet && si->parse_queue.head)
        ret = avpriv_packet_list_get(&si->parse_queue, pkt);

    if (ret >= 0) {
        AVStream *const st  = s->streams[pkt->stream_index];
        FFStream *const sti = ffstream(st);
        int discard_padding = 0;
        if (sti->first_discard_sample && pkt->pts != AV_NOPTS_VALUE) {
            int64_t pts = pkt->pts - (is_relative(pkt->pts) ? RELATIVE_TS_BASE : 0);
            int64_t sample = ts_to_samples(st, pts);
            int64_t duration = ts_to_samples(st, pkt->duration);
            int64_t end_sample = sample + duration;
            if (duration > 0 && end_sample >= sti->first_discard_sample &&
                sample < sti->last_discard_sample)
                discard_padding = FFMIN(end_sample - sti->first_discard_sample, duration);
        }
        if (sti->start_skip_samples && (pkt->pts == 0 || pkt->pts == RELATIVE_TS_BASE))
            sti->skip_samples = sti->start_skip_samples;
        sti->skip_samples = FFMAX(0, sti->skip_samples);
        if (sti->skip_samples || discard_padding) {
            uint8_t *p = av_packet_new_side_data(pkt, AV_PKT_DATA_SKIP_SAMPLES, 10);
            if (p) {
                AV_WL32(p, sti->skip_samples);
                AV_WL32(p + 4, discard_padding);
                av_log(s, AV_LOG_DEBUG, "demuxer injecting skip %u / discard %u\n",
                       (unsigned)sti->skip_samples, (unsigned)discard_padding);
            }
            sti->skip_samples = 0;
        }

        if (sti->inject_global_side_data) {
            for (int i = 0; i < st->nb_side_data; i++) {
                const AVPacketSideData *const src_sd = &st->side_data[i];
                uint8_t *dst_data;

                if (av_packet_get_side_data(pkt, src_sd->type, NULL))
                    continue;

                dst_data = av_packet_new_side_data(pkt, src_sd->type, src_sd->size);
                if (!dst_data) {
                    av_log(s, AV_LOG_WARNING, "Could not inject global side data\n");
                    continue;
                }

                memcpy(dst_data, src_sd->data, src_sd->size);
            }
            sti->inject_global_side_data = 0;
        }
    }

    if (!si->metafree) {
        int metaret = av_opt_get_dict_val(s, "metadata", AV_OPT_SEARCH_CHILDREN, &metadata);
        if (metadata) {
            s->event_flags |= AVFMT_EVENT_FLAG_METADATA_UPDATED;
            av_dict_copy(&s->metadata, metadata, 0);
            av_dict_free(&metadata);
            av_opt_set_dict_val(s, "metadata", NULL, AV_OPT_SEARCH_CHILDREN);
        }
        si->metafree = metaret == AVERROR_OPTION_NOT_FOUND;
    }

    if (s->debug & FF_FDEBUG_TS)
        av_log(s, AV_LOG_DEBUG,
               "read_frame_internal stream=%d, pts=%s, dts=%s, "
               "size=%d, duration=%"PRId64", flags=%d\n",
               pkt->stream_index,
               av_ts2str(pkt->pts),
               av_ts2str(pkt->dts),
               pkt->size, pkt->duration, pkt->flags);

    /* A demuxer might have returned EOF because of an IO error, let's
     * propagate this back to the user. */
    if (ret == AVERROR_EOF && s->pb && s->pb->error < 0 && s->pb->error != AVERROR(EAGAIN))
        ret = s->pb->error;

    return ret;
}
```
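Near its end, read_frame_internal() computes how many trailing samples of an audio packet fall into the stream's discard range and writes that, together with the number of samples to skip at the start, into a 10-byte AV_PKT_DATA_SKIP_SAMPLES side-data block as two little-endian 32-bit values at offsets 0 and 4. A standalone sketch of that arithmetic and byte layout follows; sketch_wl32() imitates what AV_WL32 does, and all sketch_* names are hypothetical. The last two bytes are simply zeroed here.

```c
#include <stdint.h>

/* Little-endian 32-bit store, equivalent in effect to AV_WL32. */
static void sketch_wl32(uint8_t *p, uint32_t v)
{
    p[0] = (uint8_t)(v);
    p[1] = (uint8_t)(v >> 8);
    p[2] = (uint8_t)(v >> 16);
    p[3] = (uint8_t)(v >> 24);
}

/* Samples at the end of this packet that lie in [first_discard, last_discard),
 * capped at the packet duration, as in read_frame_internal(). */
static int64_t sketch_discard_padding(int64_t sample, int64_t duration,
                                      int64_t first_discard, int64_t last_discard)
{
    int64_t end_sample = sample + duration;
    if (duration > 0 && end_sample >= first_discard && sample < last_discard) {
        int64_t pad = end_sample - first_discard;
        return pad < duration ? pad : duration;
    }
    return 0;
}

/* Fills the 10-byte payload: skip count at offset 0, discard padding at
 * offset 4; bytes 8 and 9 are left zero in this sketch. */
static void sketch_fill_skip_side_data(uint8_t out[10], uint32_t skip, uint32_t discard)
{
    for (int i = 0; i < 10; i++)
        out[i] = 0;
    sketch_wl32(out, skip);
    sketch_wl32(out + 4, discard);
}
```

For example, a 1024-sample packet starting at sample 1000, with discarding starting at sample 1500, gets a discard padding of 524 samples.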

try_decode_frame()

- As its name suggests, try_decode_frame() "tries to decode some frames". Its definition is as follows:

```c
/* returns 1 or 0 if or if not decoded data was returned, or a negative error */
static int try_decode_frame(AVFormatContext *s, AVStream *st,
                            const AVPacket *avpkt, AVDictionary **options)
{
    FFStream *const sti = ffstream(st);
    AVCodecContext *const avctx = sti->avctx;
    const AVCodec *codec;
    int got_picture = 1, ret = 0;
    AVFrame *frame = av_frame_alloc();
    AVSubtitle subtitle;
    AVPacket pkt = *avpkt;
    int do_skip_frame = 0;
    enum AVDiscard skip_frame;

    if (!frame)
        return AVERROR(ENOMEM);

    if (!avcodec_is_open(avctx) &&
        sti->info->found_decoder <= 0 &&
        (st->codecpar->codec_id != -sti->info->found_decoder || !st->codecpar->codec_id)) {
        AVDictionary *thread_opt = NULL;

        codec = find_probe_decoder(s, st, st->codecpar->codec_id);

        if (!codec) {
            sti->info->found_decoder = -st->codecpar->codec_id;
            ret                      = -1;
            goto fail;
        }

        /* Force thread count to 1 since the H.264 decoder will not extract
         * SPS and PPS to extradata during multi-threaded decoding. */
        av_dict_set(options ? options : &thread_opt, "threads", "1", 0);
        /* Force lowres to 0. The decoder might reduce the video size by the
         * lowres factor, and we don't want that propagated to the stream's
         * codecpar */
        av_dict_set(options ? options : &thread_opt, "lowres", "0", 0);
        if (s->codec_whitelist)
            av_dict_set(options ? options : &thread_opt, "codec_whitelist", s->codec_whitelist, 0);
        ret = avcodec_open2(avctx, codec, options ? options : &thread_opt);
        if (!options)
            av_dict_free(&thread_opt);
        if (ret < 0) {
            sti->info->found_decoder = -avctx->codec_id;
            goto fail;
        }
        sti->info->found_decoder = 1;
    } else if (!sti->info->found_decoder)
        sti->info->found_decoder = 1;

    if (sti->info->found_decoder < 0) {
        ret = -1;
        goto fail;
    }

    if (avpriv_codec_get_cap_skip_frame_fill_param(avctx->codec)) {
        do_skip_frame = 1;
        skip_frame = avctx->skip_frame;
        avctx->skip_frame = AVDISCARD_ALL;
    }

    while ((pkt.size > 0 || (!pkt.data && got_picture)) &&
           ret >= 0 &&
           (!has_codec_parameters(st, NULL) || !has_decode_delay_been_guessed(st) ||
            (!sti->codec_info_nb_frames &&
             (avctx->codec->capabilities & AV_CODEC_CAP_CHANNEL_CONF)))) {
        got_picture = 0;
        if (avctx->codec_type == AVMEDIA_TYPE_VIDEO ||
            avctx->codec_type == AVMEDIA_TYPE_AUDIO) {
            ret = avcodec_send_packet(avctx, &pkt);
            if (ret < 0 && ret != AVERROR(EAGAIN) && ret != AVERROR_EOF)
                break;
            if (ret >= 0)
                pkt.size = 0;
            ret = avcodec_receive_frame(avctx, frame);
            if (ret >= 0)
                got_picture = 1;
            if (ret == AVERROR(EAGAIN) || ret == AVERROR_EOF)
                ret = 0;
        } else if (avctx->codec_type == AVMEDIA_TYPE_SUBTITLE) {
            ret = avcodec_decode_subtitle2(avctx, &subtitle,
                                           &got_picture, &pkt);
            if (got_picture)
                avsubtitle_free(&subtitle);
            if (ret >= 0)
                pkt.size = 0;
        }
        if (ret >= 0) {
            if (got_picture)
                sti->nb_decoded_frames++;
            ret = got_picture;
        }
    }

fail:
    if (do_skip_frame) {
        avctx->skip_frame = skip_frame;
    }

    av_frame_free(&frame);
    return ret;
}
```

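- As the definition shows, try_decode_frame() first checks whether the stream's decoder is already open and, if not, opens the appropriate decoder.

- It then decodes with a different API depending on the stream type: avcodec_send_packet()/avcodec_receive_frame() for video and audio, and avcodec_decode_subtitle2() for subtitles.

- The decoding loop keeps running for as long as the whole while() condition holds: there is still packet data to send (or, when flushing with an empty packet, the decoder is still returning frames), no error has occurred, and the stream information is still incomplete (has_codec_parameters() fails, the decode delay has not been guessed yet, or a codec with AV_CODEC_CAP_CHANNEL_CONF has not decoded any frame yet).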
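The stopping logic of that while() loop can be isolated into a small predicate. This is a standalone sketch, not FFmpeg code: SketchDecodeState and sketch_keep_decoding() are hypothetical names, and plain booleans stand in for the real calls (has_codec_parameters(), has_decode_delay_been_guessed()) and for the AV_CODEC_CAP_CHANNEL_CONF capability test.

```c
#include <stdbool.h>

/* Hypothetical snapshot of what the while() condition inspects. */
typedef struct {
    int  pkt_size;           /* bytes of the packet not yet sent to the decoder */
    bool pkt_has_data;       /* false for the empty packet used to flush */
    bool got_picture;        /* previous iteration produced a frame */
    int  ret;                /* last return code; negative stops the loop */
    bool params_complete;    /* has_codec_parameters(st, NULL) */
    bool delay_guessed;      /* has_decode_delay_been_guessed(st) */
    int  frames_seen;        /* sti->codec_info_nb_frames */
    bool needs_channel_conf; /* decoder has AV_CODEC_CAP_CHANNEL_CONF */
} SketchDecodeState;

static bool sketch_keep_decoding(const SketchDecodeState *s)
{
    /* Input left: unsent bytes, or a flush that is still yielding frames. */
    bool have_input = s->pkt_size > 0 || (!s->pkt_has_data && s->got_picture);
    /* Information still missing for this stream. */
    bool need_info = !s->params_complete || !s->delay_guessed ||
                     (s->frames_seen == 0 && s->needs_channel_conf);
    return have_input && s->ret >= 0 && need_info;
}
```

Seen this way, the loop ends as soon as any one of the three parts becomes false, which is why a stream whose parameters are already complete is never decoded at all.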

One of the conditions in the while() statement is has_codec_parameters(), which checks whether all required parameters of the AVStream have been set. It is used in several places in avformat_find_stream_info().
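The pattern used by has_codec_parameters() is a chain of per-media-type checks that report the first missing field through an optional message pointer. A heavily reduced model, covering only a few audio and video fields, is sketched below; SketchParams, SketchKind and sketch_has_params() are hypothetical, with booleans standing in for checks such as codec_id != AV_CODEC_ID_NONE and found_decoder >= 0.

```c
#include <stddef.h>

typedef enum { SKP_AUDIO, SKP_VIDEO } SketchKind;

/* Hypothetical, reduced view of the codec-context fields being checked. */
typedef struct {
    SketchKind kind;
    int codec_known;  /* codec_id != AV_CODEC_ID_NONE */
    int sample_rate;
    int channels;
    int width;
    int format_known; /* sample_fmt or pix_fmt has been set */
    int decoder_ok;   /* info->found_decoder >= 0 (no failed lookup) */
} SketchParams;

/* Returns 1 when complete; otherwise 0 and stores a reason in *why,
 * in the same way as the FAIL() macro. */
static int sketch_has_params(const SketchParams *p, const char **why)
{
#define SKETCH_FAIL(msg) do { if (why) *why = (msg); return 0; } while (0)
    if (!p->codec_known)
        SKETCH_FAIL("unknown codec");
    if (p->kind == SKP_AUDIO) {
        if (p->decoder_ok && !p->format_known)
            SKETCH_FAIL("unspecified sample format");
        if (!p->sample_rate)
            SKETCH_FAIL("unspecified sample rate");
        if (!p->channels)
            SKETCH_FAIL("unspecified number of channels");
    } else {
        if (!p->width)
            SKETCH_FAIL("unspecified size");
        if (p->decoder_ok && !p->format_known)
            SKETCH_FAIL("unspecified pixel format");
    }
    return 1;
#undef SKETCH_FAIL
}
```

The format checks are skipped when decoder lookup has failed, because without a decoder the sample or pixel format can never be filled in.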

has_codec_parameters()

- has_codec_parameters() checks whether all required parameters of an AVStream have been set.

- Its definition is as follows:

```c
static int has_codec_parameters(const AVStream *st, const char **errmsg_ptr)
{
    const FFStream *const sti = cffstream(st);
    const AVCodecContext *const avctx = sti->avctx;

#define FAIL(errmsg) do {                                         \
        if (errmsg_ptr)                                           \
            *errmsg_ptr = errmsg;                                 \
        return 0;                                                 \
    } while (0)

    if (   avctx->codec_id == AV_CODEC_ID_NONE
        && avctx->codec_type != AVMEDIA_TYPE_DATA)
        FAIL("unknown codec");
    switch (avctx->codec_type) {
    case AVMEDIA_TYPE_AUDIO:
        if (!avctx->frame_size && determinable_frame_size(avctx))
            FAIL("unspecified frame size");
        if (sti->info->found_decoder >= 0 &&
            avctx->sample_fmt == AV_SAMPLE_FMT_NONE)
            FAIL("unspecified sample format");
        if (!avctx->sample_rate)
            FAIL("unspecified sample rate");
        if (!avctx->ch_layout.nb_channels)
            FAIL("unspecified number of channels");
        if (sti->info->found_decoder >= 0 && !sti->nb_decoded_frames && avctx->codec_id == AV_CODEC_ID_DTS)
            FAIL("no decodable DTS frames");
        break;
    case AVMEDIA_TYPE_VIDEO:
        if (!avctx->width)
            FAIL("unspecified size");
        if (sti->info->found_decoder >= 0 && avctx->pix_fmt == AV_PIX_FMT_NONE)
            FAIL("unspecified pixel format");
        if (st->codecpar->codec_id == AV_CODEC_ID_RV30 || st->codecpar->codec_id == AV_CODEC_ID_RV40)
            if (!st->sample_aspect_ratio.num && !st->codecpar->sample_aspect_ratio.num && !sti->codec_info_nb_frames)
                FAIL("no frame in rv30/40 and no sar");
        break;
    case AVMEDIA_TYPE_SUBTITLE:
        if (avctx->codec_id == AV_CODEC_ID_HDMV_PGS_SUBTITLE && !avctx->width)
            FAIL("unspecified size");
        break;
    case AVMEDIA_TYPE_DATA:
        if (avctx->codec_id == AV_CODEC_ID_NONE) return 1;
    }

    return 1;
}
```

estimate_timings()

- estimate_timings() is called at the very end of avformat_find_stream_info() and estimates the duration of the AVFormatContext and of each AVStream.

- Its code is shown below.

static void estimate_timings(AVFormatContext *ic, int64_t old_offset)
{
    int64_t file_size;

    /* get the file size, if possible */
    if (ic->iformat->flags & AVFMT_NOFILE) {
        file_size = 0;
    } else {
        file_size = avio_size(ic->pb);
        file_size = FFMAX(0, file_size);
    }

    if ((!strcmp(ic->iformat->name, "mpeg") ||
         !strcmp(ic->iformat->name, "mpegts")) &&
        file_size && (ic->pb->seekable & AVIO_SEEKABLE_NORMAL)) {
        /* get accurate estimate from the PTSes */
        estimate_timings_from_pts(ic, old_offset);
        ic->duration_estimation_method = AVFMT_DURATION_FROM_PTS;
    } else if (has_duration(ic)) {
        /* at least one component has timings - we use them for all
         * the components */
        fill_all_stream_timings(ic);
        /* nut demuxer estimate the duration from PTS */
        if (!strcmp(ic->iformat->name, "nut"))
            ic->duration_estimation_method = AVFMT_DURATION_FROM_PTS;
        else
            ic->duration_estimation_method = AVFMT_DURATION_FROM_STREAM;
    } else {
        /* less precise: use bitrate info */
        estimate_timings_from_bit_rate(ic);
        ic->duration_estimation_method = AVFMT_DURATION_FROM_BITRATE;
    }
    update_stream_timings(ic);

    for (unsigned i = 0; i < ic->nb_streams; i++) {
        AVStream *const st = ic->streams[i];
        if (st->time_base.den)
            av_log(ic, AV_LOG_TRACE, "stream %u: start_time: %s duration: %s\n", i,
                   av_ts2timestr(st->start_time, &st->time_base),
                   av_ts2timestr(st->duration, &st->time_base));
    }
    av_log(ic, AV_LOG_TRACE,
           "format: start_time: %s duration: %s (estimate from %s) bitrate=%"PRId64" kb/s\n",
           av_ts2timestr(ic->start_time, &AV_TIME_BASE_Q),
           av_ts2timestr(ic->duration, &AV_TIME_BASE_Q),
           duration_estimate_name(ic->duration_estimation_method),
           (int64_t)ic->bit_rate / 1000);
}

As the code of estimate_timings() shows, there are three estimation methods:

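- (1) From the PTS (presentation timestamps). This method is used only for the "mpeg" and "mpegts" demuxers, and only when the file size is known and the input is seekable; it calls estimate_timings_from_pts(). The basic idea is to read the PTS of the AVPacket at the end of the stream and the PTS of the AVPacket at the start, and subtract one from the other to get the duration.

- (2) From the known duration of some stream. This method calls fill_all_stream_timings(). I have not read its code closely, but judging from the comment ("at least one component has timings - we use them for all the components"), when some of the audio/video streams carry duration information, that information is copied to the other streams.

- (3) From the bitrate. This method calls estimate_timings_from_bit_rate(). The basic idea is to take the size of the whole file and the overall bitrate, and divide the former by the latter to get the duration. As the code comment says, this is the least precise of the three.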
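The branch that picks one of these three methods, plus method (1) in its most basic form, can be sketched as follows. This is an illustrative simplification, not FFmpeg code: ToyDurationMethod, toy_choose_method() and toy_duration_us_from_pts() are hypothetical names, the has_duration(ic) check is reduced to a boolean argument, and the PTS helper ignores complications such as timestamp wrap-around and actually locating the first and last packets in the file.

```c
#include <stdbool.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical labels for the three outcomes; FFmpeg's real values are
 * AVFMT_DURATION_FROM_PTS / _FROM_STREAM / _FROM_BITRATE. */
typedef enum {
    TOY_FROM_PTS,
    TOY_FROM_STREAM,
    TOY_FROM_BITRATE
} ToyDurationMethod;

/* Simplified copy of the if/else chain in estimate_timings(). The real
 * has_duration(ic) check is reduced to a boolean argument. */
static ToyDurationMethod toy_choose_method(const char *fmt_name,
                                           int64_t file_size, bool seekable,
                                           bool some_stream_has_duration)
{
    if ((!strcmp(fmt_name, "mpeg") || !strcmp(fmt_name, "mpegts")) &&
        file_size && seekable)
        return TOY_FROM_PTS;
    if (some_stream_has_duration)
        /* nut: durations are still copied between streams, but the
         * method is labelled "from PTS" */
        return !strcmp(fmt_name, "nut") ? TOY_FROM_PTS : TOY_FROM_STREAM;
    return TOY_FROM_BITRATE;
}

/* Method (1) reduced to its core idea: last PTS minus first PTS, in ticks
 * of tb_num/tb_den seconds, converted to microseconds (AV_TIME_BASE units).
 * Ignores PTS wrap-around and possible overflow of the product. */
static int64_t toy_duration_us_from_pts(int64_t first_pts, int64_t last_pts,
                                        int tb_num, int tb_den)
{
    return (last_pts - first_pts) * 1000000LL * tb_num / tb_den;
}
```

For instance, an MPEG-TS stream with a 1/90000 time base whose first and last PTS are 126000 and 1026000 gives toy_duration_us_from_pts(126000, 1026000, 1, 90000) = 10000000 microseconds, i.e. 10 seconds. Note that a non-seekable MPEG-TS input (for example a live stream) falls through to method (2) or (3).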

estimate_timings_from_bit_rate()

- Here is the code of estimate_timings_from_bit_rate(), the simplest of the methods above.

static void estimate_timings_from_bit_rate(AVFormatContext *ic)
{
    FFFormatContext *const si = ffformatcontext(ic);
    int show_warning = 0;

    /* if bit_rate is already set, we believe it */
    if (ic->bit_rate <= 0) {
        int64_t bit_rate = 0;
        for (unsigned i = 0; i < ic->nb_streams; i++) {
            const AVStream *const st = ic->streams[i];
            const FFStream *const sti = cffstream(st);
            if (st->codecpar->bit_rate <= 0 && sti->avctx->bit_rate > 0)
                st->codecpar->bit_rate = sti->avctx->bit_rate;
            if (st->codecpar->bit_rate > 0) {
                if (INT64_MAX - st->codecpar->bit_rate < bit_rate) {
                    bit_rate = 0;
                    break;
                }
                bit_rate += st->codecpar->bit_rate;
            } else if (st->codecpar->codec_type == AVMEDIA_TYPE_VIDEO && sti->codec_info_nb_frames > 1) {
                // If we have a videostream with packets but without a bitrate
                // then consider the sum not known
                bit_rate = 0;
                break;
            }
        }
        ic->bit_rate = bit_rate;
    }

    /* if duration is already set, we believe it */
    if (ic->duration == AV_NOPTS_VALUE &&
        ic->bit_rate != 0) {
        int64_t filesize = ic->pb ? avio_size(ic->pb) : 0;
        if (filesize > si->data_offset) {
            filesize -= si->data_offset;
            for (unsigned i = 0; i < ic->nb_streams; i++) {
                AVStream *const st = ic->streams[i];

                if (   st->time_base.num <= INT64_MAX / ic->bit_rate
                    && st->duration == AV_NOPTS_VALUE) {
                    st->duration = av_rescale(filesize, 8LL * st->time_base.den,
                                              ic->bit_rate *
                                              (int64_t) st->time_base.num);
                    show_warning = 1;
                }
            }
        }
    }
    if (show_warning)
        av_log(ic, AV_LOG_WARNING,
               "Estimating duration from bitrate, this may be inaccurate\n");
}

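- The code shows that the function works in two steps:

- (1) If the AVFormatContext has no bit_rate yet, it adds up the bit_rate of all AVStreams and uses the sum as the bit_rate of the AVFormatContext. If the sum would overflow, or if a video stream that already has packets reports no bitrate, the sum is treated as unknown (0).

- (2) It divides the file size filesize (minus the header size data_offset) by the bitrate to get the duration.

- Specifically: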

- AVStream->duration=(filesize*8/bit_rate)/time_base

- PS:

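- 1) filesize is multiplied by 8 to convert bytes to bits.

- 2) The actual computation is done by av_rescale(). x = av_rescale(a, b, c) means x = a*b/c. av_rescale() forwards to av_rescale_rnd() with AV_ROUND_NEAR_INF, so the result is rounded to the nearest integer, and unlike writing a*b/c directly, it does not overflow on the intermediate product a*b.

- 3) The division by time_base is needed because the duration in AVStream is expressed in units of time_base. Note that this differs from the duration in AVFormatContext, which is expressed in units of 1/AV_TIME_BASE seconds (AV_TIME_BASE is fixed at 1000000, so the unit is the microsecond).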
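The two steps and the formula above can be checked with a small standalone sketch. It is not FFmpeg code: rescale_near(), sum_bit_rates() and duration_from_bitrate() are hypothetical helpers. rescale_near() imitates the rounding of av_rescale() but, as an explicit simplification, only for non-negative inputs and without av_rescale()'s overflow protection; sum_bit_rates() omits FFmpeg's "video stream with packets but no bitrate" rule.

```c
#include <stdint.h>

/* Hypothetical helper: a*b/c rounded to nearest, halves away from zero
 * (like AV_ROUND_NEAR_INF), for a, b >= 0 and c > 0 only. Unlike
 * av_rescale(), it does not protect the intermediate a*b from overflow. */
static int64_t rescale_near(int64_t a, int64_t b, int64_t c)
{
    return (a * b + c / 2) / c;
}

/* Step (1): sum the known per-stream bitrates, giving up (0 = unknown)
 * on overflow as the FFmpeg code does. Simplification: FFmpeg also gives
 * up when a video stream that has packets reports no bitrate. */
static int64_t sum_bit_rates(const int64_t *rates, int n)
{
    int64_t total = 0;
    for (int i = 0; i < n; i++) {
        if (rates[i] <= 0)
            continue;
        if (INT64_MAX - rates[i] < total)
            return 0;
        total += rates[i];
    }
    return total;
}

/* Step (2): duration in ticks of tb_num/tb_den seconds, i.e.
 * (payload_bytes * 8 / bit_rate) / time_base, with the same argument
 * order as the av_rescale() call in estimate_timings_from_bit_rate().
 * Returns -1 (a hypothetical "unknown" marker) when it cannot estimate. */
static int64_t duration_from_bitrate(int64_t filesize, int64_t data_offset,
                                     int64_t bit_rate, int tb_num, int tb_den)
{
    if (bit_rate <= 0 || filesize <= data_offset)
        return -1;
    return rescale_near(filesize - data_offset, 8LL * tb_den,
                        bit_rate * (int64_t)tb_num);
}
```

As a worked example: with a 10,000,000-byte payload and two streams at 640 kbit/s and 160 kbit/s, the summed bitrate is 800,000 bit/s, so the estimate is 80,000,000 bits / 800,000 bit/s = 100 s. In a 1/90000 time base that is duration_from_bitrate(10000000, 0, 800000, 1, 90000) = 9000000 ticks, and in AV_TIME_BASE units (time base 1/1000000) it is 100000000.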

int64_t av_rescale(int64_t a, int64_t b, int64_t c)
{
    return av_rescale_rnd(a, b, c, AV_ROUND_NEAR_INF);
}

- This completes the analysis of the main functions used by avformat_find_stream_info().
