1. Whisper deployment

For the detailed procedure, see: 🏠

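Create a project folder:

```bash
mkdir whisper
cd whisper
```

Create a virtual environment with conda and activate it:

```bash
conda create -n py310 python=3.10 -c conda-forge -y
conda activate py310
```

Install PyTorch:

```bash
pip install --pre torch torchvision torchaudio --extra-index-url
```

Download Whisper:

```bash
pip install --upgrade --no-deps --force-reinstall git+https://github.com/openai/whisper.git
```

Because Whisper was installed with --no-deps, install its related packages by hand (ffmpeg comes from Homebrew):

```bash
pip install tqdm
pip install numba
pip install tiktoken==0.3.3
brew install ffmpeg
```

Test whether Whisper was installed successfully. Without --language, Whisper detects the language automatically, so a Chinese clip is transcribed as Chinese: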

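```bash
whisper test.wav --model small  # test.wav is your own test file (mp3 also works); "small" selects the small model
```

When transcribing Chinese, Whisper often outputs Traditional characters. Adding the following parameters avoids this:

```bash
whisper test.wav --model small --language zh --initial_prompt "以下是普通话的句子。"
# Note: the prompt "以下是普通话的句子。" ("The following are sentences in Mandarin.") is written in
# Simplified Chinese; keep it exactly as is. In the author's tests, only this sentence had the effect.
```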
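The same call can also be made from Python through the openai-whisper package's load_model / transcribe API. The sketch below assumes the package installed above (the model weights are downloaded on first use); the function name transcribe_zh is hypothetical, and language and initial_prompt mirror the CLI flags used above.

```python
# Assumes the openai-whisper package installed above; transcribe_zh is a hypothetical helper name.
PROMPT = "以下是普通话的句子。"  # same Simplified-Chinese prompt as the CLI example


def transcribe_zh(path, model_name="small"):
    """Python-API counterpart of:
    whisper <path> --model small --language zh --initial_prompt "以下是普通话的句子。"
    """
    import whisper  # imported lazily so this module loads even where whisper is absent

    model = whisper.load_model(model_name)
    # language and initial_prompt correspond to --language and --initial_prompt
    result = model.transcribe(path, language="zh", initial_prompt=PROMPT)
    return result["text"]
```

For example, transcribe_zh("test.wav") returns the recognized text as a string, which is convenient when the transcription feeds directly into the evaluation step later on.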

2. Batch testing with a script

Create a test.sh script with the following content to run Chinese speech recognition on the wav files in a folder, one file at a time.

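```bash
#!/bin/bash

# Transcribe wav/A13_0.wav, wav/A13_1.wav, ... in order; stop at the first missing file
for ((i=0; i<300; i++)); do
    file="wav/A13_${i}.wav"
    if [ ! -f "$file" ]; then
        break
    fi
    whisper "$file" --model medium --output_dir denied --language zh --initial_prompt "以下是普通话的句子。"
done
```

For English speech recognition, change it to: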

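```bash
#!/bin/bash

# Transcribe en/0.wav, en/1.wav, ... in order; stop at the first missing file
for ((i=0; i<300; i++)); do
    file="en/${i}.wav"
    if [ ! -f "$file" ]; then
        break
    fi
    whisper "$file" --model small --output_dir denied --language en
done
```

3. Evaluating the results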

Speech recognition is usually evaluated with WER (word error rate), which measures how accurately speech is converted to text.
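For reference, the standard definition aligns the hypothesis to the reference with minimum edit distance and counts substitutions S, deletions D and insertions I against the N tokens of the reference. The worked example uses the reference "what is it" (N = 3) and the hypothesis "what is", which needs one deletion:

$$\mathrm{WER} = \frac{S + D + I}{N}, \qquad \mathrm{WER} = \frac{0 + 1 + 0}{3} \approx 33.33\%$$

Chinese text has no spaces between words, so when the code in this section is given plain strings it treats each character as a token; the same formula then yields the character error rate (CER).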

Here we mainly use the python-wer code from an open-source project on GitHub (🌟) to score the results.

Our reference (ground-truth) transcripts have the following format:

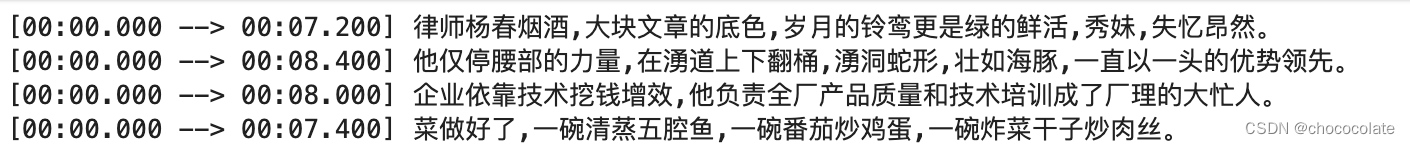

Whisper's predicted output has the following format:

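The text therefore has to be normalized (spaces and punctuation removed) before computing WER. The code is as follows (adapt calculate_WER to your own data as needed):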

import sys

import numpydef editDistance(r, h):'''This function is to calculate the edit distance of reference sentence and the hypothesis sentence.Main algorithm used is dynamic programming.Attributes: r -> the list of words produced by splitting reference sentence.h -> the list of words produced by splitting hypothesis sentence.'''d = numpy.zeros((len(r)+1)*(len(h)+1), dtype=numpy.uint8).reshape((len(r)+1, len(h)+1))for i in range(len(r)+1):d[i][0] = ifor j in range(len(h)+1):d[0][j] = jfor i in range(1, len(r)+1):for j in range(1, len(h)+1):if r[i-1] == h[j-1]:d[i][j] = d[i-1][j-1]else:substitute = d[i-1][j-1] + 1insert = d[i][j-1] + 1delete = d[i-1][j] + 1d[i][j] = min(substitute, insert, delete)return ddef getStepList(r, h, d):'''This function is to get the list of steps in the process of dynamic programming.Attributes: r -> the list of words produced by splitting reference sentence.h -> the list of words produced by splitting hypothesis sentence.d -> the matrix built when calulating the editting distance of h and r.'''x = len(r)y = len(h)list = []while True:if x == 0 and y == 0: breakelif x >= 1 and y >= 1 and d[x][y] == d[x-1][y-1] and r[x-1] == h[y-1]: list.append("e")x = x - 1y = y - 1elif y >= 1 and d[x][y] == d[x][y-1]+1:list.append("i")x = xy = y - 1elif x >= 1 and y >= 1 and d[x][y] == d[x-1][y-1]+1:list.append("s")x = x - 1y = y - 1else:list.append("d")x = x - 1y = yreturn list[::-1]def alignedPrint(list, r, h, result):'''This funcition is to print the result of comparing reference and hypothesis sentences in an aligned way.Attributes:list -> the list of steps.r -> the list of words produced by splitting reference sentence.h -> the list of words produced by splitting hypothesis sentence.result -> the rate calculated based on edit distance.'''print("REF:", end=" ")for i in range(len(list)):if list[i] == "i":count = 0for j in range(i):if list[j] == "d":count += 1index = i - countprint(" "*(len(h[index])), end=" ")elif list[i] == "s":count1 = 0for j in range(i):if 
list[j] == "i":count1 += 1index1 = i - count1count2 = 0for j in range(i):if list[j] == "d":count2 += 1index2 = i - count2if len(r[index1]) < len(h[index2]):print(r[index1] + " " * (len(h[index2])-len(r[index1])), end=" ")else:print(r[index1], end=" "),else:count = 0for j in range(i):if list[j] == "i":count += 1index = i - countprint(r[index], end=" "),print("\nHYP:", end=" ")for i in range(len(list)):if list[i] == "d":count = 0for j in range(i):if list[j] == "i":count += 1index = i - countprint(" " * (len(r[index])), end=" ")elif list[i] == "s":count1 = 0for j in range(i):if list[j] == "i":count1 += 1index1 = i - count1count2 = 0for j in range(i):if list[j] == "d":count2 += 1index2 = i - count2if len(r[index1]) > len(h[index2]):print(h[index2] + " " * (len(r[index1])-len(h[index2])), end=" ")else:print(h[index2], end=" ")else:count = 0for j in range(i):if list[j] == "d":count += 1index = i - countprint(h[index], end=" ")print("\nEVA:", end=" ")for i in range(len(list)):if list[i] == "d":count = 0for j in range(i):if list[j] == "i":count += 1index = i - countprint("D" + " " * (len(r[index])-1), end=" ")elif list[i] == "i":count = 0for j in range(i):if list[j] == "d":count += 1index = i - countprint("I" + " " * (len(h[index])-1), end=" ")elif list[i] == "s":count1 = 0for j in range(i):if list[j] == "i":count1 += 1index1 = i - count1count2 = 0for j in range(i):if list[j] == "d":count2 += 1index2 = i - count2if len(r[index1]) > len(h[index2]):print("S" + " " * (len(r[index1])-1), end=" ")else:print("S" + " " * (len(h[index2])-1), end=" ")else:count = 0for j in range(i):if list[j] == "i":count += 1index = i - countprint(" " * (len(r[index])), end=" ")print("\nWER: " + result)return resultdef wer(r, h):"""This is a function that calculate the word error rate in ASR.You can use it like this: wer("what is it".split(), "what is".split()) """# build the matrixd = editDistance(r, h)# find out the manipulation stepslist = getStepList(r, h, d)# print the result in aligned 
wayresult = float(d[len(r)][len(h)]) / len(r) * 100result = str("%.2f" % result) + "%"result=alignedPrint(list, r, h, result)return result# 計算總WER

def calculate_WER():with open("whisper_out.txt", "r") as f:text1_list = [i[11:].strip("\n") for i in f.readlines()]with open("A13.txt", "r") as f:text2_orgin_list = [i[11:].strip("\n") for i in f.readlines()]total_distance = 0total_length = 0WER=0symbols = ",@#¥%……&*()——+~!{}【】;‘:“”‘。?》《、"# calculate distance between each pair of textsfor i in range(len(text1_list)):match1 = re.search('[\u4e00-\u9fa5]', text1_list[i])if match1:index1 = match1.start()else:index1 = len(text1_list[i])match2 = re.search('[\u4e00-\u9fa5]', text2_orgin_list[i])if match2:index2 = match2.start()else:index2 = len( text2_orgin_list[i])result1= text1_list[i][index1:]result1= result1.translate(str.maketrans('', '', symbols))result2= text2_orgin_list[i][index2:]result2=result2.replace(" ", "")print(result1)print(result2)result=wer(result1,result2)WER+=float(result.strip('%')) / 100WER=WER/len(text1_list)print("總WER:", WER)print("總WER:", WER.__format__('0.2%'))

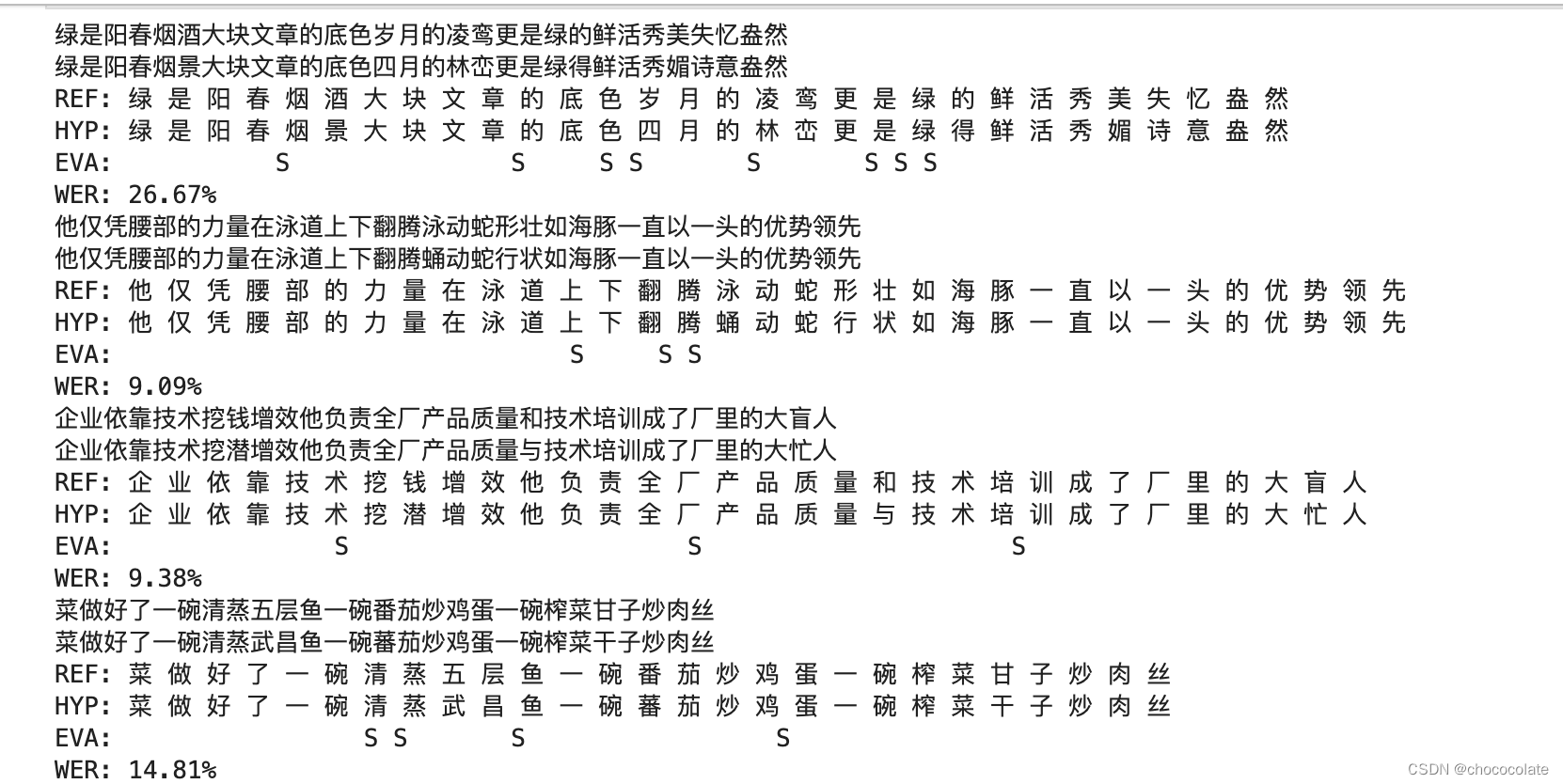

calculate_WER()評價結果形如:
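calculate_WER above averages the per-sentence rates, so a short sentence weighs as much as a long one. A common alternative is the corpus-level rate: total edits divided by total reference tokens. The sketch below shows that variant and, as a swapped-in technique rather than the python-wer approach, strips punctuation by Unicode category instead of a hand-written symbol list. All function names and the sample pairs are hypothetical.

```python
import unicodedata


def strip_spaces_and_punct(text):
    """Drop whitespace and every Unicode punctuation character (categories P*).

    Covers ASCII and full-width marks such as '，' and '。' without a hand-written
    list; symbols such as '¥' or '+' (categories S*) are kept.
    """
    return "".join(
        ch for ch in text
        if not ch.isspace() and not unicodedata.category(ch).startswith("P")
    )


def edit_distance(ref, hyp):
    """Levenshtein distance between two token sequences (a str is compared per character)."""
    prev = list(range(len(hyp) + 1))
    for i, r_tok in enumerate(ref, start=1):
        cur = [i]
        for j, h_tok in enumerate(hyp, start=1):
            cur.append(min(
                prev[j - 1] + (r_tok != h_tok),  # match (0) or substitution (1)
                prev[j] + 1,                     # deletion
                cur[j - 1] + 1,                  # insertion
            ))
        prev = cur
    return prev[-1]


def corpus_error_rate(pairs):
    """Sum of edits divided by sum of reference lengths over (reference, hypothesis) pairs."""
    total_edits = 0
    total_len = 0
    for ref, hyp in pairs:
        ref, hyp = strip_spaces_and_punct(ref), strip_spaces_and_punct(hyp)
        total_edits += edit_distance(ref, hyp)
        total_len += len(ref)
    return total_edits / total_len


# Hypothetical sample data, not results from this article
pairs = [("你好，世界。", "你好世界"), ("今天", "今天天。")]
print(f"{corpus_error_rate(pairs):.2%}")  # 1 edit over 6 reference characters
```

On these two pairs the corpus-level rate is 1/6 (16.67%), while the per-sentence mean used by calculate_WER would be (0% + 50%) / 2 = 25%.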

4. Comparison with PaddleSpeech

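| Dataset | Samples | paddle (separate zh/en models) | paddle (single model) | whisper small (single model) | whisper medium (single model) |
| --- | --- | --- | --- | --- | --- |
| zhthchs30 (Chinese, character error rate) | 250 | 11.61% | 45.53% | 24.11% | 13.95% |
| LibriSpeech (English, error rate) | 125 | 7.76% | 50.88% | 9.31% | 9.31% |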

5. Datasets used for testing

Open-source wav data that I preprocessed myself
