機器學習模型 非線性模型

You’ve divided your data into a training, development and test set, with the correct percentage of samples in each block, and you’ve also made sure that all of these blocks (specially development and test set) come from the same distribution.

您已將數據分為訓練,開發和測試集,每個模塊中都有正確百分比的樣本,并且還確保所有這些模塊(特別是開發和測試集)都來自同一分布。

You’ve done some exploratory data analysis, gathered insights from this data, and chosen the best features for the task at hand. You’ve also chosen an evaluation metric that is well suited for your problem. Using this metric you will be able to iterate and change the hyper-parameters and configuration of your models in the quest to obtain the best possible performance.

您已經進行了一些探索性數據分析 ,從這些數據中收集了見解,并為當前任務選擇了最佳功能 。 您還選擇了一個非常適合您的問題的評估指標。 使用此度量,您將能夠迭代和更改模型的超參數和配置,以獲取最佳性能。

After all this, you pre-process the data, prepare it, and finally train a model (lets say a Support Vector Machine). You wait patiently, and once it has finished training you dispose yourself to evaluate the results, which are the following:

完成所有這些之后,您需要對數據進行預處理,準備并最終訓練模型(例如說支持向量機 )。 您需要耐心等待,一旦培訓結束,您就可以評估以下結果:

Training set error: 8%

訓練集錯誤:8%

Development set error: 10%

開發設定錯誤:10%

How should we look at these results? What can we compare them against? How can we improve them? Is it possible to do it?

我們應該如何看待這些結果? 我們可以將它們與什么進行比較? 我們如何改善它們? 有可能做到嗎?

In this post we will answer all of these questions in an easy, accessible manner. This guide is not a debugging guide about setting breakpoints in your code or seeing how training is evolving. It is about knowing what to do when your model is trained and built, how to correctly asses its performance, and seeing how you could improve it.

在這篇文章中,我們將以一種易于訪問的方式回答所有這些問題。 本指南不是關于在代碼中設置斷點或了解培訓如何發展的調試指南。 它是關于知道在訓練和構建模型時該怎么做,如何正確評估其性能以及如何改進它。

Lets get to it!

讓我們開始吧!

可以與我們的模型進行比較嗎? (What to compare our model against?)

When we build our first model and get the initial round of results, it is always desirable to compare this model against some already existing metric, to quickly asses how well it is doing. For this, we have two main strategies: Baseline models and Human-level performance.

當我們建立第一個模型并獲得初始結果時,總是希望將這個模型與一些已經存在的指標進行比較,以快速評估它的表現。 為此,我們有兩個主要策略:基線模型和人員水平的績效。

基準模型 (Baseline models)

A baseline model is a very simple model that generally yields acceptable results in some kind of task. These results, given by the baseline, are the ones you should try to improve with your new shiny machine learning model.

基線模型是一個非常簡單的模型,通常可以在某種任務中產生可接受的結果。 由基線給出的這些結果是您應該嘗試使用新的閃亮的機器學習模型進行改進的結果。

In a few words, a baseline is a simple approach towards solving a problem that gives a good enough result, but that should be taken as a starting point for performance. If you build a model that does not surpass baseline model performance on some data, then you should probably rethink what you are doing.

簡而言之,基線是解決問題的簡單方法,可以給出足夠好的結果,但是應該將其作為性能的起點。 如果您建立的模型在某些數據上沒有超過基準模型性能,那么您可能應該重新考慮您在做什么。

Lets see an example to get a better idea of how this works: In Natural Language Processing (NLP) one of the most common problems is that of Sentiment Analysis: detecting the mood, feeling or sentiment of a certain sentence, which could be positive, neutral or negative. A very simple model that can do this, is Naive Bayes: it is very transparent, fast on the training, and generally gives acceptable results, however, these are far from being optimal.

讓我們看一個例子,以更好地了解其工作原理:在自然語言處理(NLP)中,最常見的問題之一就是情感分析:檢測特定句子的情緒,感覺或情感,這可能是肯定的,中性或負面。 樸素的貝葉斯 ( Naive Bayes)是一個可以做到這一點的非常簡單的模型:它非常透明,訓練Swift,并且通常可以提供可接受的結果,但是,這些結果遠非最佳。

Imagine, you gather some labelled data for sentiment analysis, pre-process the data, train a Naive Bayes model and get 65% accuracy. Because we are taking Naive Bayes as a Baseline model for this task, with every further model we build, we should aim to beat this 65% accuracy. If we train a Logistic Regression and get 55% performance, then we should probably re-think what we are doing.

想象一下,您收集了一些標記數據以進行情感分析 ,預處理數據,訓練Naive Bayes模型并獲得65%的準確性。 由于我們將樸素貝葉斯(Naive Bayes)作為該任務的基準模型,因此我們構建的每個其他模型都應力爭達到65%的精度。 如果我們訓練Logistic回歸并獲得55%的性能,那么我們可能應該重新考慮我們在做什么。

We might come to the conclusion that non-neural models are not fit for this task, train an initial Recurrent Neural Network, and get 70%. Now, as we have beaten the baseline, we can try to keep improving this RNN to get better and better performance.

我們可能得出這樣的結論:非神經模型不適合此任務,訓練初始的遞歸神經網絡 ,并獲得70%的收益 。 現在,我們已經突破了基準線,可以嘗試不斷改進此RNN,以獲得越來越好的性能。

員工績效 (Human Level Performance)

In the recent years, it has become usual for Machine Learning algorithms to not only produce excellent results in many fields, but to achieve even better results than human experts in those specific fields. Because of this, an useful metric to compare the performance of an algorithm on a certain task is Human Level Performance on that same task.

近年來,機器學習算法不僅在許多領域產生出色的結果,而且比那些特定領域的人類專家取得更好的結果已成為常態。 因此,用于比較算法在特定任務上的性能的有用度量是該任務上的人員水平性能 。

Lets see an example so that you can quickly grasp how this works. Imagine that a cardiovascular doctor can look at the health parameters of patients and diagnose with only three errors out of every one-hundred patients if the patient has a certain disease or not.

讓我們看一個示例,以便您可以快速了解其工作原理。 想象一下,如果有某種疾病,心血管醫生可以查看患者的健康參數,并且在每一百名患者中僅診斷出三個錯誤。

Now, we build a Machine learning model to look at these same parameters and diagnose the absence or presence of this previous disease. If our model makes 10 errors out of every 100 diagnoses, then there is a lot of room for improvement, (the expert makes 7 fewer errors for every 100 patients; he has a 7% lower error rate), however, if our model makes 1 failed prediction out 100, it is surpassing human level performance, and therefore doing quite well.

現在,我們建立一個機器學習模型來查看這些相同的參數,并診斷出先前疾病的存在與否。 如果我們的模型每100次診斷中就有10個錯誤,那么還有很大的改進余地(專家每100名患者減少7個錯誤;錯誤率降低7%),但是,如果我們的模型能夠1項失敗的預測中有100項超出了人類水平的表現,因此做得還不錯。

Human level performance: 3% error

人員水平績效:3%的錯誤

Model test data performance: 10% error

模型測試數據性能:10%的誤差

Alright, now that we have understood these two metrics, lets progress in the analysis of the results of our Machine Learning models taking Human-level performance as the metric to compare against.

好了,既然我們已經理解了這兩個指標,就可以在以人為水平的績效為指標進行比較的機器學習模型的結果分析中取得進展。

與人類水平的表現比較 (Comparing to Human level performance)

Understanding how humans perform in a task can guide us towards how to reduce bias and variance. If you don’t know what Bias or Variance are, you can learn about it on the following post:

了解人類在一項任務中的表現可以指導我們如何減少偏見和差異。 如果您不知道什么是“偏差”或“方差”,則可以在以下文章中了解它:

Despite humans being awesome at certain tasks, as we have said, Machines can become even better than them, and surpass human level performance. However, there is a certain threshold that neither humans or Machine learning models can surpass: Bayes Optimal error.

正如我們已經說過的,盡管人類在某些任務上表現出色,但是機器可以變得比它們更好,并且可以超越人類水平的性能。 但是,人類或機器學習模型都無法超越某個特定的閾值: 貝葉斯最優誤差。

Bayes optimal error is the best theoretical result that can be obtained for a certain task, and can not be improved by any kind of function, natural or artificial.

貝葉斯最佳誤差是可以針對某項任務獲得的最佳理論結果,不能通過任何自然或人為的功能來改善。

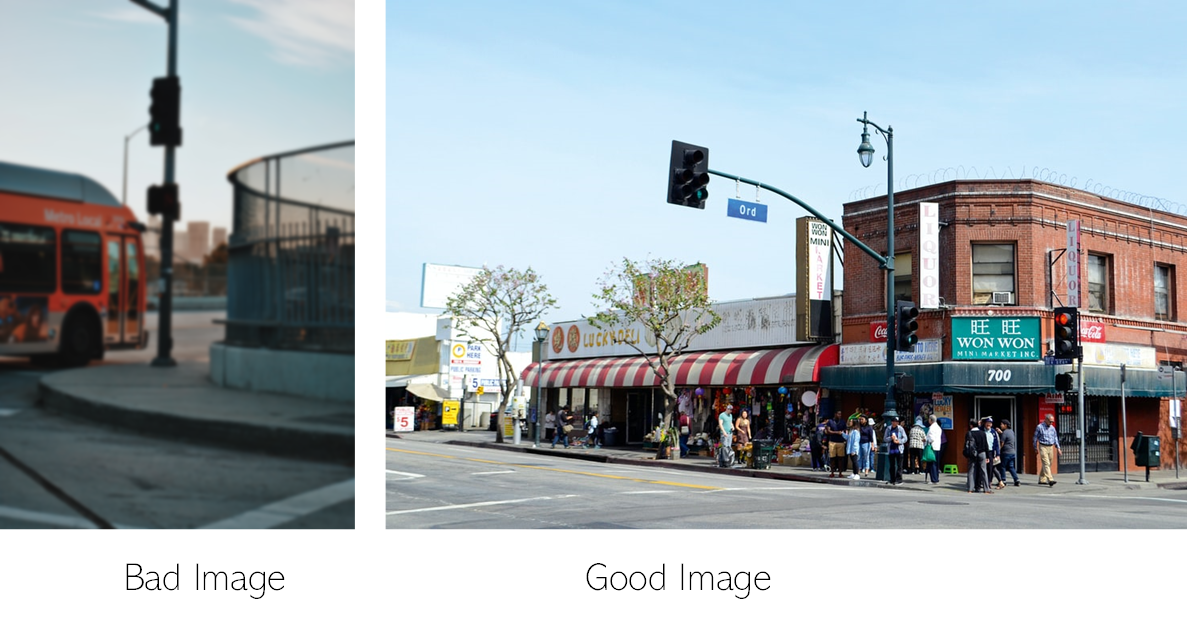

Imagine a data set composed of images of traffic lights where some images have an orientation such, and are so blurry that it is impossible, even for humans to get all the correct light colours from these images.

想象一下一個由交通信號燈圖像組成的數據集,其中某些圖像具有這樣的方向,并且非常模糊,以至于即使人類也無法從這些圖像中獲得所有正確的燈光顏色。

For this data set, Bayes optimal performance would be the maximum number of images that we can actually correctly classify, as some of them are impossible both for humans and machines.

對于此數據集,貝葉斯的最佳性能將是我們實際上可以正確分類的最大圖像數,因為其中某些圖像對于人和機器都是不可能的。

For many tasks human level performance is close to Bayes optimal error, so we tend to use human level performance as a proxy or approximation of Bayes optimal error.

對于許多任務,人類水平的性能接近貝葉斯最佳誤差,因此我們傾向于使用人類水平的性能作為貝葉斯最佳誤差的近似值或近似值 。

Lets see a more concrete example, with numbers, to get a complete grasp of the relationship between Human level performance, Bayes Optimal error, and the results of our models.

讓我們看一個帶有數字的更具體的例子,以全面了解人的水平表現,貝葉斯最佳誤差與模型結果之間的關系。

了解人員水平的表現和貝葉斯最佳誤差 (Understanding Human level performance and Bayes Optimal error)

Imagine a medical image diagnosis task, where a typical doctor achieves a 1% error. Because of this, if we take Human level performance as a proxy for Bayes, we can say that Bayes error is lower or equal to 1%.

想象一下醫學圖像診斷任務,典型的醫生在其中完成了1%的錯誤。 因此,如果我們將人的性能作為貝葉斯的代理,那么我們可以說貝葉斯誤差低于或等于1%。

It is important to note that Human level performance has to be defined depending on the context in which the Machine Learning system is going to be deployed.

重要的是要注意,必須根據將要部署機器學習系統的上下文來定義人員級別的性能。

Imagine now that we build a Machine learning model and get the following results on this diagnosis task:

現在想象一下,我們建立了機器學習模型,并在此診斷任務中獲得了以下結果:

Training set error: 7%

訓練集誤差:7%

Test set error: 8%

測試設定錯誤:8%

Now, if our Human level performance (proxy for Bayes error) is 1%, what do you think we should focus on improving? The error difference between Bayes Optimal error (1%) and our training set error (7%) or the error difference between training and test set error? We will call the first of these two differences Avoidable bias (between human and training set error) and the second one Variance (between train and test errors).

現在,如果我們的人員水平表現(貝葉斯誤差的代理人)為1%, 您認為我們應該著重改進什么? 貝葉斯最優誤差(1%)與訓練集誤差(7%)之間的誤差差還是訓練與測試集誤差之間的誤差差? 我們將這兩個差異中的第一個稱為可避免的偏差 (在人為和訓練設置誤差之間),第二個差異 (在訓練和測試誤差之間)。

Once we know where to optimise, how should we do it? Keep reading to find out!

一旦我們知道在哪里進行優化,我們應該如何做? 繼續閱讀以找出答案!

在哪里以及如何改善我們的機器學習模型 (Where and how to improve our Machine Learning models)

Depending on these sizes of these two error differences (avoidable bias and variance) there are different strategies which we ought to apply in order to reduce these errors and get the best possible results out of our models.

根據這兩個誤差差異的大小(可避免的偏差和方差),我們應采用不同的策略以減少這些誤差并從模型中獲得最佳結果。

In the previous example, the difference between human level performance and training set error (6%) is a lot bigger than the difference between training and test set error (1%), so we will focus on reducing the avoidable bias. If training set error was 2% however, then the bias would be 1%, and the variance would be 6% and we would focus on reducing variance.

在前面的示例中,人的水平性能和訓練設置誤差之間的差異(6%)比訓練和測試設置誤差之間的差異(1%)大得多,因此我們將重點放在減少可避免的偏差上。 但是,如果訓練集誤差為2%,則偏差將為1%,方差將為6%,我們將集中精力減少方差。

If bias and variance were very similar, and there was room for improving both, then we would have to see which is least expensive or easier to reduce.

如果偏差和方差非常相似,并且都有改進的余地,那么我們將不得不看到哪一種最便宜或更容易減少。

Lastly, if human level performance, training, and test error where all similar and acceptable, we would leave our awesome model just as it is.

最后,如果人員水平的性能,培訓和測試錯誤都相似且可以接受,我們將保留我們的出色模型。

How do we reduce each of these gaps? Lets take a look first and how to reduce avoidable bias.

我們如何縮小這些差距? 讓我們先來看一下如何減少可避免的偏差。

改善模型性能:如何減少可避免的偏差。 (Improving model performance: how to reduce Avoidable Bias.)

In our search for the best possible Machine learning model, we must look to fit the training set really well without producing over-fitting.

在尋找最佳的機器學習模型時,我們必須看起來非常適合訓練集,而不會產生過度擬合。

We will look at how to quantify and reduce this over-fitting in just a bit, but the first thing we have to try to achieve is an acceptable performance on our training set, making the gap between human level performance or Bayes error, and training set error as small as possible.

我們將稍稍研究一下如何量化和減少這種過度擬合,但是我們要做的第一件事就是在我們的訓練集上獲得可接受的性能,從而在人為水平的性能或貝葉斯誤差與培訓之間形成差距將誤差設置得盡可能小。

For this there are various strategies we can adopt:

為此,我們可以采用多種策略:

If we trained a classic Machine learning model, like a Decision Tree, a Linear or Logistic Regressor, we could try to train something more complex like an SVM, or a Boosting model.

如果我們訓練了經典的機器學習模型 ,例如決策樹,線性或邏輯回歸,則可以嘗試訓練更復雜的東西,例如SVM或Boosting模型 。

If after this we are still getting poor results, maybe our task needs a more complex or specific architecture, like a Recurrent or Convolutional Neural Network.

如果在此之后我們仍然獲得較差的結果,則也許我們的任務需要更復雜或更具體的體系結構 ,例如遞歸或卷積神經網絡。

- When Artificial Neural Networks still don’t cut it enough, we can train these networks longer, make them deeper or change the optimisation algorithms. 當人工神經網絡仍然不能滿足需要時,我們可以訓練這些網絡更長的時間,使其更深,或者改變優化算法。

After all this, if there is still a lot of room for improvement, we could try to get more labelled data by humans, to see if there is some sort of issue with our initial data set.

畢竟,如果仍然有很多改進的余地,我們可以嘗試由人類獲取更多的標簽數據 ,以查看我們的初始數據集是否存在某種問題。

Lastly, we can carry out manual error analysis: seeing specific examples where our algorithm is performing badly. Going back to an image classification example, maybe through this analysis we can see that small dogs are getting classified as cats, and we can fix this by getting more labelled images of small dogs. In our traffic light example we could spot the issue with blurry images and set a pre-processing step for the data set to discard any images that don’t meet a certain quality threshold.

最后,我們可以進行手動錯誤分析 :查看我們的算法表現不佳的特定示例。 回到圖像分類的例子,也許通過分析,我們可以看到小狗被分類為貓,并且我們可以通過獲取更多帶標簽的小狗圖像來解決此問題。 在我們的交通信號燈示例中,我們可以發現圖像模糊的問題,并為數據集設置預處理步驟,以丟棄任何不符合特定質量閾值的圖像。

By using these tactics we can make avoidable bias become increasingly low. Now that we know how to do this, lets take a look at how to reduce Variance.

通過使用這些策略,我們可以使可避免的偏差變得越來越小。 現在我們知道如何做到這一點,讓我們看一下如何減少方差。

改善模型性能:如何減少差異。 (Improving model performance: how to reduce Variance.)

When our model has high variance, we say that it is over-fitting: it adapts too well to the training data, but generalises badly to data it has not seen before. To reduce this variance, there are various strategies that we can adopt, which differ mostly from the ones we just saw to reduce bias. These strategies are:

當我們的模型具有很高的方差時,我們說它是過度擬合的:它對訓練數據的適應性太強,但是對以前從未見過的數據的歸納性很差。 為了減少這種差異,我們可以采用多種策略,這些策略與我們剛剛看到的減少偏差的策略大部分不同。 這些策略是:

Get more labelled data: if our model ins’t generalising well in some cases, maybe it is because it has never seen those kind of data instances in the training, and therefore getting more training data could be of great use for model improvement.

獲取更多帶標簽的數據:如果我們的模型在某些情況下不能很好地推廣,也許是因為它在訓練中從未見過此類數據實例,因此獲取更多訓練數據對于模型改進很有用。

Trying data augmentation: if getting more data is not possible, then we could try data augmentation techniques. With images this is a pretty standard procedure, done by rotating, cropping, RGB shifting and other similar strategies.

嘗試數據增強:如果無法獲得更多數據,則可以嘗試數據增強技術。 對于圖像,這是一個非常標準的過程,通過旋轉,裁剪,RGB移位和其他類似策略來完成。

Use regularisation: there are techniques that are specifically conceived for reducing over-fitting, like L1 and L2 regularisation, or Dropout in the case of Artificial Neural networks.

使用正則化:存在專門為減少過度擬合而設計的技術,例如L1和L2正則化,或者在人工神經網絡的情況下為Dropout。

After this, we would have also managed to reduce our variance! Awesome, now we have optimised our Machine Learning model to its full potential.

在此之后,我們也將設法減少差異! 太棒了,現在我們已經優化了機器學習模型的全部潛能。

結論和其他資源 (Conclusion and additional Resources)

That is it! As always, I hope you enjoyed the post, and that I managed to help you understand how to debug and improve the performance of your Machine learning models.

這就對了! 與往常一樣,希望您喜歡這篇文章 ,并且我設法幫助您了解如何調試和改善機器學習模型的性能。

If you liked this post then feel free to follow me on Twitter at @jaimezorno. Also, you can take a look at my other posts on Data Science and Machine Learning here. Have a good read!

如果您喜歡這篇文章,請隨時 通過@jaimezorno 在 Twitter上 關注我 。 另外,您可以在 此處 查看我在數據科學和機器學習方面的其他帖子 。 祝您閱讀愉快!

If you want to learn more about Machine Learning and Artificial Intelligence follow me on Medium, and stay tuned for my next posts! Also, you can check out this repository for more resources on Machine Learning and AI!

如果您想了解有關機器學習和人工智能的更多信息,請 在Medium上關注我 ,并繼續關注我的下一篇文章! 另外,您可以在 此存儲庫中 查看有關機器學習和AI的更多資源!

翻譯自: https://towardsdatascience.com/the-ultimate-guide-to-debugging-your-machine-learning-models-103dc0f9e421

機器學習模型 非線性模型

本文來自互聯網用戶投稿,該文觀點僅代表作者本人,不代表本站立場。本站僅提供信息存儲空間服務,不擁有所有權,不承擔相關法律責任。 如若轉載,請注明出處:http://www.pswp.cn/news/390553.shtml 繁體地址,請注明出處:http://hk.pswp.cn/news/390553.shtml 英文地址,請注明出處:http://en.pswp.cn/news/390553.shtml

如若內容造成侵權/違法違規/事實不符,請聯系多彩編程網進行投訴反饋email:809451989@qq.com,一經查實,立即刪除!

通過示例進行解釋)

...)