Code:

```python
import numpy as np
import pandas as pd
from matplotlib import pyplot as plt
import torch
from torch import nn
import torch.nn.functional as F
from torch.utils.data import Dataset, DataLoader, TensorDataset
```

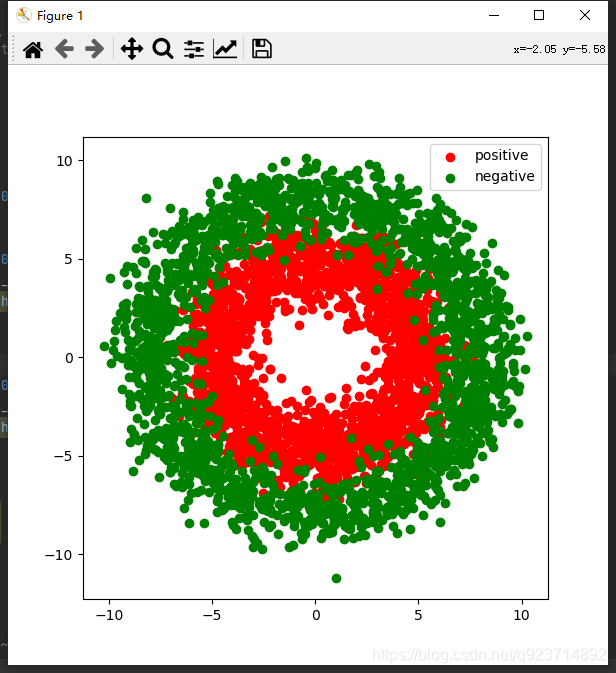

1. Prepare the data

```python
# Number of positive and negative samples
n_positive, n_negative = 2000, 2000

# Positive samples: small ring (radius around 5)
r_p = 5.0 + torch.normal(0.0, 1.0, size=[n_positive, 1])
theta_p = 2 * np.pi * torch.rand([n_positive, 1])
Xp = torch.cat([r_p * torch.cos(theta_p), r_p * torch.sin(theta_p)], dim=1)
Yp = torch.ones_like(r_p)

# Negative samples: large ring (radius around 8)
r_n = 8.0 + torch.normal(0.0, 1.0, size=[n_negative, 1])
theta_n = 2 * np.pi * torch.rand([n_negative, 1])
Xn = torch.cat([r_n * torch.cos(theta_n), r_n * torch.sin(theta_n)], dim=1)
Yn = torch.zeros_like(r_n)

# Combine all samples
X = torch.cat([Xp, Xn], dim=0)
Y = torch.cat([Yp, Yn], dim=0)

# Visualize
plt.figure(figsize=(6, 6))
plt.scatter(Xp[:, 0], Xp[:, 1], c="r")
plt.scatter(Xn[:, 0], Xn[:, 1], c="g")
plt.legend(["positive", "negative"])
plt.show()
```
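Because the two radius distributions overlap, no classifier can separate the rings perfectly, so the accuracy reported during training should not be expected to reach 1.0. The sketch below estimates the ceiling, assuming (as in the data code) radii drawn from N(5, 1) and N(8, 1), equal class sizes, and angles that carry no class information. Under those assumptions the best possible rule simply thresholds the radius at the midpoint 6.5. `normal_cdf` is a helper introduced here, not part of the original code.

```python
import math


def normal_cdf(z):
    """Standard normal CDF computed from the error function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


# Assumed from the data-generation code: radius ~ N(5, 1) for positives,
# ~ N(8, 1) for negatives, 2000 samples each, angle independent of class.
mu_pos, mu_neg, sigma = 5.0, 8.0, 1.0

# With equal variances and equal priors, the optimal boundary is the midpoint.
threshold = (mu_pos + mu_neg) / 2

# Fraction of positives below the threshold; by symmetry it equals the
# fraction of negatives above it, so it is also the overall accuracy.
best_acc = normal_cdf((threshold - mu_pos) / sigma)

print(f"threshold r = {threshold}, best accuracy = {best_acc:.4f}")  # about 0.9332
```

A final training metric somewhere in the low 0.9 range is therefore close to what this data allows, rather than a sign that the network is underfitting.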

```python
# Build the input data pipeline
ds = TensorDataset(X, Y)
dl = DataLoader(ds, batch_size=10, shuffle=True)
```
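To make the batching concrete: with 4000 samples and batch_size=10, one pass over dl yields 400 batches, and shuffle=True draws a new order every time the loader is iterated. The following is a simplified plain-Python stand-in for that behaviour (keeping the last partial batch, as DataLoader's default drop_last=False does), not DataLoader's real implementation; `minibatch_indices` is a hypothetical name.

```python
import random


def minibatch_indices(n_samples, batch_size, shuffle=True, seed=None):
    """Yield one list of sample indices per batch.

    Hypothetical sketch: shuffle the index order once per pass, then slice it
    into consecutive chunks of batch_size (the last chunk may be smaller).
    """
    order = list(range(n_samples))
    if shuffle:
        random.Random(seed).shuffle(order)
    for start in range(0, n_samples, batch_size):
        yield order[start:start + batch_size]


batches = list(minibatch_indices(4000, 10, seed=0))
print(len(batches))     # 400 batches per epoch
print(len(batches[0]))  # 10 samples per batch
```

With train_model(model, epochs=300), that amounts to 300 × 400 = 120,000 calls to train_step.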

2. Define the model

```python
class DNNModel(nn.Module):
    def __init__(self):
        super(DNNModel, self).__init__()
        self.fc1 = nn.Linear(2, 4)
        self.fc2 = nn.Linear(4, 8)
        self.fc3 = nn.Linear(8, 1)
        # Build the optimizer once so Adam keeps its moment estimates across steps
        self._optimizer = torch.optim.Adam(self.parameters(), lr=0.001)

    def forward(self, x):
        x = F.relu(self.fc1(x))
        x = F.relu(self.fc2(x))
        y = torch.sigmoid(self.fc3(x))
        return y

    def loss_func(self, y_pred, y_true):
        return nn.BCELoss()(y_pred, y_true)

    def metric_func(self, y_pred, y_true):
        # Threshold the probabilities at 0.5, then compute accuracy
        y_pred = torch.where(y_pred > 0.5,
                             torch.ones_like(y_pred, dtype=torch.float32),
                             torch.zeros_like(y_pred, dtype=torch.float32))
        acc = torch.mean(1 - torch.abs(y_true - y_pred))
        return acc

    @property
    def optimizer(self):
        # Return the cached optimizer; constructing a new Adam here on every
        # access would discard its state after each step
        return self._optimizer


model = DNNModel()

# Test the model structure
(features, labels) = next(iter(dl))
predictions = model(features)
loss = model.loss_func(predictions, labels)
metric = model.metric_func(predictions, labels)
print("init loss:", loss.item())
print("init metric:", metric.item())
```
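The loss and metric used above can be written out by hand, which helps when reading the "init loss" and "init metric" values. The sketch below is plain Python, not PyTorch: `bce_loss` follows the mean binary cross-entropy that nn.BCELoss computes with its default reduction='mean' (including PyTorch's clamping of each log term at -100), and `accuracy` mirrors the idea of metric_func. Both names are illustrative.

```python
import math


def clamped_log(x):
    """Natural log clamped at -100, mirroring nn.BCELoss; avoids log(0)."""
    return max(math.log(x), -100.0) if x > 0 else -100.0


def bce_loss(probs, targets):
    """Mean binary cross-entropy: -mean(y*log(p) + (1-y)*log(1-p))."""
    total = 0.0
    for p, y in zip(probs, targets):
        total += -(y * clamped_log(p) + (1 - y) * clamped_log(1 - p))
    return total / len(probs)


def accuracy(probs, targets, threshold=0.5):
    """Same idea as metric_func: threshold at 0.5, then average 1 - |y - y_hat|."""
    preds = [1.0 if p > threshold else 0.0 for p in probs]
    return sum(1 - abs(y - yh) for y, yh in zip(targets, preds)) / len(preds)


print(bce_loss([0.5] * 4, [1, 0, 1, 0]))    # 0.6931... (= ln 2)
print(bce_loss([0.9, 0.2], [1, 0]))          # about 0.164
print(accuracy([0.9, 0.2, 0.6], [1, 0, 0]))  # 2 of 3 correct
```

A model whose sigmoid outputs all sit at 0.5 has a loss of exactly ln 2 ≈ 0.693, so for a freshly initialized network with outputs near 0.5 an initial loss in that neighbourhood is expected, and an initial metric near 0.5 is what chance level looks like on these balanced classes.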

3,訓練模型

"""

def train_step(model, features, labels):# 正向傳播求損失predictions = model(features)loss = model.loss_func(predictions,labels)metric = model.metric_func(predictions,labels)# 反向傳播求梯度loss.backward()# 更新模型參數model.optimizer.step()model.optimizer.zero_grad()return loss.item(),metric.item()# 測試train_step效果

features,labels = next(iter(dl)) #非for循環就用next

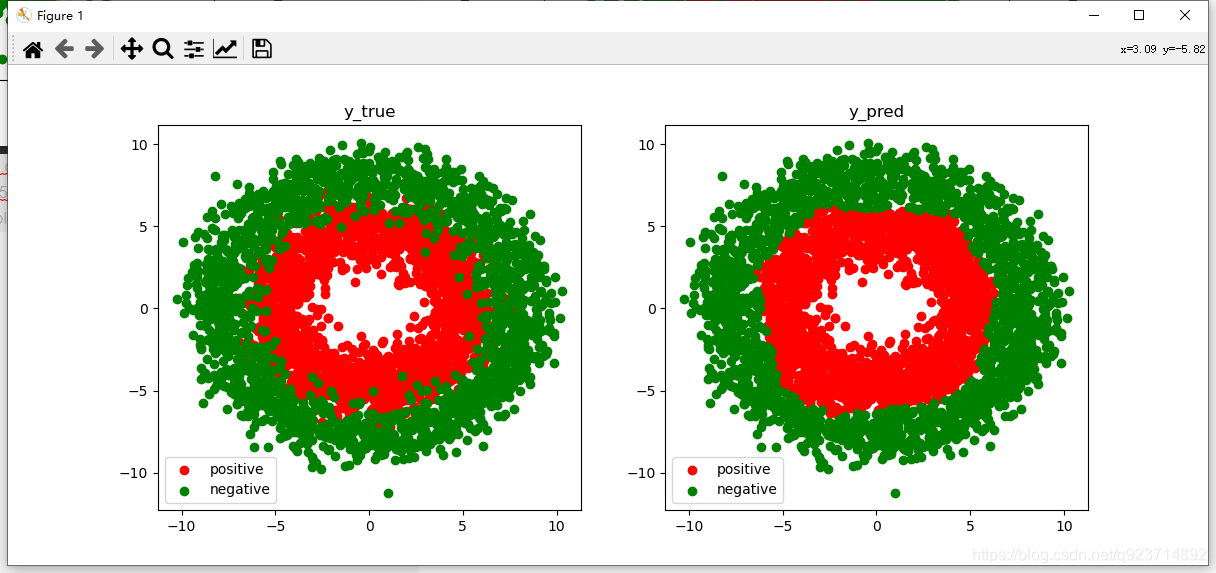

train_step(model,features,labels)def train_model(model,epochs):for epoch in range(1,epochs+1):loss_list,metric_list = [],[]for features, labels in dl:lossi,metrici = train_step(model,features,labels)loss_list.append(lossi)metric_list.append(metrici)loss = np.mean(loss_list)metric = np.mean(metric_list)if epoch%100==0:print("epoch =",epoch,"loss = ",loss,"metric = ",metric)train_model(model,epochs = 300)# 結果可視化

fig, (ax1,ax2) = plt.subplots(nrows=1,ncols=2,figsize = (12,5))

ax1.scatter(Xp[:,0],Xp[:,1], c="r")

ax1.scatter(Xn[:,0],Xn[:,1],c = "g")

ax1.legend(["positive","negative"])

ax1.set_title("y_true")Xp_pred = X[torch.squeeze(model.forward(X)>=0.5)]

Xn_pred = X[torch.squeeze(model.forward(X)<0.5)]ax2.scatter(Xp_pred[:,0],Xp_pred[:,1],c = "r")

ax2.scatter(Xn_pred[:,0],Xn_pred[:,1],c = "g")

ax2.legend(["positive","negative"])

ax2.set_title("y_pred")plt.show()
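The three phases in train_step (forward pass and loss, backward pass, parameter update with gradients cleared before the next step) can be traced without autograd. The sketch below swaps in a much simpler model than the DNN: logistic regression on the radius alone, with hand-derived gradients and full-batch gradient descent instead of Adam. `make_radii`, `train_logistic`, the standardization, and lr=0.5 are illustrative assumptions, not the article's method. It also shows why the task is easy: the label depends essentially on the distance from the origin.

```python
import math
import random


def make_radii(n_per_class, seed=0):
    """Radius-only version of the ring data as (radius, label) pairs.
    Inner ring N(5, 1) -> label 1.0, outer ring N(8, 1) -> label 0.0."""
    rng = random.Random(seed)
    data = [(5.0 + rng.gauss(0.0, 1.0), 1.0) for _ in range(n_per_class)]
    data += [(8.0 + rng.gauss(0.0, 1.0), 0.0) for _ in range(n_per_class)]
    return data


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic(data, epochs=200, lr=0.5):
    """Full-batch gradient descent on mean BCE for p = sigmoid(w * x + b),
    where x is the standardized radius. Returns (w, b, mean, std)."""
    radii = [r for r, _ in data]
    mean = sum(radii) / len(radii)
    std = math.sqrt(sum((r - mean) ** 2 for r in radii) / len(radii))
    w, b = 0.0, 0.0
    for _ in range(epochs):
        grad_w, grad_b = 0.0, 0.0       # like zero_grad(): start from zero
        for r, y in data:
            x = (r - mean) / std
            p = sigmoid(w * x + b)      # forward pass
            grad_w += (p - y) * x       # backward pass: dBCE/dz = p - y
            grad_b += p - y
        n = len(data)
        w -= lr * grad_w / n            # update step (plain gradient descent)
        b -= lr * grad_b / n
    return w, b, mean, std


def accuracy(data, w, b, mean, std):
    correct = 0
    for r, y in data:
        pred = 1.0 if sigmoid(w * (r - mean) / std + b) >= 0.5 else 0.0
        correct += pred == y
    return correct / len(data)


data = make_radii(1000)
w, b, mean, std = train_logistic(data)
print("accuracy:", accuracy(data, w, b, mean, std))
# Radius where p = 0.5, i.e. where w * x + b = 0
print("learned radius threshold:", mean - b / w * std)
```

The learned threshold should land near a radius of 6.5, which is the boundary the DNN has to approximate in two dimensions as a circle.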

Results:

Data:

Classification results:

Reflections:

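The DNN model in this article defines the loss (loss_func), the metric (accuracy, metric_func) and the optimizer inside the network class itself, and this has some consequences. First, these functions must be called through the model instance with a dot, for example: loss = model.loss_func(predictions,labels)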

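The most important point, however, concerns def optimizer(self): the optimizer method must be decorated with @property. Without it, model.optimizer is a bound method rather than an optimizer object, and model.optimizer.step() fails with AttributeError: 'method' object has no attribute 'step'.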

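What @property does: it turns a method into a read-only attribute with the same name. Reading model.optimizer runs the method and returns its result, so model.optimizer.step() works, and because no setter is defined, assigning to model.optimizer raises an error, which protects the attribute from being overwritten. It is typically paired with a privately stored attribute that the getter returns. One caveat: the getter runs on every access, so if it constructs a new torch.optim.Adam each time, Adam's running moment estimates are discarded after every update; building the optimizer once and returning that cached instance avoids this.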
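A getter like optimizer runs on every attribute access, so if it contains return torch.optim.Adam(...) directly, every update starts from fresh Adam state. The numeric effect can be checked with the sketch below: a single-parameter Adam written from the standard update rule, with defaults matching torch.optim.Adam (betas=(0.9, 0.999), eps=1e-8). `TinyAdam` is a hypothetical class, not PyTorch code. On the first step, bias correction reduces the update to lr * g / (|g| + eps), which is almost exactly lr in the direction of the gradient whatever its size. An optimizer rebuilt on every access therefore always takes this first step, behaving like sign-based gradient descent with a fixed step of lr.

```python
import math


class TinyAdam:
    """Hypothetical one-parameter Adam following the standard update rule.
    Defaults mirror torch.optim.Adam: betas=(0.9, 0.999), eps=1e-8."""

    def __init__(self, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-8):
        self.lr, self.beta1, self.beta2, self.eps = lr, beta1, beta2, eps
        self.m = 0.0  # running mean of gradients (first moment)
        self.v = 0.0  # running mean of squared gradients (second moment)
        self.t = 0    # number of steps taken, used for bias correction

    def update(self, grad):
        """Return the amount to subtract from the parameter for this gradient."""
        self.t += 1
        self.m = self.beta1 * self.m + (1 - self.beta1) * grad
        self.v = self.beta2 * self.v + (1 - self.beta2) * grad * grad
        m_hat = self.m / (1 - self.beta1 ** self.t)
        v_hat = self.v / (1 - self.beta2 ** self.t)
        return self.lr * m_hat / (math.sqrt(v_hat) + self.eps)


# A freshly created Adam moves by about lr, regardless of the gradient's size
for g in (5.0, 0.01, -300.0):
    print(g, TinyAdam().update(g))  # roughly 0.001, 0.001, -0.001

# A cached Adam accumulates state across calls
cached = TinyAdam()
for g in (1.0, 0.5, 0.25):
    cached.update(g)
print("steps seen by cached optimizer:", cached.t)  # 3
```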
