樸素貝葉斯分類器 文本分類

背景 (Background)

Following a disaster, typically you will get millions and millions of communications, either direct or via social media, right at the time when disaster response organizations have the least capacity to filter and pull out the messages which are the most important. And often it really is only one in every thousand messages that might be relevant to disaster response professionals.

災難發生后,通常在災難響應組織過濾和提取最重要消息的能力最差的時候,您將直接或通過社交媒體獲得數以百萬計的通信。 通常,實際上只有十分之幾的消息可能與災難響應專業人員相關。

So the way that disasters are typically responded to is that different organizations will take care of different parts of the problem. One organization will care about water, another one will care about blocked roads, and another will care about medical supplies.

因此,災難通常的應對方式是不同的組織將處理問題的不同部分。 一個組織將關心水,另一個組織將關心道路阻塞,另一個組織將關心醫療用品。

— Robert Munro, former CTO of Figure Eight (acquired by Appen)

-圖8的前CTO Robert Munro(被Appen收購)

Robert Munro summed up the problem quite well. With so many messages being received during disasters, there needs to be a way of directing these messages to the appropriate organization so that they can respond to the problem accordingly.

Robert Munro很好地總結了這個問題。 災難期間收到了如此多的消息,因此需要一種將這些消息定向到適當組織的方法,以便它們可以相應地對問題做出響應。

Using data from Figure Eight (now Appen), we will be building a web application to classify disaster messages so that an emergency professional would know which organization to send the message to.

使用圖八 (現在為Appen)中的數據,我們將構建一個Web應用程序以對災難消息進行分類,以便緊急事件專業人員知道將消息發送到哪個組織。

This walkthrough assumes you have some knowledge of natural language processing and machine learning. We will go over the general process but you can see the full code at my Github.

本演練假定您具有一些自然語言處理和機器學習的知識。 我們將介紹整個過程,但是您可以在我的Github上查看完整的代碼。

數據 (The Data)

The data contains 26,248 labeled messages that were sent during past disasters around the world. Each message is labeled as 1 or more of the following 36 categories:

數據包含在世界各地過去的災難中發送的26,248條帶標簽的郵件。 每條消息被標記為以下36個類別中的1個或多個:

'related', 'request', 'offer', 'aid_related', 'medical_help', 'medical_products', 'search_and_rescue', 'security', 'military', 'child_alone', 'water', 'food', 'shelter', 'clothing', 'money', 'missing_people', 'refugees', 'death', 'other_aid', 'infrastructure_related', 'transport', 'buildings', 'electricity', 'tools', 'hospitals', 'shops', 'aid_centers', 'other_infrastructure', 'weather_related', 'floods', 'storm', 'fire', 'earthquake', 'cold', 'other_weather', 'direct_report'

“相關”,“請求”,“提供”,“援助相關”,“醫療幫助”,“醫療產品”,“搜索和救援”,“安全”,“軍事”,“獨身”,“水”,“食品”,“庇護所” ”,“衣服”,“錢”,“失民”,“難民”,“死亡”,“其他援助”,“基礎設施相關”,“運輸”,“建筑物”,“電力”,“工具”,“醫院”, “商店”,“援助中心”,“其他基礎設施”,“與天氣相關”,“洪水”,“風暴”,“火災”,“地震”,“寒冷”,“其他天氣”,“直接報告”

Note: Messages don’t necessarily fall into only 1 category. A message can be labeled as multiple categories or even none.

注意:郵件不一定只屬于1類。 一條消息可以標記為多個類別,甚至可以都不標記。

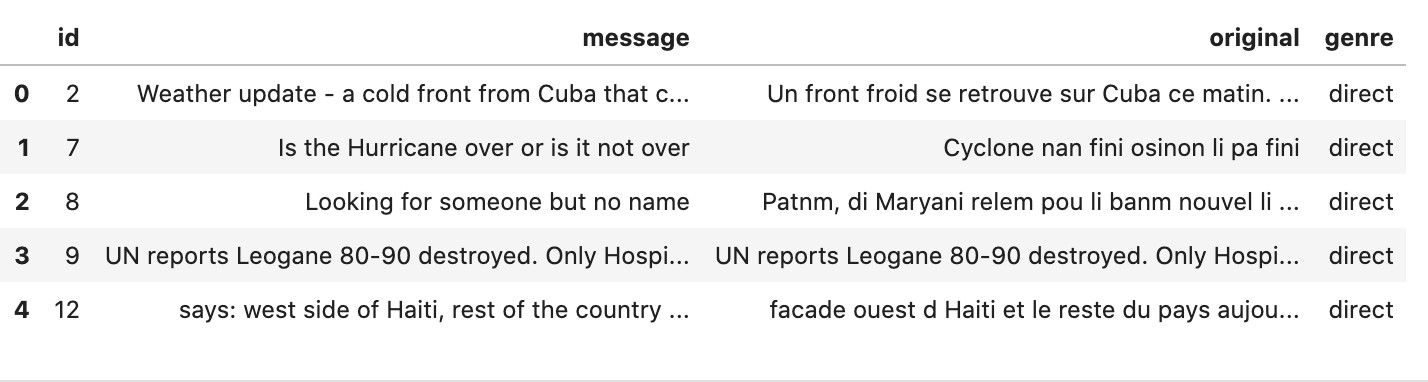

As seen in figure 1, the original data was split into 2 CSV files:

如圖1所示 ,原始數據分為2個CSV文件:

- Messages dataset — the messages and the method in which they were receive 郵件數據集-郵件及其接收方法

- Categories dataset — The categories the messages were labeled as 類別數據集-郵件被標記為的類別

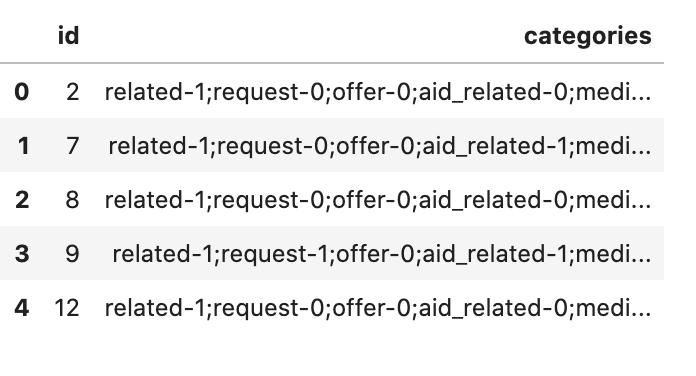

And the categories dataset (figure 1B) was formatted in a way that is unusable. All 36 categories and their corresponding values (0 for no or 1 for yes) are stuffed into a single column. To be able to use this dataset as labels for our supervised learning model, we’ll need to transform that single column into 36 separate columns (1 for each category) with binary numeric values, shown in figure 2 below.

而且類別數據集( 圖1B)的格式無法使用。 所有36個類別及其對應的值(0表示“否”或1表示“是”)被填充到一列中。 為了能夠將此數據集用作監督學習模型的標簽,我們需要將該單列轉換為具有二進制數值的36個單獨的列(每個類別1個),如下圖2所示。

None of the messages in the dataset were labeled as child_alone so this category will be removed since it is not providing any information.

數據集中的所有消息均未標記為child_alone因此將刪除該類別,因為它未提供任何信息。

To prepare the data, I wrote an ETL pipeline with the following steps:

為了準備數據,我編寫了一個ETL管道,其步驟如下:

- Import the data from the 2 CSV files 從2個CSV文件導入數據

Transform the categories dataset from 1 string variable (figure 1B) into 36 numeric variables (figure 2)

將類別數據集從1個字符串變量( 圖1B )轉換為36個數字變量( 圖2 )

Drop

child_alonefrom the categories dataset, leaving 35 categories left to classify從類別數據集中刪除

child_alone,剩下35個類別以進行分類- Merge the 2 datasets into 1 將2個數據集合并為1個

- Load the merged dataset into a SQLite database 將合并的數據集加載到SQLite數據庫中

分類器 (The Classifier)

With the data processed, we can use it to train a classification model. But wait! Machine learning models don’t know how to interpret text data directly, so we need to somehow convert the text into numeric features first. No worries though. This feature extraction can be done in conjunction with the classification model within a single pipeline.

處理完數據后,我們可以使用它來訓練分類模型。 可是等等! 機器學習模型不知道如何直接解釋文本數據,因此我們需要首先以某種方式將文本轉換為數字特征。 不用擔心。 可以與單個管道中的分類模型一起完成此特征提取。

The machine learning pipeline (code below) was built as follows:

機器學習管道(以下代碼)的構建如下:

1. Tf-idf vectorizer — tokenizes an entire corpus of text data to build a vocabulary and converts individual documents into a numeric vector based on the vocabulary

1. Tf-idf矢量化器 -標記整個文本數據集以構建詞匯表,并根據該詞匯表將單個文檔轉換為數字矢量

Tokenizer steps: lowercase all characters > remove all punctuation > tokenize text into individual words > strip any white space surrounding words > remove stopwords (words that add no meaning to a sentence) > stem remaining words

標記生成器步驟:小寫字母>除去所有標點>標記化文本為單個單詞>剝去任何空白周圍的單詞>移除停止詞(即沒有意義添加到句子話)>干剩余字

Vectorizer steps: convert a text document into a term frequency vector (word counts) > normalize word counts by multiplying the inverse document frequency

矢量化器步驟:將文本文檔轉換為術語頻率矢量(字數)>通過乘以逆文檔頻率將字數歸一化

2. Multi-output classifier using a logistic regression model — predicts 35 binary labels (0 or 1 for each of the 35 categories)

2. 使用邏輯回歸模型的多輸出分類器 -預測35個二元標簽(35個類別中的每個類別為0或1)

After importing the data from the database we just created, we split the data into a training and test set, and use the training set to train the classifier pipeline outlined above. A grid search was done to optimize the parameters for both steps in the pipeline and the final classifier was evaluated on the test set with the following results:

從剛剛創建的數據庫中導入數據后,我們將數據分為訓練和測試集,并使用訓練集來訓練上面概述的分類器管道。 進行了網格搜索以優化管道中兩個步驟的參數,并在測試集中對最終分類器進行了評估,結果如下:

Average accuracy: 0.9483

平均準確度 :0.9483

Average precision: 0.9397

平均精度 :0.9397

Average recall: 0.9483

平均召回率 :0.9483

Average F-score: 0.9380

平均F值 :0.9380

As this was a multi-output classification problem, these metrics were averaged across all 35 outputs.

由于這是一個多輸出分類問題,因此對所有35個輸出進行平均。

I also tried Naive Bayes and random forest models, but they didn’t perform as well as the logistic regression model. The random forest model had slightly better metrics for a lot of the categories, but since it takes significantly longer to train, I opted for logistic regression.

我還嘗試過樸素貝葉斯和隨機森林模型,但它們的表現不如邏輯回歸模型。 對于許多類別,隨機森林模型的指標稍好一些,但是由于訓練所需的時間明顯更長,因此我選擇了邏輯回歸。

Finally, the trained classifier is saved in pickle format.

最后,訓練有素的分類器以泡菜格式保存。

應用程序 (The Application)

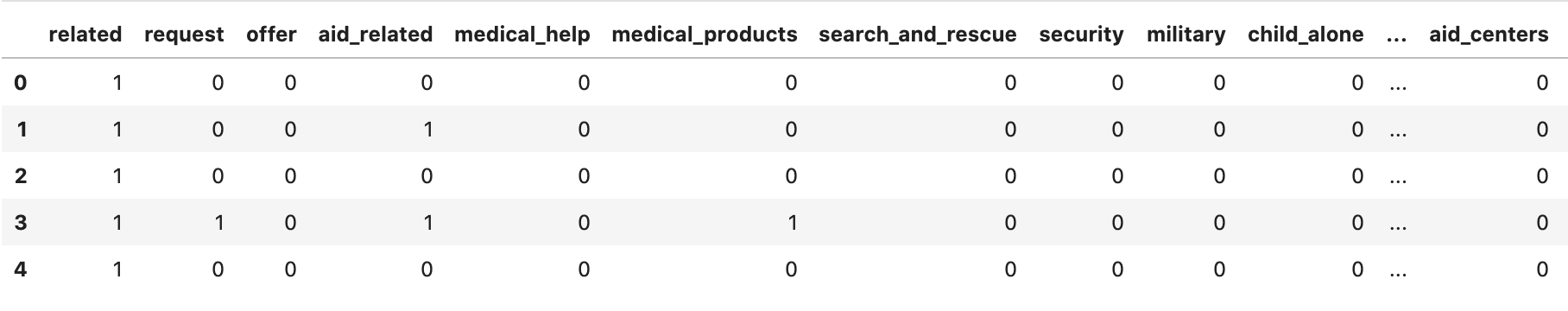

Now that we have a trained classifier, we can build it into a web application that classifies disaster messages. Personally, I prefer Flask as it is a lightweight framework, perfect for smaller applications. The app’s interface is shown in figure 4 below.

現在,我們擁有訓練有素的分類器,可以將其構建到對災難消息進行分類的Web應用程序中。 就個人而言,我更喜歡Flask,因為它是輕量級的框架,非常適合較小的應用程序。 該應用程序的界面如下圖4所示。

As shown in Figure 4, the web application has 2 pages:

如圖4所示,Web應用程序有2個頁面:

Home page: This page contains an input field to enter a message to classify and a dashboard of interactive visualizations that summarizes the data. The dashboard (created with Plotly) shows the (1) distribution of message genres, (2) the distribution of message word counts, (3) top message categories, and (4) the most common words in messages.

主頁 :此頁面包含一個輸入字段,用于輸入要分類的消息以及用于匯總數據的交互式可視化儀表板。 儀表板(使用Plotly創建)顯示(1)消息類型的分布,(2)消息字數的分布,(3)頂部消息類別,以及(4)消息中最常見的單詞。

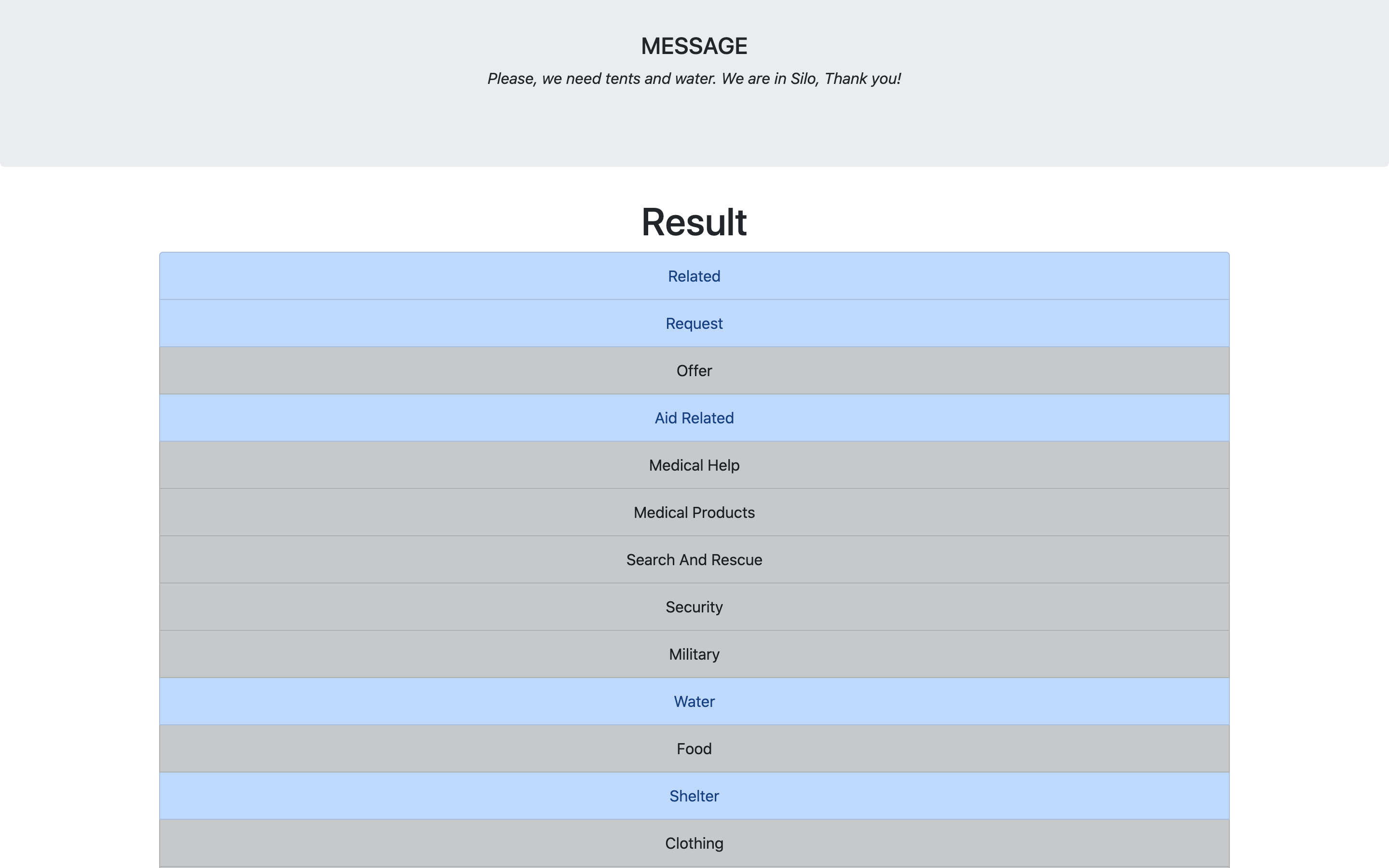

Result page: This page displays the message that was entered into the input field and the 35 classification results for that message. The categories highlighted blue are the categories that the message was classified as.

結果頁面 :此頁面顯示輸入到輸入字段中的消息以及該消息的35個分類結果。 藍色突出顯示的類別是郵件被分類為的類別。

Both pages were written in HTML and Bootstrap (a CSS library) and are rendered by the Flask app. To build the app, we first load in the data and the trained model.

這兩個頁面都是用HTML和Bootstrap(一個CSS庫)編寫的,并由Flask應用程序呈現。 要構建該應用程序,我們首先要加載數據和經過訓練的模型。

We use the data to set up the home-page visualizations in the back-end with Plotly’s Python library and render these visualizations in the front-end with Plotly’s Javascript library.

我們使用這些數據在Plotly的Python庫中在后端設置主頁可視化效果,并在Plotly的Javascript庫中在前端渲染這些可視化效果。

When text is entered into the input field and submitted, it is fetched by Flask to the back-end where the model will classify it, and the result page will then be rendered with the classification results.

將文本輸入輸入字段并提交后,Flask會將其提取到模型將對其進行分類的后端,然后將使用分類結果來呈現結果頁面。

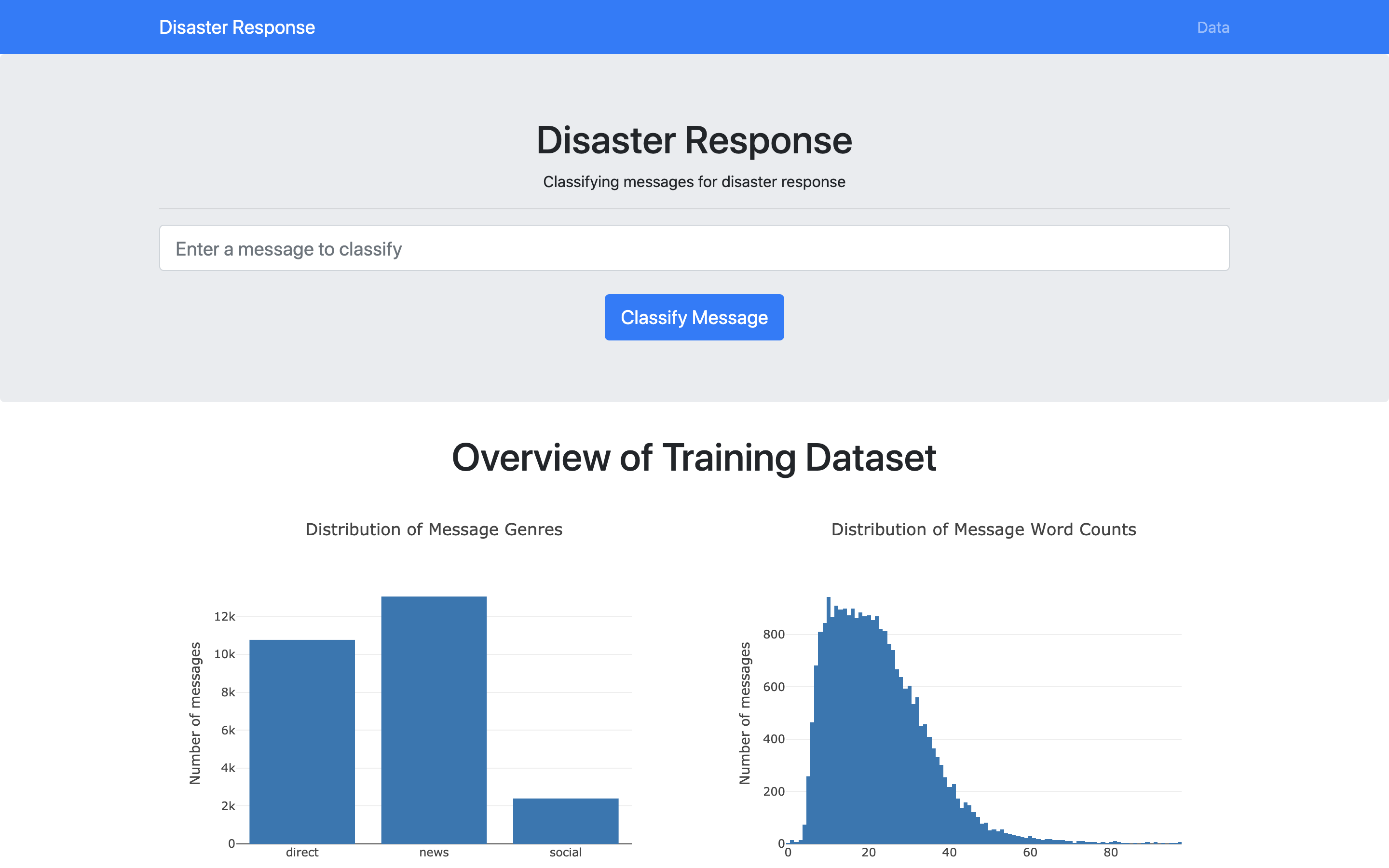

As shown in figure 4B, I tested an example message:

如圖4B 所示 ,我測試了一個示例消息:

“Please, we need tents and water. We are in Silo, Thank you!”

“請,我們需要帳篷和水。 我們在筒倉,謝謝!”

And it was classified as “related”, “request”, “aid related”, “water” and “shelter”.

它分為“相關”,“請求”,“與援助有關”,“水”和“庇護所”。

摘要 (Summary)

The main components of this project are (1) the data processing pipeline, which transforms the data into a usable format and prepares it for the classifier, (2) the machine learning pipeline, which includes a tf-idf vectorizer and a logistic regression classifier, and (3) the web application, which serves the trained classifier and a data dashboard.

該項目的主要組件是(1)數據處理管道,它將數據轉換為可用格式并為分類器做準備;(2)機器學習管道,其中包括tf-idf矢量化器和邏輯回歸分類器,以及(3)Web應用程序,該服務為訓練有素的分類器和數據儀表板提供服務。

Here are some ideas for improving this project you may want to try:

以下是一些改進您可能想嘗試的項目的想法:

- Different or additional text processing steps, like lemmatization instead of stemming 不同的或附加的文本處理步驟,例如詞法化而不是詞干化

- Extract more features from the text, like message word count 從文本中提取更多功能,例如消息字數

- A different classification algorithm, like convolutional neural networks 不同的分類算法,例如卷積神經網絡

The web application is available on my Github. Clone the repository and follow the instructions in the readme to try it yourself!

該Web應用程序可在我的Github上找到 。 克隆存儲庫,并按照自述文件中的說明進行操作!

翻譯自: https://medium.com/analytics-vidhya/building-a-text-classifier-for-disaster-response-caf83137e08d

樸素貝葉斯分類器 文本分類

本文來自互聯網用戶投稿,該文觀點僅代表作者本人,不代表本站立場。本站僅提供信息存儲空間服務,不擁有所有權,不承擔相關法律責任。 如若轉載,請注明出處:http://www.pswp.cn/news/388995.shtml 繁體地址,請注明出處:http://hk.pswp.cn/news/388995.shtml 英文地址,請注明出處:http://en.pswp.cn/news/388995.shtml

如若內容造成侵權/違法違規/事實不符,請聯系多彩編程網進行投訴反饋email:809451989@qq.com,一經查實,立即刪除!

)

)