Deep learning · reasoning · training

Background and challenges 📋

In modern deep learning, dependence on manually annotated data is one of the major limitations. Training a good model usually requires a vast amount of labeled data. When the number of classes and the amount of data are small, we can take a model pre-trained on a labeled public dataset and fine-tune its last few layers on our own data. In real life, however, the data is often considerably larger (for example, the products in a store or human faces), and it is difficult for the model to learn with only a few trainable layers. Furthermore, the amount of unlabeled data (e.g. document text, images on the Internet) is practically unlimited. Labeling all of it for a task is almost impossible, but not using it is a waste.

In this case, training a deep model from scratch on the new dataset is an option, but labeling the data takes a lot of time and effort, while a pre-trained deep model no longer seems helpful. That is why self-supervised learning was born. The idea behind it is simple and involves two main tasks:

Surrogate task: the deep model learns generalizable representations from unlabeled data without annotation, generating its own supervisory signal by exploiting implicit information in the data.

Downstream task: the representations are fine-tuned for supervised learning tasks, e.g. classification and image retrieval, with a smaller amount of labeled data (how much labeled data you need depends on the model performance you require).

Many different training approaches have been proposed to learn such representations:

Relative position [1]: the model needs to understand the spatial context of objects to tell the relative position between parts.

Jigsaw puzzle [2]: the model needs to place 9 shuffled patches back in their original locations.

Colorization [3]: the model is trained to color a grayscale input image; more precisely, the task is to map this image to a distribution over quantized color value outputs.

Counting features [4]: the model learns a feature encoder using the feature-counting relationship between input images transformed by scaling and tiling.

SimCLR [5]: the model learns representations for visual inputs by maximizing agreement between differently augmented views of the same sample via a contrastive loss in the latent space.

However, I would like to introduce one interesting approach that learns to recognize things the way a human does. A key factor in human learning is the acquisition of new knowledge by comparing related and different entities. It would therefore be a significant step if we could apply a similar mechanism in self-supervised machine learning, which is what the relational reasoning approach [6] does.

The relational reasoning paradigm is based on a key design principle: the use of a relation network as a learnable function on the unlabeled dataset to quantify the relationships between views of the same object (intra-reasoning) and the relationships between different objects in different scenes (inter-reasoning). The possibility of exploiting this mechanism in self-supervised machine learning was evaluated on standard datasets (CIFAR-10, CIFAR-100, CIFAR-100–20, STL-10, tiny-ImageNet, SlimageNet), learning schedules, and backbones (both shallow and deep). According to the paper [6], relational reasoning outperforms the best competitor in all conditions by an average of 14% in accuracy, and the most recent state-of-the-art method by 3%.

Technique highlight 📄

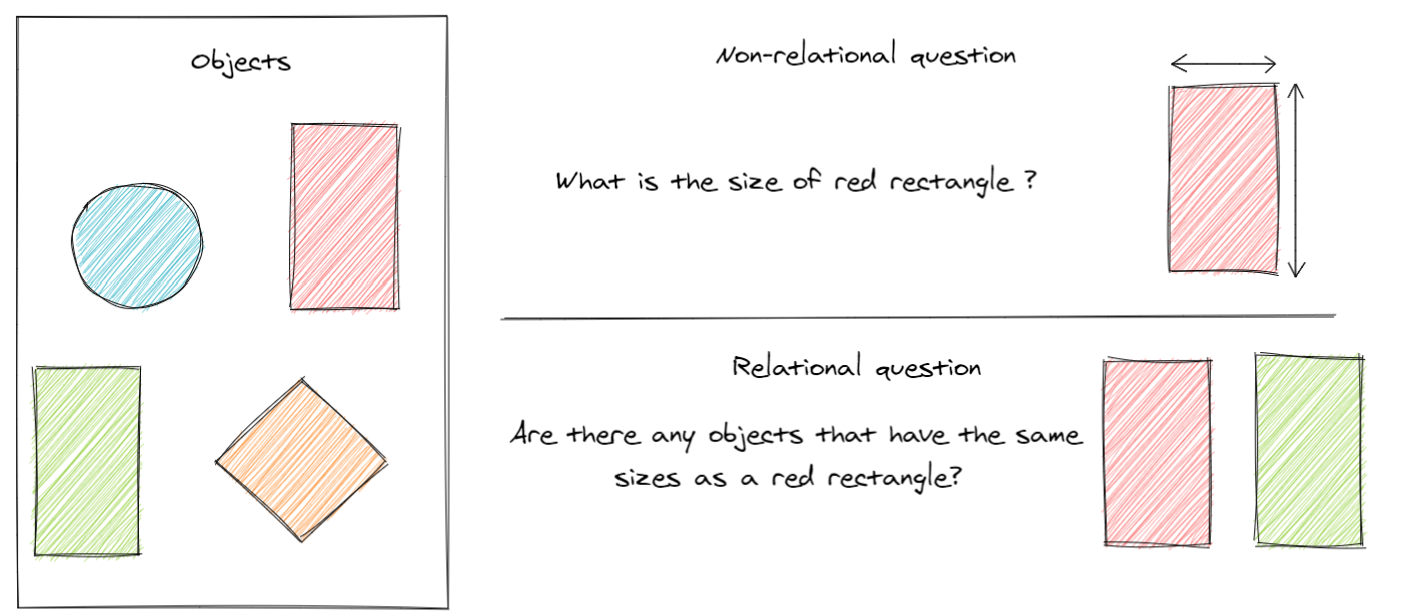

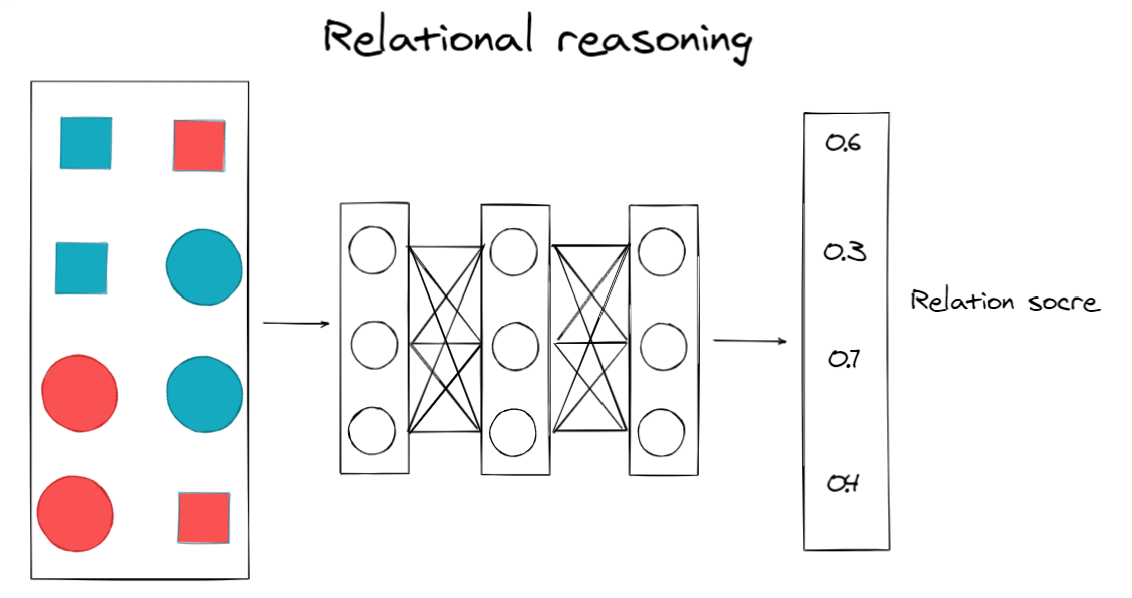

Put simply, relational reasoning is a methodology that helps a learner understand the relations between different objects (ideas) rather than learning each object individually. This helps the learner distinguish and remember objects based on their differences. There are two main components in the relational reasoning system [6]: the backbone and the relation head. The relation head is used in the pretext task phase to help the underlying neural network backbone learn useful representations from the unlabeled dataset, and is then discarded. After training on the pretext task, the backbone is used in downstream tasks such as classification or image retrieval.

Previous work: focuses on within-scene relations, meaning that all the elements in the same object belong to the same scene (e.g. balls from a basket); training is done on a labeled dataset and the main goal is the relation head [7].

New approach: focuses on relations between different views of the same object (intra-reasoning) and between different objects in different scenes (inter-reasoning); relational reasoning is applied to unlabeled data, and the relation head serves as a pretext task for learning useful representations in the underlying backbone.

Let's discuss the important points of some parts of the relational reasoning system:

1. Mini-batch augmentation

As mentioned before, this system introduces intra-reasoning and inter-reasoning. Why do we need them? When labels are not given, it is not possible to create pairs of similar and dissimilar objects directly. To solve this problem, a bootstrapping technique is applied, which gives rise to intra-reasoning and inter-reasoning, where:

Intra-reasoning consists of sampling random augmentations of the same object {A1; A2} (positive pair), e.g. different views of the same basketball.

Inter-reasoning consists of coupling two random objects {A1; B1} (negative pair), e.g. a basketball with a random other ball.
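The pairing scheme above can be illustrated with plain indices. The following is a minimal sketch, not the author's implementation: the function name `build_pairs` and the "roll the batch by a shift" trick for picking a different image are assumptions made for illustration. Each element is an `(image, view)` index, where `view` identifies one of the K random augmentations.

```python
from itertools import combinations


def build_pairs(n_images, k):
    """Return (positives, negatives); each pair is ((image, view), (image, view))."""
    if n_images < 2 or k < 2:
        raise ValueError("need at least 2 images and 2 augmented views")
    positives, negatives = [], []
    shift = 1
    for view_a, view_b in combinations(range(k), 2):
        for img in range(n_images):
            # Intra-reasoning: two different views of the same image.
            positives.append(((img, view_a), (img, view_b)))
            # Inter-reasoning: pair the image with a different one by
            # rolling the mini-batch (hypothetical choice for this sketch).
            other = (img + shift) % n_images
            negatives.append(((img, view_a), (other, view_b)))
        # Vary the shift while keeping it in 1..n_images-1, so other != img.
        shift = shift % (n_images - 1) + 1
    return positives, negatives


positives, negatives = build_pairs(n_images=4, k=3)
print(len(positives), len(negatives))
```

Because no labels are available, a negative pair may occasionally contain two images of the same class; the sketch makes no attempt to avoid that.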

Furthermore, random augmentation functions (e.g. geometric transformations, color distortion) are used to make between-scene reasoning more difficult. These augmentations force the learner (the backbone) to pay attention to the correlations between a wider set of features (e.g. color, size, texture). For instance, in the pair {football, basketball}, color alone is a strong predictor of the class. With random changes in color and size, however, the learner can no longer discriminate between the two so easily. It has to look at other features, which results in a better representation.

2. Metric learning

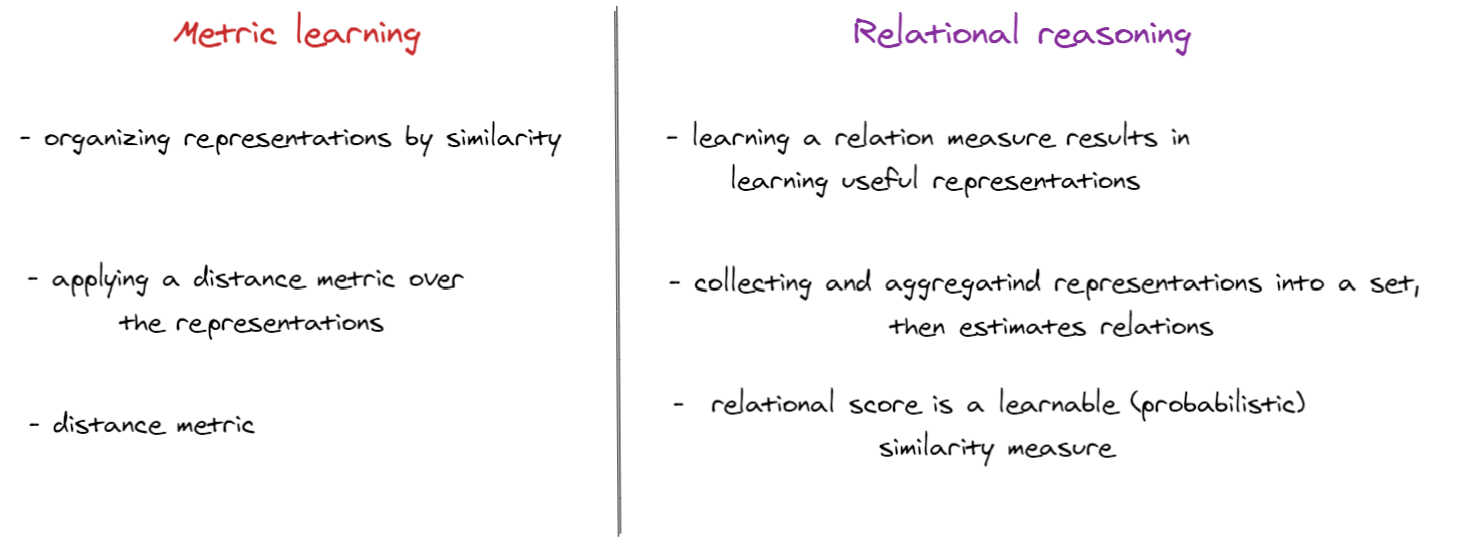

The aim of metric learning is to use a distance metric to bring the representations of similar inputs (positives) closer together while pushing the representations of dissimilar inputs (negatives) apart. Relational reasoning, however, is fundamentally different: as described earlier, the relation between representations is quantified by a learnable relation network.

3. Loss function

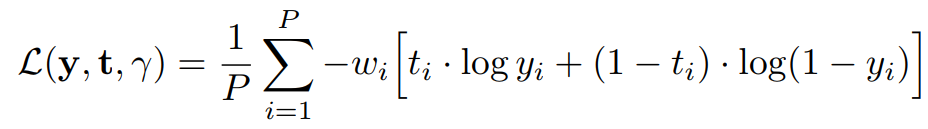

The learning objective is a binary classification problem over the representation pairs. We can therefore use a binary cross-entropy loss, which corresponds to maximizing a Bernoulli log-likelihood, where the relation score y is a probabilistic estimate of representation membership obtained through a sigmoid activation function.
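As a worked illustration of this objective, here is a minimal pure-Python sketch of the binary cross-entropy over relation scores. The names `sigmoid` and `relation_loss` are hypothetical, and the function covers only the plain cross-entropy described above; any extra weighting used in the paper is not modeled.

```python
import math


def sigmoid(x):
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def relation_loss(logits, targets):
    """Mean binary cross-entropy between sigmoid(logit) and 0/1 targets."""
    eps = 1e-12  # guards against log(0)
    total = 0.0
    for z, t in zip(logits, targets):
        y = min(max(sigmoid(z), eps), 1.0 - eps)  # relation score in (0, 1)
        total += -(t * math.log(y) + (1 - t) * math.log(1.0 - y))
    return total / len(logits)


# Target 1 marks a positive pair (same object), 0 a negative pair.
print(relation_loss([2.0, -1.5], [1, 0]))
```

An uninformative head that outputs a logit of 0 for every pair gives a loss of ln 2; the loss falls as positive pairs receive high scores and negative pairs low ones.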

Finally, the paper [6] also reports the results of relational reasoning on standard datasets (CIFAR-10, CIFAR-100, CIFAR-100–20, STL-10, tiny-ImageNet, SlimageNet) with different backbones (shallow and deep) and the same learning schedule (epochs). For further information, see the paper.

Experimental evaluation 📊

In this article, I want to reproduce the relational reasoning system on the public image dataset STL-10. This dataset comprises 10 classes (airplane, bird, automobile, cat, deer, dog, horse, monkey, ship, truck) of 96x96-pixel color images.

First of all, we need to import some important libraries.

The STL-10 dataset contains 13,000 labeled images (5,000 for training and 8,000 for testing, i.e. 500 and 800 per class). It also includes 100,000 unlabeled images drawn from a similar but broader distribution. For instance, the unlabeled set contains other types of animals (bears, rabbits, etc.) and vehicles (trains, buses, etc.) in addition to the ones in the labeled set.

Then we will create the relational reasoning class, following the author's suggestions.
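The class itself is not reproduced in this text. As a rough sketch of what such a class has to do, the PyTorch code below pairs features, scores them with a relation head, and applies a binary cross-entropy loss. Everything here is an assumption made for illustration: the names `RelationHead`, `aggregate` and `relation_step`, the hidden size of 256, concatenation as the pairing function, and the roll-based negatives. It is not the author's exact code.

```python
import torch
from torch import nn


class RelationHead(nn.Module):
    """Small MLP mapping a concatenated feature pair to one relation logit."""

    def __init__(self, feature_size, hidden=256):
        super().__init__()
        self.net = nn.Sequential(
            nn.Linear(feature_size * 2, hidden),
            nn.BatchNorm1d(hidden),
            nn.LeakyReLU(),
            nn.Linear(hidden, 1),
        )

    def forward(self, pairs):
        return self.net(pairs).squeeze(1)


def aggregate(features, k):
    """Pair features laid out as k consecutive blocks of n images (n >= 2)."""
    n = features.shape[0] // k
    blocks = features.split(n, dim=0)  # blocks[v][i] is view v of image i
    pairs, targets = [], []
    shift = 1
    for a in range(k):
        for b in range(a + 1, k):
            # Positive pairs: same image, two different views.
            pos = torch.cat([blocks[a], blocks[b]], dim=1)
            # Negative pairs: roll one block so each image meets another one.
            rolled = torch.roll(blocks[b], shifts=shift, dims=0)
            neg = torch.cat([blocks[a], rolled], dim=1)
            pairs += [pos, neg]
            targets += [torch.ones(n), torch.zeros(n)]
            shift = shift % (n - 1) + 1  # stay within 1..n-1
    return torch.cat(pairs, dim=0), torch.cat(targets, dim=0)


def relation_step(backbone, head, views, optimizer):
    """One training step; `views` is a list of k tensors of shape (n, C, H, W)."""
    features = backbone(torch.cat(views, dim=0))
    pairs, targets = aggregate(features, len(views))
    logits = head(pairs)
    loss = nn.functional.binary_cross_entropy_with_logits(
        logits, targets.to(logits.device)
    )
    optimizer.zero_grad()
    loss.backward()
    optimizer.step()
    return loss.item()
```

The optimizer is expected to hold the parameters of both the backbone and the relation head, since both are trained during the pretext task.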

To compare the performance of the relational reasoning methodology on shallow and deep models, we will create a shallow model (Conv4) and use the architecture of a deep model (Resnet34).
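The definition of Conv4 is not included in this text. A plausible sketch, assuming four convolutional blocks with 8, 16, 32 and 64 channels and a 64-dimensional output (the output size is consistent with the `Linear(64, 10)` layer used later for evaluation), could look like this. The channel widths and the pooling choices are assumptions.

```python
import torch
from torch import nn


class Conv4(nn.Module):
    """Shallow backbone: four conv blocks followed by global average pooling."""

    def __init__(self):
        super().__init__()
        self.feature_size = 64
        channels = [3, 8, 16, 32, 64]  # assumed widths for this sketch
        layers = []
        for c_in, c_out in zip(channels[:-1], channels[1:]):
            layers += [
                nn.Conv2d(c_in, c_out, kernel_size=3, padding=1, bias=False),
                nn.BatchNorm2d(c_out),
                nn.ReLU(),
                nn.AvgPool2d(kernel_size=2, stride=2),
            ]
        # Replace the last pooling layer with global pooling to get one vector.
        layers[-1] = nn.AdaptiveAvgPool2d(1)
        self.features = nn.Sequential(*layers)

    def forward(self, x):
        return torch.flatten(self.features(x), start_dim=1)


# A batch of two 96x96 RGB images yields two 64-dimensional feature vectors.
print(Conv4()(torch.randn(2, 3, 96, 96)).shape)
```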

    # pick one of the two backbones
    backbone = Conv4()  # shallow model
    backbone = models.resnet34(pretrained=False)  # deep model

Some hyperparameters and augmentation strategies were set following the author's suggestions. We will train our backbone with the relation head on the unlabeled STL-10 dataset.
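The data pipeline has to return K randomly augmented views of every unlabeled image. One way to sketch that, assuming a hypothetical wrapper named `MultiViewDataset` and an arbitrary `transform` callable (the noise transform below is only a stand-in for the real geometric and color augmentations), is:

```python
import torch
from torch.utils.data import DataLoader, Dataset


class MultiViewDataset(Dataset):
    """Wrap a collection of images and return k random views of each one."""

    def __init__(self, images, transform, k):
        self.images = images
        self.transform = transform  # any callable producing a random view
        self.k = k

    def __len__(self):
        return len(self.images)

    def __getitem__(self, index):
        image = self.images[index]
        # Apply the random transform k times to get k different views.
        return [self.transform(image) for _ in range(self.k)]


def add_noise(image):
    """Placeholder augmentation for this sketch."""
    return image + 0.1 * torch.randn_like(image)


images = torch.zeros(6, 3, 8, 8)  # dummy stand-in for unlabeled images
loader = DataLoader(MultiViewDataset(images, add_noise, k=4), batch_size=3)
views = next(iter(loader))
print(len(views), views[0].shape)
```

With the default collate function, each batch is a list of K tensors, one per view, each of shape (batch, C, H, W).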

We have now created everything necessary to train our model. We will train the backbone and relation head for 10 epochs with 16 augmentations per image (K). On a single Tesla P100-PCIE-16GB GPU, this took 4 hours with the shallow model (Conv4) and 6 hours with the deep model (Resnet34). You can freely change the number of epochs and the other hyperparameters to obtain better results.

    device = torch.device("cuda:0") if torch.cuda.is_available() else torch.device("cpu")
    backbone.to(device)
    model = RelationalReasoning(backbone, feature_size)
    model.train(tot_epochs=tot_epochs, train_loader=train_loader)
    torch.save(model.backbone.state_dict(), 'model.tar')

After training our backbone, we discard the relation head and use only the backbone for the downstream tasks. We need to fine-tune on the labeled STL-10 training data (5,000 images) and test the final model on the test set (8,000 images). The training and testing datasets are loaded into a DataLoader without augmentations.

We will load the pre-trained backbone and use a simple linear model to map its output features to the number of classes in the dataset.

    # linear model
    linear_layer = torch.nn.Linear(64, 10)  # if backbone is Conv4
    linear_layer = torch.nn.Linear(1000, 10)  # if backbone is Resnet34

    # define a raw backbone model
    backbone_lineval = Conv4()  # Conv4
    backbone_lineval = models.resnet34(pretrained=False)  # Resnet34

    # load the pre-trained weights
    checkpoint = torch.load('model.tar')  # name of the pre-trained weights file
    backbone_lineval.load_state_dict(checkpoint)

This time, only the linear model is trained; the backbone is frozen. First, we will look at the result for the fine-tuned Conv4.
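The fine-tuning loop is not shown in this text. A minimal sketch of one linear-evaluation step with a frozen backbone follows; the function name `linear_eval_step` is an assumption, and the optimizer is assumed to hold only the linear layer's parameters.

```python
import torch
from torch import nn


def linear_eval_step(backbone, linear_layer, optimizer, images, labels):
    """Train the linear layer for one step on frozen backbone features."""
    backbone.eval()  # keep batch-norm statistics fixed
    with torch.no_grad():  # no gradients flow into the backbone
        features = backbone(images)
    loss = nn.functional.cross_entropy(linear_layer(features), labels)
    optimizer.zero_grad()
    loss.backward()
    optimizer.step()
    return loss.item()


# Usage with stand-in modules and random data.
backbone = nn.Sequential(nn.Flatten(), nn.Linear(3 * 8 * 8, 64))
linear_layer = nn.Linear(64, 10)
optimizer = torch.optim.Adam(linear_layer.parameters(), lr=1e-3)
images, labels = torch.randn(5, 3, 8, 8), torch.randint(0, 10, (5,))
print(linear_eval_step(backbone, linear_layer, optimizer, images, labels))
```

Computing the features under `torch.no_grad()` keeps the backbone weights untouched regardless of what the optimizer contains.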

Then we check the model on the test set.
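The evaluation code is likewise not shown here. Test accuracy can be computed along the following lines; the function name `accuracy` is an assumption, and `loader` can be any iterable of `(images, labels)` batches.

```python
import torch


@torch.no_grad()
def accuracy(backbone, linear_layer, loader, device="cpu"):
    """Return top-1 accuracy (in percent) of backbone + linear layer."""
    backbone.eval()
    linear_layer.eval()
    correct, total = 0, 0
    for images, labels in loader:
        images, labels = images.to(device), labels.to(device)
        predictions = linear_layer(backbone(images)).argmax(dim=1)
        correct += (predictions == labels).sum().item()
        total += labels.size(0)
    return 100.0 * correct / total
```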

Conv4 obtained 49.98% accuracy on the test set, which means the backbone learned useful features from the unlabeled dataset; we only need to fine-tune for a few epochs to achieve a good result. Now let's check the performance of the deep model.

Then we evaluate on the test dataset.

That is much better: we obtain 55.38% accuracy on the test set. The main goal of this article is to reproduce and evaluate the relational reasoning methodology, which teaches the model to distinguish objects without labels, so these results are very promising. If you are not satisfied, feel free to experiment by changing hyperparameters such as the number of augmentations, the number of epochs, or the model structure.

Final thoughts 📕

Self-supervised relational reasoning is effective both quantitatively and qualitatively, and with backbones of different sizes, from shallow to deep. Representations learned through comparison can be easily transferred from one domain to another; they are fine-grained and compact, which may be due to the correlation between accuracy and the number of augmentations. According to the author's experiments [6], the number of augmentations plays a primary role in the quality of the object clusters. Self-supervised learning has strong potential to become the future of machine learning in many respects.

You can contact me on LinkedIn if you would like to discuss this further.

Enjoy!!! 👦🏻

Translated from: https://towardsdatascience.com/train-without-labeling-data-using-self-supervised-learning-by-relational-reasoning-b0298ad818f9
