管道過濾模式 大數據

介紹 (Introduction)

If you are starting with Big Data it is common to feel overwhelmed by the large number of tools, frameworks and options to choose from. In this article, I will try to summarize the ingredients and the basic recipe to get you started in your Big Data journey. My goal is to categorize the different tools and try to explain the purpose of each tool and how it fits within the ecosystem.

如果您從大數據開始,通常會被眾多工具,框架和選項所困擾。 在本文中,我將嘗試總結其成分和基本配方,以幫助您開始大數據之旅。 我的目標是對不同的工具進行分類,并試圖解釋每個工具的目的以及它如何適應生態系統。

First let’s review some considerations and to check if you really have a Big Data problem. I will focus on open source solutions that can be deployed on-prem. Cloud providers provide several solutions for your data needs and I will slightly mention them. If you are running in the cloud, you should really check what options are available to you and compare to the open source solutions looking at cost, operability, manageability, monitoring and time to market dimensions.

首先,讓我們回顧一些注意事項,并檢查您是否確實有 大數據問題 。 我將重點介紹可以在本地部署的開源解決方案。 云提供商為您的數據需求提供了幾種解決方案,我將略微提及它們。 如果您在云中運行,則應真正檢查可用的選項,并與開源解決方案進行比較,以了解成本,可操作性,可管理性,監控和上市時間。

數據注意事項 (Data Considerations)

(If you have experience with big data, skip to the next section…)

(如果您有使用大數據的經驗,請跳到下一部分...)

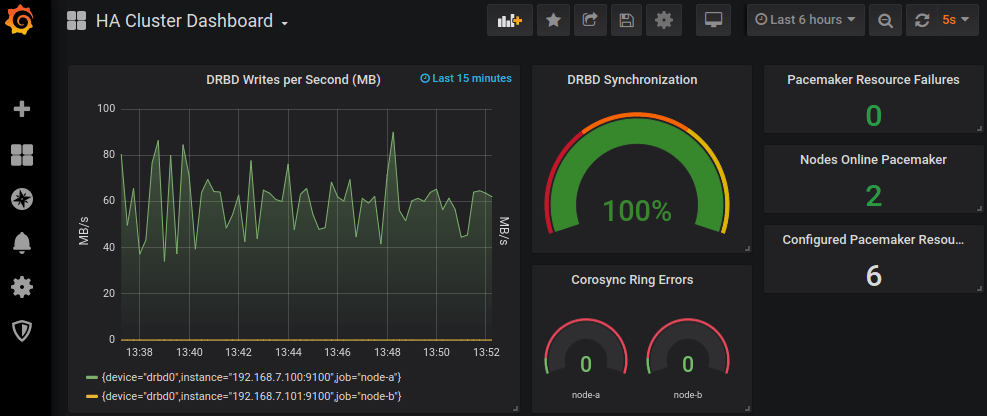

Big Data is complex, do not jump into it unless you absolutely have to. To get insights, start small, maybe use Elastic Search and Prometheus/Grafana to start collecting information and create dashboards to get information about your business. As your data expands, these tools may not be good enough or too expensive to maintain. This is when you should start considering a data lake or data warehouse; and switch your mind set to start thinking big.

大數據非常復雜 ,除非絕對必要,否則請不要參與其中。 要獲取見解,請從小處著手,也許使用Elastic Search和Prometheus / Grafana來開始收集信息并創建儀表板以獲取有關您的業務的信息。 隨著數據的擴展,這些工具可能不夠好或維護成本太高。 這是您應該開始考慮數據湖或數據倉庫的時候。 并切換你的思維定勢開始考慮 大 。

Check the volume of your data, how much do you have and how long do you need to store for. Check the temperature! of the data, it loses value over time, so how long do you need to store the data for? how many storage layers(hot/warm/cold) do you need? can you archive or delete data?

檢查數據量,有多少以及需要存儲多長時間。 檢查溫度 ! 數據,它會隨著時間的流逝而失去價值,那么您需要存儲多長時間? 您需要多少個存儲層(熱/熱/冷)? 您可以存檔或刪除數據嗎?

Other questions you need to ask yourself are: What type of data are your storing? which formats do you use? do you have any legal obligations? how fast do you need to ingest the data? how fast do you need the data available for querying? What type of queries are you expecting? OLTP or OLAP? What are your infrastructure limitations? What type is your data? Relational? Graph? Document? Do you have an schema to enforce?

您需要問自己的其他問題是:您存儲的數據類型是什么? 您使用哪種格式? 您有任何法律義務嗎? 您需要多快提取數據? 您需要多長時間可用于查詢的數據? 您期望什么類型的查詢? OLTP還是OLAP? 您的基礎架構有哪些限制? 您的數據是什么類型? 有關系嗎 圖形? 文件? 您有要實施的架構嗎?

I could write several articles about this, it is very important that you understand your data, set boundaries, requirements, obligations, etc in order for this recipe to work.

我可能會寫幾篇關于此的文章,理解此數據,設置邊界 ,要求,義務等非常重要,這樣才能起作用。

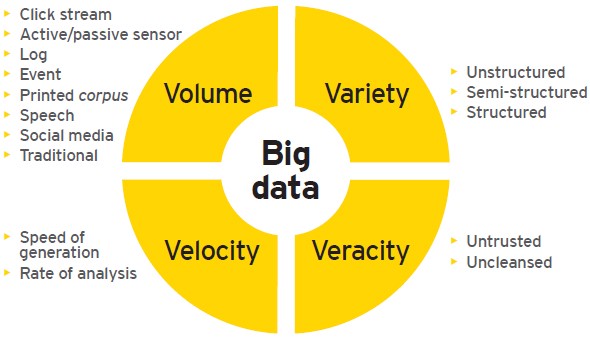

Data volume is key, if you deal with billions of events per day or massive data sets, you need to apply Big Data principles to your pipeline. However, there is not a single boundary that separates “small” from “big” data and other aspects such as the velocity, your team organization, the size of the company, the type of analysis required, the infrastructure or the business goals will impact your big data journey. Let’s review some of them…

數據量是關鍵,如果每天要處理數十億個事件或海量數據集,則需要將大數據原理應用于管道。 但是, 沒有一個單一的邊界將“小”數據與“大”數據以及其他方面(例如速度 , 團隊組織 , 公司規模,所需分析類型, 基礎架構或業務目標)相區分。您的大數據之旅。 讓我們回顧其中的一些……

OLTP與OLAP (OLTP vs OLAP)

Several years ago, businesses used to have online applications backed by a relational database which was used to store users and other structured data(OLTP). Overnight, this data was archived using complex jobs into a data warehouse which was optimized for data analysis and business intelligence(OLAP). Historical data was copied to the data warehouse and used to generate reports which were used to make business decisions.

幾年前,企業曾經使用關系數據庫支持在線應用程序,該關系數據庫用于存儲用戶和其他結構化數據( OLTP )。 一夜之間,這些數據使用復雜的作業存檔到數據倉庫中 ,該倉庫針對數據分析和商業智能( OLAP )進行了優化。 歷史數據已復制到數據倉庫中,并用于生成用于制定業務決策的報告。

數據倉庫與數據湖 (Data Warehouse vs Data Lake)

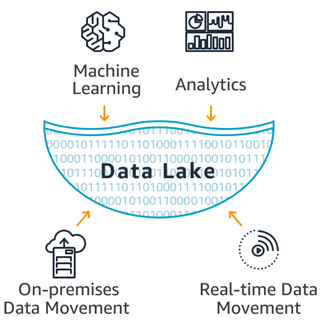

As data grew, data warehouses became expensive and difficult to manage. Also, companies started to store and process unstructured data such as images or logs. With Big Data, companies started to create data lakes to centralize their structured and unstructured data creating a single repository with all the data.

隨著數據的增長,數據倉庫變得昂貴且難以管理。 此外,公司開始存儲和處理非結構化數據,例如圖像或日志。 借助大數據 ,公司開始創建數據湖以集中其結構化和非結構化數據,從而創建包含所有數據的單個存儲庫。

In short, a data lake it’s just a set of computer nodes that store data in a HA file system and a set of tools to process and get insights from the data. Based on Map Reduce a huge ecosystem of tools such Spark were created to process any type of data using commodity hardware which was more cost effective.The idea is that you can process and store the data in cheap hardware and then query the stored files directly without using a database but relying on file formats and external schemas which we will discuss later. Hadoop uses the HDFS file system to store the data in a cost effective manner.

簡而言之,數據湖只是將數據存儲在HA 文件系統中的一組計算機節點,以及一組用于處理數據并從中獲取見解的工具 。 基于Map Reduce ,創建了龐大的工具生態系統,例如Spark ,可以使用更具成本效益的商品硬件處理任何類型的數據。其想法是,您可以在廉價的硬件中處理和存儲數據,然后直接查詢存儲的文件而無需使用數據庫,但依賴于文件格式和外部架構,我們將在后面討論。 Hadoop使用HDFS文件系統以經濟高效的方式存儲數據。

For OLTP, in recent years, there was a shift towards NoSQL, using databases such MongoDB or Cassandra which could scale beyond the limitations of SQL databases. However, recent databases can handle large amounts of data and can be used for both , OLTP and OLAP, and do this at a low cost for both stream and batch processing; even transactional databases such as YugaByteDB can handle huge amounts of data. Big organizations with many systems, applications, sources and types of data will need a data warehouse and/or data lake to meet their analytical needs, but if your company doesn’t have too many information channels and/or you run in the cloud, a single massive database could suffice simplifying your architecture and drastically reducing costs.

對于OLTP來說 ,近年來,使用MongoDB或Cassandra之類的數據庫可以向NoSQL轉移,這種數據庫的擴展范圍可能超出SQL數據庫的限制。 但是, 最近的數據庫可以處理大量數據,并且可以用于OLTP和OLAP,并且可以低成本進行流處理和批處理。 甚至YugaByteDB之類的事務數據庫也可以處理大量數據。 具有許多系統,應用程序,數據源和數據類型的大型組織將需要一個數據倉庫和/或數據湖來滿足其分析需求,但是如果您的公司沒有太多的信息渠道和/或您在云中運行,一個海量數據庫就足以簡化您的體系結構并大大降低成本 。

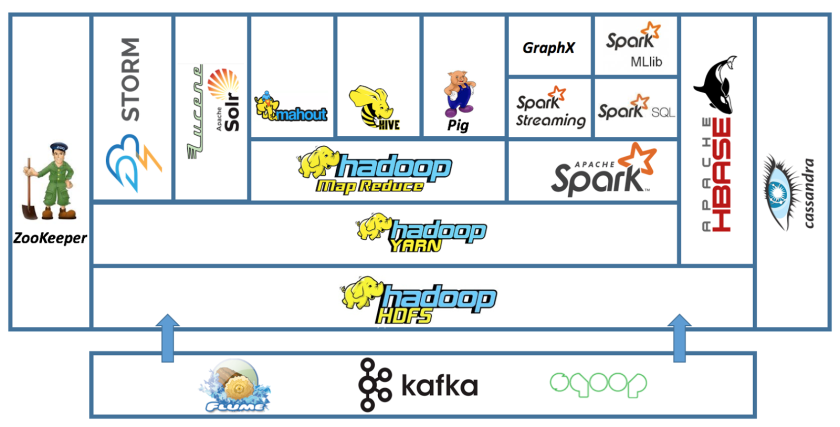

Hadoop或沒有Hadoop (Hadoop or No Hadoop)

Since its release in 2006, Hadoop has been the main reference in the Big Data world. Based on the MapReduce programming model, it allowed to process large amounts of data using a simple programming model. The ecosystem grew exponentially over the years creating a rich ecosystem to deal with any use case.

自2006年發布以來, Hadoop一直是大數據世界中的主要參考。 基于MapReduce編程模型,它允許使用簡單的編程模型來處理大量數據。 這些年來,生態系統呈指數增長,創建了一個豐富的生態系統來處理任何用例。

Recently, there has been some criticism of the Hadoop Ecosystem and it is clear that the use has been decreasing over the last couple of years. New OLAP engines capable of ingesting and query with ultra low latency using their own data formats have been replacing some of the most common query engines in Hadoop; but the biggest impact is the increase of the number of Serverless Analytics solutions released by cloud providers where you can perform any Big Data task without managing any infrastructure.

最近,人們對Hadoop生態系統提出了一些批評 ,并且很明顯,在最近幾年中,使用率一直在下降。 能夠使用自己的數據格式以超低延遲進行接收和查詢的新OLAP引擎已經取代了Hadoop中一些最常見的查詢引擎; 但是最大的影響是云提供商發布的無服務器分析解決方案的數量增加了,您可以在其中執行任何大數據任務而無需管理任何基礎架構 。

Given the size of the Hadoop ecosystem and the huge user base, it seems to be far from dead and many of the newer solutions have no other choice than create compatible APIs and integrations with the Hadoop Ecosystem. Although HDFS is at the core of the ecosystem, it is now only used on-prem since cloud providers have built cheaper and better deep storage systems such S3 or GCS. Cloud providers also provide managed Hadoop clusters out of the box. So it seems, Hadoop is still alive and kicking but you should keep in mind that there are other newer alternatives before you start building your Hadoop ecosystem. In this article, I will try to mention which tools are part of the Hadoop ecosystem, which ones are compatible with it and which ones are not part of the Hadoop ecosystem.

考慮到Hadoop生態系統的規模和龐大的用戶基礎,這似乎還沒有死,而且許多新的解決方案除了創建兼容的API和與Hadoop生態系統的集成外別無選擇。 盡管HDFS是生態系統的核心,但由于云提供商已構建了更便宜,更好的深度存儲系統(例如S3或GCS) ,因此現在僅在本地使用。 云提供商還提供開箱即用的托管Hadoop集群 。 看起來Hadoop仍然活躍并且活躍,但是您應該記住,在開始構建Hadoop生態系統之前,還有其他更新的選擇。 在本文中,我將嘗試提及哪些工具是Hadoop生態系統的一部分,哪些與之兼容,哪些不是Hadoop生態系統的一部分。

批量與流 (Batch vs Streaming)

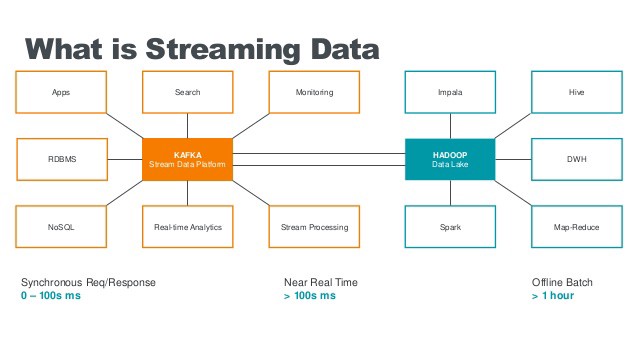

Based on your analysis of your data temperature, you need to decide if you need real time streaming, batch processing or in many cases, both.

根據對數據溫度的分析,您需要確定是否需要實時流傳輸,批處理或在很多情況下都需要 。

In a perfect world you would get all your insights from live data in real time, performing window based aggregations. However, for some use cases this is not possible and for others it is not cost effective; this is why many companies use both batch and stream processing. You should check your business needs and decide which method suits you better. For example, if you just need to create some reports, batch processing should be enough. Batch is simpler and cheaper.

在理想環境中,您將實時地從實時數據中獲得所有見解,并執行基于窗口的聚合。 但是,對于某些用例來說,這是不可能的,而對于另一些用例,則沒有成本效益。 這就是為什么許多公司同時使用批處理和流處理的原因 。 您應該檢查您的業務需求,并確定哪種方法更適合您。 例如,如果只需要創建一些報告,則批處理就足夠了。 批處理更簡單,更便宜 。

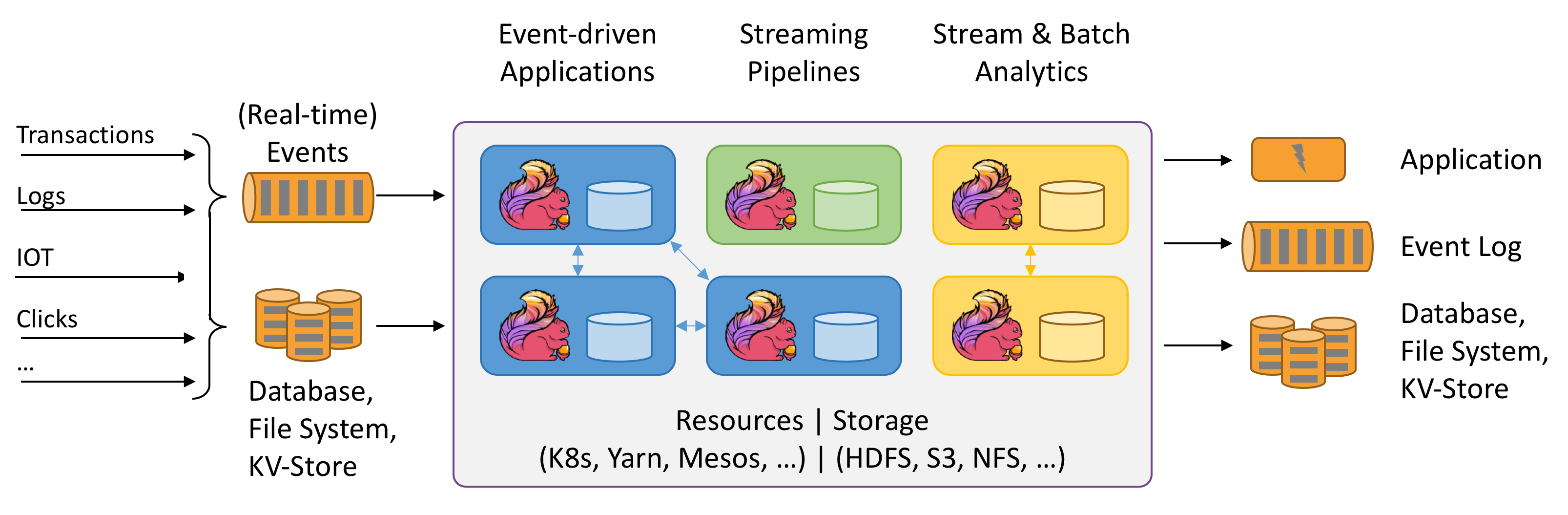

The latest processing engines such Apache Flink or Apache Beam, also known as the 4th generation of big data engines, provide a unified programming model for batch and streaming data where batch is just stream processing done every 24 hours. This simplifies the programming model.

最新的處理引擎,例如Apache Flink或Apache Beam ,也稱為第四代大數據引擎 ,為批處理和流數據提供統一的編程模型,其中批處理只是每24小時進行一次流處理。 這簡化了編程模型。

A common pattern is to have streaming data for time critical insights like credit card fraud and batch for reporting and analytics. Newer OLAP engines allow to query both in an unified way.

一種常見的模式是具有流數據以獲取時間緊迫的見解,例如信用卡欺詐,以及用于報告和分析的批處理。 較新的OLAP引擎允許以統一的方式進行查詢。

ETL與ELT (ETL vs ELT)

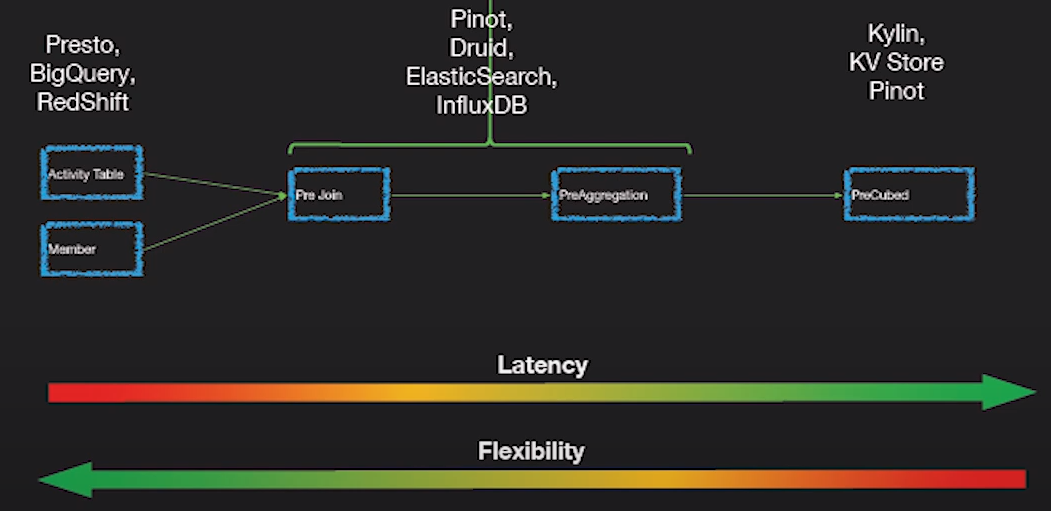

Depending on your use case, you may want to transform the data on load or on read. ELT means that you can execute queries that transform and aggregate data as part of the query, this is possible to do using SQL where you can apply functions, filter data, rename columns, create views, etc. This is possible with Big Data OLAP engines which provide a way to query real time and batch in an ELT fashion. The other option, is to transform the data on load(ETL) but note that doing joins and aggregations during processing it’s not a trivial task. In general, data warehouses use ETL since they tend to require a fixed schema (star or snowflake) whereas data lakes are more flexible and can do ELT and schema on read.

根據您的用例,您可能需要在加載或讀取時轉換數據 。 ELT意味著您可以執行將數據轉換和聚合為查詢一部分的查詢,這可以使用SQL進行,在SQL中您可以應用函數,過濾數據,重命名列,創建視圖等。BigData OLAP引擎可以實現它提供了一種以ELT方式實時查詢和批量查詢的方法。 另一個選擇是在load( ETL )上轉換數據,但是請注意,在處理過程中進行聯接和聚合并不是一件容易的事。 通常, 數據倉庫使用ETL,因為它們傾向于要求使用固定的模式(星型或雪花型),而數據湖更靈活,并且可以在讀取時執行ELT和模式 。

Each method has its own advantages and drawbacks. In short, transformations and aggregation on read are slower but provide more flexibility. If your queries are slow, you may need to pre join or aggregate during processing phase. OLAP engines discussed later, can perform pre aggregations during ingestion.

每種方法都有其自身的優點和缺點。 簡而言之,讀取時的轉換和聚合速度較慢,但??提供了更大的靈活性。 如果查詢很慢,則可能需要在處理階段進行預加入或聚合。 稍后討論的OLAP引擎可以在攝取期間執行預聚合。

團隊結構和方法 (Team Structure and methodology)

Finally, your company policies, organization, methodologies, infrastructure, team structure and skills play a major role in your Big Data decisions. For example, you may have a data problem that requires you to create a pipeline but you don’t have to deal with huge amount of data, in this case you could write a stream application where you perform the ingestion, enrichment and transformation in a single pipeline which is easier; but if your company already has a data lake you may want to use the existing platform, which is something you wouldn’t build from scratch.

最后,您的公司政策,組織,方法論,基礎架構,團隊結構和技能在您的大數據決策中起著重要作用 。 例如,您可能有一個數據問題,需要您創建管道,但是不必處理大量數據,在這種情況下,您可以編寫一個流應用程序,在該應用程序中以單一管道更容易; 但是,如果您的公司已經有一個數據湖,則可能要使用現有的平臺,而您不會從頭開始構建該平臺。

Another example is ETL vs ELT. Developers tend to build ETL systems where the data is ready to query in a simple format, so non technical employees can build dashboards and get insights. However, if you have a strong data analyst team and a small developer team, you may prefer ELT approach where developers just focus on ingestion; and data analysts write complex queries to transform and aggregate data. This shows how important it is to consider your team structure and skills in your big data journey.

另一個例子是ETL與ELT。 開發人員傾向于建立ETL系統,在該系統中,數據可以以簡單的格式進行查詢,因此非技術人員可以構建儀表板并獲得見解。 但是,如果您有一個強大的數據分析人員團隊和一個小的開發人員團隊,則您可能更喜歡ELT方法,使開發人員只專注于提取; 數據分析師編寫復雜的查詢來轉換和聚合數據。 這表明在大數據旅程中考慮團隊結構和技能的重要性。

It is recommended to have a diverse team with different skills and backgrounds working together since data is a cross functional aspect across the whole organization. Data lakes are extremely good at enabling easy collaboration while maintaining data governance and security.

建議將具有不同技能和背景的多元化團隊一起工作,因為數據是整個組織中跨職能的方面。 數據湖非常擅長在保持數據治理和安全性的同時實現輕松的協作 。

配料 (Ingredients)

After reviewing several aspects of the Big Data world, let’s see what are the basic ingredients.

在回顧了大數據世界的幾個方面之后,讓我們看一下基本要素。

數據存儲) (Data (Storage))

The first thing you need is a place to store all your data. Unfortunately, there is not a single product to fit your needs that’s why you need to choose the right storage based on your use cases.

您需要的第一件事是一個存儲所有數據的地方。 不幸的是,沒有一種產品可以滿足您的需求,這就是為什么您需要根據用例選擇合適的存儲。

For real time data ingestion, it is common to use an append log to store the real time events, the most famous engine is Kafka. An alternative is Apache Pulsar. Both, provide streaming capabilities but also storage for your events. This is usually short term storage for hot data(remember about data temperature!) since it is not cost efficient. There are other tools such Apache NiFi used to ingest data which have its own storage. Eventually, from the append log the data is transferred to another storage that could be a database or a file system.

對于實時數據攝取 ,通常使用附加日志存儲實時事件,最著名的引擎是Kafka 。 一個替代方法是Apache Pulsar 。 兩者都提供流功能,還可以存儲事件。 這通常是熱數據的短期存儲(請記住數據溫度!),因為它不經濟高效。 還有其他一些工具,例如用于存儲數據的Apache NiFi ,它們都有自己的存儲。 最終,數據將從附加日志傳輸到另一個存儲,該存儲可以是數據庫或文件系統。

Massive Databases

海量數據庫

Hadoop HDFS is the most common format for data lakes, however; large scale databases can be used as a back end for your data pipeline instead of a file system; check my previous article on Massive Scale Databases for more information. In summary, databases such Cassandra, YugaByteDB or BigTable can hold and process large amounts of data much faster than a data lake can but not as cheap; however, the price gap between a data lake file system and a database is getting smaller and smaller each year; this is something that you need to consider as part of your Hadoop/NoHadoop decision. More and more companies are now choosing a big data database instead of a data lake for their data needs and using deep storage file system just for archival.

Hadoop HDFS是數據湖最常用的格式。 大型數據庫可以用作數據管道的后端,而不是文件系統。 查看我以前關于大規模規模數據庫的文章 想要查詢更多的信息。 總而言之,像Cassandra , YugaByteDB或BigTable這樣的數據庫可以保存和處理大量數據,其速度比數據湖快得多,但價格卻不便宜。 但是,數據湖文件系統與數據庫之間的價格差距逐年縮小。 這是您在Hadoop / NoHadoop決策中需要考慮的一部分。 現在,越來越多的公司選擇大數據數據庫而不是數據湖來滿足其數據需求,而僅將深存儲文件系統用于歸檔。

To summarize the databases and storage options outside of the Hadoop ecosystem to consider are:

總結要考慮的Hadoop生態系統之外的數據庫和存儲選項是:

Cassandra: NoSQL database that can store large amounts of data, provides eventual consistency and many configuration options. Great for OLTP but can be used for OLAP with pre computed aggregations (not flexible). An alternative is ScyllaDB which is much faster and better for OLAP (advanced scheduler)

Cassandra : NoSQL數據庫,可以存儲大量數據,提供最終的一致性和許多配置選項。 非常適合OLTP,但可用于帶有預先計算的聚合的OLAP(不靈活)。 一種替代方案是ScyllaDB ,對于OLAP ( 高級調度程序 ) 而言 ,它更快,更好。

YugaByteDB: Massive scale Relational Database that can handle global transactions. Your best option for relational data.

YugaByteDB :可以處理全局事務的大規模關系數據庫。 關系數據的最佳選擇。

MongoDB: Powerful document based NoSQL database, can be used for ingestion(temp storage) or as a fast data layer for your dashboards

MongoDB :強大的基于文檔的NoSQL數據庫,可用于提取(臨時存儲)或用作儀表板的快速數據層

InfluxDB for time series data.

InfluxDB用于時間序列數據。

Prometheus for monitoring data.

Prometheus用于監視數據。

ElasticSearch: Distributed inverted index that can store large amounts of data. Sometimes ignored by many or just used for log storage, ElasticSearch can be used for a wide range of use cases including OLAP analysis, machine learning, log storage, unstructured data storage and much more. Definitely a tool to have in your Big Data ecosystem.

ElasticSearch :分布式倒排索引,可以存儲大量數據。 有時,ElasticSearch被許多人忽略或僅用于日志存儲,可用于各種用例,包括OLAP分析,機器學習,日志存儲,非結構化數據存儲等等。 絕對是您在大數據生態系統中擁有的工具。

Remember the differences between SQL and NoSQL, in the NoSQL world, you do not model data, you model your queries.

記住SQL和NoSQL之間的區別, 在NoSQL世界中,您不對數據建模,而是對查詢建模。

Hadoop Databases

Hadoop數據庫

HBase is the most popular data base inside the Hadoop ecosystem. It can hold large amount of data in a columnar format. It is based on BigTable.

HBase是Hadoop生態系統中最受歡迎的數據庫。 它可以以列格式保存大量數據。 它基于BigTable 。

File Systems (Deep Storage)

文件系統 (深度存儲)

For data lakes, in the Hadoop ecosystem, HDFS file system is used. However, most cloud providers have replaced it with their own deep storage system such S3 or GCS.

對于數據湖 ,在Hadoop生態系統中,使用HDFS文件系統。 但是,大多數云提供商已將其替換為自己的深度存儲系統,例如S3或GCS 。

These file systems or deep storage systems are cheaper than data bases but just provide basic storage and do not provide strong ACID guarantees.

這些文件系統或深度存儲系統比數據庫便宜,但僅提供基本存儲,不提供強大的ACID保證。

You will need to choose the right storage for your use case based on your needs and budget. For example, you may use a database for ingestion if you budget permit and then once data is transformed, store it in your data lake for OLAP analysis. Or you may store everything in deep storage but a small subset of hot data in a fast storage system such as a relational database.

您將需要根據您的需求和預算為您的用例選擇合適的存儲。 例如,如果您的預算允許,則可以使用數據庫進行攝取,然后轉換數據后,將其存儲在數據湖中以進行OLAP分析。 或者,您可以將所有內容存儲在深度存儲中,但將一小部分熱數據存儲在關系數據庫等快速存儲系統中。

File Formats

檔案格式

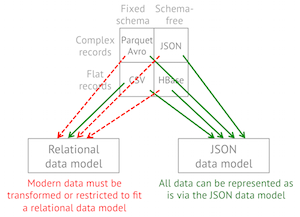

Another important decision if you use a HDFS is what format you will use to store your files. Note that deep storage systems store the data as files and different file formats and compression algorithms provide benefits for certain use cases. How you store the data in your data lake is critical and you need to consider the format, compression and especially how you partition your data.

如果使用HDFS,另一個重要的決定是將使用哪種格式存儲文件。 請注意,深度存儲系統將數據存儲為文件,并且不同的文件格式和壓縮算法為某些用例提供了好處。 如何在數據湖中存儲數據至關重要 ,您需要考慮格式 , 壓縮方式 ,尤其是如何 對數據進行 分區 。

The most common formats are CSV, JSON, AVRO, Protocol Buffers, Parquet, and ORC.

最常見的格式是CSV,JSON, AVRO , 協議緩沖區 , Parquet和ORC 。

Some things to consider when choosing the format are:

選擇格式時應考慮以下幾點:

The structure of your data: Some formats accepted nested data such JSON, Avro or Parquet and others do not. Even, the ones that do may not be highly optimized for it.

數據的結構 :某些格式可以接受嵌套數據,例如JSON,Avro或Parquet,而其他格式則不能。 甚至,可能沒有對其進行高度優化。

Performance: Some formats such Avro and Parquet perform better than other such JSON. Even between Avro and Parquet for different use cases one will be better than others. For example, since Parquet is a column based format it is great to query your data lake using SQL whereas Avro is better for ETL row level transformation.

性能 :Avro和Parquet等某些格式的性能優于其他JSON。 即使在Avro和Parquet的不同用例之間,一個也會比其他更好。 例如,由于Parquet是基于列的格式,因此使用SQL查詢數據湖非常有用,而Avro更適合ETL行級轉換。

Easy to read: Consider if you need to read the data or not. JSON or CSV are text formats and are human readable whereas more performant formats such parquet or avro are binary.

易于閱讀 :考慮是否需要讀取數據。 JSON或CSV是文本格式,并且易于閱讀,而功能更強的格式(例如鑲木地板或avro)是二進制格式。

Compression: Some formats offer higher compression rates than others.

壓縮 :某些格式比其他格式提供更高的壓縮率。

Schema evolution: Adding or removing fields is far more complicated in a data lake than in a database. Some formats like Avro or Parquet provide some degree of schema evolution which allows you to change the data schema and still query the data. Tools such Delta Lake format provide even better tools to deal with changes in Schemas.

模式演變 :在數據湖中添加或刪除字段要比在數據庫中復雜得多。 諸如Avro或Parquet之類的某些格式提供了某種程度的架構演變,使您可以更改數據架構并仍然查詢數據。 諸如Delta Lake格式的工具甚至提供了更好的工具來處理模式中的更改。

Compatibility: JSON or CSV are widely adopted and compatible with almost any tool while more performant options have less integration points.

兼容性 :JSON或CSV被廣泛采用并與幾乎所有工具兼容,而性能更高的選項具有較少的集成點。

As we can see, CSV and JSON are easy to use, human readable and common formats but lack many of the capabilities of other formats, making it too slow to be used to query the data lake. ORC and Parquet are widely used in the Hadoop ecosystem to query data whereas Avro is also used outside of Hadoop, especially together with Kafka for ingestion, it is very good for row level ETL processing. Row oriented formats have better schema evolution capabilities than column oriented formats making them a great option for data ingestion.

如我們所見,CSV和JSON易于使用,易于閱讀和通用格式,但是缺乏其他格式的許多功能,因此它太慢而無法用于查詢數據湖。 ORC和Parquet在Hadoop生態系統中被廣泛用于查詢數據,而Avro還在Hadoop之外使用,尤其是與Kafka一起用于提取時,對于行級ETL處理非常有用。 面向行的格式比面向列的格式具有更好的模式演化功能,這使它們成為數據提取的理想選擇。

Lastly, you need to also consider how to compress the data considering the trade off between file size and CPU costs. Some compression algorithms are faster but with bigger file size and others slower but with better compression rates. For more details check this article.

最后,您還需要考慮文件大小和CPU成本之間的權衡,如何壓縮數據 。 某些壓縮算法速度更快,但文件大小更大;另一些壓縮算法速度較慢,但??壓縮率更高。 有關更多詳細信息,請查看本文 。

Again, you need to review the considerations that we mentioned before and decide based on all the aspects we reviewed. Let’s go through some use cases as an example:

同樣,您需要查看我們之前提到的注意事項,并根據我們查看的所有方面進行決策。 讓我們以一些用例為例:

Use Cases

用例

You need to ingest real time data and storage somewhere for further processing as part of an ETL pipeline. If performance is important and budget is not an issue you could use Cassandra. The standard approach is to store it in HDFS using an optimized format as AVRO.

您需要在某處提取實時數據和存儲,以作為ETL管道的一部分進行進一步處理。 如果性能很重要并且預算不是問題,則可以使用Cassandra。 標準方法是使用優化格式AVRO將其存儲在HDFS中。

- You need to process your data and storage somewhere to be used by a highly interactive user facing application where latency is important (OLTP), you know the queries in advance. In this case use Cassandra or another database depending on the volume of your data. 您需要在某個地方處理數據和存儲,以供高度交互的面向用戶的應用程序使用,其中延遲很重要(OLTP),您需要提前知道查詢。 在這種情況下,請根據數據量使用Cassandra或其他數據庫。

- You need to serve your processed data to your user base, consistency is important and you do not know the queries in advance since the UI provides advanced queries. In this case you need a relational SQL data base, depending on your side a classic SQL DB such MySQL will suffice or you may need to use YugaByteDB or other relational massive scale database. 您需要將處理后的數據提供給您的用戶群,一致性很重要,并且由于UI提供了高級查詢,因此您不預先知道查詢。 在這種情況下,您需要一個關系型SQL數據庫,這取決于您身邊的經典SQL DB(例如MySQL)就足夠了,或者您可能需要使用YugaByteDB或其他關系型大規模數據庫。

- You need to store your processed data for OLAP analysis for your internal team so they can run ad-hoc queries and create reports. In this case, you can store the data in your deep storage file system in Parquet or ORC format. 您需要為內部團隊存儲處理后的數據以進行OLAP分析,以便他們可以運行臨時查詢并創建報告。 在這種情況下,您可以將數據以Parquet或ORC格式存儲在深度存儲文件系統中。

- You need to use SQL to run ad-hoc queries of historical data but you also need dashboards that need to respond in less than a second. In this case you need a hybrid approach where you store a subset of the data in a fast storage such as MySQL database and the historical data in Parquet format in the data lake. Then, use a query engine to query across different data sources using SQL. 您需要使用SQL來運行歷史數據的臨時查詢,但是您還需要儀表板,這些儀表板需要在不到一秒鐘的時間內做出響應。 在這種情況下,您需要一種混合方法,在這種方法中,您將數據的子集存儲在快速存儲中,例如MySQL數據庫,并將歷史數據以Parquet格式存儲在數據湖中。 然后,使用查詢引擎使用SQL跨不同的數據源進行查詢。

You need to perform really complex queries that need to respond in just a few milliseconds, you also may need to perform aggregations on read. In this case, use ElasticSearch to store the data or some newer OLAP system like Apache Pinot which we will discuss later.

您需要執行非常復雜的查詢,僅需幾毫秒即可響應,還可能需要在讀取時執行聚合。 在這種情況下,請使用ElasticSearch存儲數據或某些較新的OLAP系統(如Apache Pinot) ,稍后我們將對其進行討論。

- You need to search unstructured text. In this case use ElasticSearch. 您需要搜索非結構化文本。 在這種情況下,請使用ElasticSearch。

基礎設施 (Infrastructure)

Your current infrastructure can limit your options when deciding which tools to use. The first question to ask is: Cloud vs On-Prem. Cloud providers offer many options and flexibility. Furthermore, they provide Serverless solutions for your Big Data needs which are easier to manage and monitor. Definitely, the cloud is the place to be for Big Data; even for the Hadoop ecosystem, cloud providers offer managed clusters and cheaper storage than on premises. Check my other articles regarding cloud solutions.

當前的基礎架構會在決定使用哪些工具時限制您的選擇。 要問的第一個問題是: Cloud vs On-Prem 。 云提供商提供了許多選擇和靈活性。 此外,它們為您的大數據需求提供了無服務器解決方案,更易于管理和監控。 無疑,云是存放大數據的地方。 即使對于Hadoop生態系統, 云提供商也提供托管群集和比本地存儲便宜的存儲。 查看我有關云解決方案的其他文章。

If you are running on premises you should think about the following:

如果您在場所中運行,則應考慮以下事項:

Where do I run my workloads? Definitely Kubernetes or Apache Mesos provide a unified orchestration framework to run your applications in a unified way. The deployment, monitoring and alerting aspects will be the same regardless of the framework you use. In contrast, if you run on bare metal, you need to think and manage all the cross cutting aspects of your deployments. In this case, managed clusters and tools will suit better than libraries and frameworks.

我在哪里運行工作負載? 絕對是Kubernetes或Apache Mesos 提供統一的編排框架,以統一的方式運行您的應用程序。 無論使用哪種框架,部署,監視和警報方面都是相同的。 相反,如果您使用裸機運行,則需要考慮和管理部署的所有交叉方面。 在這種情況下,托管集群和工具將比庫和框架更適合。

What type of hardware do I have? If you have specialized hardware with fast SSDs and high-end servers, then you may be able to deploy massive databases like Cassandra and get great performance. If you just own commodity hardware, the Hadoop ecosystem will be a better option. Ideally, you want to have several types of servers for different workloads; the requirements for Cassandra are far different from Hadoop tools such Spark.

我擁有哪種類型的硬件? 如果您具有帶有快速SSD和高端服務器的專用硬件,則可以部署Cassandra等大型數據庫并獲得出色的性能。 如果您僅擁有商品硬件,那么Hadoop生態系統將是一個更好的選擇。 理想情況下,您希望針對不同的工作負載使用多種類型的服務器。 Cassandra的要求與Spark等Hadoop工具有很大不同。

監控和警報 (Monitoring and Alerting)

The next ingredient is essential for the success of your data pipeline. In the big data world, you need constant feedback about your processes and your data. You need to gather metrics, collect logs, monitor your systems, create alerts, dashboards and much more.

下一個要素對于數據管道的成功至關重要。 在大數據世界中, 您需要有關流程和數據的持續反饋 。 您需要收集指標,收集日志,監視系統,創建警報 , 儀表板等等。

Use open source tools like Prometheus and Grafana for monitor and alerting. Use log aggregation technologies to collect logs and store them somewhere like ElasticSearch.

使用Prometheus和Grafana等開源工具進行監視和警報。 使用日志聚合技術來收集日志并將其存儲在ElasticSearch之類的地方 。

Leverage on cloud providers capabilities for monitoring and alerting when possible. Depending on your platform you will use a different set of tools. For Cloud Serverless platform you will rely on your cloud provider tools and best practices. For Kubernetes, you will use open source monitor solutions or enterprise integrations. I really recommend this website where you can browse and check different solutions and built your own APM solution.

利用云提供商的功能進行監視和警報(如果可能)。 根據您的平臺,您將使用不同的工具集。 對于無云服務器平臺,您將依靠您的云提供商工具和最佳實踐。 對于Kubernetes,您將使用開源監控器解決方案或企業集成。 我真的建議您在此網站上瀏覽并查看其他解決方案,并構建自己的APM解決方案。

Another thing to consider in the Big Data word is auditability and accountability. Because of different regulations, you may be required to trace the data, capturing and recording every change as data flows through the pipeline. This is called data provenance or lineage. Tools like Apache Atlas are used to control, record and govern your data. Other tools such Apache NiFi supports data lineage out of the box. For real time traces, check Open Telemetry or Jaeger.

大數據一詞中要考慮的另一件事是可審計性和問責制。 由于法規不同,您可能需要跟蹤數據,捕獲和記錄數據流經管道時的所有更改。 這稱為數據來源或沿襲 。 諸如Apache Atlas之類的工具用于控制,記錄和管理您的數據。 其他工具如Apache NiFi也支持開箱即用的數據沿襲。 有關實時跟蹤,請檢查“ 打開遙測” 或“ Jaeger” 。

For Hadoop use, Ganglia.

對于Hadoop,請使用Ganglia 。

安全 (Security)

Apache Ranger provides a unified security monitoring framework for your Hadoop platform. Provides centralized security administration to manage all security related tasks in a central UI. It provides authorization using different methods and also full auditability across the entire Hadoop platform.

阿帕奇游俠 為您的Hadoop平臺提供統一的安全監控框架。 提供集中的安全性管理,以在中央UI中管理所有與安全性相關的任務。 它使用不同的方法提供授權,并在整個Hadoop平臺上提供全面的可審核性。

人 (People)

Your team is the key to success. Big Data Engineers can be difficult to find. Invest in training, upskilling, workshops. Remove silos and red tape, make iterations simple and use Domain Driven Design to set your team boundaries and responsibilities.

您的團隊是成功的關鍵。 大數據工程師可能很難找到。 投資于培訓,技能提升,研討會。 刪除孤島和繁文tape節,簡化迭代過程,并使用域驅動設計來設置團隊邊界和職責。

Fog Big Data you will have two broad categories:

霧大數據您將分為兩大類 :

Data Engineers for ingestion, enrichment and transformation. These engineers have a strong development and operational background and are in charge of creating the data pipeline. Developers, Administrators, DevOps specialists, etc will fall in this category.

數據工程師進行攝取,豐富和轉換。 這些工程師具有強大的開發和運營背景 ,并負責創建數據管道。 開發人員,管理員,DevOps專家等將屬于此類別。

Data Scientist: These can be BI specialists, data analysts, etc. in charge of generation reports, dashboards and gathering insights. Focused on OLAP and with strong business understanding, these people gather the data which will be used to make critical business decisions. Strong in SQL and visualization but weak in software development. Machine Learning specialists may also fall into this category.

數據科學家 :可以是BI專家,數據分析師等,負責生成報告,儀表板和收集見解。 這些人專注于OLAP并具有深刻的業務理解,收集了將用于制定關鍵業務決策的數據。 SQL和可視化方面很強,但是軟件開發方面很弱。 機器學習專家也可能屬于此類。

預算 (Budget)

This is an important consideration, you need money to buy all the other ingredients, and this is a limited resource. If you have unlimited money you could deploy a massive database and use it for your big data needs without many complications but it will cost you. So each technology mentioned in this article requires people with the skills to use it, deploy it and maintain it. Some technologies are more complex than others, so you need to take this into account.

這是一個重要的考慮因素,您需要金錢來購買所有其他成分,并且這是有限的資源。 如果您擁有無限的資金,則可以部署海量數據庫并將其用于大數據需求而不會帶來很多麻煩,但這會花費您大量資金。 因此,本文中提到的每種技術都需要具備使用,部署和維護技術的人員。 有些技術比其他技術更復雜,因此您需要考慮到這一點。

食譜 (Recipe)

Now that we have the ingredients, let’s cook our big data recipe. In a nutshell the process is simple; you need to ingest data from different sources, enrich it, store it somewhere, store the metadata(schema), clean it, normalize it, process it, quarantine bad data, optimally aggregate data and finally store it somewhere to be consumed by downstream systems.

現在我們已經掌握了配料,讓我們來準備大數據食譜。 簡而言之,該過程很簡單; 您需要從不同來源提取數據,對其進行充實,將其存儲在某個位置,存儲元數據(模式),對其進行清理,對其進行規范化,對其進行處理,隔離不良數據,以最佳方式聚合數據并將其最終存儲在某個位置以供下游系統使用。

Let’s have a look a bit more in detail to each step…

讓我們更詳細地了解每個步驟…

攝取 (Ingestion)

The first step is to get the data, the goal of this phase is to get all the data you need and store it in raw format in a single repository. This is usually owned by other teams who push their data into Kafka or a data store.

第一步是獲取數據, 此階段的目標是獲取所需的所有數據并將其以原始格式存儲在單個存儲庫中。 這通常由將其數據推送到Kafka或數據存儲中的其他團隊擁有。

For simple pipelines with not huge amounts of data you can build a simple microservices workflow that can ingest, enrich and transform the data in a single pipeline(ingestion + transformation), you may use tools such Apache Airflow to orchestrate the dependencies. However, for Big Data it is recommended that you separate ingestion from processing, massive processing engines that can run in parallel are not great to handle blocking calls, retries, back pressure, etc. So, it is recommended that all the data is saved before you start processing it. You should enrich your data as part of the ingestion by calling other systems to make sure all the data, including reference data has landed into the lake before processing.

對于沒有大量數據的簡單管道,您可以構建一個簡單的微服務工作流,該工作流可以在單個管道中攝取,豐富和轉換數據(注入+轉換),您可以使用Apache Airflow之類的工具來編排依賴性。 但是,對于大數據,建議您將攝取與處理分開 ,可以并行運行的海量處理引擎對于處理阻塞調用,重試,背壓等效果不佳。因此,建議在保存所有數據之前您開始處理它。 作為調用的一部分,您應該充實自己的數據,方法是調用其他系統以確保所有數據(包括參考數據)在處理之前已降落到湖泊中。

There are two modes of ingestion:

有兩種攝取方式:

Pull: Pull the data from somewhere like a database, file system, a queue or an API

拉 :從某處拉出數據等的數據庫,文件系統,一個隊列或API

Push: Applications can also push data into your lake but it is always recommended to have a messaging platform as Kafka in between. A common pattern is Change Data Capture(CDC) which allows us to move data into the lake in real time from databases and other systems.

推送 :應用程序也可以將數據推送到您的湖泊中,但始終建議在兩者之間使用一個消息傳遞平臺,例如Kafka 。 常見的模式是變更數據捕獲 ( CDC ),它使我們能夠將數據從數據庫和其他系統實時移入湖泊。

As we already mentioned, It is extremely common to use Kafka or Pulsar as a mediator for your data ingestion to enable persistence, back pressure, parallelization and monitoring of your ingestion. Then, use Kafka Connect to save the data into your data lake. The idea is that your OLTP systems will publish events to Kafka and then ingest them into your lake. Avoid ingesting data in batch directly through APIs; you may call HTTP end-points for data enrichment but remember that ingesting data from APIs it’s not a good idea in the big data word because it is slow, error prone(network issues, latency…) and can bring down source systems. Although, APIs are great to set domain boundaries in the OLTP world, these boundaries are set by data stores(batch) or topics(real time) in Kafka in the Big Data word. Of course, it always depends on the size of your data but try to use Kafka or Pulsar when possible and if you do not have any other options; pull small amounts of data in a streaming fashion from the APIs, not in batch. For databases, use tools such Debezium to stream data to Kafka (CDC).

正如我們已經提到的,使用非常普遍 卡夫卡 或 脈沖星 作為數據攝取的中介 ,以實現持久性,背壓,并行化和監測攝取。 然后,使用Kafka Connect將數據保存到您的數據湖中。 這個想法是您的OLTP系統將事件發布到Kafka,然后將其吸收到您的湖泊中。 避免直接通過API批量提取數據 ; 您可能會調用HTTP端點進行數據充實,但請記住,從API提取數據并不是大數據中的一個好主意,因為它速度慢,容易出錯(網絡問題,延遲等),并且可能導致源系統崩潰。 盡管API非常適合在OLTP世界中設置域邊界,但是這些邊界是由大數據字中的Kafka中的數據存儲(批)或主題(實時)設置的。 當然,它總是取決于您的數據大小,但是如果可能,如果沒有其他選擇,請嘗試使用Kafka或Pulsar 。 以流式方式從API中提取少量數據,而不是批量提取。 對于數據庫,請使用Debezium等工具將數據流式傳輸到Kafka(CDC)。

To minimize dependencies, it is always easier if the source system push data to Kafka rather than your team pulling the data since you will be tightly coupled with the other source systems. If this is not possible and you still need to own the ingestion process, we can look at two broad categories for ingestion:

為了最大程度地減少依賴性,如果源系統將數據推送到Kafka而不是您的團隊提取數據,則總是比較容易,因為您將與其他源系統緊密耦合。 如果無法做到這一點,并且您仍然需要掌握攝取過程,那么我們可以考慮兩種主要的攝取類別:

Un Managed Solutions: These are applications that you develop to ingest data into your data lake; you can run them anywhere. This is very common when ingesting data from APIs or other I/O blocking systems that do not have an out of the box solution, or when you are not using the Hadoop ecosystem. The idea is to use streaming libraries to ingest data from different topics, end-points, queues, or file systems. Because you are developing apps, you have full flexibility. Most libraries provide retries, back pressure, monitoring, batching and much more. This is a code yourself approach, so you will need other tools for orchestration and deployment. You get more control and better performance but more effort involved. You can have a single monolith or microservices communicating using a service bus or orchestrated using an external tool. Some of the libraries available are Apache Camel or Akka Ecosystem (Akka HTTP + Akka Streams + Akka Cluster + Akka Persistence + Alpakka). You can deploy it as a monolith or as microservices depending on how complex is the ingestion pipeline. If you use Kafka or Pulsar, you can use them as ingestion orchestration tools to get the data and enrich it. Each stage will move data to a new topic creating a DAG in the infrastructure itself by using topics for dependency management. If you do not have Kafka and you want a more visual workflow you can use Apache Airflow to orchestrate the dependencies and run the DAG. The idea is to have a series of services that ingest and enrich the date and then, store it somewhere. After each step is complete, the next one is executed and coordinated by Airflow. Finally, the data is stored in some kind of storage.

Un Managed Solutions :這些是您開發的應用程序,用于將數據提取到數據湖中; 您可以在任何地方運行它們。 從沒有現成解決方案的API或其他I / O阻止系統中提取數據時 ,或者在不使用Hadoop生態系統時,這非常常見 。 這個想法是使用流媒體庫從不同的主題,端點,隊列或文件系統中攝取數據。 因為您正在開發應用程序,所以您具有完全的靈活性 。 大多數庫提供重試,背壓,監視,批處理等等。 這是您自己的代碼方法,因此您將需要其他工具來進行編排和部署。 您將獲得更多的控制權和更好的性能,但需要更多的精力 。 您可以使用服務總線使單個整體或微服務進行通信,或者使用外部工具進行協調。 一些可用的庫是Apache Camel或Akka Ecosystem ( Akka HTTP + Akka Streams + Akka群集 + Akka Persistence + Alpakka )。 您可以將其部署為整體或微服務,具體取決于接收管道的復雜程度。 如果您使用Kafka或Pulsar ,則可以將它們用作獲取編排工具來獲取數據并豐富數據。 每個階段都將數據移動到一個新主題,通過使用主題進行依賴性管理在基礎架構中創建DAG 。 如果您沒有Kafka,并且想要一個更直觀的工作流程,則可以使用Apache Airflow來協調依賴關系并運行DAG。 這個想法是要提供一系列服務來攝取和豐富日期,然后將其存儲在某個地方。 完成每個步驟后,將執行下一個步驟并由Airflow進行協調。 最后,數據存儲在某種存儲中。

Managed Solutions: In this case you can use tools which are deployed in your cluster and used for ingestion. This is common in the Hadoop ecosystem where you have tools such Sqoop to ingest data from your OLTP databases and Flume to ingest streaming data. These tools provide monitoring, retries, incremental load, compression and much more.

托管解決方案 :在這種情況下,您可以使用部署在群集中并用于提取的工具。 這在Hadoop生態系統中很常見,在該生態系統中,您擁有諸如Sqoop之類的工具來從OLTP數據庫中獲取數據,而Flume則具有從流中獲取數據的能力。 這些工具提供監視,重試,增量負載,壓縮等功能。

Apache NiFi

Apache NiFi

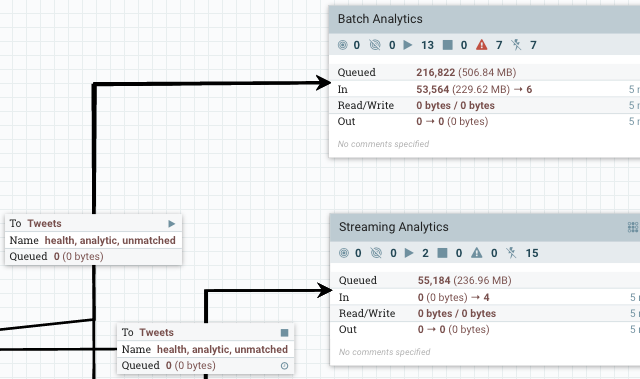

NiFi is one of these tools that are difficult to categorize. It is a beast on its own. It can be used for ingestion, orchestration and even simple transformations. So in theory, it could solve simple Big Data problems. It is a managed solution. It has a visual interface where you can just drag and drop components and use them to ingest and enrich data. It has over 300 built in processors which perform many tasks and you can extend it by implementing your own.

NiFi是其中很難分類的工具之一。 它本身就是野獸。 它可以用于攝取,編排甚至簡單的轉換。 因此,從理論上講,它可以解決簡單的大數據問題。 這是一個托管解決方案 。 它具有可視界面 ,您可以在其中拖放組件并使用它們來攝取和豐富數據。 它具有300多個內置處理器 ,可以執行許多任務,您可以通過實現自己的處理器來擴展它。

It has its own architecture, so it does not use any database HDFS but it has integrations with many tools in the Hadoop Ecosystem. You can call APIs, integrate with Kafka, FTP, many file systems and cloud storage. You can manage the data flow performing routing, filtering and basic ETL. For some use cases, NiFi may be all you need.

它具有自己的體系結構,因此它不使用任何數據庫HDFS,但已與Hadoop生態系統中的許多工具集成 。 您可以調用API,并與Kafka,FTP,許多文件系統和云存儲集成。 您可以管理執行路由,過濾和基本ETL的數據流。 對于某些用例,您可能只需要NiFi。

However, NiFi cannot scale beyond a certain point, because of the inter node communication more than 10 nodes in the cluster become inefficient. It tends to scale vertically better, but you can reach its limit, especially for complex ETL. However, you can integrate it with tools such Spark to process the data. NiFi is a great tool for ingesting and enriching your data.

但是,由于節點間通信,群集中的10個以上節點效率低下,因此NiFi無法擴展到某個特定點。 它傾向于在垂直方向更好地擴展,但是您可以達到其極限,尤其是對于復雜的ETL。 但是,您可以將其與Spark等工具集成以處理數據。 NiFi是吸收和豐富數據的絕佳工具。

Modern OLAP engines such Druid or Pinot also provide automatic ingestion of batch and streaming data, we will talk about them in another section.

諸如Druid或Pinot之類的現代OLAP引擎還提供了自動提取批處理和流數據的功能,我們將在另一部分中討論它們。

You can also do some initial validation and data cleaning during the ingestion, as long as they are not expensive computations or do not cross over the bounded context, remember that a null field may be irrelevant to you but important for another team.

您也可以在提取期間進行一些初始驗證和數據清理 ,只要它們不是昂貴的計算或不跨越邊界上下文,請記住,空字段可能對您無關緊要,但對另一個團隊很重要。

The last step is to decide where to land the data, we already talked about this. You can use a database or a deep storage system. For a data lake, it is common to store it in HDFS, the format will depend on the next step; if you are planning to perform row level operations, Avro is a great option. Avro also supports schema evolution using an external registry which will allow you to change the schema for your ingested data relatively easily.

最后一步是確定數據的放置位置,我們已經討論過了。 您可以使用數據庫或深度存儲系統。 對于數據湖,通常將其存儲在HDFS中,其格式取決于下一步;請參見下一步。 如果您打算執行行級操作, Avro是一個不錯的選擇。 Avro還使用外部注冊表支持架構演變,這將使您可以相對輕松地更改所攝取數據的架構。

元數據 (Metadata)

The next step after storing your data, is save its metadata (information about the data itself). The most common metadata is the schema. By using an external metadata repository, the different tools in your data lake or data pipeline can query it to infer the data schema.

存儲數據后,下一步是保存其元數據(有關數據本身的信息)。 最常見的元數據是架構 。 通過使用外部元數據存儲庫,數據湖或數據管道中的不同工具可以查詢它以推斷數據模式。

If you use Avro for raw data, then the external registry is a good option. This way you can easily de couple ingestion from processing.

如果將Avro用作原始數據,則外部注冊表是一個不錯的選擇。 這樣,您就可以輕松地將處理過程中的提取分離。

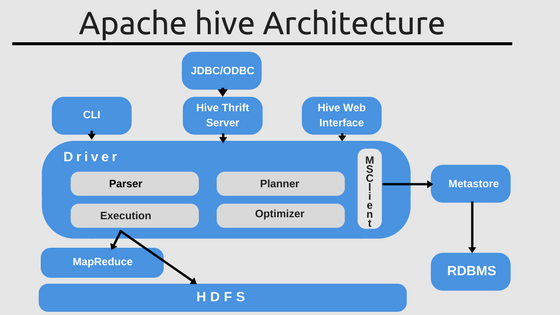

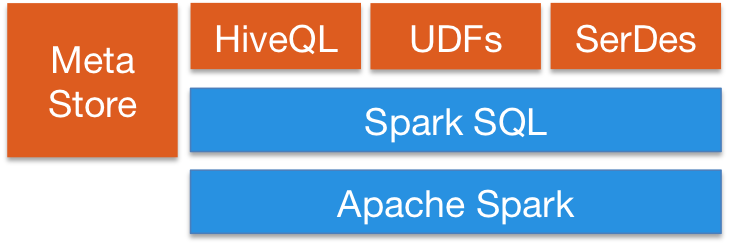

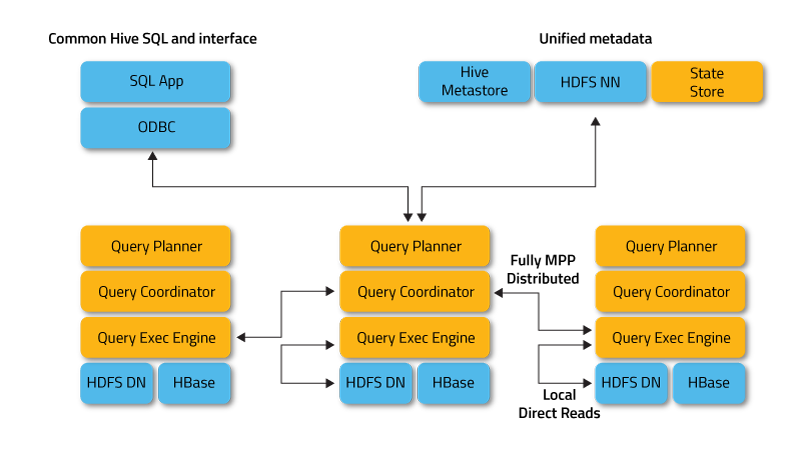

Once the data is ingested, in order to be queried by OLAP engines, it is very common to use SQL DDL. The most used data lake/data warehouse tool in the Hadoop ecosystem is Apache Hive, which provides a metadata store so you can use the data lake like a data warehouse with a defined schema. You can run SQL queries on top of Hive and connect many other tools such Spark to run SQL queries using Spark SQL. Hive is an important tool inside the Hadoop ecosystem providing a centralized meta database for your analytical queries. Other tools such Apache Tajo are built on top of Hive to provide data warehousing capabilities in your data lake.

數據一旦被攝取,為了由OLAP引擎查詢,通常會使用SQL DDL 。 Hadoop生態系統中最常用的數據湖/數據倉庫工具是Apache Hive ,它提供了元數據存儲,因此您可以像定義了架構的數據倉庫一樣使用數據湖。 You can run SQL queries on top of Hive and connect many other tools such Spark to run SQL queries using Spark SQL . Hive is an important tool inside the Hadoop ecosystem providing a centralized meta database for your analytical queries. Other tools such Apache Tajo are built on top of Hive to provide data warehousing capabilities in your data lake.

Apache Impala is a native analytic database for Hadoop which provides metadata store, you can still connect to Hive for metadata using Hcatalog.

Apache Impala is a native analytic database for Hadoop which provides metadata store, you can still connect to Hive for metadata using Hcatalog .

Apache Phoenix has also a metastore and can work with Hive. Phoenix focuses on OLTP enabling queries with ACID properties to the transactions. It is flexible and provides schema-on-read capabilities from the NoSQL world by leveraging HBase as its backing store. Apache Druid or Pinot also provide metadata store.

Apache Phoenix has also a metastore and can work with Hive. Phoenix focuses on OLTP enabling queries with ACID properties to the transactions. It is flexible and provides schema-on-read capabilities from the NoSQL world by leveraging HBase as its backing store. Apache Druid or Pinot also provide metadata store.

處理中 (Processing)

The goal of this phase is to clean, normalize, process and save the data using a single schema. The end result is a trusted data set with a well defined schema.

The goal of this phase is to clean, normalize, process and save the data using a single schema. The end result is a trusted data set with a well defined schema.

Generally, you would need to do some kind of processing such as:

Generally, you would need to do some kind of processing such as:

Validation: Validate data and quarantine bad data by storing it in a separate storage. Send alerts when a certain threshold is reached based on your data quality requirements.

Validation : Validate data and quarantine bad data by storing it in a separate storage. Send alerts when a certain threshold is reached based on your data quality requirements.

Wrangling and Cleansing: Clean your data and store it in another format to be further processed, for example replace inefficient JSON with Avro.

Wrangling and Cleansing : Clean your data and store it in another format to be further processed, for example replace inefficient JSON with Avro.

Normalization and Standardization of values

Normalization and Standardization of values

Rename fields

Rename fields

- … …

Remember, the goal is to create a trusted data set that later can be used for downstream systems. This is a key role of a data engineer. This can be done in a stream or batch fashion.

Remember, the goal is to create a trusted data set that later can be used for downstream systems. This is a key role of a data engineer. This can be done in a stream or batch fashion.

The pipeline processing can be divided in three phases in case of batch processing:

The pipeline processing can be divided in three phases in case of batch processing :

Pre Processing Phase: If the raw data is not clean or not in the right format, you need to pre process it. This phase includes some basic validation, but the goal is to prepare the data to be efficiently processed for the next stage. In this phase, you should try to flatten the data and save it in a binary format such Avro. This will speed up further processing. The idea is that the next phase will perform row level operations, and nested queries are expensive, so flattening the data now will improve the next phase performance.

Pre Processing Phase : If the raw data is not clean or not in the right format, you need to pre process it. This phase includes some basic validation, but the goal is to prepare the data to be efficiently processed for the next stage. In this phase, you should try to flatten the data and save it in a binary format such Avro. This will speed up further processing. The idea is that the next phase will perform row level operations, and nested queries are expensive, so flattening the data now will improve the next phase performance.

Trusted Phase: Data is validated, cleaned, normalized and transformed to a common schema stored in Hive. The goal is to create a trusted common data set understood by the data owners. Typically, a data specification is created and the role of the data engineer is to apply transformations to match the specification. The end result is a data set in Parquet format that can be easily queried. It is critical that you choose the right partitions and optimize the data to perform internal queries. You may want to partially pre compute some aggregations at this stage to improve query performance.

Trusted Phase : Data is validated, cleaned, normalized and transformed to a common schema stored in Hive . The goal is to create a trusted common data set understood by the data owners. Typically, a data specification is created and the role of the data engineer is to apply transformations to match the specification. The end result is a data set in Parquet format that can be easily queried. It is critical that you choose the right partitions and optimize the data to perform internal queries. You may want to partially pre compute some aggregations at this stage to improve query performance.

Reporting Phase: This step is optional but often required. Unfortunately, when using a data lake, a single schema will not serve all use cases; this is one difference between a data warehouse and data lake. Querying HDFS is not as efficient as a database or data warehouse, so further optimizations are required. In this phase, you may need to denormalize the data to store it using different partitions so it can be queried more efficiently by the different stakeholders. The idea is to create different views optimized for the different downstream systems (data marts). In this phase you can also compute aggregations if you do not use an OLAP engine (see next section). The trusted phase does not know anything about who will query the data, this phase optimizes the data for the consumers. If a client is highly interactive, you may want to introduce a fast storage layer in this phase like a relational database for fast queries. Alternatively you can use OLAP engines which we will discuss later.

Reporting Phase : This step is optional but often required. Unfortunately, when using a data lake, a single schema will not serve all use cases ; this is one difference between a data warehouse and data lake. Querying HDFS is not as efficient as a database or data warehouse, so further optimizations are required. In this phase, you may need to denormalize the data to store it using different partitions so it can be queried more efficiently by the different stakeholders. The idea is to create different views optimized for the different downstream systems ( data marts ). In this phase you can also compute aggregations if you do not use an OLAP engine (see next section). The trusted phase does not know anything about who will query the data, this phase optimizes the data for the consumers . If a client is highly interactive, you may want to introduce a fast storage layer in this phase like a relational database for fast queries. Alternatively you can use OLAP engines which we will discuss later.

For streaming the logic is the same but it will run inside a defined DAG in a streaming fashion. Spark allows you to join stream with historical data but it has some limitations. We will discuss later on OLAP engines, which are better suited to merge real time with historical data.

For streaming the logic is the same but it will run inside a defined DAG in a streaming fashion. Spark allows you to join stream with historical data but it has some limitations . We will discuss later on OLAP engines , which are better suited to merge real time with historical data.

Processing Frameworks

Processing Frameworks

Some of the tools you can use for processing are:

Some of the tools you can use for processing are:

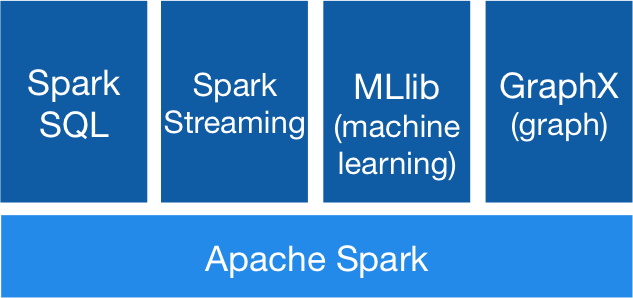

Apache Spark: This is the most well known framework for batch processing. Part of the Hadoop ecosystem, it is a managed cluster which provides incredible parallelism, monitoring and a great UI. It also supports stream processing (structural streaming). Basically Spark runs MapReduce jobs in memory increasing up to 100x times regular MapReduce performance. It integrates with Hive to support SQL and can be used to create Hive tables, views or to query data. It has lots of integrations, supports many formats and has a huge community. It is supported by all cloud providers. It can run on YARN as part of a Hadoop cluster but also in Kubernetes and other platforms. It has many libraries for specific use cases such SQL or machine learning.

Apache Spark : This is the most well known framework for batch processing. Part of the Hadoop ecosystem, it is a managed cluster which provides incredible parallelism , monitoring and a great UI. It also supports stream processing ( structural streaming ). Basically Spark runs MapReduce jobs in memory increasing up to 100x times regular MapReduce performance. It integrates with Hive to support SQL and can be used to create Hive tables, views or to query data. It has lots of integrations, supports many formats and has a huge community. It is supported by all cloud providers. It can run on YARN as part of a Hadoop cluster but also in Kubernetes and other platforms. It has many libraries for specific use cases such SQL or machine learning.

Apache Flink: The first engine to unify batch and streaming but heavily focus on streaming. It can be used as a backbone for microservices like Kafka. It can run on YARN as part of a Hadoop cluster but since its inception has been also optimized for other platforms like Kubernetes or Mesos. It is extremely fast and provides real time streaming, making it a better option than Spark for low latency stream processing, especially for stateful streams. It also has libraries for SQL, Machine Learning and much more.

Apache Flink : The first engine to unify batch and streaming but heavily focus on streaming . It can be used as a backbone for microservices like Kafka. It can run on YARN as part of a Hadoop cluster but since its inception has been also optimized for other platforms like Kubernetes or Mesos. It is extremely fast and provides real time streaming, making it a better option than Spark for low latency stream processing, especially for stateful streams. It also has libraries for SQL, Machine Learning and much more.

Apache Storm: Apache Storm is a free and open source distributed real-time computation system.It focuses on streaming and it is a managed solution part of the Hadoop ecosystem. It is scalable, fault-tolerant, guarantees your data will be processed, and is easy to set up and operate.

Apache Storm : Apache Storm is a free and open source distributed real-time computation system.It focuses on streaming and it is a managed solution part of the Hadoop ecosystem. It is scalable, fault-tolerant, guarantees your data will be processed, and is easy to set up and operate.

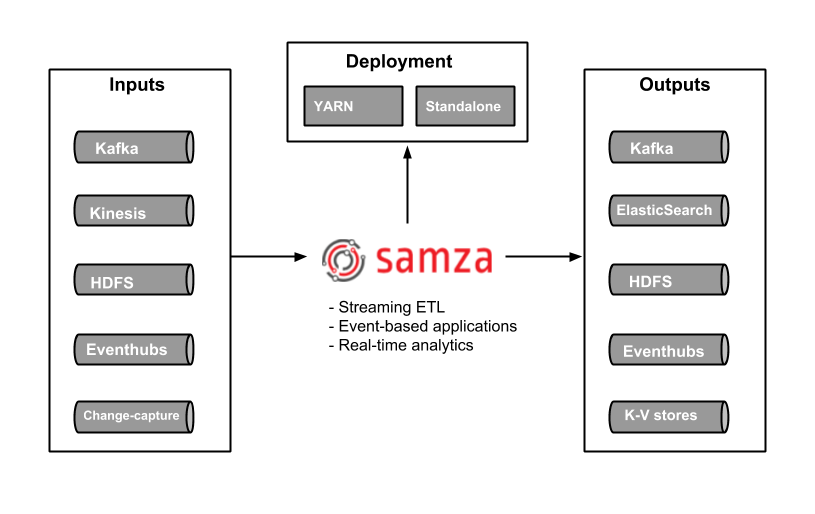

Apache Samza: Another great stateful stream processing engine. Samza allows you to build stateful applications that process data in real-time from multiple sources including Apache Kafka. Managed solution part of the Hadoop Ecosystem that runs on top of YARN.

Apache Samza : Another great stateful stream processing engine. Samza allows you to build stateful applications that process data in real-time from multiple sources including Apache Kafka. Managed solution part of the Hadoop Ecosystem that runs on top of YARN.

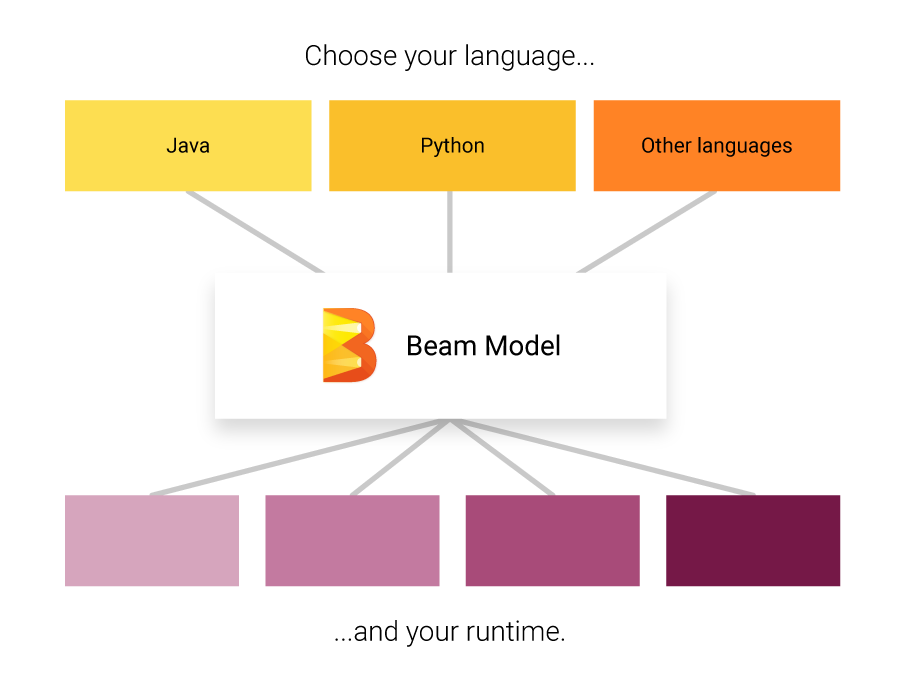

Apache Beam: Apache Beam it is not an engine itself but a specification of an unified programming model that brings together all the other engines. It provides a programming model that can be used with different languages, so developers do not have to learn new languages when dealing with big data pipelines. Then, it plugs different back ends for the processing step that can run on the cloud or on premises. Beam supports all the engines mentioned before and you can easily switch between them and run them in any platform: cloud, YARN, Mesos, Kubernetes. If you are starting a new project, I really recommend starting with Beam to be sure your data pipeline is future proof.

Apache Beam : Apache Beam it is not an engine itself but a specification of an unified programming model that brings together all the other engines. It provides a programming model that can be used with different languages , so developers do not have to learn new languages when dealing with big data pipelines. Then, it plugs different back ends for the processing step that can run on the cloud or on premises. Beam supports all the engines mentioned before and you can easily switch between them and run them in any platform: cloud, YARN, Mesos, Kubernetes. If you are starting a new project, I really recommend starting with Beam to be sure your data pipeline is future proof.

By the end of this processing phase, you have cooked your data and is now ready to be consumed!, but in order to cook the chef must coordinate with his team…

By the end of this processing phase, you have cooked your data and is now ready to be consumed!, but in order to cook the chef must coordinate with his team…

編排 (Orchestration)

Data pipeline orchestration is a cross cutting process which manages the dependencies between all the other tasks. If you use stream processing you need to orchestrate the dependencies of each streaming app, for batch, you need to schedule and orchestrate it job.

Data pipeline orchestration is a cross cutting process which manages the dependencies between all the other tasks. If you use stream processing you need to orchestrate the dependencies of each streaming app, for batch, you need to schedule and orchestrate it job.

Tasks and applications may fail, so you need a way to schedule, reschedule, replay, monitor, retry and debug your whole data pipeline in an unified way.

Tasks and applications may fail, so you need a way to schedule , reschedule, replay , monitor , retry and debug your whole data pipeline in an unified way.

Some of the options are:

Some of the options are:

Apache Oozie: Oozie it’s a scheduler for Hadoop, jobs are created as DAGs and can be triggered by time or data availability. It has integrations with ingestion tools such as Sqoop and processing frameworks such Spark.

Apache Oozie : Oozie it's a scheduler for Hadoop, jobs are created as DAGs and can be triggered by time or data availability. It has integrations with ingestion tools such as Sqoop and processing frameworks such Spark.

Apache Airflow: Airflow is a platform that allows to schedule, run and monitor workflows. Uses DAGs to create complex workflows. Each node in the graph is a task, and edges define dependencies among the tasks. Airflow scheduler executes your tasks on an array of workers while following the specified dependencies described by you. It generates the DAG for you maximizing parallelism. The DAGs are written in Python, so you can run them locally, unit test them and integrate them with your development workflow. It also supports SLAs and alerting. Luigi is an alternative to Airflow with similar functionality but Airflow has more functionality and scales up better than Luigi.

Apache Airflow : Airflow is a platform that allows to schedule, run and monitor workflows . Uses DAGs to create complex workflows. Each node in the graph is a task, and edges define dependencies among the tasks. Airflow scheduler executes your tasks on an array of workers while following the specified dependencies described by you. It generates the DAG for you maximizing parallelism . The DAGs are written in Python , so you can run them locally, unit test them and integrate them with your development workflow. It also supports SLAs and alerting . Luigi is an alternative to Airflow with similar functionality but Airflow has more functionality and scales up better than Luigi.

Apache NiFi: NiFi can also schedule jobs, monitor, route data, alert and much more. It is focused on data flow but you can also process batches. It runs outside of Hadoop but can trigger Spark jobs and connect to HDFS/S3.

Apache NiFi : NiFi can also schedule jobs, monitor, route data, alert and much more. It is focused on data flow but you can also process batches. It runs outside of Hadoop but can trigger Spark jobs and connect to HDFS/S3.

Query your data (Query your data)

Now that you have your cooked recipe, it is time to finally get the value from it. By this point, you have your data stored in your data lake using some deep storage such HDFS in a queryable format such Parquet or in a OLAP database.

Now that you have your cooked recipe, it is time to finally get the value from it. By this point, you have your data stored in your data lake using some deep storage such HDFS in a queryable format such Parquet or in a OLAP database .

There are a wide range of tools used to query the data, each one has its advantages and disadvantages. Most of them focused on OLAP but few are also optimized for OLTP. Some use standard formats and focus only on running the queries whereas others use their own format/storage to push processing to the source to improve performance. Some are optimized for data warehousing using star or snowflake schema whereas others are more flexible. To summarize these are the different considerations:

There are a wide range of tools used to query the data, each one has its advantages and disadvantages. Most of them focused on OLAP but few are also optimized for OLTP. Some use standard formats and focus only on running the queries whereas others use their own format/storage to push processing to the source to improve performance. Some are optimized for data warehousing using star or snowflake schema whereas others are more flexible. To summarize these are the different considerations:

- Data warehouse vs data lake Data warehouse vs data lake

- Hadoop vs Standalone Hadoop vs Standalone

- OLAP vs OLTP OLAP vs OLTP

- Query Engine vs. OLAP Engines Query Engine vs. OLAP Engines

We should also consider processing engines with querying capabilities.

We should also consider processing engines with querying capabilities.

Processing Engines (Processing Engines)

Most of the engines we described in the previous section can connect to the metadata server such as Hive and run queries, create views, etc. This is a common use case to create refined reporting layers.

Most of the engines we described in the previous section can connect to the metadata server such as Hive and run queries, create views, etc. This is a common use case to create refined reporting layers.

Spark SQL provides a way to seamlessly mix SQL queries with Spark programs, so you can mix the DataFrame API with SQL. It has Hive integration and standard connectivity through JDBC or ODBC; so you can connect Tableau, Looker or any BI tool to your data through Spark.

Spark SQL provides a way to seamlessly mix SQL queries with Spark programs, so you can mix the DataFrame API with SQL. It has Hive integration and standard connectivity through JDBC or ODBC; so you can connect Tableau , Looker or any BI tool to your data through Spark.

Apache Flink also provides SQL API. Flink’s SQL support is based on Apache Calcite which implements the SQL standard. It also integrates with Hive through the HiveCatalog. For example, users can store their Kafka or ElasticSearch tables in Hive Metastore by using HiveCatalog, and reuse them later on in SQL queries.

Apache Flink also provides SQL API. Flink's SQL support is based on Apache Calcite which implements the SQL standard. It also integrates with Hive through the HiveCatalog . For example, users can store their Kafka or ElasticSearch tables in Hive Metastore by using HiveCatalog , and reuse them later on in SQL queries.

Query Engines (Query Engines)

This type of tools focus on querying different data sources and formats in an unified way. The idea is to query your data lake using SQL queries like if it was a relational database, although it has some limitations. Some of these tools can also query NoSQL databases and much more. These tools provide a JDBC interface for external tools, such as Tableau or Looker, to connect in a secure fashion to your data lake. Query engines are the slowest option but provide the maximum flexibility.

This type of tools focus on querying different data sources and formats in an unified way . The idea is to query your data lake using SQL queries like if it was a relational database, although it has some limitations. Some of these tools can also query NoSQL databases and much more. These tools provide a JDBC interface for external tools, such as Tableau or Looker , to connect in a secure fashion to your data lake. Query engines are the slowest option but provide the maximum flexibility.

Apache Pig: It was one of the first query languages along with Hive. It has its own language different from SQL. The salient property of Pig programs is that their structure is amenable to substantial parallelization, which in turns enables them to handle very large data sets. It is not in decline in favor of newer SQL based engines.

Apache Pig : It was one of the first query languages along with Hive. It has its own language different from SQL. The salient property of Pig programs is that their structure is amenable to substantial parallelization , which in turns enables them to handle very large data sets. It is not in decline in favor of newer SQL based engines.

Presto: Released as open source by Facebook, it’s an open source distributed SQL query engine for running interactive analytic queries against data sources of all sizes. Presto allows querying data where it lives, including Hive, Cassandra, relational databases and file systems. It can perform queries on large data sets in a manner of seconds. It is independent of Hadoop but integrates with most of its tools, especially Hive to run SQL queries.

Presto : Released as open source by Facebook, it's an open source distributed SQL query engine for running interactive analytic queries against data sources of all sizes. Presto allows querying data where it lives, including Hive, Cassandra, relational databases and file systems. It can perform queries on large data sets in a manner of seconds. It is independent of Hadoop but integrates with most of its tools, especially Hive to run SQL queries.

Apache Drill: Provides a schema-free SQL Query Engine for Hadoop, NoSQL and even cloud storage. It is independent of Hadoop but has many integrations with the ecosystem tools such Hive. A single query can join data from multiple datastores performing optimizations specific to each data store. It is very good at allowing analysts to treat any data like a table, even if they are reading a file under the hood. Drill supports fully standard SQL. Business users, analysts and data scientists can use standard BI/analytics tools such as Tableau, Qlik and Excel to interact with non-relational datastores by leveraging Drill’s JDBC and ODBC drivers. Furthermore, developers can leverage Drill’s simple REST API in their custom applications to create beautiful visualizations.

Apache Drill : Provides a schema-free SQL Query Engine for Hadoop, NoSQL and even cloud storage. It is independent of Hadoop but has many integrations with the ecosystem tools such Hive. A single query can join data from multiple datastores performing optimizations specific to each data store. It is very good at allowing analysts to treat any data like a table, even if they are reading a file under the hood. Drill supports fully standard SQL . Business users, analysts and data scientists can use standard BI/analytics tools such as Tableau , Qlik and Excel to interact with non-relational datastores by leveraging Drill's JDBC and ODBC drivers. Furthermore, developers can leverage Drill's simple REST API in their custom applications to create beautiful visualizations.

OLTP Databases (OLTP Databases)

Although, Hadoop is optimized for OLAP there are still some options if you want to perform OLTP queries for an interactive application.

Although, Hadoop is optimized for OLAP there are still some options if you want to perform OLTP queries for an interactive application.

HBase is has very limited ACID properties by design, since it was built to scale and does not provides ACID capabilities out of the box but it can be used for some OLTP scenarios.

HBase is has very limited ACID properties by design, since it was built to scale and does not provides ACID capabilities out of the box but it can be used for some OLTP scenarios.

Apache Phoenix is built on top of HBase and provides a way to perform OTLP queries in the Hadoop ecosystem. Apache Phoenix is fully integrated with other Hadoop products such as Spark, Hive, Pig, Flume, and Map Reduce. It also can store metadata and it supports table creation and versioned incremental alterations through DDL commands. It is quite fast, faster than using Drill or other query engine.

Apache Phoenix is built on top of HBase and provides a way to perform OTLP queries in the Hadoop ecosystem. Apache Phoenix is fully integrated with other Hadoop products such as Spark, Hive, Pig, Flume, and Map Reduce. It also can store metadata and it supports table creation and versioned incremental alterations through DDL commands. It is quite fast , faster than using Drill or other query engine.

You may use any massive scale database outside the Hadoop ecosystem such as Cassandra, YugaByteDB, ScyllaDB for OTLP.

You may use any massive scale database outside the Hadoop ecosystem such as Cassandra, YugaByteDB, ScyllaDB for OTLP .

Finally, it is very common to have a subset of the data, usually the most recent, in a fast database of any type such MongoDB or MySQL. The query engines mentioned above can join data between slow and fast data storage in a single query.

Finally, it is very common to have a subset of the data, usually the most recent, in a fast database of any type such MongoDB or MySQL. The query engines mentioned above can join data between slow and fast data storage in a single query.

Distributed Search Indexes (Distributed Search Indexes)

These tools provide a way to store and search unstructured text data and they live outside the Hadoop ecosystem since they need special structures to store the data. The idea is to use an inverted index to perform fast lookups. Besides text search, this technology can be used for a wide range of use cases like storing logs, events, etc. There are two main options:

These tools provide a way to store and search unstructured text data and they live outside the Hadoop ecosystem since they need special structures to store the data. The idea is to use an inverted index to perform fast lookups. Besides text search, this technology can be used for a wide range of use cases like storing logs, events, etc. There are two main options:

Solr: it is a popular, blazing-fast, open source enterprise search platform built on Apache Lucene. Solr is reliable, scalable and fault tolerant, providing distributed indexing, replication and load-balanced querying, automated failover and recovery, centralized configuration and more. It is great for text search but its use cases are limited compared to ElasticSearch.

Solr : it is a popular, blazing-fast, open source enterprise search platform built on Apache Lucene . Solr is reliable, scalable and fault tolerant, providing distributed indexing, replication and load-balanced querying, automated failover and recovery, centralized configuration and more. It is great for text search but its use cases are limited compared to ElasticSearch .

ElasticSearch: It is also a very popular distributed index but it has grown into its own ecosystem which covers many use cases like APM, search, text storage, analytics, dashboards, machine learning and more. It is definitely a tool to have in your toolbox either for DevOps or for your data pipeline since it is very versatile. It can also store and search videos and images.

ElasticSearch : It is also a very popular distributed index but it has grown into its own ecosystem which covers many use cases like APM , search, text storage, analytics, dashboards, machine learning and more. It is definitely a tool to have in your toolbox either for DevOps or for your data pipeline since it is very versatile. It can also store and search videos and images.

ElasticSearch can be used as a fast storage layer for your data lake for advanced search functionality. If you store your data in a key-value massive database, like HBase or Cassandra, which provide very limited search capabilities due to the lack of joins; you can put ElasticSearch in front to perform queries, return the IDs and then do a quick lookup on your database.

ElasticSearch can be used as a fast storage layer f or your data lake for advanced search functionality. If you store your data in a key-value massive database, like HBase or Cassandra, which provide very limited search capabilities due to the lack of joins; you can put ElasticSearch in front to perform queries, return the IDs and then do a quick lookup on your database.

It can be used also for analytics; you can export your data, index it and then query it using Kibana, creating dashboards, reports and much more, you can add histograms, complex aggregations and even run machine learning algorithms on top of your data. The Elastic Ecosystem is huge and worth exploring.

It can be used also for analytics ; you can export your data, index it and then query it using Kibana , creating dashboards, reports and much more, you can add histograms, complex aggregations and even run machine learning algorithms on top of your data. The Elastic Ecosystem is huge and worth exploring.

OLAP Databases (OLAP Databases)

In this category we have databases which may also provide a metadata store for schemas and query capabilities. Compared to query engines, these tools also provide storage and may enforce certain schemas in case of data warehouses (star schema). These tools use SQL syntax and Spark and other frameworks can interact with them.

In this category we have databases which may also provide a metadata store for schemas and query capabilities. Compared to query engines, these tools also provide storage and may enforce certain schemas in case of data warehouses (star schema). These tools use SQL syntax and Spark and other frameworks can interact with them.

Apache Hive: We already discussed Hive as a central schema repository for Spark and other tools so they can use SQL, but Hive can also store data, so you can use it as a data warehouse. It can access HDFS or HBase. When querying Hive it leverages on Apache Tez, Apache Spark, or MapReduce, being Tez or Spark much faster. It also has a procedural language called HPL-SQL.

Apache Hive : We already discussed Hive as a central schema repository for Spark and other tools so they can use SQL , but Hive can also store data, so you can use it as a data warehouse. It can access HDFS or HBase . When querying Hive it leverages on Apache Tez , Apache Spark , or MapReduce , being Tez or Spark much faster. It also has a procedural language called HPL-SQL.

Apache Impala: It is a native analytic database for Hadoop, that you can use to store data and query it in an efficient manner. It can connect to Hive for metadata using Hcatalog. Impala provides low latency and high concurrency for BI/analytic queries on Hadoop (not delivered by batch frameworks such as Apache Hive). Impala also scales linearly, even in multitenant environments making a better alternative for queries than Hive. Impala is integrated with native Hadoop security and Kerberos for authentication, so you can securely managed data access. It uses HBase and HDFS for data storage.

Apache Impala : It is a native analytic database for Hadoop, that you can use to store data and query it in an efficient manner. It can connect to Hive for metadata using Hcatalog . Impala provides low latency and high concurrency for BI/analytic queries on Hadoop (not delivered by batch frameworks such as Apache Hive). Impala also scales linearly, even in multitenant environments making a better alternative for queries than Hive. Impala is integrated with native Hadoop security and Kerberos for authentication, so you can securely managed data access. It uses HBase and HDFS for data storage.

Apache Tajo: It is another data warehouse for Hadoop. Tajo is designed for low-latency and scalable ad-hoc queries, online aggregation, and ETL on large-data sets stored on HDFS and other data sources. It has integration with Hive Metastore to access the common schemas. It has many query optimizations, it is scalable, fault tolerant and provides a JDBC interface.

Apache Tajo : It is another data warehouse for Hadoop. Tajo is designed for low-latency and scalable ad-hoc queries, online aggregation, and ETL on large-data sets stored on HDFS and other data sources. It has integration with Hive Metastore to access the common schemas. It has many query optimizations, it is scalable, fault tolerant and provides a JDBC interface.

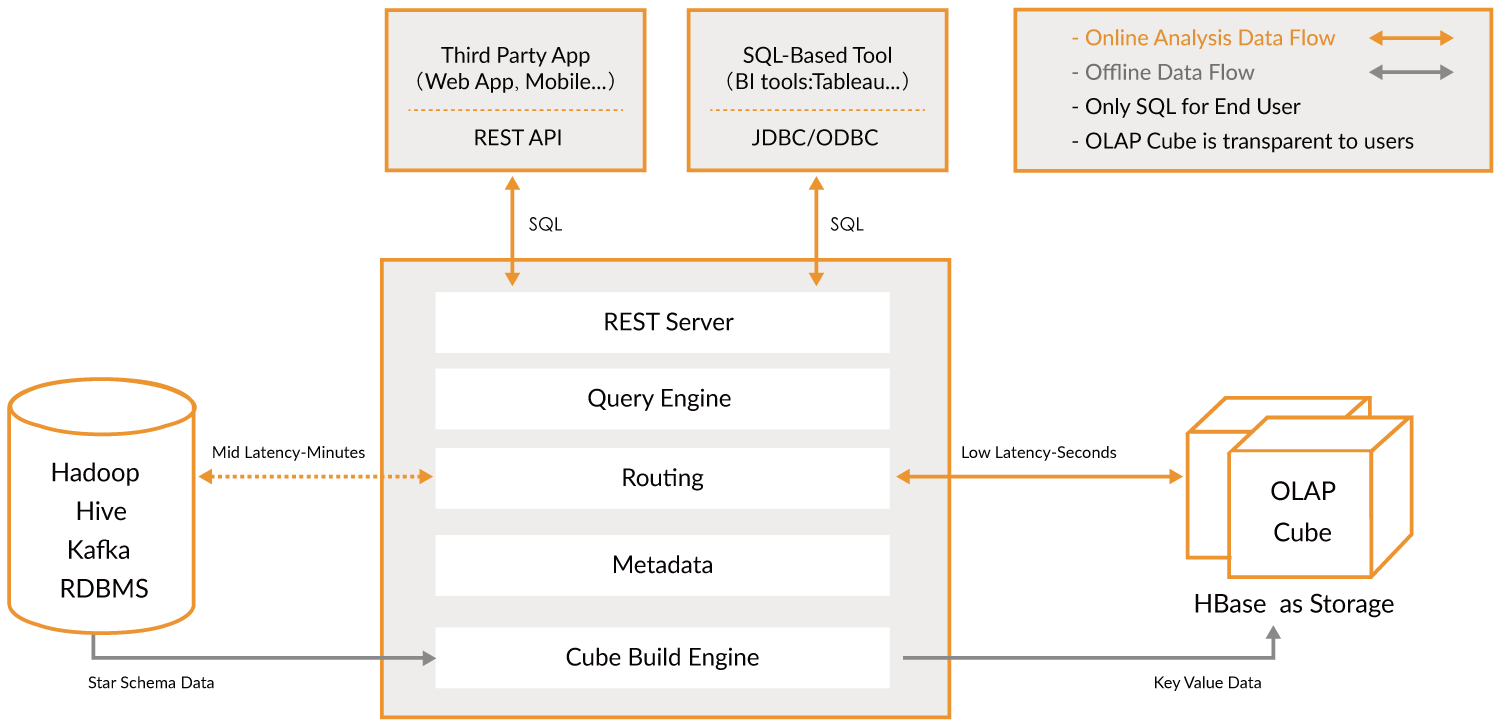

Apache Kylin: Apache Kylin is a newer distributed Analytical Data Warehouse. Kylin is extremely fast, so it can be used to complement some of the other databases like Hive for use cases where performance is important such as dashboards or interactive reports, it is probably the best OLAP data warehouse but it is more difficult to use, another problem is that because of the high dimensionality, you need more storage. The idea is that if query engines or Hive are not fast enough, you can create a “Cube” in Kylin which is a multidimensional table optimized for OLAP with pre computed values which you can query from your dashboards or interactive reports. It can build cubes directly from Spark and even in near real time from Kafka.

Apache Kylin : Apache Kylin is a newer distributed Analytical Data Warehouse . Kylin is extremely fast , so it can be used to complement some of the other databases like Hive for use cases where performance is important such as dashboards or interactive reports, it is probably the best OLAP data warehouse but it is more difficult to use, another problem is that because of the high dimensionality, you need more storage. The idea is that if query engines or Hive are not fast enough, you can create a “ Cube ” in Kylin which is a multidimensional table optimized for OLAP with pre computed values which you can query from your dashboards or interactive reports. It can build cubes directly from Spark and even in near real time from Kafka.

OLAP Engines (OLAP Engines)

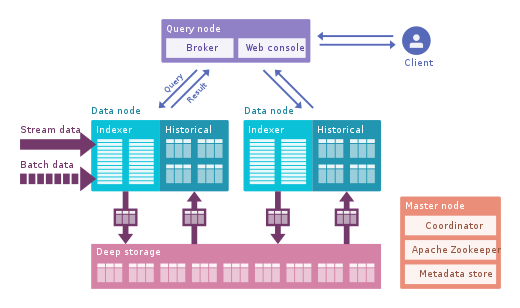

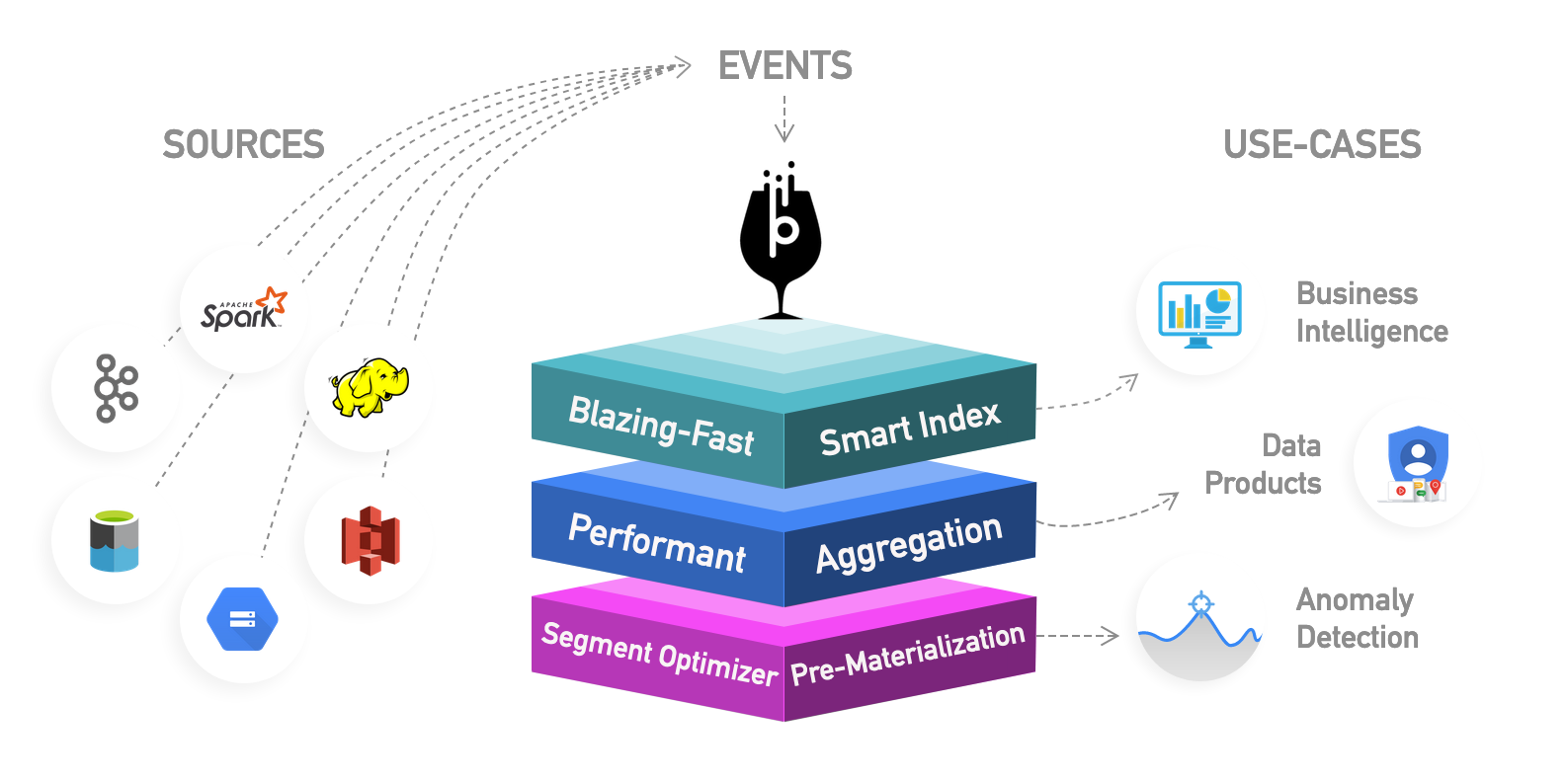

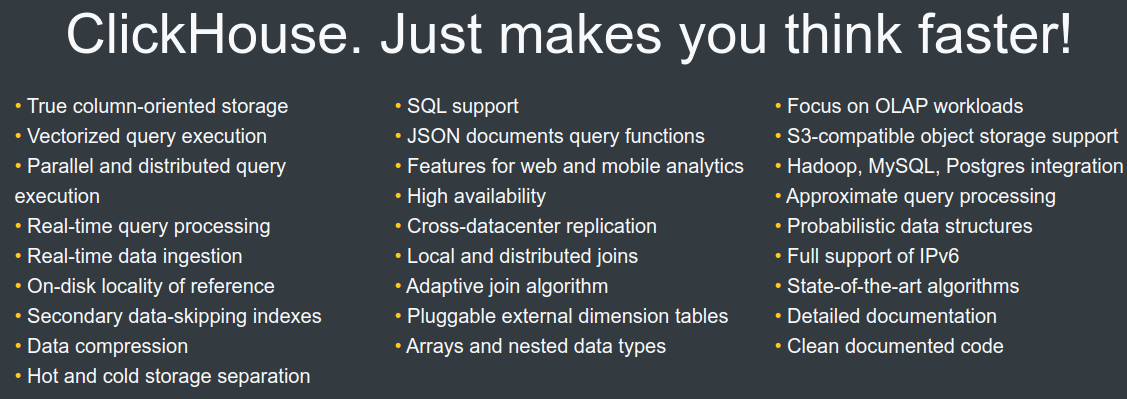

In this category, I include newer engines that are an evolution of the previous OLAP databases which provide more functionality creating an all-in-one analytics platform. Actually, they are a hybrid of the previous two categories adding indexing to your OLAP databases. They live outside the Hadoop platform but are tightly integrated. In this case, you would typically skip the processing phase and ingest directly using these tools.

In this category, I include newer engines that are an evolution of the previous OLAP databases which provide more functionality creating an all-in-one analytics platform . Actually, they are a hybrid of the previous two categories adding indexing to your OLAP databases. They live outside the Hadoop platform but are tightly integrated. In this case, you would typically skip the processing phase and ingest directly using these tools.