I. Introduction to Sequential

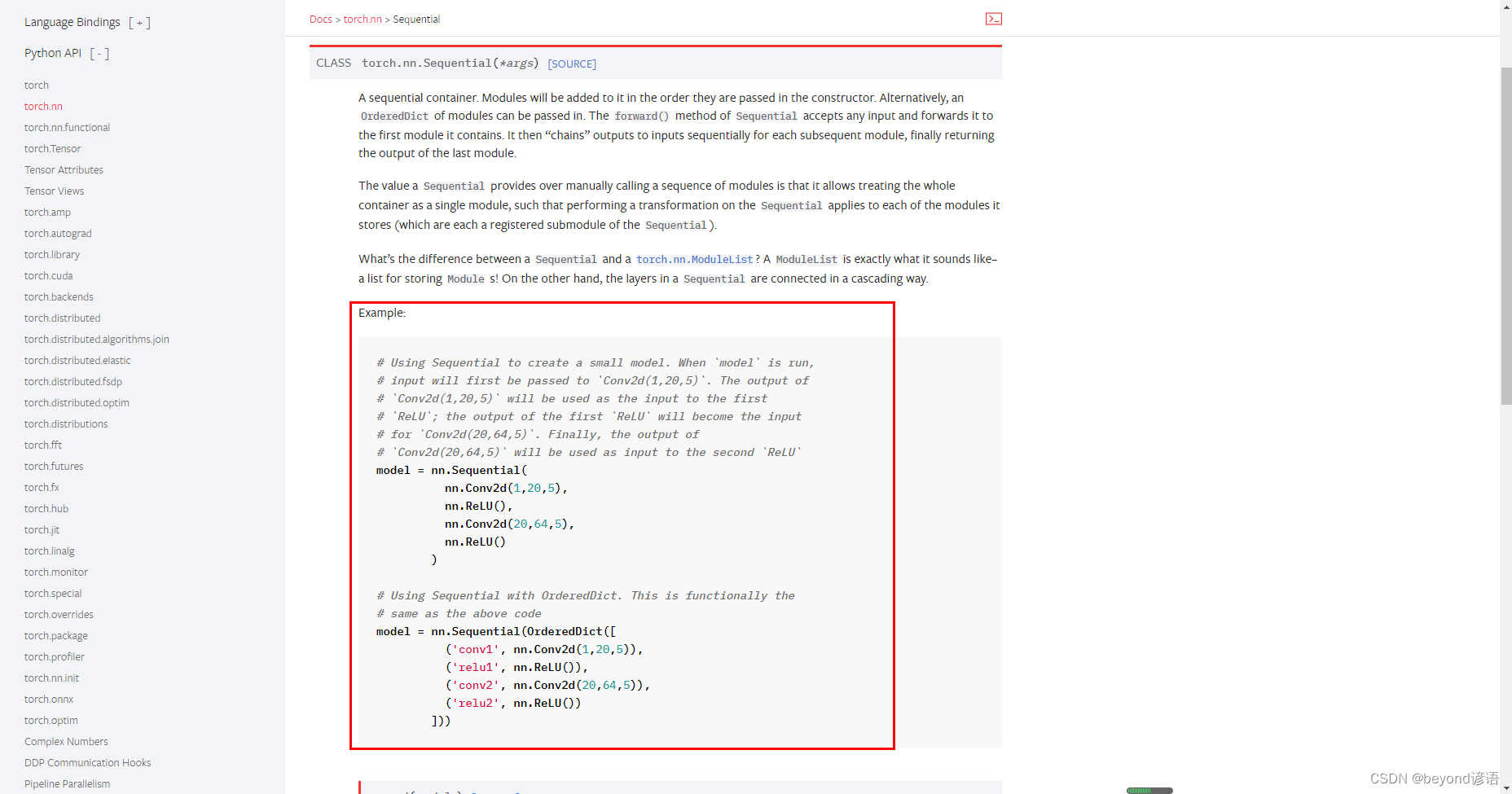

torch.nn.Sequential(*args)

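From the example on the official site, Sequential chains several layers into a single module, which simplifies the network definition and makes its structure easier to visualize.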
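As a minimal sketch of the idea (the layer sizes below are arbitrary assumptions, not the ones from the official example), calling an `nn.Sequential` container runs its modules in the order they were passed in:

```python
import torch
from torch import nn

# Layer sizes are illustrative assumptions, not the official example.
seq = nn.Sequential(
    nn.Linear(8, 4),
    nn.ReLU(),
    nn.Linear(4, 2),
)

x = torch.zeros(5, 8)                 # batch of 5 samples, 8 features each
y = seq(x)                            # runs the three modules in order
manual = seq[2](seq[1](seq[0](x)))    # the same chain written out by hand

print(y.shape)
print(torch.equal(y, manual))
```

Indexing with `seq[0]`, `seq[1]`, and so on returns the individual modules, which is handy when inspecting a single layer.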

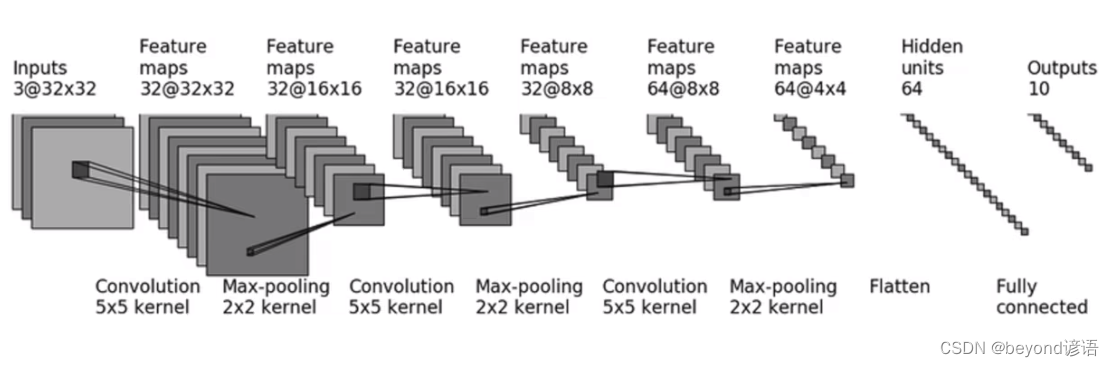

II. Reproducing a network model for training on the CIFAR-10 dataset

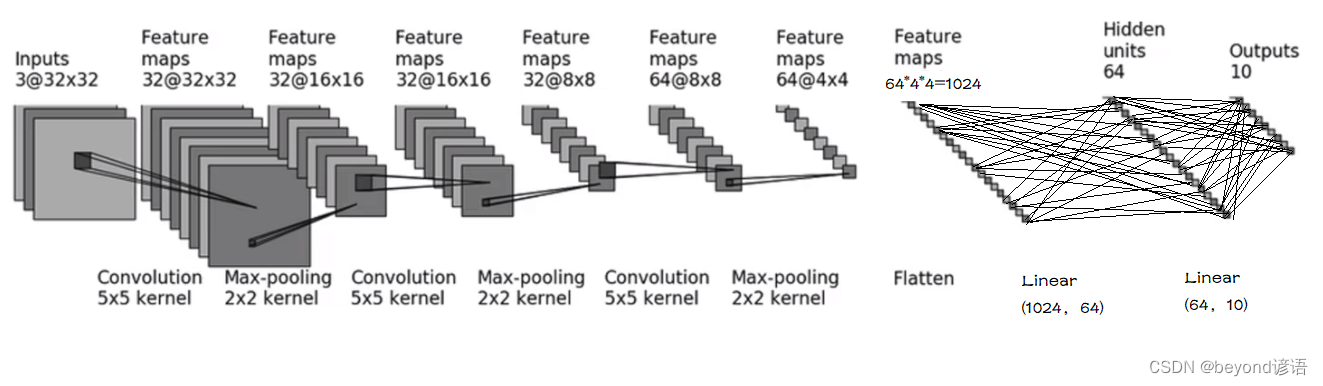

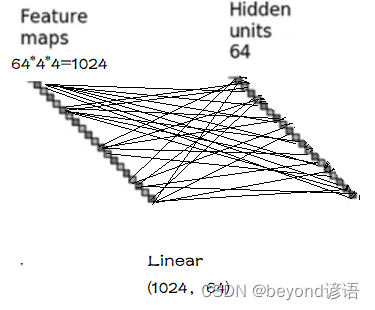

The "hidden units" in the diagram are really just two linear layers.

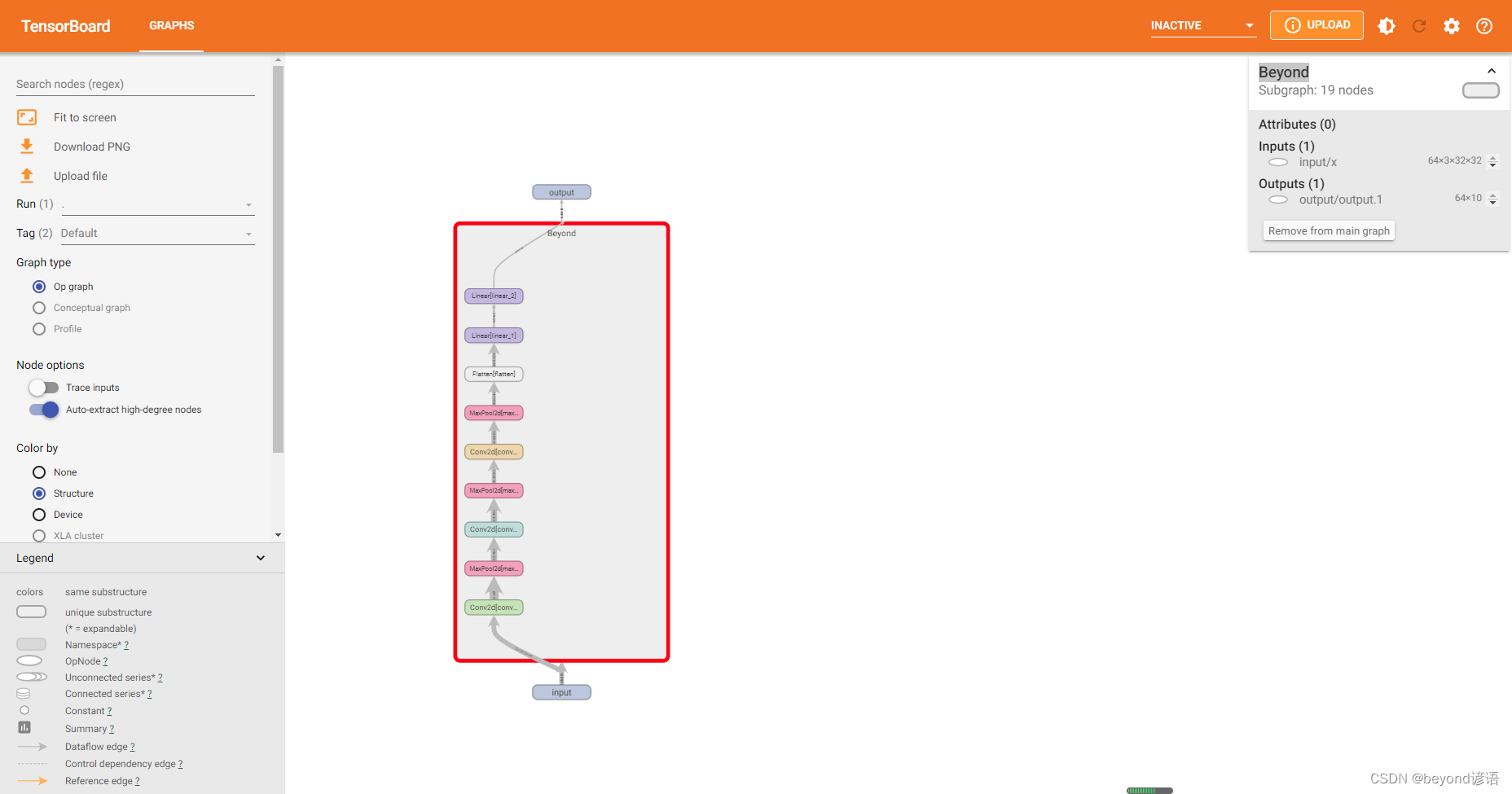

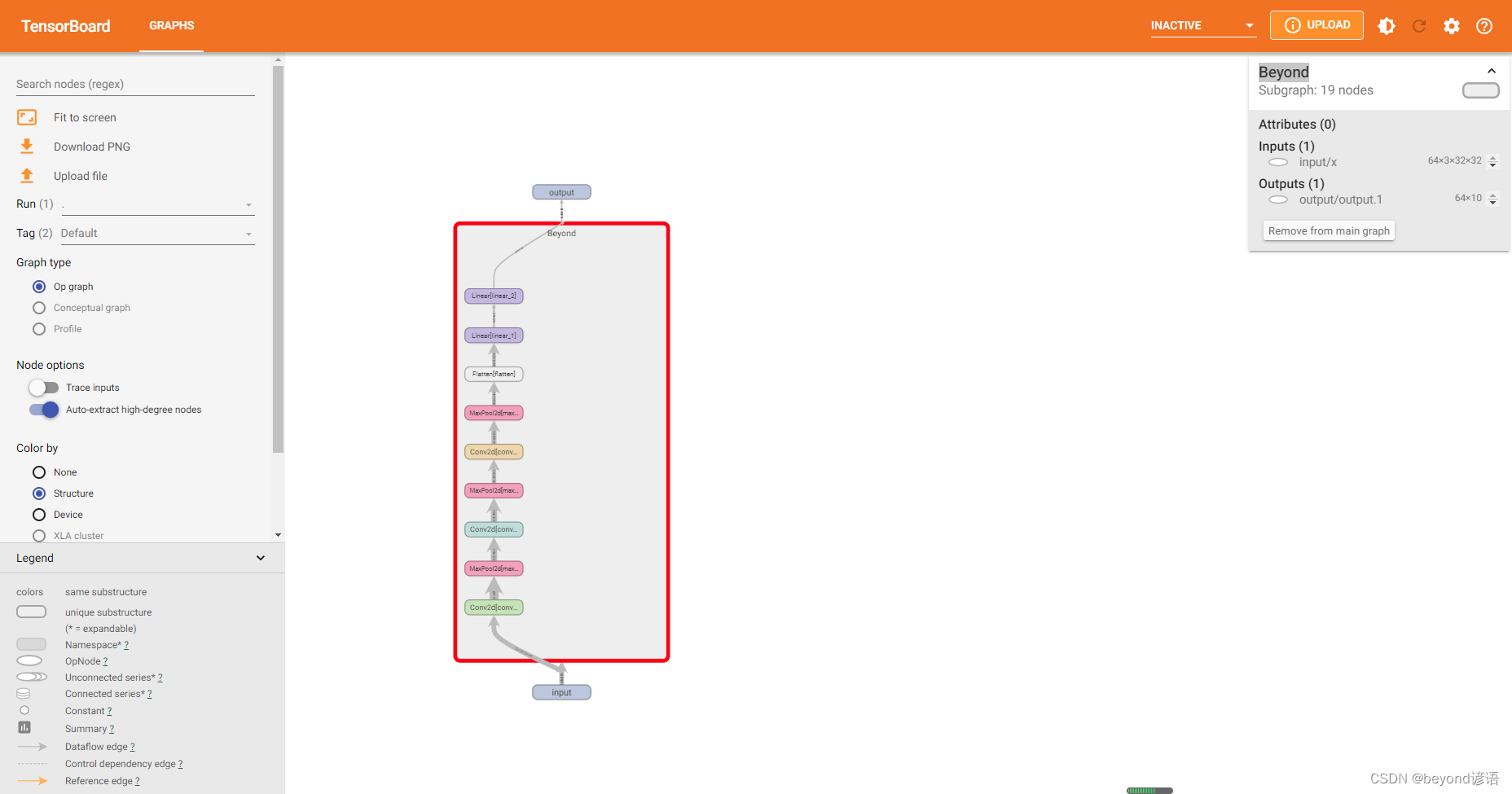

With the hidden layers fully expanded, the overall network architecture is as follows:

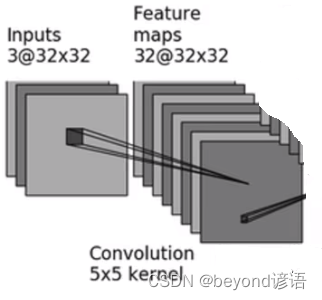

① The input is a 3-channel 32×32 image: (C,H,W) = (3,32,32).

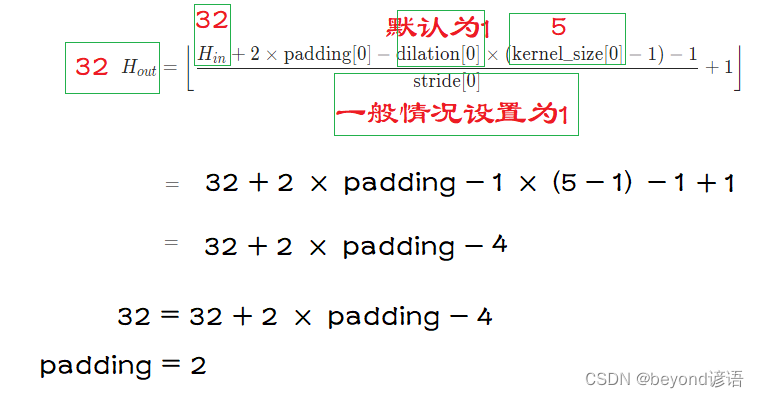

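② A convolution with a (5,5) kernel produces a (32,32,32) feature map. The (H,W) of the feature map is unchanged by the (5,5) kernel, which means the convolution layer must have padded the input.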

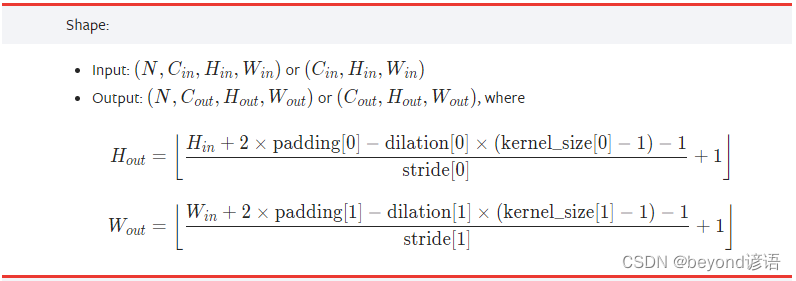

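The padding can be worked out from the output-size formula in the official Conv2d documentation.

Plugging in H_in = H_out = 32, kernel_size = 5, stride = 1, and dilation = 1 gives padding = 2, i.e. two rings of border pixels are added around the image. The channel count goes from 3 to 32 because the layer uses 32 convolution kernels rather than a single one; each kernel produces one output channel.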
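For reference, this is the output-height formula as given in the PyTorch `Conv2d` documentation, followed by the substitution for this layer (the symbol p for the unknown padding is introduced here only for brevity):

```latex
H_{out} = \left\lfloor \frac{H_{in} + 2 \times \text{padding} - \text{dilation} \times (\text{kernel\_size} - 1) - 1}{\text{stride}} + 1 \right\rfloor

32 = \frac{32 + 2p - 1 \times (5 - 1) - 1}{1} + 1 = 2p + 28 \quad\Rightarrow\quad p = 2
```

The width follows the same formula with W in place of H, so the result is identical.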

Final settings: 3 input channels; 32 output channels; stride = 1; padding = 2; dilation = 1 (the default); kernel_size = 5.

torch.nn.Conv2d(in_channels=3,out_channels=32,kernel_size=5,stride=1,padding=2)
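To check the padding choice numerically, here is a small sketch of the documented output-size formula as a plain Python helper. The function name `conv2d_out_size` is hypothetical and exists only for this illustration.

```python
def conv2d_out_size(size, kernel_size, stride=1, padding=0, dilation=1):
    """Output size along one spatial dimension, per the Conv2d shape formula."""
    return (size + 2 * padding - dilation * (kernel_size - 1) - 1) // stride + 1


# Try a few padding values for a 32-pixel input and a 5x5 kernel.
for padding in (0, 1, 2):
    print(padding, conv2d_out_size(32, kernel_size=5, padding=padding))
# 0 28
# 1 30
# 2 32
```

Only padding = 2 keeps the 32×32 size, which matches the value used in the layer above.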

③ Next, the (32,32,32) feature map passes through max pooling with a (2,2) kernel, giving a (32,16,16) feature map.

torch.nn.MaxPool2d(kernel_size=2)
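The halving happens because `MaxPool2d` uses a stride equal to `kernel_size` when no stride is given. A quick sketch to confirm this, assuming a batch size of 1 for the dummy input:

```python
import torch
from torch import nn

pool = nn.MaxPool2d(kernel_size=2)       # stride not given, so it defaults to kernel_size
features = torch.zeros(1, 32, 32, 32)    # (N, C, H, W); batch size 1 is an assumption
pooled = pool(features)

print(pool.stride)
print(pooled.shape)
```

Pooling acts on each channel separately, so the channel count stays at 32 while H and W drop from 32 to 16.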

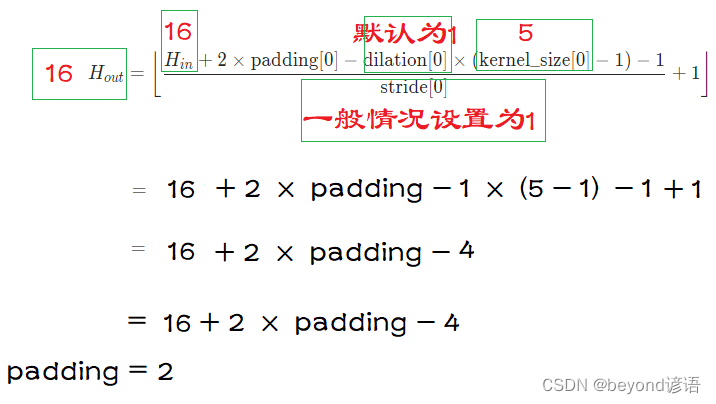

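④ Next, the (32,16,16) feature map is convolved with a (5,5) kernel, giving a (32,16,16) feature map. The (H,W) is again unchanged by the (5,5) kernel, so this layer must also pad its input.

Applying the same formula from the official documentation gives padding = 2.

The two calculations point to a general rule: with stride = 1 and dilation = 1, a (5,5) convolution that leaves the spatial size unchanged must use padding = 2.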
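The rule generalizes to other odd kernel sizes: for a stride-1 convolution, the size-preserving padding is dilation × (kernel_size − 1) / 2. A minimal sketch, where `same_padding` is a hypothetical helper name rather than a PyTorch function:

```python
def same_padding(kernel_size, dilation=1):
    """Padding that preserves H and W for a stride-1 convolution with an odd kernel."""
    if kernel_size % 2 == 0:
        raise ValueError("symmetric size-preserving padding needs an odd kernel size")
    return dilation * (kernel_size - 1) // 2


print(same_padding(3))  # 1
print(same_padding(5))  # 2
print(same_padding(7))  # 3
```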

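So padding = 2, i.e. two rings of border pixels are added. The channel count stays at 32 here, but that does not mean only one kernel is used: the layer again has 32 kernels, one per output channel, and each kernel spans all 32 input channels.

Final settings: 32 input channels; 32 output channels; stride = 1; padding = 2; dilation = 1 (the default); kernel_size = 5.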

torch.nn.Conv2d(in_channels=32,out_channels=32,kernel_size=5,stride=1,padding=2)
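The number of kernels can be read directly from the layer's weight tensor, whose layout is (out_channels, in_channels, kernel_height, kernel_width). A short check:

```python
from torch import nn

conv = nn.Conv2d(in_channels=32, out_channels=32, kernel_size=5, stride=1, padding=2)

# First dimension = number of kernels (one per output channel);
# second dimension = input channels each kernel spans.
print(conv.weight.shape)
print(conv.bias.shape)
```

The first dimension of the weight is 32, so the layer holds 32 kernels even though the input and output channel counts happen to be equal.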

⑤ Next, the (32,16,16) feature map passes through max pooling with a (2,2) kernel, giving a (32,8,8) feature map.

torch.nn.MaxPool2d(kernel_size=2)

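⑥ The (32,8,8) feature map is then fed into a convolution layer with a (5,5) kernel, producing a (64,8,8) feature map. The (H,W) is unchanged, so the layer pads its input, and from the two earlier calculations padding = 2. The channel count goes from 32 to 64 because the layer uses 64 kernels, one per output channel.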

torch.nn.Conv2d(in_channels=32, out_channels=64, kernel_size=5, stride=1, padding=2)

⑦ The (64,8,8) feature map then passes through max pooling with a (2,2) kernel, giving a (64,4,4) feature map.

torch.nn.MaxPool2d(kernel_size=2)

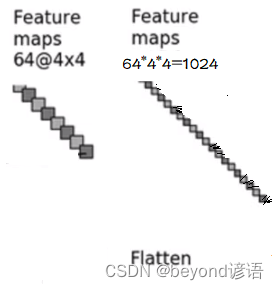

⑧ The (64,4,4) feature map is then flattened into a 1024-element feature vector (64 × 4 × 4 = 1024).

torch.nn.Flatten()
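With its default `start_dim=1`, `Flatten` keeps the batch dimension and merges everything after it. The shape arithmetic can be sketched in plain Python; `flatten_shape` is a hypothetical helper that mirrors that behavior on shape tuples only:

```python
from math import prod


def flatten_shape(shape, start_dim=1):
    """Shape after merging all dimensions from start_dim onward into one."""
    return tuple(shape[:start_dim]) + (prod(shape[start_dim:]),)


print(flatten_shape((64, 64, 4, 4)))  # (64, 1024)
```

Here the leading 64 is the batch size used later in the article, and 1024 is the per-sample feature length that the first Linear layer expects.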

⑨ The 1024-element feature vector is passed to the first Linear layer, which outputs 64 features.

torch.nn.Linear(1024,64)

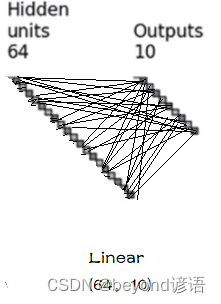

⑩ Finally, the 64 features pass through the second Linear layer, which outputs 10 values, one per class of the CIFAR-10 10-way classification task.

torch.nn.Linear(64,10)
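Steps ① through ⑩ can be traced end to end with plain shape arithmetic. The sketch below uses no PyTorch; the helper names `conv_out`, `pool_out`, and `trace_shapes` are hypothetical, and the pooling helper assumes stride equal to kernel size with no padding.

```python
def conv_out(size, kernel_size, stride=1, padding=0, dilation=1):
    """Conv2d output size along one spatial dimension."""
    return (size + 2 * padding - dilation * (kernel_size - 1) - 1) // stride + 1


def pool_out(size, kernel_size):
    """MaxPool2d output size, assuming stride == kernel_size and no padding."""
    return (size - kernel_size) // kernel_size + 1


def trace_shapes(channels=3, size=32):
    """Per-sample shapes after each layer of the network described above."""
    shapes = [(channels, size, size)]
    for out_channels in (32, 32, 64):            # the three conv + pool stages
        size = conv_out(size, kernel_size=5, padding=2)
        shapes.append((out_channels, size, size))
        size = pool_out(size, kernel_size=2)
        shapes.append((out_channels, size, size))
    flat = shapes[-1][0] * size * size           # Flatten
    shapes += [(flat,), (64,), (10,)]            # Flatten, Linear, Linear
    return shapes


for shape in trace_shapes():
    print(shape)
```

The last three entries are (1024,), (64,), and (10,), matching the sizes passed to the two Linear layers.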

III. Conventional implementation (without Sequential)

    import torch
    from torch import nn
    from torch.nn import Conv2d
    from torch.utils.tensorboard import SummaryWriter


    class Beyond(nn.Module):
        def __init__(self):
            super(Beyond, self).__init__()
            self.conv_1 = torch.nn.Conv2d(in_channels=3, out_channels=32, kernel_size=5, stride=1, padding=2)
            self.maxpool_1 = torch.nn.MaxPool2d(kernel_size=2)
            self.conv_2 = torch.nn.Conv2d(in_channels=32, out_channels=32, kernel_size=5, stride=1, padding=2)
            self.maxpool_2 = torch.nn.MaxPool2d(kernel_size=2)
            self.conv_3 = torch.nn.Conv2d(in_channels=32, out_channels=64, kernel_size=5, stride=1, padding=2)
            self.maxpool_3 = torch.nn.MaxPool2d(kernel_size=2)
            self.flatten = torch.nn.Flatten()
            self.linear_1 = torch.nn.Linear(1024, 64)
            self.linear_2 = torch.nn.Linear(64, 10)

        def forward(self, x):
            # Apply each layer explicitly, in the order described in section II
            x = self.conv_1(x)
            x = self.maxpool_1(x)
            x = self.conv_2(x)
            x = self.maxpool_2(x)
            x = self.conv_3(x)
            x = self.maxpool_3(x)
            x = self.flatten(x)
            x = self.linear_1(x)
            x = self.linear_2(x)
            return x


    beyond = Beyond()
    print(beyond)
    """
    Beyond(
      (conv_1): Conv2d(3, 32, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))
      (maxpool_1): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
      (conv_2): Conv2d(32, 32, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))
      (maxpool_2): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
      (conv_3): Conv2d(32, 64, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))
      (maxpool_3): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
      (flatten): Flatten(start_dim=1, end_dim=-1)
      (linear_1): Linear(in_features=1024, out_features=64, bias=True)
      (linear_2): Linear(in_features=64, out_features=10, bias=True)
    )
    """

    input = torch.zeros((64, 3, 32, 32))
    print(input.shape)  # torch.Size([64, 3, 32, 32])
    output = beyond(input)
    print(output.shape)  # torch.Size([64, 10])

    # Write the network graph to TensorBoard for visualization
    writer = SummaryWriter("y_log")
    writer.add_graph(beyond, input)
    writer.close()

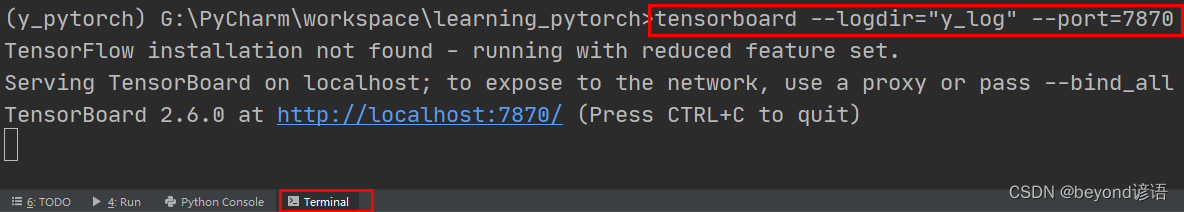

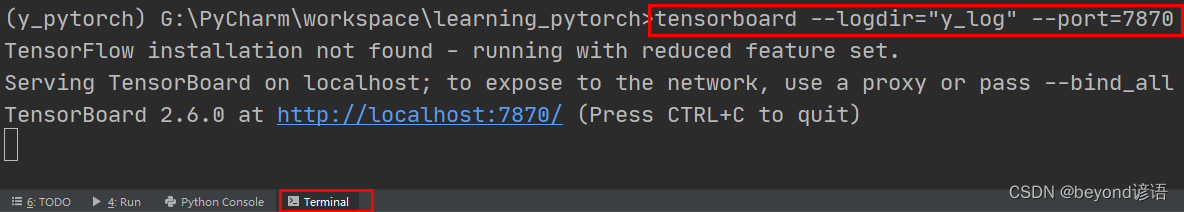

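In a terminal, run tensorboard --logdir=y_log --port=7870, where logdir is the directory containing the event files and port is the port to serve on.

TensorBoard is then served on the specified port (7870 in the command above). If the port argument is omitted, it uses port 6006 by default.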

IV. Implementing the network with Sequential

    import torch
    from torch import nn
    from torch.nn import Conv2d
    from torch.utils.tensorboard import SummaryWriter


    class Beyond(nn.Module):
        def __init__(self):
            super(Beyond, self).__init__()
            # All layers are wrapped in one Sequential container
            self.model = torch.nn.Sequential(
                torch.nn.Conv2d(3, 32, 5, padding=2),
                torch.nn.MaxPool2d(2),
                torch.nn.Conv2d(32, 32, 5, padding=2),
                torch.nn.MaxPool2d(2),
                torch.nn.Conv2d(32, 64, 5, padding=2),
                torch.nn.MaxPool2d(2),
                torch.nn.Flatten(),
                torch.nn.Linear(1024, 64),
                torch.nn.Linear(64, 10),
            )

        def forward(self, x):
            x = self.model(x)
            return x


    beyond = Beyond()
    print(beyond)
    """
    Beyond(
      (model): Sequential(
        (0): Conv2d(3, 32, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))
        (1): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
        (2): Conv2d(32, 32, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))
        (3): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
        (4): Conv2d(32, 64, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))
        (5): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
        (6): Flatten(start_dim=1, end_dim=-1)
        (7): Linear(in_features=1024, out_features=64, bias=True)
        (8): Linear(in_features=64, out_features=10, bias=True)
      )
    )
    """

    input = torch.zeros((64, 3, 32, 32))
    print(input.shape)  # torch.Size([64, 3, 32, 32])
    output = beyond(input)
    print(output.shape)  # torch.Size([64, 10])

    # Write the network graph to TensorBoard for visualization
    writer = SummaryWriter("y_log")
    writer.add_graph(beyond, input)
    writer.close()

As before, run tensorboard --logdir=y_log --port=7870 in a terminal to view the graph; without the port argument, TensorBoard uses port 6006 by default.

The two implementations behave exactly the same; the Sequential version is more concise, and its graph in TensorBoard is tidier.
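One trade-off of the Sequential version is that layers are identified by index rather than by name. If named layers are wanted, `nn.Sequential` also accepts an `OrderedDict` of modules. The sketch below is a variation, not the implementation used above, and simply reuses the layer names from section III:

```python
from collections import OrderedDict

import torch
from torch import nn

# Same layers as above, but each one gets an explicit name.
model = nn.Sequential(OrderedDict([
    ("conv_1", nn.Conv2d(3, 32, 5, padding=2)),
    ("maxpool_1", nn.MaxPool2d(2)),
    ("conv_2", nn.Conv2d(32, 32, 5, padding=2)),
    ("maxpool_2", nn.MaxPool2d(2)),
    ("conv_3", nn.Conv2d(32, 64, 5, padding=2)),
    ("maxpool_3", nn.MaxPool2d(2)),
    ("flatten", nn.Flatten()),
    ("linear_1", nn.Linear(1024, 64)),
    ("linear_2", nn.Linear(64, 10)),
]))

output = model(torch.zeros(64, 3, 32, 32))
print(output.shape)
print(model.conv_1)   # layers are reachable by name as attributes
```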
