Contents

I. Basic structure of a deep learning project

II. Hands-on example (cat vs. dog classification)

1. Downloading the dataset

2. dataset.py

3. model.py

4. config.py

5. predict.py

I. Basic structure of a deep learning project

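A deep learning project usually consists of the following files and folders:

datasets folder: holds the datasets used for training and testing

dataset.py: loads the dataset, converts it into a fixed format, and returns the images and their labels

model.py: builds the deep learning model you need; for how to build one, see

[Deep Learning] Building models with PyTorch, and related models

https://blog.csdn.net/qq_45769063/article/details/120246601

config.py: collects every configurable parameter in one place, e.g. hyperparameters such as batchsize, transform, epochs, and lr

train.py: loads the dataset and trains the model

predict.py: loads the trained model and runs prediction on images

requirements.txt: lists the required libraries, which can be installed with pip install -r requirements.txt

readme: keeps some notes and logs

log folder: stores the trained models

loss folder: stores the loss curves recorded during training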
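The article does not list train.py itself. As a rough sketch of what the training step described above might look like, the snippet below runs a standard PyTorch training loop. The function name train_one_epoch, the random stand-in data, and the tiny linear classifier are all assumptions made for illustration; in the real project the loader would wrap the dataset from dataset.py and the model would come from model.py.

```python
import torch
from torch import nn
from torch.utils.data import DataLoader, TensorDataset


def train_one_epoch(model, loader, criterion, optimizer, device):
    """Run one pass over the loader and return the mean loss per sample."""
    model.train()  # enable dropout and other training-time behaviour
    total_loss = 0.0
    for images, labels in loader:
        images, labels = images.to(device), labels.to(device)
        optimizer.zero_grad()                    # clear gradients from the previous step
        loss = criterion(model(images), labels)  # forward pass and loss
        loss.backward()                          # backpropagate
        optimizer.step()                         # update the weights
        total_loss += loss.item() * images.size(0)
    return total_loss / len(loader.dataset)


if __name__ == "__main__":
    device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
    # Stand-ins (assumptions): random tensors instead of the cat/dog dataset,
    # and a tiny linear classifier instead of the VGG-style network.
    data = TensorDataset(torch.randn(8, 3, 16, 16), torch.randint(0, 2, (8,)))
    loader = DataLoader(data, batch_size=2, shuffle=True)
    model = nn.Sequential(nn.Flatten(), nn.Linear(3 * 16 * 16, 2)).to(device)
    criterion = nn.CrossEntropyLoss()  # expects raw logits, not probabilities
    optimizer = torch.optim.SGD(model.parameters(), lr=0.001)
    for epoch in range(2):
        print(epoch, train_one_epoch(model, loader, criterion, optimizer, device))
```

One thing to keep in mind: nn.CrossEntropyLoss applies log-softmax internally, so if it is paired with a network whose last layer is already nn.Softmax (as in the model.py shown later), softmax is effectively applied twice.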

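II. Hands-on example (cat vs. dog classification)

1. Downloading the dataset

Download the data

- Training data

Link: https://pan.baidu.com/s/1UOJUi-Wm6w0D7JGQduq7Ow Extraction code: 485q

- Test data

Link: https://pan.baidu.com/s/1sSgLFkv9K3ciRVLAryWUKg Extraction code: gyvs

After downloading, unzip the archives. The training files have names starting with cat or dog, while the test files are named with numbers only.

I renamed the files so that cat images start with 0 and dog images start with 1.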

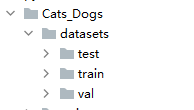
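The renaming can also be scripted. The sketch below is one possible way to do it, not necessarily how the files in the screenshot were produced: the function name rename_with_label_prefix and the 0_/1_ prefix scheme are assumptions, chosen only so that the first character of each file name encodes the class.

```python
import os


def rename_with_label_prefix(folder):
    """Prefix cat*/dog* files with 0_/1_ so the first character encodes the class."""
    renamed = []
    for name in sorted(os.listdir(folder)):
        if name.startswith("cat"):
            prefix = "0_"
        elif name.startswith("dog"):
            prefix = "1_"
        else:
            continue  # leave unrelated or already renamed files untouched
        new_name = prefix + name
        os.rename(os.path.join(folder, name), os.path.join(folder, new_name))
        renamed.append(new_name)
    return renamed
```

Calling rename_with_label_prefix on the unzipped training folder would turn a file such as cat.0.jpg into 0_cat.0.jpg. Running it a second time is harmless, because the renamed files no longer start with cat or dog.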

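Create the datasets folder

I usually organise the directory by manually splitting the data into three sets (train, val, and test), each with a cats and a dogs subfolder; the split can of course also be done by a script.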
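For the scripted alternative, a minimal sketch could look like the following. The function name split_dataset, the 80/10/10 ratios, and the fixed seed are assumptions; the target layout (train, val, and test folders, each containing cats and dogs) follows the paths used later in config.py and dataset.py.

```python
import os
import random
import shutil


def split_dataset(src_dir, dst_dir, ratios=(0.8, 0.1, 0.1), seed=0):
    """Copy files from src_dir into dst_dir/{train,val,test}/{cats,dogs}."""
    names = sorted(os.listdir(src_dir))
    random.Random(seed).shuffle(names)  # reproducible shuffle
    n_train = int(len(names) * ratios[0])
    n_val = int(len(names) * ratios[1])
    splits = {
        "train": names[:n_train],
        "val": names[n_train:n_train + n_val],
        "test": names[n_train + n_val:],  # whatever remains
    }
    for split, files in splits.items():
        for name in files:
            # First character encodes the class: "0" means cat, anything else dog
            cls = "cats" if name[0] == "0" else "dogs"
            target = os.path.join(dst_dir, split, cls)
            os.makedirs(target, exist_ok=True)
            shutil.copy(os.path.join(src_dir, name), os.path.join(target, name))
    return {split: len(files) for split, files in splits.items()}
```

The files are copied rather than moved, so the original download stays intact if the split needs to be redone with different ratios.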


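2. dataset.py

The idea is to subclass Dataset and implement __len__ and __getitem__, so that an instance can be indexed with an integer (dataset[i]) and returns the image and label at that position.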
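To illustrate the indexing protocol on its own, here is a torch-free stand-in. The class FileNameDataset is hypothetical and returns file names instead of loaded images; it only shows the two methods a map-style dataset needs and the rule that the first character of the file name gives the label.

```python
class FileNameDataset:
    """Torch-free stand-in showing the two methods a map-style dataset needs."""

    def __init__(self, file_names):
        self.file_names = list(file_names)

    def __len__(self):
        # Number of samples; a DataLoader uses this to know when an epoch ends
        return len(self.file_names)

    def __getitem__(self, index):
        # Return one (sample, label) pair; 0 = cat, 1 = dog
        name = self.file_names[index]
        label = 0 if name[0] == "0" else 1
        return name, label


dataset = FileNameDataset(["0_cat.1.jpg", "1_dog.1.jpg", "1_dog.2.jpg"])
print(len(dataset))  # 3
print(dataset[0])    # ('0_cat.1.jpg', 0)
```

The real class does the same thing, except that __getitem__ opens the image file and applies the transform before returning it.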

    # Import modules
    import os
    import warnings

    from PIL import Image
    from torch.utils.data import Dataset

    # Suppress warning messages
    warnings.filterwarnings("ignore")


    class MyDataset(Dataset):  # inherit from Dataset
        def __init__(self, path_dir, transform=None, train=True, test=True, val=True):
            self.path_dir = path_dir    # root of one split, e.g. r".\datasets\train"
            self.transform = transform  # preprocessing: resizing, cropping, normalizing, etc.
            # The three flags are kept for compatibility; every split is read the same way
            self.train = train
            self.test = test
            self.val = val
            # Collect the file names from the cats and dogs subfolders into one list
            self.images = os.listdir(os.path.join(self.path_dir, "cats"))
            self.images.extend(os.listdir(os.path.join(self.path_dir, "dogs")))

        def __len__(self):  # size of the whole dataset
            return len(self.images)

        def __getitem__(self, index):  # return the image and label at index
            image_index = self.images[index]  # file name of the image
            # Files were renamed so that cats start with "0" and dogs with "1";
            # convert that first character into a numeric label: cat-0, dog-1
            label = 0 if image_index[0] == "0" else 1
            folder = "cats" if label == 0 else "dogs"
            img_path = os.path.join(self.path_dir, folder, image_index)  # full image path
            img = Image.open(img_path).convert('RGB')  # read the image
            if self.transform is not None:
                img = self.transform(img)
            return img, label

3. model.py

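The model is a modified VGG16: one convolutional layer and two fully connected layers are added, giving 14 convolutional and 5 fully connected layers in total, and input images are resized to 448×448. Note that the class is nevertheless named VGG19 in the code.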
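The 448×448 input size determines the size of the first fully connected layer. As a quick check, the sketch below traces the spatial size through the network, assuming the layer layout of the listing that follows: every convolution is 3×3 with stride 1 and padding 1, and six of the fourteen blocks end with 2×2 max pooling. The helper names are made up for this example.

```python
def conv_out(size, kernel=3, stride=1, padding=1):
    """Output size of a convolution along one spatial dimension."""
    return (size + 2 * padding - kernel) // stride + 1


def pool_out(size, kernel=2, stride=2):
    """Output size of max pooling along one spatial dimension."""
    return (size - kernel) // stride + 1


# True marks the blocks conv0 ... conv13 that end with a max-pooling layer
HAS_POOL = [True, False, True, False, True, False, False,
            True, False, False, True, False, False, True]


def feature_map_size(input_size=448):
    size = input_size
    for has_pool in HAS_POOL:
        size = conv_out(size)      # 3x3, stride 1, padding 1 keeps the size
        if has_pool:
            size = pool_out(size)  # 2x2 pooling halves it
    return size


size = feature_map_size(448)
print(size, 512 * size * size)  # 7 25088
```

The six pooling steps reduce 448 to 7, so the flattened feature vector has 512 × 7 × 7 = 25088 elements, which is the input size of the first nn.Linear layer.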

    from torch import nn


    class VGG19(nn.Module):
        def __init__(self, num_classes=2):
            super(VGG19, self).__init__()  # initialise the parent class
            # Build the sub-modules in forward-pass order.
            # Fourteen convolutional blocks: each has a convolution and an activation,
            # and six of them end with max pooling. nn.Sequential groups each block.
            # inplace=True lets ReLU overwrite its input tensor to save memory.
            # [1,3,448,448]
            self.conv0 = nn.Sequential(
                nn.Conv2d(3, 32, (3, 3), (1, 1), (1, 1)),
                nn.ReLU(inplace=True),
                nn.MaxPool2d((2, 2), (2, 2))
            )
            # [1,32,224,224]
            self.conv1 = nn.Sequential(
                nn.Conv2d(32, 64, (3, 3), (1, 1), (1, 1)),
                nn.ReLU(inplace=True),
            )
            # [1,64,224,224]
            self.conv2 = nn.Sequential(
                nn.Conv2d(64, 64, (3, 3), (1, 1), (1, 1)),
                nn.ReLU(inplace=True),
                nn.MaxPool2d((2, 2), (2, 2))
            )
            # [1,64,112,112]
            self.conv3 = nn.Sequential(
                nn.Conv2d(64, 128, (3, 3), (1, 1), (1, 1)),
                nn.ReLU(inplace=True),
            )
            # [1,128,112,112]
            self.conv4 = nn.Sequential(
                nn.Conv2d(128, 128, (3, 3), (1, 1), (1, 1)),
                nn.ReLU(inplace=True),
                nn.MaxPool2d((2, 2), (2, 2))
            )
            # [1,128,56,56]
            self.conv5 = nn.Sequential(
                nn.Conv2d(128, 256, (3, 3), (1, 1), (1, 1)),
                nn.ReLU(inplace=True),
            )
            # [1,256,56,56]
            self.conv6 = nn.Sequential(
                nn.Conv2d(256, 256, (3, 3), (1, 1), (1, 1)),
                nn.ReLU(inplace=True),
            )
            # [1,256,56,56]
            self.conv7 = nn.Sequential(
                nn.Conv2d(256, 256, (3, 3), (1, 1), (1, 1)),
                nn.ReLU(inplace=True),
                nn.MaxPool2d((2, 2), (2, 2))
            )
            # [1,256,28,28]
            self.conv8 = nn.Sequential(
                nn.Conv2d(256, 512, (3, 3), (1, 1), (1, 1)),
                nn.ReLU(inplace=True)
            )
            # [1,512,28,28]
            self.conv9 = nn.Sequential(
                nn.Conv2d(512, 512, (3, 3), (1, 1), (1, 1)),
                nn.ReLU(inplace=True)
            )
            # [1,512,28,28]
            self.conv10 = nn.Sequential(
                nn.Conv2d(512, 512, (3, 3), (1, 1), (1, 1)),
                nn.ReLU(inplace=True),
                nn.MaxPool2d((2, 2), (2, 2))
            )
            # [1,512,14,14]
            self.conv11 = nn.Sequential(
                nn.Conv2d(512, 512, (3, 3), (1, 1), (1, 1)),
                nn.ReLU(inplace=True),
            )
            # [1,512,14,14]
            self.conv12 = nn.Sequential(
                nn.Conv2d(512, 512, (3, 3), (1, 1), (1, 1)),
                nn.ReLU(inplace=True),
            )
            # [1,512,14,14]-->[1,512,7,7]
            self.conv13 = nn.Sequential(
                nn.Conv2d(512, 512, (3, 3), (1, 1), (1, 1)),
                nn.ReLU(inplace=True),
                nn.MaxPool2d((2, 2), (2, 2))
            )

            # Five fully connected layers, with an activation and a dropout layer
            # between consecutive ones
            self.classfier = nn.Sequential(
                # [1*512*7*7]
                nn.Linear(1 * 512 * 7 * 7, 4096),
                nn.ReLU(True),
                nn.Dropout(),
                # 4096
                nn.Linear(4096, 4096),
                nn.ReLU(True),
                nn.Dropout(),
                # 4096-->1000
                nn.Linear(4096, 1000),
                nn.ReLU(True),
                nn.Dropout(),
                # 1000-->100
                nn.Linear(1000, 100),
                nn.ReLU(True),
                nn.Dropout(),
                nn.Linear(100, num_classes),
                nn.Softmax(dim=1)
            )

        # Forward pass
        def forward(self, x):
            # Fourteen convolutional blocks
            x = self.conv0(x)
            x = self.conv1(x)
            x = self.conv2(x)
            x = self.conv3(x)
            x = self.conv4(x)
            x = self.conv5(x)
            x = self.conv6(x)
            x = self.conv7(x)
            x = self.conv8(x)
            x = self.conv9(x)
            x = self.conv10(x)
            x = self.conv11(x)
            x = self.conv12(x)
            x = self.conv13(x)
            # Flatten each feature map into a vector, [1,512,7,7]-->[1,512*7*7]
            x = x.view(x.size(0), -1)
            # Five fully connected layers
            output = self.classfier(x)
            return output


    if __name__ == '__main__':
        import torch
        net = VGG19()
        print(net)
        dummy = torch.randn([1, 3, 448, 448])
        output = net(dummy)
        print(output)

4. config.py

    from torchvision import transforms as T

    # Dataset preparation
    trainFlag = True
    valFlag = True
    testFlag = False

    trainpath = r".\datasets\train"
    testpath = r".\datasets\test"
    valpath = r".\datasets\val"

    transform_ = T.Compose([
        T.Resize(448),      # resize the image so the shorter side is 448 px, keeping the aspect ratio
        T.CenterCrop(448),  # crop a 448*448 patch from the centre of the image
        T.ToTensor(),       # convert the PIL image to a tensor scaled to [0, 1]
        T.Normalize(mean=[.5, .5, .5], std=[.5, .5, .5])  # normalize to [-1, 1] with the given mean and std
    ])

    # Training parameters
    batchsize = 2
    lr = 0.001
    epochs = 100

5. predict.py

Load the trained model and run prediction on an image.

    from pytorch.Cats_Dogs.model import VGG19
    from PIL import Image
    import torch
    # The module path below is the author's own; adjust it to match your project layout
    from pytorch.Cats_Dogs.configs import transform_


    def predict_(model, img):
        # img is a PIL image; apply the transform defined in the config module
        img = transform_(img)
        # transform_ returns a tensor of shape (3, 448, 448), but the model was trained on
        # input of shape (batch_size, channel, H, W), so add a batch dimension of size 1
        device = next(model.parameters()).device
        img = img.unsqueeze(0).to(device)
        with torch.no_grad():  # gradients are not needed for inference
            output = model(img)
        _, prediction = torch.max(output, 1)
        # Convert the predicted class index from a tensor to a plain Python int
        return prediction.item()


    if __name__ == '__main__':
        img_path = r"*.jpg"
        img = Image.open(img_path).convert('RGB')  # read the image
        device = torch.device('cuda:0' if torch.cuda.is_available() else 'cpu')
        model = VGG19()
        # Use the same save_path that was used when the model was saved
        model.load_state_dict(torch.load("*.pth", map_location=device))
        model.to(device)
        # .eval() is required before predicting: it switches the module to evaluation mode
        model.eval()
        pred = predict_(model, img)
        print(pred)
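The script prints a bare class index. A small helper can make the result easier to read; the sketch below is an illustrative addition, with the names CLASS_NAMES and describe made up for this example. It takes the per-class probabilities for one image as a plain list (for instance the model output converted with .tolist()) and relies on the labeling used throughout the article, 0 for cat and 1 for dog.

```python
CLASS_NAMES = {0: "cat", 1: "dog"}  # matches the 0/1 file-name prefixes


def describe(probabilities):
    """Return (class name, probability) for the most likely class."""
    index = max(range(len(probabilities)), key=lambda i: probabilities[i])
    return CLASS_NAMES[index], probabilities[index]


print(describe([0.2, 0.8]))  # ('dog', 0.8)
```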
