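Table of Contents

- Preface
- I. FFmpeg Decoding Flow
- II. FFmpeg Transcoding Flow
- III. Codec API Details
  - 1. Using the Decoding API
  - 2. Using the Encoding API
- IV. Encoding Example
  - 1. Sample Source Code
  - 2. Output
- V. Decoding Example
  - 1. Sample Source Code
  - 2. Output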

前言

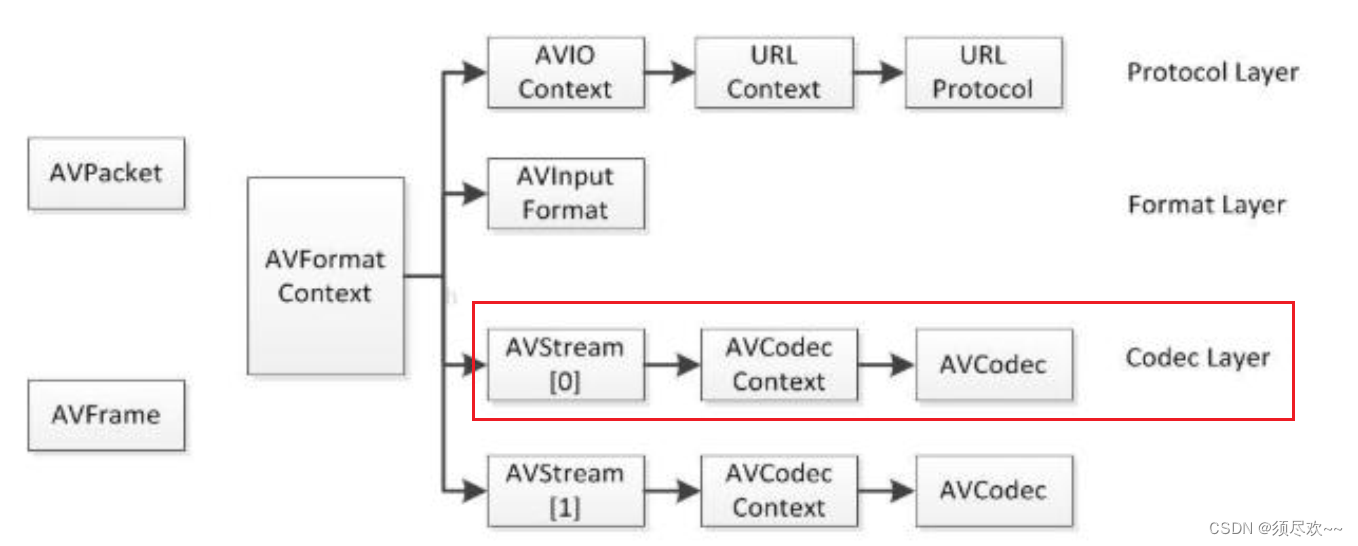

AVFormatContext 是一個貫穿始終的數據結構,很多函數都用到它作為參數,是輸入輸出相關信息的一個容器,本文講解 AVFormatContext 的編解碼層,主要包括三大數據結構:AVStream,AVCodecContex,AVCodec。

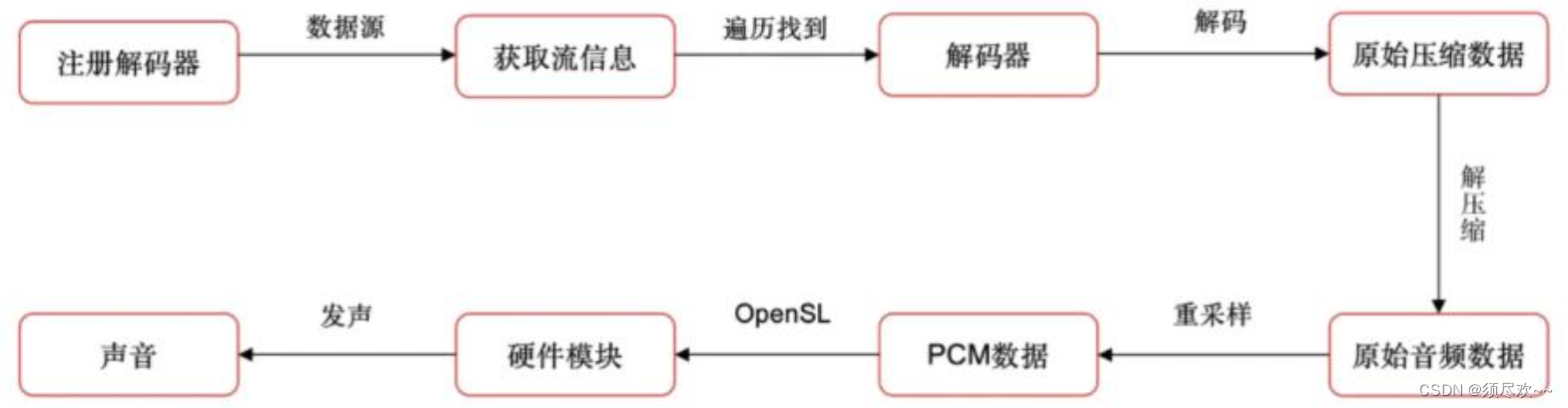

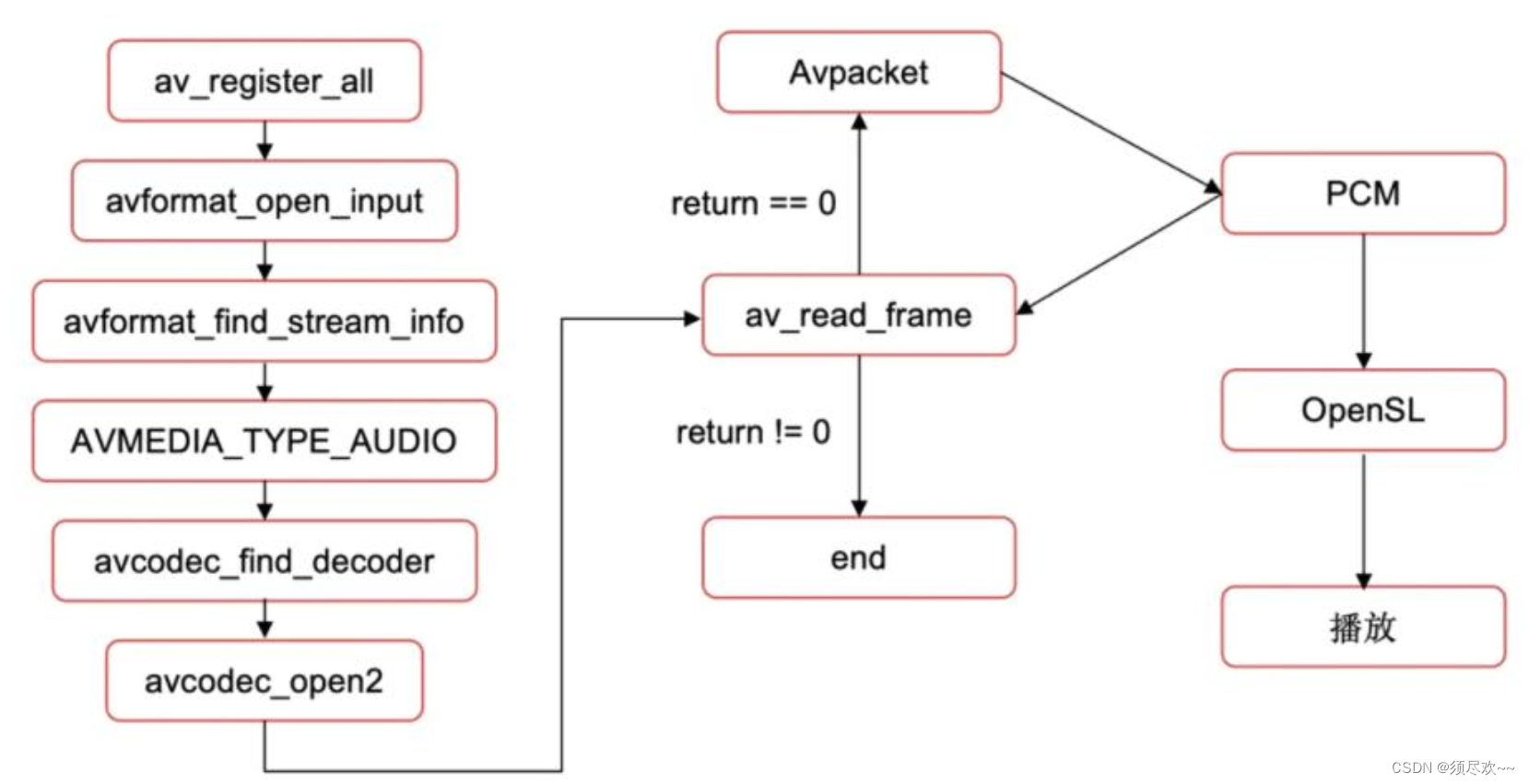

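I. FFmpeg Decoding Flow

Input file -> demux -> encoded packets -> decode packets -> decoded frames -> process frames -> encode -> encoded packets -> mux -> output file

The following API functions are involved:

- Register all container formats and codecs: av_register_all()
- Open the file: av_open_input_file()
- Extract stream information from the file: av_find_stream_info()
- Iterate over all streams and find the one whose type is CODEC_TYPE_VIDEO
- Find the matching decoder: avcodec_find_decoder()
- Open the codec: avcodec_open()
- Allocate memory for the decoded frame: avcodec_alloc_frame()
- Keep reading frame data from the stream: av_read_frame()
- Check the frame type; for video frames call: avcodec_decode_video()
- After decoding, close the decoder: avcodec_close()
- Close the input file: av_close_input_file()

These are the old API names. Current FFmpeg replaces them as follows, and the decoding example in Section V uses the newer functions: av_register_all() is no longer needed (deprecated in FFmpeg 4.0, removed in 5.0); av_open_input_file() becomes avformat_open_input(); av_find_stream_info() becomes avformat_find_stream_info(); CODEC_TYPE_VIDEO becomes AVMEDIA_TYPE_VIDEO (or use av_find_best_stream()); avcodec_open() becomes avcodec_open2(); avcodec_alloc_frame() becomes av_frame_alloc(); avcodec_decode_video() becomes the avcodec_send_packet() / avcodec_receive_frame() pair; avcodec_close() is covered by avcodec_free_context(); av_close_input_file() becomes avformat_close_input().

The program flow chart is shown below: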

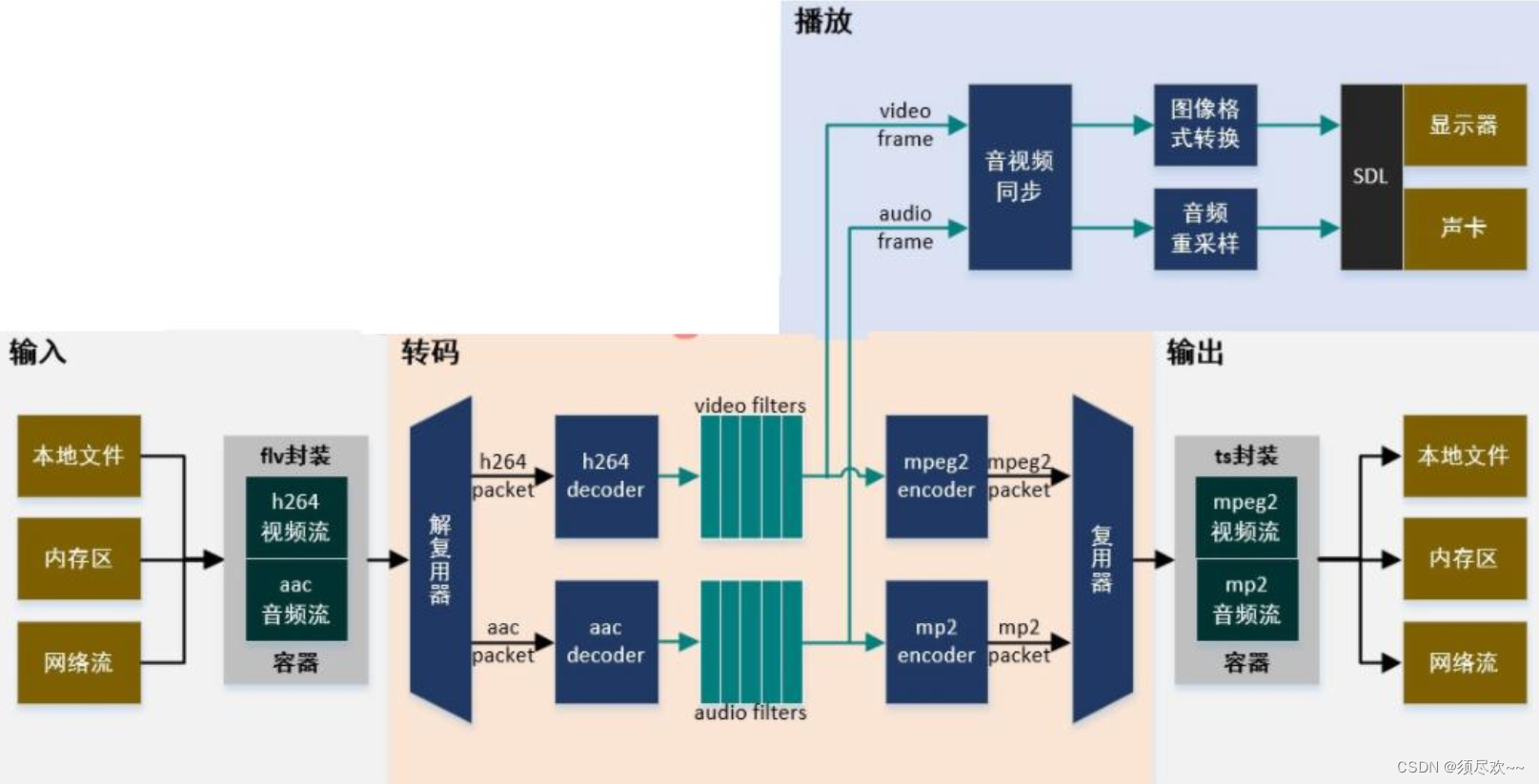

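II. FFmpeg Transcoding Flow

- The overall flow can be divided into four blocks: input, output, transcoding and playback.
- Transcoding involves the most processing steps; as the figure shows, it takes up a large share of the overall function diagram. Its core is decoding and encoding, but in a usable example program encoding and decoding are hard to separate from input and output.
- The demuxer feeds the decoder, the decoder outputs raw frames, and the raw frames can go through arbitrarily complex filtering. The encoder turns the filtered frames into encoded packets, and the muxer writes the encoded packets of all streams to the output file.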

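III. Codec API Details

- Decoding uses two functions: avcodec_send_packet() and avcodec_receive_frame().
- Encoding uses two functions: avcodec_send_frame() and avcodec_receive_packet().

1. Using the Decoding API

Notes on using avcodec_send_packet() and avcodec_receive_frame():

- ① Encoded packets are fed to the decoder in increasing dts order, and the decoder outputs raw frames in increasing pts order. In practice the decoder does not look at the input packets' dts (even wrong values do no harm); it simply processes packets in the order received, buffering and decoding as needed.
- ② When avcodec_receive_frame() outputs a frame, it sets frame->best_effort_timestamp based on various factors (this is documented). In practice frame->pts is set as well, usually copied directly from the corresponding packet.pts (this is not explicitly documented). Make sure every packet sent with avcodec_send_packet() carries a correct pts, because pts is what ties an encoded packet to its decoded frame.
- ③ When avcodec_receive_frame() outputs a frame, frame->pkt_dts is copied from the dts of the packet most recently sent with avcodec_send_packet(). If that packet is NULL (a flush packet), the decoder enters flush mode and frame->pkt_dts is AV_NOPTS_VALUE for the current and all remaining frames. Because the decoder buffers frames, the frame being output is generally not decoded from the packet just sent, so frame->pkt_dts has little practical meaning and can be ignored.
- ④ The first NULL packet passed to avcodec_send_packet() returns success; any further NULL returns AVERROR_EOF.
- ⑤ Sending NULL to avcodec_send_packet() several times does not discard the frames buffered in the decoder, whereas avcodec_flush_buffers() discards them immediately. So at the end of playback call avcodec_send_packet(NULL) to retrieve the remaining buffered frames, and on a seek or stream switch call avcodec_flush_buffers() to drop them.
- ⑥ The usual way to flush a decoder: call avcodec_send_packet(NULL) once (it returns success), then keep calling avcodec_receive_frame() until it returns AVERROR_EOF to retrieve all buffered frames. The call that returns AVERROR_EOF carries no valid data; it only signals the end.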
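The calling pattern in rules ④ to ⑥ can be tried out without FFmpeg. The sketch below is a toy model, not the libavcodec implementation: `ToyDecoder`, `toy_send_packet()`, `toy_receive_frame()`, `toy_decode_all()` and the `TOY_*` codes are hypothetical stand-ins for a decoder, avcodec_send_packet(), avcodec_receive_frame(), AVERROR(EAGAIN) and AVERROR_EOF. For simplicity the toy holds back two packets and returns frames in arrival order, whereas a real decoder reorders them into pts order; what it shows is the loop shape: drain after every send until "try again", then send NULL once and drain until EOF.

```c
#include <stddef.h>

/* Hypothetical stand-ins for AVERROR(EAGAIN) and AVERROR_EOF. */
enum { TOY_OK = 0, TOY_EAGAIN = -1, TOY_EOF = -2 };

/* Packets the toy decoder holds back before emitting a frame (hypothetical). */
#define TOY_DELAY 2

typedef struct {
    int queue[TOY_DELAY + 1]; /* buffered "packets", represented by their pts */
    int count;                /* number of buffered packets */
    int draining;             /* set once a NULL packet has been sent */
} ToyDecoder;

/* Models avcodec_send_packet(): a NULL packet starts flush mode. */
static int toy_send_packet(ToyDecoder *d, const int *pkt)
{
    if (d->draining)
        return TOY_EOF;              /* anything after the first NULL: EOF */
    if (pkt == NULL) {
        d->draining = 1;             /* first NULL: accepted, start flushing */
        return TOY_OK;
    }
    if (d->count == TOY_DELAY + 1)
        return TOY_EAGAIN;           /* full: the caller must receive first */
    d->queue[d->count++] = *pkt;
    return TOY_OK;
}

/* Models avcodec_receive_frame(): FIFO output once enough input is buffered. */
static int toy_receive_frame(ToyDecoder *d, int *frame_pts)
{
    if (d->count == 0)
        return d->draining ? TOY_EOF : TOY_EAGAIN;
    if (!d->draining && d->count <= TOY_DELAY)
        return TOY_EAGAIN;           /* needs more input before any output */
    *frame_pts = d->queue[0];
    for (int i = 1; i < d->count; i++)
        d->queue[i - 1] = d->queue[i];
    d->count--;
    return TOY_OK;
}

/* The usual pattern: after each send, receive until EAGAIN; when the input
 * runs out, send NULL once and receive until EOF.
 * `out` must have room for n entries. Returns the frame count or an error. */
static int toy_decode_all(const int *pkts, int n, int *out)
{
    ToyDecoder d = {{0}, 0, 0};
    int got = 0, pts, ret;

    for (int i = 0; i <= n; i++) {
        ret = toy_send_packet(&d, i < n ? &pkts[i] : NULL); /* i == n: flush */
        if (ret < 0)
            return ret;
        while ((ret = toy_receive_frame(&d, &pts)) == TOY_OK)
            out[got++] = pts;
        if (ret != TOY_EAGAIN && ret != TOY_EOF)
            return ret;              /* anything else would be a real error */
    }
    return got;
}
```

Because the loop always drains before sending again, the toy never reaches its "try again" branch in toy_send_packet(); real code should still be prepared for AVERROR(EAGAIN) from avcodec_send_packet().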

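2. Using the Encoding API

Notes on using avcodec_send_frame() and avcodec_receive_packet():

- ① Raw frames are fed to the encoder in increasing pts order, and the encoder outputs encoded packets in increasing dts order. The encoder uses the input frames' pts and ignores their dts; it simply processes frames in the order received, buffering and encoding as needed.
- ② When avcodec_receive_packet() outputs a packet, it sets packet.dts in the encoder's time base (the codec layer). In the author's tests the dts increased by 1 per packet, matching the pts step of 1 in a 1/25 time base. With B-frames the first dts cannot be 0, though: in the encoding example below packets come out with pts 0, 2, 1, and since dts must increase strictly and never exceed pts, the first dts has to be negative. Convert the dts to the container-layer (stream) time base before writing.
- ③ When avcodec_receive_packet() outputs a packet, packet.pts is copied from the corresponding frame.pts. This is also a codec-layer pts and must be converted to the container-layer pts before writing (for example with av_packet_rescale_ts()).
- ④ Sending a NULL frame with avcodec_send_frame() puts the encoder into flush mode.
- ⑤ The first NULL frame passed to avcodec_send_frame() returns success; any further NULL returns AVERROR_EOF.
- ⑥ Sending NULL to avcodec_send_frame() several times does not discard the frames buffered in the encoder, whereas avcodec_flush_buffers() drops them immediately (for encoders, avcodec_flush_buffers() only has an effect if the encoder declares AV_CODEC_CAP_ENCODER_FLUSH). So when encoding is finished call avcodec_send_frame(NULL) to retrieve the remaining buffered packets, and on a seek or stream switch call avcodec_flush_buffers() to drop them.
- ⑦ The usual way to flush an encoder: call avcodec_send_frame(NULL) once (it returns success), then keep calling avcodec_receive_packet() until it returns AVERROR_EOF to retrieve all buffered packets. The call that returns AVERROR_EOF carries no valid data; it only signals the end.
- ⑧ For audio: if the AV_CODEC_CAP_VARIABLE_FRAME_SIZE flag is set (in the read-only avctx->codec->capabilities), the encoder supports variable-size audio frames, and a frame sent to it may contain any number of samples. If the flag is not set, every audio frame's sample count (frame->nb_samples) must equal the encoder's frame size (avctx->frame_size), except for the last frame, which may contain fewer than avctx->frame_size samples.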
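Rule ⑧ boils down to simple arithmetic when the flag is not set. The sketch below uses a hypothetical helper, `split_into_frames()`, to compute how many frames of `frame_size` samples a buffer needs and how many samples end up in the (possibly shorter) last frame. AAC-LC, for instance, works with 1024-sample frames, as the nb_samples:1024 in the decoding log later in this article shows.

```c
/* Splits `total` samples into frames of `frame_size` samples, as an encoder
 * without AV_CODEC_CAP_VARIABLE_FRAME_SIZE requires. Returns the number of
 * frames and stores the size of the last frame (1..frame_size) in *last. */
static int split_into_frames(int total, int frame_size, int *last)
{
    if (total <= 0 || frame_size <= 0) {
        *last = 0;
        return 0;
    }
    int frames = (total + frame_size - 1) / frame_size; /* ceiling division */
    *last = total - (frames - 1) * frame_size;          /* only this one may be short */
    return frames;
}
```

For example, 2500 samples with a frame size of 1024 become frames of 1024, 1024 and 452 samples; every frame but the last gets nb_samples == frame_size.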

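IV. Encoding Example

The code below implements a simple video encoder: it generates 25 synthetic YUV420P frames (one second at 25 fps) and encodes them with the mpeg1video encoder into an MPEG video file.

1. Sample Source Code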

```c
#include <stdio.h>
#include <stdlib.h>
#include <string.h>

#include <libavcodec/avcodec.h>
#include <libavutil/opt.h>
#include <libavutil/imgutils.h>

static void encode(AVCodecContext *enc_ctx, AVFrame *frame, AVPacket *pkt,
                   FILE *outfile)
{
    int ret;

    /* send the frame to the encoder (frame == NULL starts flushing) */
    if (frame)
        printf("Send frame %3"PRId64"\n", frame->pts);

    ret = avcodec_send_frame(enc_ctx, frame);
    if (ret < 0) {
        fprintf(stderr, "Error sending a frame for encoding\n");
        exit(1);
    }

    /* drain every packet the encoder has ready */
    while (ret >= 0) {
        ret = avcodec_receive_packet(enc_ctx, pkt);
        if (ret == AVERROR(EAGAIN) || ret == AVERROR_EOF)
            return;
        else if (ret < 0) {
            fprintf(stderr, "Error during encoding\n");
            exit(1);
        }

        printf("Write packet %3"PRId64" (size=%5d)\n", pkt->pts, pkt->size);
        fwrite(pkt->data, 1, pkt->size, outfile);
        av_packet_unref(pkt);
    }
}

int main(int argc, char **argv)
{
    const char *filename, *codec_name;
    const AVCodec *codec;
    AVCodecContext *c = NULL;
    int i, ret, x, y;
    FILE *f;
    AVFrame *frame;
    AVPacket *pkt;
    uint8_t endcode[] = { 0, 0, 1, 0xb7 };

    /* Note: the output is a raw MPEG-1 video elementary stream, not an MP4
     * container; the .mp4 name is kept from the original run. */
    filename   = "./debug/test666.mp4";
    codec_name = "mpeg1video";

    /* find the mpeg1video encoder */
    codec = avcodec_find_encoder_by_name(codec_name);
    if (!codec) {
        fprintf(stderr, "Codec '%s' not found\n", codec_name);
        exit(1);
    }

    c = avcodec_alloc_context3(codec);
    if (!c) {
        fprintf(stderr, "Could not allocate video codec context\n");
        exit(1);
    }

    pkt = av_packet_alloc();
    if (!pkt)
        exit(1);

    /* put sample parameters */
    c->bit_rate = 400000;
    /* resolution must be a multiple of two */
    c->width    = 352;
    c->height   = 288;
    /* frames per second */
    c->time_base = (AVRational){1, 25};
    c->framerate = (AVRational){25, 1};

    /* emit one intra frame every ten frames
     * check frame pict_type before passing frame
     * to encoder, if frame->pict_type is AV_PICTURE_TYPE_I
     * then gop_size is ignored and the output of encoder
     * will always be I frame irrespective to gop_size
     */
    c->gop_size = 10;
    c->max_b_frames = 1;
    c->pix_fmt = AV_PIX_FMT_YUV420P;

    if (codec->id == AV_CODEC_ID_H264)
        av_opt_set(c->priv_data, "preset", "slow", 0);

    /* open it */
    ret = avcodec_open2(c, codec, NULL);
    if (ret < 0) {
        fprintf(stderr, "Could not open codec: %s\n", av_err2str(ret));
        exit(1);
    }

    f = fopen(filename, "wb");
    if (!f) {
        fprintf(stderr, "Could not open %s\n", filename);
        exit(1);
    }

    frame = av_frame_alloc();
    if (!frame) {
        fprintf(stderr, "Could not allocate video frame\n");
        exit(1);
    }
    frame->format = c->pix_fmt;
    frame->width  = c->width;
    frame->height = c->height;

    ret = av_frame_get_buffer(frame, 0);
    if (ret < 0) {
        fprintf(stderr, "Could not allocate the video frame data\n");
        exit(1);
    }

    /* encode 1 second of video */
    for (i = 0; i < 25; i++) {
        fflush(stdout);

        /* make sure the frame data is writable */
        ret = av_frame_make_writable(frame);
        if (ret < 0)
            exit(1);

        /* prepare a dummy image */
        /* Y */
        for (y = 0; y < c->height; y++)
            for (x = 0; x < c->width; x++)
                frame->data[0][y * frame->linesize[0] + x] = x + y + i * 3;

        /* Cb and Cr */
        for (y = 0; y < c->height / 2; y++) {
            for (x = 0; x < c->width / 2; x++) {
                frame->data[1][y * frame->linesize[1] + x] = 128 + y + i * 2;
                frame->data[2][y * frame->linesize[2] + x] = 64 + x + i * 5;
            }
        }

        frame->pts = i;

        /* encode the image */
        encode(c, frame, pkt, f);
    }

    /* flush the encoder */
    encode(c, NULL, pkt, f);

    /* add sequence end code to have a real MPEG file */
    if (codec->id == AV_CODEC_ID_MPEG1VIDEO || codec->id == AV_CODEC_ID_MPEG2VIDEO)
        fwrite(endcode, 1, sizeof(endcode), f);
    fclose(f);

    avcodec_free_context(&c);
    av_frame_free(&frame);
    av_packet_free(&pkt);

    return 0;
}
```
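The dummy-image loops fill one full-size Y plane and two chroma planes of half width and half height, which is the YUV420P layout. The hypothetical helper below computes the tightly packed size of one such frame (alignment 1, no row padding; av_frame_get_buffer() may pad rows, which is why the loops index through frame->linesize). In FFmpeg itself, av_image_get_buffer_size(AV_PIX_FMT_YUV420P, w, h, 1) performs this calculation; the sketch only mirrors the arithmetic, rounding chroma dimensions up for odd sizes.

```c
#include <stddef.h>

/* Tightly packed YUV420P frame size: a full Y plane plus U and V planes of
 * ceil(w/2) x ceil(h/2) samples each, one byte per sample. */
static size_t yuv420p_frame_size(int w, int h)
{
    size_t luma = (size_t)w * (size_t)h;
    size_t cw = (size_t)(w + 1) / 2;     /* chroma width, rounded up */
    size_t ch = (size_t)(h + 1) / 2;     /* chroma height, rounded up */
    return luma + 2 * cw * ch;
}
```

For the 352x288 frames above this gives 152064 bytes; for the 1280x720 video in the decoding example it gives 1382400 bytes, which is what one frame of the test.yuv output occupies.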

2. Output

```text
Send frame 0
Send frame 1
Write packet 0 (size= 6731)
Send frame 2
Write packet 2 (size= 3727)
Send frame 3
Write packet 1 (size= 1680)
Send frame 4
Write packet 4 (size= 2744)
Send frame 5
Write packet 3 (size= 1678)
Send frame 6
Write packet 6 (size= 2963)
Send frame 7
Write packet 5 (size= 1819)
Send frame 8
Write packet 8 (size= 3194)
Send frame 9
Write packet 7 (size= 1977)
Send frame 10
Write packet 10 (size=12306)
Send frame 11
Write packet 9 (size= 2231)
Send frame 12
Write packet 12 (size= 3762)
Send frame 13
Write packet 11 (size= 2039)
[mpeg1video @ 02562100] warning, clipping 1 dct coefficients to -255..255
....
[mpeg1video @ 02562100] warning, clipping 1 dct coefficients to -255..255
[mpeg1video @ 02562100] warning, clipping 1 dct coefficients to -255..255
Send frame 14
Write packet 14 (size= 3278)
Send frame 15
Write packet 13 (size= 1939)
Send frame 16
Write packet 16 (size= 3150)
Send frame 17
Write packet 15 (size= 1929)
Send frame 18
Write packet 18 (size= 3422)
Send frame 19
Write packet 17 (size= 2116)
Send frame 20
Write packet 20 (size=12236)
Send frame 21
Write packet 19 (size= 2055)
Send frame 22
Write packet 22 (size= 4054)
Send frame 23
Write packet 21 (size= 2048)
Send frame 24
Write packet 24 (size= 3191)
Write packet 23 (size= 1955)
[mpeg1video @ 02562100] warning, clipping 1 dct coefficients to -255..255
....
[mpeg1video @ 02562100] warning, clipping 1 dct coefficients to -255..255
```

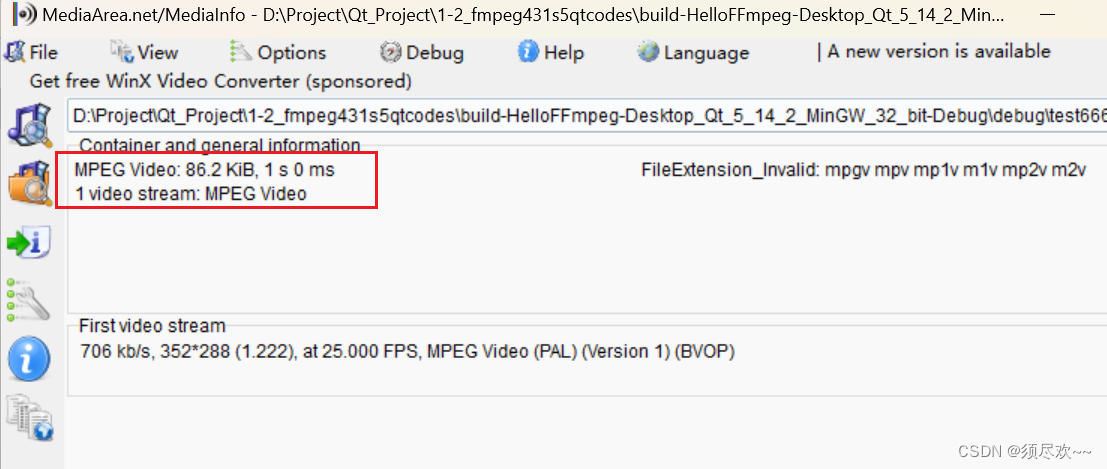
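The log shows packets leaving the encoder out of display order: 0, 2, 1, 4, 3, and so on. With max_b_frames = 1, each odd-numbered frame is consistent with being a B-frame that can only be coded after the following I/P frame it references, so that anchor is emitted first. The function below is a hypothetical model that reproduces this particular order for exactly one B-frame between anchors; it is not how the mpeg1video encoder decides frame types. The two much larger packets, 10 and 20, are consistent with gop_size = 10 placing I-frames there.

```c
/* Hypothetical model: coding (dts) order of n frames shown in display order
 * 0..n-1, with I/P anchors on even pts and one B-frame between anchors.
 * Writes the pts sequence to out (room for n entries), returns its length. */
static int model_decode_order(int n, int *out)
{
    int k = 0;
    if (n <= 0)
        return 0;
    out[k++] = 0;                        /* first frame: I, nothing to wait for */
    for (int anchor = 2; anchor < n; anchor += 2) {
        out[k++] = anchor;               /* the anchor is coded first... */
        out[k++] = anchor - 1;           /* ...then the B-frame that needs it */
    }
    if (n % 2 == 0)
        out[k++] = n - 1;                /* trailing frame with no later anchor */
    return k;
}
```

For n = 25 this yields 0, 2, 1, 4, 3, ..., 24, 23, the same sequence as the "Write packet" lines.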

在 debug 目錄下生成一個 test666.mp4,使用 MediaInfo 查看相關信息如下:

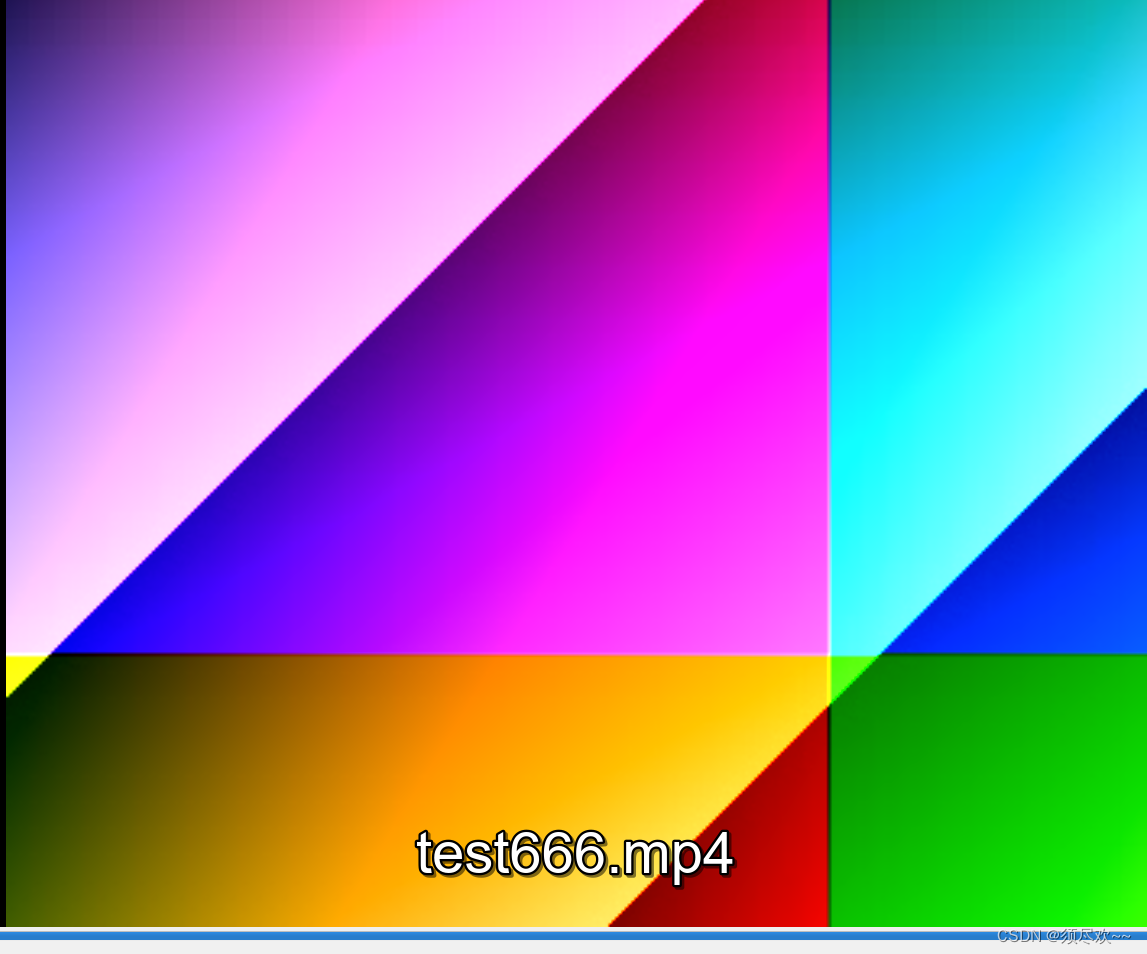

可以看到只有一路視頻流。使用 VLC 播放可以看到 1s 的視頻如下:

五、解碼案例實戰

下面代碼使用實現了一個視頻解碼,將指定的視頻 test.flv 解碼為原始視頻數據 yuv 和原始音頻數據 pcm

1、示例源碼

```c
/**
 * @file
 * Demuxing and decoding example.
 *
 * Show how to use the libavformat and libavcodec API to demux and
 * decode audio and video data.
 * @example demuxing_decoding.c
 */

/* Note: this code targets the FFmpeg 4.x-era API. av_init_packet() and
 * AVCodecContext.channels are deprecated in later releases
 * (av_packet_alloc() and ch_layout.nb_channels replace them). */

#include <libavutil/imgutils.h>
#include <libavutil/samplefmt.h>
#include <libavutil/timestamp.h>
#include <libavformat/avformat.h>

static AVFormatContext *fmt_ctx = NULL;
static AVCodecContext *video_dec_ctx = NULL, *audio_dec_ctx;
static int width, height;
static enum AVPixelFormat pix_fmt;
static AVStream *video_stream = NULL, *audio_stream = NULL;
static const char *src_filename = NULL;
static const char *video_dst_filename = NULL;
static const char *audio_dst_filename = NULL;
static FILE *video_dst_file = NULL;
static FILE *audio_dst_file = NULL;

static uint8_t *video_dst_data[4] = {NULL};
static int      video_dst_linesize[4];
static int video_dst_bufsize;

static int video_stream_idx = -1, audio_stream_idx = -1;
static AVFrame *frame = NULL;
static AVPacket pkt;
static int video_frame_count = 0;
static int audio_frame_count = 0;

static int output_video_frame(AVFrame *frame)
{
    if (frame->width != width || frame->height != height ||
        frame->format != pix_fmt) {
        /* To handle this change, one could call av_image_alloc again and
         * decode the following frames into another rawvideo file. */
        fprintf(stderr, "Error: Width, height and pixel format have to be "
                "constant in a rawvideo file, but the width, height or "
                "pixel format of the input video changed:\n"
                "old: width = %d, height = %d, format = %s\n"
                "new: width = %d, height = %d, format = %s\n",
                width, height, av_get_pix_fmt_name(pix_fmt),
                frame->width, frame->height,
                av_get_pix_fmt_name(frame->format));
        return -1;
    }

    printf("video_frame n:%d coded_n:%d\n",
           video_frame_count++, frame->coded_picture_number);

    /* copy decoded frame to destination buffer:
     * this is required since rawvideo expects non aligned data */
    av_image_copy(video_dst_data, video_dst_linesize,
                  (const uint8_t **)(frame->data), frame->linesize,
                  pix_fmt, width, height);

    /* write to rawvideo file */
    fwrite(video_dst_data[0], 1, video_dst_bufsize, video_dst_file);
    return 0;
}

static int output_audio_frame(AVFrame *frame)
{
    size_t unpadded_linesize = frame->nb_samples * av_get_bytes_per_sample(frame->format);
    /* frame->pts is in the stream time base (1/1000 for FLV); the original
     * printed it with audio_dec_ctx->time_base, which is why the pts values
     * in the log below look far too small. */
    printf("audio_frame n:%d nb_samples:%d pts:%s\n",
           audio_frame_count++, frame->nb_samples,
           av_ts2timestr(frame->pts, &audio_stream->time_base));

    /* Write the raw audio data samples of the first plane. This works
     * fine for packed formats (e.g. AV_SAMPLE_FMT_S16). However,
     * most audio decoders output planar audio, which uses a separate
     * plane of audio samples for each channel (e.g. AV_SAMPLE_FMT_S16P).
     * In other words, this code will write only the first audio channel
     * in these cases.
     * You should use libswresample or libavfilter to convert the frame
     * to packed data. */
    fwrite(frame->extended_data[0], 1, unpadded_linesize, audio_dst_file);
    return 0;
}

static int decode_packet(AVCodecContext *dec, const AVPacket *pkt)
{
    int ret = 0;

    // submit the packet to the decoder (pkt == NULL flushes it)
    ret = avcodec_send_packet(dec, pkt);
    if (ret < 0) {
        fprintf(stderr, "Error submitting a packet for decoding (%s)\n", av_err2str(ret));
        return ret;
    }

    // get all the available frames from the decoder
    while (ret >= 0) {
        ret = avcodec_receive_frame(dec, frame);
        if (ret < 0) {
            // those two return values are special and mean there is no output
            // frame available, but there were no errors during decoding
            if (ret == AVERROR_EOF || ret == AVERROR(EAGAIN))
                return 0;

            fprintf(stderr, "Error during decoding (%s)\n", av_err2str(ret));
            return ret;
        }

        // write the frame data to output file
        if (dec->codec->type == AVMEDIA_TYPE_VIDEO)
            ret = output_video_frame(frame);
        else
            ret = output_audio_frame(frame);

        av_frame_unref(frame);
        if (ret < 0)
            return ret;
    }

    return 0;
}

static int open_codec_context(int *stream_idx,
                              AVCodecContext **dec_ctx, AVFormatContext *fmt_ctx,
                              enum AVMediaType type)
{
    int ret, stream_index;
    AVStream *st;
    const AVCodec *dec = NULL;  /* const: avcodec_find_decoder() returns const AVCodec * since FFmpeg 5 */
    AVDictionary *opts = NULL;

    ret = av_find_best_stream(fmt_ctx, type, -1, -1, NULL, 0);
    if (ret < 0) {
        fprintf(stderr, "Could not find %s stream in input file '%s'\n",
                av_get_media_type_string(type), src_filename);
        return ret;
    } else {
        stream_index = ret;
        st = fmt_ctx->streams[stream_index];

        /* find decoder for the stream */
        dec = avcodec_find_decoder(st->codecpar->codec_id);
        if (!dec) {
            fprintf(stderr, "Failed to find %s codec\n",
                    av_get_media_type_string(type));
            return AVERROR(EINVAL);
        }

        /* Allocate a codec context for the decoder */
        *dec_ctx = avcodec_alloc_context3(dec);
        if (!*dec_ctx) {
            fprintf(stderr, "Failed to allocate the %s codec context\n",
                    av_get_media_type_string(type));
            return AVERROR(ENOMEM);
        }

        /* Copy codec parameters from input stream to output codec context */
        if ((ret = avcodec_parameters_to_context(*dec_ctx, st->codecpar)) < 0) {
            fprintf(stderr, "Failed to copy %s codec parameters to decoder context\n",
                    av_get_media_type_string(type));
            return ret;
        }

        /* Init the decoders */
        if ((ret = avcodec_open2(*dec_ctx, dec, &opts)) < 0) {
            fprintf(stderr, "Failed to open %s codec\n",
                    av_get_media_type_string(type));
            return ret;
        }
        *stream_idx = stream_index;
    }

    return 0;
}

static int get_format_from_sample_fmt(const char **fmt,
                                      enum AVSampleFormat sample_fmt)
{
    int i;
    struct sample_fmt_entry {
        enum AVSampleFormat sample_fmt; const char *fmt_be, *fmt_le;
    } sample_fmt_entries[] = {
        { AV_SAMPLE_FMT_U8,  "u8",    "u8"    },
        { AV_SAMPLE_FMT_S16, "s16be", "s16le" },
        { AV_SAMPLE_FMT_S32, "s32be", "s32le" },
        { AV_SAMPLE_FMT_FLT, "f32be", "f32le" },
        { AV_SAMPLE_FMT_DBL, "f64be", "f64le" },
    };
    *fmt = NULL;

    for (i = 0; i < FF_ARRAY_ELEMS(sample_fmt_entries); i++) {
        struct sample_fmt_entry *entry = &sample_fmt_entries[i];
        if (sample_fmt == entry->sample_fmt) {
            *fmt = AV_NE(entry->fmt_be, entry->fmt_le);
            return 0;
        }
    }

    fprintf(stderr,
            "sample format %s is not supported as output format\n",
            av_get_sample_fmt_name(sample_fmt));
    return -1;
}

int main(int argc, char **argv)
{
    int ret = 0;

    src_filename       = "./debug/test.flv";
    video_dst_filename = "./debug/test.yuv";
    audio_dst_filename = "./debug/test.pcm";

    /* open input file, and allocate format context */
    if (avformat_open_input(&fmt_ctx, src_filename, NULL, NULL) < 0) {
        fprintf(stderr, "Could not open source file %s\n", src_filename);
        exit(1);
    }

    /* retrieve stream information */
    if (avformat_find_stream_info(fmt_ctx, NULL) < 0) {
        fprintf(stderr, "Could not find stream information\n");
        exit(1);
    }

    if (open_codec_context(&video_stream_idx, &video_dec_ctx, fmt_ctx, AVMEDIA_TYPE_VIDEO) >= 0) {
        video_stream = fmt_ctx->streams[video_stream_idx];

        video_dst_file = fopen(video_dst_filename, "wb");
        if (!video_dst_file) {
            fprintf(stderr, "Could not open destination file %s\n", video_dst_filename);
            ret = 1;
            goto end;
        }

        /* allocate image where the decoded image will be put */
        width   = video_dec_ctx->width;
        height  = video_dec_ctx->height;
        pix_fmt = video_dec_ctx->pix_fmt;
        ret = av_image_alloc(video_dst_data, video_dst_linesize,
                             width, height, pix_fmt, 1);
        if (ret < 0) {
            fprintf(stderr, "Could not allocate raw video buffer\n");
            goto end;
        }
        video_dst_bufsize = ret;
    }

    if (open_codec_context(&audio_stream_idx, &audio_dec_ctx, fmt_ctx, AVMEDIA_TYPE_AUDIO) >= 0) {
        audio_stream = fmt_ctx->streams[audio_stream_idx];
        audio_dst_file = fopen(audio_dst_filename, "wb");
        if (!audio_dst_file) {
            fprintf(stderr, "Could not open destination file %s\n", audio_dst_filename);
            ret = 1;
            goto end;
        }
    }

    /* dump input information to stderr */
    av_dump_format(fmt_ctx, 0, src_filename, 0);

    if (!audio_stream && !video_stream) {
        fprintf(stderr, "Could not find audio or video stream in the input, aborting\n");
        ret = 1;
        goto end;
    }

    frame = av_frame_alloc();
    if (!frame) {
        fprintf(stderr, "Could not allocate frame\n");
        ret = AVERROR(ENOMEM);
        goto end;
    }

    /* initialize packet, set data to NULL, let the demuxer fill it */
    av_init_packet(&pkt);
    pkt.data = NULL;
    pkt.size = 0;

    if (video_stream)
        printf("Demuxing video from file '%s' into '%s'\n", src_filename, video_dst_filename);
    if (audio_stream)
        printf("Demuxing audio from file '%s' into '%s'\n", src_filename, audio_dst_filename);

    /* read frames from the file */
    while (av_read_frame(fmt_ctx, &pkt) >= 0) {
        // check if the packet belongs to a stream we are interested in, otherwise
        // skip it
        if (pkt.stream_index == video_stream_idx)
            ret = decode_packet(video_dec_ctx, &pkt);
        else if (pkt.stream_index == audio_stream_idx)
            ret = decode_packet(audio_dec_ctx, &pkt);
        av_packet_unref(&pkt);
        if (ret < 0)
            break;
    }

    /* flush the decoders */
    if (video_dec_ctx)
        decode_packet(video_dec_ctx, NULL);
    if (audio_dec_ctx)
        decode_packet(audio_dec_ctx, NULL);

    printf("Demuxing succeeded.\n");

    if (video_stream) {
        printf("Play the output video file with the command:\n"
               "ffplay -f rawvideo -pix_fmt %s -video_size %dx%d %s\n",
               av_get_pix_fmt_name(pix_fmt), width, height,
               video_dst_filename);
    }

    if (audio_stream) {
        enum AVSampleFormat sfmt = audio_dec_ctx->sample_fmt;
        int n_channels = audio_dec_ctx->channels;
        const char *fmt;

        if (av_sample_fmt_is_planar(sfmt)) {
            const char *packed = av_get_sample_fmt_name(sfmt);
            printf("Warning: the sample format the decoder produced is planar "
                   "(%s). This example will output the first channel only.\n",
                   packed ? packed : "?");
            sfmt = av_get_packed_sample_fmt(sfmt);
            n_channels = 1;
        }

        if ((ret = get_format_from_sample_fmt(&fmt, sfmt)) < 0)
            goto end;

        printf("Play the output audio file with the command:\n"
               "ffplay -f %s -ac %d -ar %d %s\n",
               fmt, n_channels, audio_dec_ctx->sample_rate,
               audio_dst_filename);
    }

end:
    avcodec_free_context(&video_dec_ctx);
    avcodec_free_context(&audio_dec_ctx);
    avformat_close_input(&fmt_ctx);
    if (video_dst_file)
        fclose(video_dst_file);
    if (audio_dst_file)
        fclose(audio_dst_file);
    av_frame_free(&frame);
    av_free(video_dst_data[0]);

    return ret < 0;
}
```
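As the comment in output_audio_frame() notes, only the first plane is written, so for a planar track (the dump in the output below shows 5.1 fltp AAC) test.pcm ends up with a single channel. For fltp specifically, converting to packed float is a plain interleave; libswresample remains the general tool for format, layout and rate conversion. A minimal FFmpeg-free sketch, where `interleave_f32()` is a hypothetical helper:

```c
#include <stddef.h>

/* Converts planar float audio (one array per channel, the AV_SAMPLE_FMT_FLTP
 * layout) into packed float (AV_SAMPLE_FMT_FLT): c0 c1 ... c0 c1 ...
 * `out` must hold nb_samples * channels floats. */
static void interleave_f32(const float *const *planes, int channels,
                           int nb_samples, float *out)
{
    for (int s = 0; s < nb_samples; s++)
        for (int c = 0; c < channels; c++)
            out[(size_t)s * channels + c] = planes[c][s];
}
```

Inside output_audio_frame() one could pass (const float *const *)frame->extended_data, the channel count and frame->nb_samples, write all nb_samples × channels floats, and then play the file with -ac 6 instead of -ac 1.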

2. Output

```text
Demuxing video from file './debug/test.flv' into './debug/test.yuv'
Demuxing audio from file './debug/test.flv' into './debug/test.pcm'
video_frame n:0 coded_n:0
audio_frame n:0 nb_samples:1024 pts:0
audio_frame n:1 nb_samples:1024 pts:0.0004375
video_frame n:1 coded_n:1
audio_frame n:2 nb_samples:1024 pts:0.000895833
audio_frame n:3 nb_samples:1024 pts:0.00133333
video_frame n:2 coded_n:2
audio_frame n:4 nb_samples:1024 pts:0.00177083
audio_frame n:5 nb_samples:1024 pts:0.00222917
....
video_frame n:2931 coded_n:2931
audio_frame n:5496 nb_samples:1024 pts:2.44267
audio_frame n:5497 nb_samples:1024 pts:2.4431
audio_frame n:5498 nb_samples:1024 pts:2.44356
Demuxing succeeded.
Play the output video file with the command:
ffplay -f rawvideo -pix_fmt yuv420p -video_size 1280x720 ./debug/test.yuv
Warning: the sample format the decoder produced is planar (fltp). This example will output the first channel only.
Play the output audio file with the command:
ffplay -f f32le -ac 1 -ar 48000 ./debug/test.pcm
Input #0, flv, from './debug/test.flv':
  Metadata:
    encoder : Lavf58.45.100
  Duration: 00:01:57.31, start: 0.000000, bitrate: 1442 kb/s
    Stream #0:0: Video: h264 (Main), yuv420p(progressive), 1280x720 [SAR 1:1 DAR 16:9], 1048 kb/s, 25 fps, 25 tbr, 1k tbn, 50 tbc
    Stream #0:1: Audio: aac (LC), 48000 Hz, 5.1, fltp, 383 kb/s
```

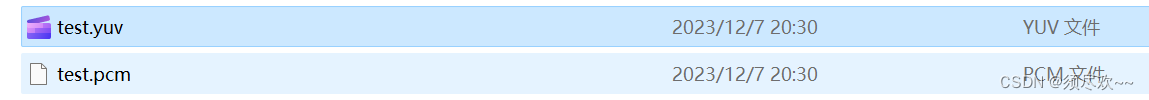
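A note on the audio pts values in the log: they are consistent with frame pts in the FLV stream time base (1/1000, i.e. milliseconds) being printed with a decoder time base of 1/48000. For instance 0.0004375 × 48000 = 21, and 21 ms is about one AAC frame (1024 / 48000 ≈ 21.3 ms); likewise the last value, 2.44356 × 48000 ≈ 117291 ms, roughly matches the 1:57.31 duration. In FFmpeg, conversion between time bases is done by av_rescale_q() and av_packet_rescale_ts(). The sketch below is a plain-C model of that arithmetic: `Rational`, `rescale_ts()` and `ts_to_seconds()` are hypothetical stand-ins, not FFmpeg API, and the model assumes values small enough that the 64-bit products do not overflow.

```c
#include <stdint.h>

/* A time base such as 1/1000 (num = 1, den = 1000); den and num must be > 0. */
typedef struct { int64_t num, den; } Rational;

/* Converts ts from time base `from` to time base `to`, rounding to nearest
 * with halfway cases away from zero (the rounding av_rescale_q() uses). */
static int64_t rescale_ts(int64_t ts, Rational from, Rational to)
{
    int64_t n = ts * from.num * to.den;
    int64_t d = from.den * to.num;
    return n >= 0 ? (n + d / 2) / d : -((-n + d / 2) / d);
}

/* Timestamp in seconds, the value av_ts2timestr() formats. */
static double ts_to_seconds(int64_t ts, Rational tb)
{
    return (double)ts * (double)tb.num / (double)tb.den;
}
```

With the stream time base, ts_to_seconds(21, (Rational){1, 1000}) gives 0.021 s, the real position of the second audio frame. On the encoding side, rescale_ts(1, (Rational){1, 25}, (Rational){1, 1000}) gives 40, i.e. frame 1 at 25 fps lands at 40 ms in a millisecond-based container.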

在 debug 目錄下生成了原始視頻數據 test.yuv 以及原始音頻數據 test.pcm

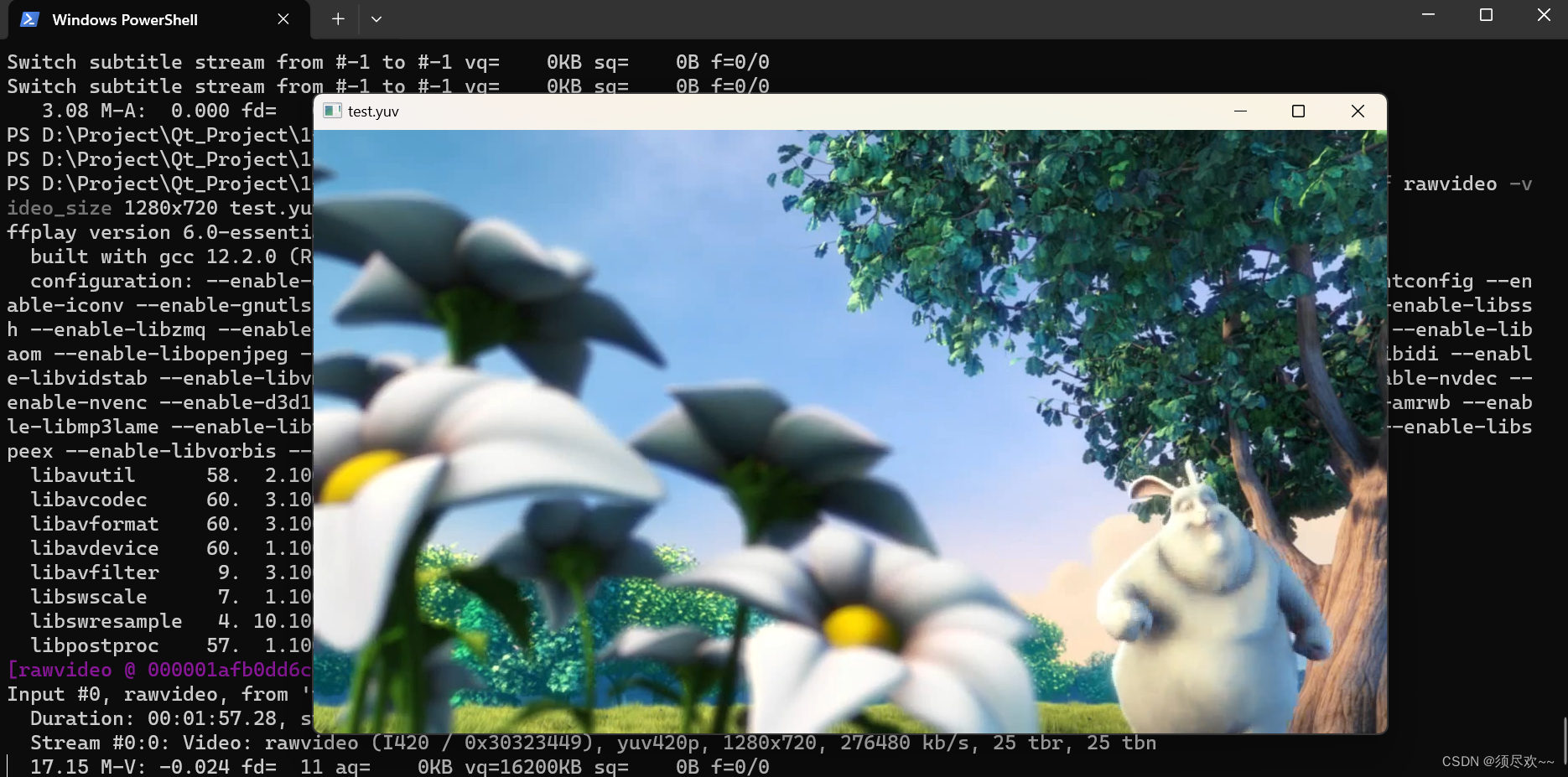

在 debug 目錄下打開終端使用 ffplay 播放 test.yuv:

ffplay -f rawvideo -video_size 1280x720 test.yuv

此時可以看到原視頻中的視頻(無音頻)。

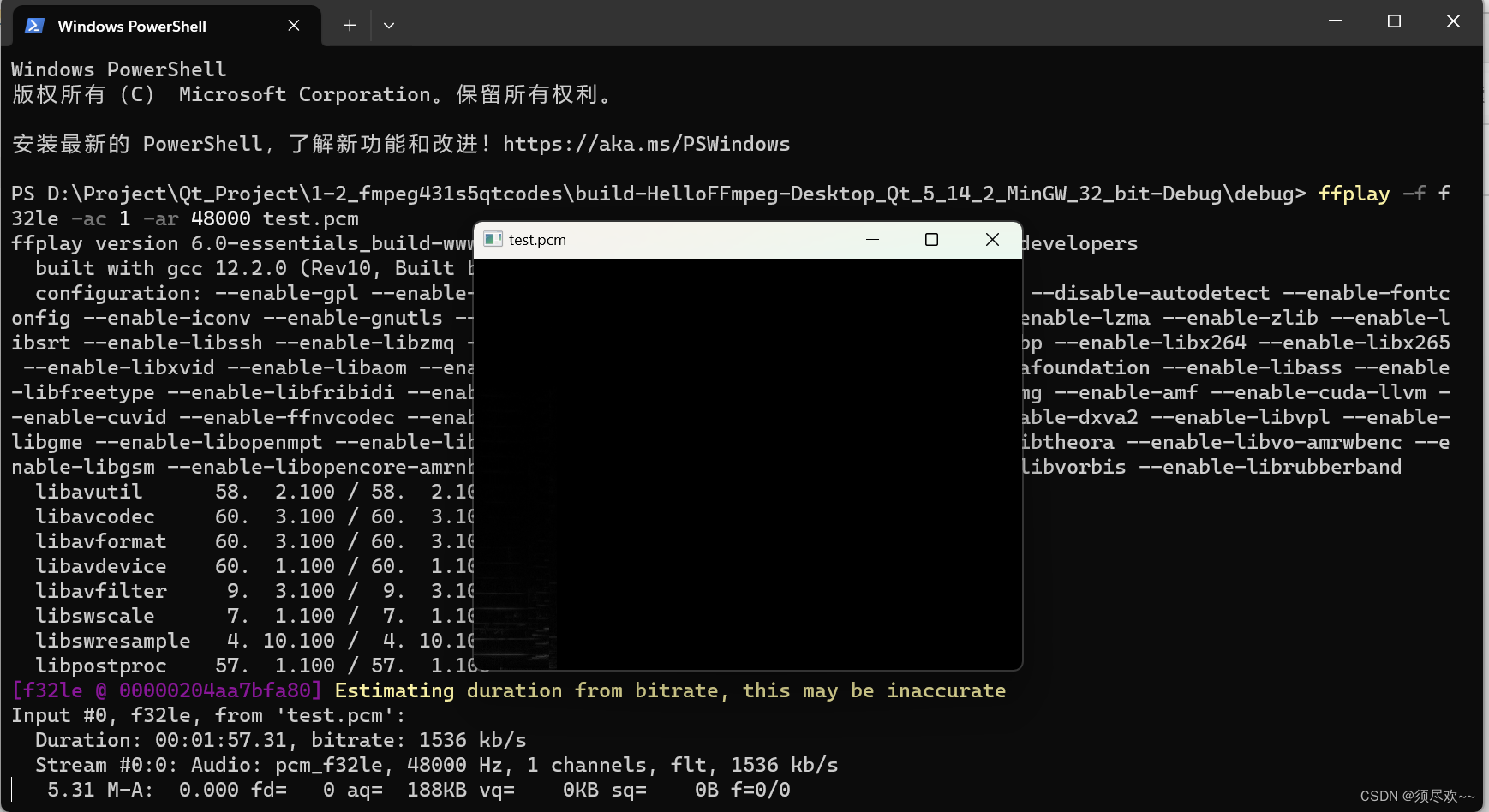

Open a terminal in the debug directory and play test.pcm with ffplay:

```shell
ffplay -f f32le -ac 1 -ar 48000 test.pcm
```

This plays the audio from the original file (without video).
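Raw PCM carries no header, so ffplay has to be told the sample format (-f f32le: 32-bit little-endian float), the channel count (-ac) and the sample rate (-ar); if any of them is wrong, playback is garbled or runs at the wrong speed. The hypothetical helpers below do the matching arithmetic. For the single-channel dump above, one second takes 48000 × 1 × 4 = 192000 bytes, so dividing the size of test.pcm by that figure should give roughly the 1:57.31 duration reported in the log.

```c
/* Bytes per second of raw PCM with the given parameters. */
static long long pcm_bytes_per_second(int sample_rate, int channels,
                                      int bytes_per_sample)
{
    return (long long)sample_rate * channels * bytes_per_sample;
}

/* Duration in seconds of a raw PCM file of file_size bytes (0 if invalid). */
static double pcm_duration_seconds(long long file_size, int sample_rate,
                                   int channels, int bytes_per_sample)
{
    long long bps = pcm_bytes_per_second(sample_rate, channels, bytes_per_sample);
    return bps > 0 ? (double)file_size / (double)bps : 0.0;
}
```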

My QQ: 2442391036. Feel free to get in touch!
