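Mixed Chinese-English output is a very common requirement in text-to-speech (TTS) projects, especially for technical articles and technical videos, where Chinese text routinely contains plenty of English terms. We obviously don't want an AI voiceover that can only read Chinese. Older versions of Bert-vits2 (below 2.0) do not support English training or inference, but with the updated base model, V2.0 and later support a mixed Chinese-English inference (mix) mode.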

Once again, Taylor Swift ("Meimei" among Chinese fans) serves as the example:

https://www.bilibili.com/video/BV1bB4y1R7Nu/

Cut a 30-second clip of her speaking English to use as the audio material.

Bert-vits2 English material preparation

First, clone the project:

```bash
git clone https://github.com/v3ucn/Bert-VITS2_V210.git
cd Bert-VITS2_V210
```

Install the dependencies:

```bash
pip3 install -r requirements.txt
```

Put the audio material in the Data/meimei_en/raw directory; the `en` here marks an English-speaking character.

Then slice the material:

```bash
python3 audio_slicer.py
```
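The internals of audio_slicer.py are not covered here. Conceptually, slicers of this kind cut a long recording wherever there is a long enough stretch of silence. A minimal sketch of that idea, assuming mono float samples in [-1, 1] and frame-level RMS energy; `slice_on_silence` and its thresholds are hypothetical and are not the script's actual parameters:

```python
import math
from typing import List, Optional, Tuple


def slice_on_silence(
    samples: List[float],
    sample_rate: int,
    frame_ms: int = 20,
    threshold_db: float = -40.0,
    min_silence_ms: int = 300,
) -> List[Tuple[int, int]]:
    """Return (start, end) sample indices of non-silent segments.

    Hypothetical helper: a frame counts as silent when its RMS level is
    below threshold_db (dBFS); a run of silent frames lasting at least
    min_silence_ms closes the current segment.
    """
    frame_len = max(1, sample_rate * frame_ms // 1000)
    min_silent_frames = max(1, min_silence_ms // frame_ms)

    segments: List[Tuple[int, int]] = []
    seg_start: Optional[int] = None  # where the current voiced segment began
    silent_run = 0                   # consecutive silent frames seen so far
    last_voiced_end = 0              # end index of the most recent voiced frame

    for start in range(0, len(samples), frame_len):
        frame = samples[start:start + frame_len]
        rms = math.sqrt(sum(x * x for x in frame) / len(frame))
        level_db = 20 * math.log10(rms) if rms > 0 else -math.inf
        if level_db >= threshold_db:
            if seg_start is None:
                seg_start = start
            silent_run = 0
            last_voiced_end = start + len(frame)
        else:
            silent_run += 1
            # A long enough pause ends the segment at the last voiced frame
            if seg_start is not None and silent_run >= min_silent_frames:
                segments.append((seg_start, last_voiced_end))
                seg_start = None
    if seg_start is not None:
        segments.append((seg_start, last_voiced_end))
    return segments
```

Short pauses inside a sentence stay within one segment; only pauses of at least `min_silence_ms` split the audio. A practical slicer would usually also pad each segment slightly and enforce minimum and maximum clip lengths.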

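Next, transcribe the clips and resample the audio:

```bash
python3 short_audio_transcribe.py
```

Speech recognition again uses Whisper, with the medium model selected by default. If GPU memory is short, change the `--whisper_size` default in short_audio_transcribe.py (or pass a smaller size such as `--whisper_size small` on the command line):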

```python
import whisper
import os
import json
import torchaudio
import argparse
import torch

from config import config

lang2token = {
    "zh": "ZH|",
    "ja": "JP|",
    "en": "EN|",
}


def transcribe_one(audio_path):
    # load audio and pad/trim it to fit 30 seconds
    audio = whisper.load_audio(audio_path)
    audio = whisper.pad_or_trim(audio)

    # make log-Mel spectrogram and move to the same device as the model
    # (`model` is the module-level Whisper model loaded in __main__)
    mel = whisper.log_mel_spectrogram(audio).to(model.device)

    # detect the spoken language
    _, probs = model.detect_language(mel)
    lang = max(probs, key=probs.get)
    print(f"Detected language: {lang}")

    # decode the audio
    options = whisper.DecodingOptions(beam_size=5)
    result = whisper.decode(model, mel, options)

    # print the recognized text
    print(result.text)
    return lang, result.text


if __name__ == "__main__":
    parser = argparse.ArgumentParser()
    parser.add_argument("--languages", default="CJ")
    # Whisper model size; try "small" or "base" if GPU memory is limited
    parser.add_argument("--whisper_size", default="medium")
    args = parser.parse_args()
    if args.languages == "CJE":
        lang2token = {"zh": "ZH|", "ja": "JP|", "en": "EN|"}
    elif args.languages == "CJ":
        lang2token = {"zh": "ZH|", "ja": "JP|"}
    elif args.languages == "C":
        lang2token = {"zh": "ZH|"}

    # load the selected Whisper model; transcribe_one() uses this global
    model = whisper.load_model(args.whisper_size)
    # ... (rest of the script omitted)
```

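In the excerpt, `lang2token` only contains an `en` entry when `--languages CJE` is passed (the default is `CJ`), so if English clips are missing from the output, rerun with `--languages CJE`. The resulting transcription list: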

```text
Data\meimei_en\raw/meimei_en/processed_0.wav|meimei_en|EN|But these were songs that didn't make it on the album.
Data\meimei_en\raw/meimei_en/processed_1.wav|meimei_en|EN|because I wanted to save them for the next album. And then it turned out the next album was like a whole different thing. And so they get left behind.
Data\meimei_en\raw/meimei_en/processed_2.wav|meimei_en|EN|and you always think back on these songs, and you're like.
Data\meimei_en\raw/meimei_en/processed_3.wav|meimei_en|EN|What would have happened? I wish people could hear this.
Data\meimei_en\raw/meimei_en/processed_4.wav|meimei_en|EN|but it belongs in that moment in time.
Data\meimei_en\raw/meimei_en/processed_5.wav|meimei_en|EN|So, now that I get to go back and revisit my old work,
Data\meimei_en\raw/meimei_en/processed_6.wav|meimei_en|EN|I've dug up those songs.
Data\meimei_en\raw/meimei_en/processed_7.wav|meimei_en|EN|from the crypt they were in.
Data\meimei_en\raw/meimei_en/processed_8.wav|meimei_en|EN|And I have like, I've reached out to artists that I love and said, do you want to?
Data\meimei_en\raw/meimei_en/processed_9.wav|meimei_en|EN|do you want to sing this with me? You know, Phoebe Bridgers is one of my favorite artists.
```

As you can see, each slice now has its corresponding English transcript.
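Each line has the shape `wav_path|speaker|LANG|text`, where the `LANG|` prefix comes from the `lang2token` table in short_audio_transcribe.py. A minimal sketch of how such a line can be assembled from Whisper's detected language, assuming clips in unsupported languages are simply skipped; `format_annotation_line` is a hypothetical helper, not a function in the repository:

```python
from typing import Dict, Optional

# Same mapping as the CJE case in short_audio_transcribe.py
LANG2TOKEN: Dict[str, str] = {"zh": "ZH|", "ja": "JP|", "en": "EN|"}


def format_annotation_line(
    wav_path: str,
    speaker: str,
    detected_lang: str,
    text: str,
    lang2token: Dict[str, str] = LANG2TOKEN,
) -> Optional[str]:
    """Build one 'path|speaker|LANG|text' line, or None for an unsupported language."""
    token = lang2token.get(detected_lang)
    if token is None:
        return None  # Whisper detected a language the table does not cover
    # token already ends with '|', so it joins the language tag and the text;
    # Whisper output often starts with a space, hence strip()
    return f"{wav_path}|{speaker}|{token}{text.strip()}"
```

For example, `format_annotation_line("Data/meimei_en/raw/meimei_en/processed_6.wav", "meimei_en", "en", " I've dug up those songs.")` yields a line in the same format as the list above.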

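Next come annotation and feature generation. preprocess_text.py converts the transcripts into phoneme annotations and writes the training and validation lists, and the other three scripts generate the per-clip emotion (.emo.npy), spectrogram (.spec.pt), and BERT (.bert.pt) files:

```bash
python3 preprocess_text.py
python3 emo_gen.py
python3 spec_gen.py
python3 bert_gen.py
```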
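Among other things, preprocess_text.py produces the train.list and val.list files found in each character's filelists directory. A minimal sketch of a per-speaker split, assuming a fixed number of validation lines per speaker and a seeded shuffle; `split_train_val` and `val_per_speaker` are hypothetical names, and the script's own rules may differ:

```python
import random
from collections import defaultdict
from typing import Dict, List, Tuple


def split_train_val(
    lines: List[str], val_per_speaker: int = 2, seed: int = 1234
) -> Tuple[List[str], List[str]]:
    """Split 'path|speaker|...' lines into train and validation lists per speaker."""
    by_speaker: Dict[str, List[str]] = defaultdict(list)
    for line in lines:
        speaker = line.split("|")[1]
        by_speaker[speaker].append(line)

    rng = random.Random(seed)  # fixed seed so the split is reproducible
    train: List[str] = []
    val: List[str] = []
    for speaker_lines in by_speaker.values():
        shuffled = speaker_lines[:]
        rng.shuffle(shuffled)
        # Always leave at least one line for training, even for tiny speakers
        n_val = min(val_per_speaker, max(0, len(shuffled) - 1))
        val.extend(shuffled[:n_val])
        train.extend(shuffled[n_val:])
    return train, val
```

With only about ten clips per character, as here, a small per-speaker validation count keeps most of the material available for training.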

When these finish, inspect the English training set:

```text
Data\meimei_en\raw/meimei_en/processed_3.wav|meimei_en|EN|What would have happened? I wish people could hear this.|_ w ah t w uh d hh ae V hh ae p ah n d ? ay w ih sh p iy p ah l k uh d hh ih r dh ih s . _|0 0 2 0 0 2 0 0 2 0 0 2 0 1 0 0 0 2 0 2 0 0 2 0 1 0 0 2 0 0 2 0 0 2 0 0 0|1 3 3 3 6 1 1 3 5 3 3 3 1 1
Data\meimei_en\raw/meimei_en/processed_6.wav|meimei_en|EN|I've dug up those songs.|_ ay V d ah g ah p dh ow z s ao ng z . _|0 2 0 0 2 0 2 0 0 2 0 0 2 0 0 0 0|1 1 1 0 3 2 3 4 1 1
Data\meimei_en\raw/meimei_en/processed_5.wav|meimei_en|EN|So, now that I get to go back and revisit my old work,|_ s ow , n aw dh ae t ay g eh t t uw g ow b ae k ae n d r iy V ih z ih t m ay ow l d w er k , _|0 0 2 0 0 2 0 2 0 2 0 2 0 0 2 0 2 0 2 0 2 0 0 0 1 0 2 0 1 0 0 2 2 0 0 0 2 0 0 0|1 2 1 2 3 1 3 2 2 3 3 7 2 3 3 1 1
Data\meimei_en\raw/meimei_en/processed_1.wav|meimei_en|EN|because I wanted to save them for the next album. And then it turned out the next album was like a whole different thing. And so they get left behind.|_ b ih k ao z ay w aa n t ah d t uw s ey V dh eh m f ao r dh ah n eh k s t ae l b ah m . ae n d dh eh n ih t t er n d aw t dh ah n eh k s t ae l b ah m w aa z l ay k ah hh ow l d ih f er ah n t th ih ng . ae n d s ow dh ey g eh t l eh f t b ih hh ay n d . _|0 0 1 0 2 0 2 0 2 0 0 1 0 0 2 0 2 0 0 2 0 0 2 0 0 1 0 2 0 0 0 2 0 0 1 0 0 2 0 0 0 2 0 2 0 0 2 0 0 2 0 0 1 0 2 0 0 0 2 0 0 1 0 0 2 0 0 2 0 1 0 2 0 0 2 0 1 1 0 0 0 2 0 0 2 0 0 0 2 0 2 0 2 0 0 2 0 0 0 1 0 2 0 0 0 0|1 5 1 6 2 3 3 3 2 5 5 1 3 3 2 4 2 2 5 5 3 3 1 3 7 3 1 3 2 2 3 4 6 1 1
Data\meimei_en\raw/meimei_en/processed_2.wav|meimei_en|EN|and you always think back on these songs, and you're like.|_ ae n d y uw ao l w ey z th ih ng k b ae k aa n dh iy z s ao ng z , ae n d y uh r l ay k . _|0 2 0 0 0 2 2 0 0 3 0 0 2 0 0 0 2 0 2 0 0 2 0 0 2 0 0 0 2 0 0 0 2 0 0 2 0 0 0|1 3 2 5 4 3 2 3 4 1 3 1 1 1 3 1 1
```
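Compared with the transcription list, each line now carries three more `|`-separated fields: the phoneme sequence (ARPAbet-style symbols for English, padded with `_` at both ends), one tone value per phoneme (for English these encode stress, with consonants at 0), and `word2ph`, the number of phonemes assigned to each text token, which sums to the phoneme count. A minimal consistency check on these fields, as a sketch; the dataclass field names are our own labels:

```python
from dataclasses import dataclass
from typing import List


@dataclass
class CleanedEntry:
    """One line of the cleaned/train list (field names are illustrative)."""
    wav_path: str
    speaker: str
    language: str
    text: str
    phones: List[str]
    tones: List[int]
    word2ph: List[int]


def parse_cleaned_line(line: str) -> CleanedEntry:
    # Seven '|'-separated fields: path|speaker|lang|text|phones|tones|word2ph
    wav_path, speaker, language, text, phones, tones, word2ph = line.rstrip("\n").split("|")
    entry = CleanedEntry(
        wav_path=wav_path,
        speaker=speaker,
        language=language,
        text=text,
        phones=phones.split(" "),
        tones=[int(t) for t in tones.split(" ")],
        word2ph=[int(n) for n in word2ph.split(" ")],
    )
    # One tone per phoneme, and word2ph must account for every phoneme
    if len(entry.phones) != len(entry.tones):
        raise ValueError("phone/tone count mismatch")
    if sum(entry.word2ph) != len(entry.phones):
        raise ValueError("sum(word2ph) does not match phone count")
    return entry
```

The processed_3 and processed_6 lines above both pass: they have 37 and 17 phonemes respectively, with matching tone counts and `word2ph` sums.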

At this point, the English dataset is ready.

Bert-vits2 English model training

Next, run the training script:

```bash
python3 train_ms.py
```

This trains the English model locally.
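Each character folder has its own config.json. In VITS-derived training scripts, checkpoints such as G_50.pth are typically written every `eval_interval` steps, so lowering that value is one way to get an early checkpoint for a quick listen. A minimal sketch for editing the config, assuming a top-level `"train"` object with keys like `eval_interval` and `batch_size`; verify the key names against your own config.json, since they can differ between Bert-VITS2 versions:

```python
import json
from pathlib import Path
from typing import Union


def set_train_options(config_path: Union[str, Path], **train_options) -> dict:
    """Update keys under the "train" section of a VITS-style config.json in place.

    Assumption: the file has a top-level "train" object; other sections
    are left untouched.
    """
    path = Path(config_path)
    config = json.loads(path.read_text(encoding="utf-8"))
    train_section = config.setdefault("train", {})
    train_section.update(train_options)
    # ensure_ascii=False keeps any non-ASCII speaker names readable
    path.write_text(json.dumps(config, ensure_ascii=False, indent=2), encoding="utf-8")
    return config
```

For example, `set_train_options("Data/meimei_en/config.json", eval_interval=50)` would be run before starting train_ms.py.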

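Note that Chinese and English models generally have to be trained separately; in other words, the English and Chinese training sets cannot be mixed into one training run.

Chinese and English differ substantially in linguistic structure, vocabulary, and grammar. Chinese is written with characters as its basic units, while English uses letters, and Chinese sentence structure and word order also differ from English. Chinese and English models therefore have to learn different language features and patterns.

The text front ends differ too. Chinese text is converted into pinyin-based phonemes with tone labels, whereas English text is converted into ARPAbet-style phonemes whose stress is stored in the tone field (as in the training set above), and each language's text features come from a language-specific BERT model. In a mixed training run, the encoding and processing of both languages would need to be unified; otherwise training becomes inconsistent and error-prone.

So Bert-vits2's so-called mix mode applies only to inference, not training. That said, although the datasets cannot be mixed for training, you can still train the Chinese and English models concurrently in separate processes.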

Bert-vits2 mixed Chinese-English inference

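Once the English model has finished training ("finished" here usually just means running about 50 steps to hear how it sounds), put the Chinese model into the Data directory as well. For training the Chinese model, see the earlier post "Train locally, ready on the spot: cloning Taylor Swift's Chinese voice from 30 seconds of audio with Bert-VITS2 V2.0.2" (本地訓練,立等可取,30秒音頻素材復刻霉霉講中文音色基於Bert-VITS2V2.0.2); for reasons of space it is not repeated here.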

The resulting directory structure:

```text
E:\work\Bert-VITS2-v21_demo\Data>tree /f
Folder PATH listing for volume myssd
Volume serial number is 7CE3-15AE
E:.
├───meimei_cn
│   │   config.json
│   │   config.yml
│   │
│   ├───filelists
│   │       cleaned.list
│   │       short_character_anno.list
│   │       train.list
│   │       val.list
│   │
│   ├───models
│   │       G_50.pth
│   │
│   └───raw
│       └───meimei
│               meimei_0.wav
│               meimei_1.wav
│               meimei_2.wav
│               meimei_3.wav
│               meimei_4.wav
│               meimei_5.wav
│               meimei_6.wav
│               meimei_7.wav
│               meimei_8.wav
│               meimei_9.wav
│               processed_0.bert.pt
│               processed_0.emo.npy
│               processed_0.spec.pt
│               processed_0.wav
│               processed_1.bert.pt
│               processed_1.emo.npy
│               processed_1.spec.pt
│               processed_1.wav
│               processed_2.bert.pt
│               processed_2.emo.npy
│               processed_2.spec.pt
│               processed_2.wav
│               processed_3.bert.pt
│               processed_3.emo.npy
│               processed_3.spec.pt
│               processed_3.wav
│               processed_4.bert.pt
│               processed_4.emo.npy
│               processed_4.spec.pt
│               processed_4.wav
│               processed_5.bert.pt
│               processed_5.emo.npy
│               processed_5.spec.pt
│               processed_5.wav
│               processed_6.bert.pt
│               processed_6.emo.npy
│               processed_6.spec.pt
│               processed_6.wav
│               processed_7.bert.pt
│               processed_7.emo.npy
│               processed_7.spec.pt
│               processed_7.wav
│               processed_8.bert.pt
│               processed_8.emo.npy
│               processed_8.spec.pt
│               processed_8.wav
│               processed_9.bert.pt
│               processed_9.emo.npy
│               processed_9.spec.pt
│               processed_9.wav
│
└───meimei_en
    │   config.json
    │   config.yml
    │
    ├───filelists
    │       cleaned.list
    │       short_character_anno.list
    │       train.list
    │       val.list
    │
    ├───models
    │   │   DUR_0.pth
    │   │   DUR_50.pth
    │   │   D_0.pth
    │   │   D_50.pth
    │   │   events.out.tfevents.1701484053.ly.16484.0
    │   │   events.out.tfevents.1701620324.ly.10636.0
    │   │   G_0.pth
    │   │   G_50.pth
    │   │   train.log
    │   │
    │   └───eval
    │           events.out.tfevents.1701484053.ly.16484.1
    │           events.out.tfevents.1701620324.ly.10636.1
    │
    └───raw
        └───meimei_en
                meimei_en_0.wav
                meimei_en_1.wav
                meimei_en_2.wav
                meimei_en_3.wav
                meimei_en_4.wav
                meimei_en_5.wav
                meimei_en_6.wav
                meimei_en_7.wav
                meimei_en_8.wav
                meimei_en_9.wav
                processed_0.bert.pt
                processed_0.emo.npy
                processed_0.wav
                processed_1.bert.pt
                processed_1.emo.npy
                processed_1.spec.pt
                processed_1.wav
                processed_2.bert.pt
                processed_2.emo.npy
                processed_2.spec.pt
                processed_2.wav
                processed_3.bert.pt
                processed_3.emo.npy
                processed_3.spec.pt
                processed_3.wav
                processed_4.bert.pt
                processed_4.emo.npy
                processed_4.wav
                processed_5.bert.pt
                processed_5.emo.npy
                processed_5.spec.pt
                processed_5.wav
                processed_6.bert.pt
                processed_6.emo.npy
                processed_6.spec.pt
                processed_6.wav
                processed_7.bert.pt
                processed_7.emo.npy
                processed_7.wav
                processed_8.bert.pt
                processed_8.emo.npy
                processed_8.wav
                processed_9.bert.pt
                processed_9.emo.npy
                processed_9.wav
```

Here meimei_cn is the Chinese character model and meimei_en the English one; each has been trained for only 50 steps.
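Before launching the WebUI, it can be worth checking that every character folder under Data has a config.json and at least one generator checkpoint. A minimal sketch, assuming the newest G_<step>.pth in models/ is the checkpoint you want; `latest_generator` and `check_character` are hypothetical helpers, and webui.py may select checkpoints differently:

```python
import re
from pathlib import Path
from typing import Optional, Union

# Matches generator checkpoints such as G_50.pth and captures the step number
G_CKPT = re.compile(r"^G_(\d+)\.pth$")


def latest_generator(model_dir: Union[str, Path]) -> Optional[Path]:
    """Return the G_<step>.pth with the highest step in model_dir, or None."""
    best_step, best_path = -1, None
    for path in Path(model_dir).iterdir():
        match = G_CKPT.match(path.name)
        # Compare steps as integers, not strings
        if match and int(match.group(1)) > best_step:
            best_step, best_path = int(match.group(1)), path
    return best_path


def check_character(char_dir: Union[str, Path]) -> Optional[Path]:
    """Pre-flight check for one Data/<character> folder."""
    char_dir = Path(char_dir)
    if not (char_dir / "config.json").is_file():
        raise FileNotFoundError(f"missing config.json in {char_dir}")
    return latest_generator(char_dir / "models")
```

Comparing step numbers as integers matters: sorted as strings, G_50.pth would come after G_100.pth.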

Start the inference service:

```bash
python3 webui.py
```

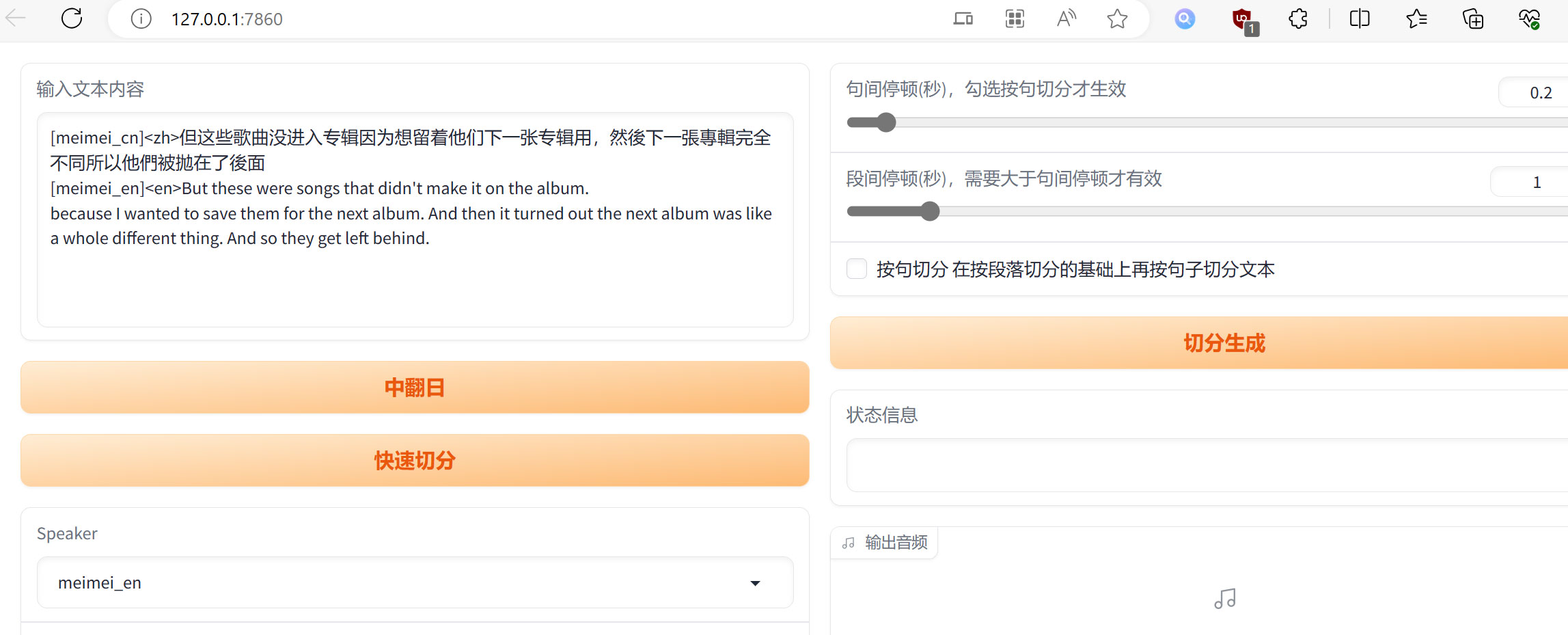

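Open http://127.0.0.1:7860/ and enter the following in the text box (the Chinese line is a translation of the English passage):

```text
[meimei_cn]<zh>但這些歌曲沒進入專輯因為想留著他們下一張專輯用,然後下一張專輯完全不同所以他們被拋在了後面
[meimei_en]<en>But these were songs that didn't make it on the album.
because I wanted to save them for the next album. And then it turned out the next album was like a whole different thing. And so they get left behind.
```

Then set the language to mix.

The [speaker] and <language> tags mark up each part of the text, so the system picks the matching Chinese or English model for each part and runs inference concurrently.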
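The tagging scheme can be illustrated with a small parser that turns the input into (speaker, language, text) segments, one per model call. This is a hypothetical sketch of the markup as used above (one `<lang>` tag per `[speaker]` tag, untagged lines continuing the previous segment), not the WebUI's own parsing code:

```python
import re
from typing import List, NamedTuple


class Segment(NamedTuple):
    speaker: str
    lang: str
    text: str


# A [speaker] tag, optionally followed by a <lang> tag, opens a new segment
TAG = re.compile(r"\[([^\]]+)\]\s*(?:<([^>]+)>)?")


def parse_mix_text(text: str) -> List[Segment]:
    """Split '[speaker]<lang>text' markup into (speaker, lang, text) segments.

    Text before the first tag is ignored, and line breaks inside a segment
    are folded into single spaces.
    """
    segments: List[Segment] = []
    matches = list(TAG.finditer(text))
    for i, m in enumerate(matches):
        # A segment runs until the next tag or the end of the input
        end = matches[i + 1].start() if i + 1 < len(matches) else len(text)
        body = " ".join(text[m.end():end].split())
        if body:
            segments.append(Segment(m.group(1), (m.group(2) or "").upper(), body))
    return segments
```

Applied to the example input, this yields one `meimei_cn`/`ZH` segment and one `meimei_en`/`EN` segment, the latter covering both English lines.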

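If you have just one English model and one Chinese model locally, you can also choose auto mode for automatic mixed Chinese-English inference:

```text
但這些歌曲沒進入專輯因為想留著他們下一張專輯用,然後下一張專輯完全不同所以他們被拋在了後面
But these were songs that didn't make it on the album.
because I wanted to save them for the next album. And then it turned out the next album was like a whole different thing. And so they get left behind.
```

The system automatically detects the language of each part of the text and picks the corresponding model for inference.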
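The WebUI's own detection logic is not shown here. As a rough illustration of the idea, a minimal sketch that splits text into Chinese and English runs by character script (CJK ideographs versus Latin letters); this heuristic is our assumption, and it does not handle Japanese or other languages that share these scripts:

```python
import re
from typing import List, Optional, Tuple

# CJK Unified Ideographs (basic block) count as Chinese; ASCII letters as English
CJK = re.compile(r"[\u4e00-\u9fff]")
LATIN = re.compile(r"[A-Za-z]")


def split_by_script(text: str) -> List[Tuple[str, str]]:
    """Split text into (lang, chunk) runs using a character-script heuristic.

    Characters that are neither CJK nor Latin (spaces, digits, punctuation)
    stay with the run they appear in.
    """
    runs: List[Tuple[str, str]] = []
    current_lang: Optional[str] = None
    buf: List[str] = []
    for ch in text:
        if CJK.match(ch):
            lang: Optional[str] = "zh"
        elif LATIN.match(ch):
            lang = "en"
        else:
            lang = current_lang  # neutral character: keep the current run
        # A script change closes the previous run
        if lang is not None and current_lang is not None and lang != current_lang:
            runs.append((current_lang, "".join(buf).strip()))
            buf = []
        if lang is not None:
            current_lang = lang
        buf.append(ch)
    if buf and current_lang is not None:
        runs.append((current_lang, "".join(buf).strip()))
    return [(lang, chunk) for lang, chunk in runs if chunk]
```

A production implementation might use a language-identification library instead of character ranges, which matters whenever languages share a script.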

Conclusion

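Tasks such as turning technical articles into voiceovers or videos, or cross-lingual information retrieval, involve converting and aligning between Chinese and English. Bert-vits2's mixed Chinese-English inference lets a single voiceover switch between the two languages, which makes these tasks easier to handle and gives more accurate, more coherent results. The all-in-one package for Bert-vits2 mixed Chinese-English inference is available here: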

https://pan.baidu.com/s/1iaC7f1GPXevDrDMCRCs8uQ?pwd=v3uc
