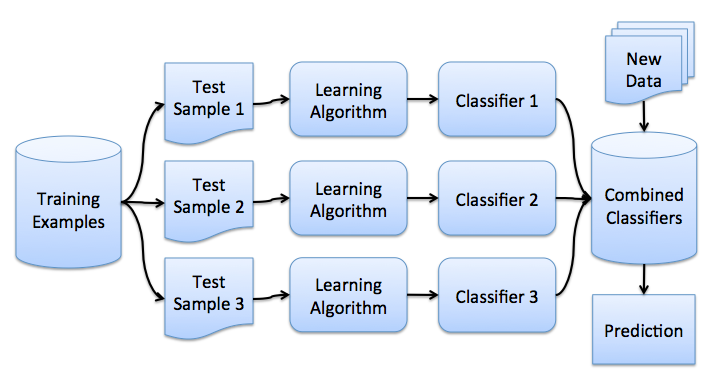

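The same framework is trained several times, independently, on the same dataset. The individual models may share hyperparameters or use different ones. At the end, the independently trained models make a joint decision on the test set.

The example code below combines two models by concatenating their features and training a new classifier on top: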

```python
import torch
import torch.nn as nn
import torch.nn.functional as F
from torchvision import models


class MyEnsemble(nn.Module):
    def __init__(self, modelA, modelB, nb_classes=10):
        super().__init__()
        self.modelA = modelA
        self.modelB = modelB
        # Remove last linear layer
        self.modelA.fc = nn.Identity()
        self.modelB.fc = nn.Identity()
        # Create new classifier: resnet50 yields 2048 features, resnet18 yields 512
        self.classifier = nn.Linear(2048 + 512, nb_classes)

    def forward(self, x):
        # clone to make sure x is not changed by inplace methods
        x1 = self.modelA(x.clone())
        x1 = x1.view(x1.size(0), -1)
        x2 = self.modelB(x)
        x2 = x2.view(x2.size(0), -1)
        x = torch.cat((x1, x2), dim=1)
        x = self.classifier(F.relu(x))
        return x


# Train your separate models
# ...
# We use pretrained torchvision models here
# (newer torchvision releases prefer the `weights=` argument over `pretrained=True`)
modelA = models.resnet50(pretrained=True)
modelB = models.resnet18(pretrained=True)

# Freeze these models so that only the new classifier is trained
for param in modelA.parameters():
    param.requires_grad_(False)
for param in modelB.parameters():
    param.requires_grad_(False)

# Create ensemble model
model = MyEnsemble(modelA, modelB)
x = torch.randn(1, 3, 224, 224)
output = model(x)
```
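The joint decision on the test set described in the text can also be made without a new classifier, by averaging each model's predicted class probabilities (soft voting). The following is a minimal, framework-independent sketch under stated assumptions: `soft_vote` is a hypothetical helper, and the probability values are made up for illustration. In practice each probability list would come from a trained model, for example via a softmax over its output.

```python
from typing import List, Sequence


def soft_vote(prob_sets: Sequence[Sequence[Sequence[float]]]) -> List[int]:
    """Average class probabilities across models; return the argmax per sample.

    prob_sets[m][i][c] is the probability that model m assigns to class c
    for test sample i. (Hypothetical helper, not from the original post.)
    """
    n_models = len(prob_sets)
    n_samples = len(prob_sets[0])
    predictions = []
    for i in range(n_samples):
        n_classes = len(prob_sets[0][i])
        # Mean probability of each class over all models
        mean_probs = [
            sum(prob_sets[m][i][c] for m in range(n_models)) / n_models
            for c in range(n_classes)
        ]
        # The ensemble predicts the class with the highest mean probability
        predictions.append(max(range(n_classes), key=mean_probs.__getitem__))
    return predictions


# Made-up outputs of three independently trained models on two test samples
model_a = [[0.6, 0.4], [0.3, 0.7]]
model_b = [[0.4, 0.6], [0.2, 0.8]]
model_c = [[0.7, 0.3], [0.6, 0.4]]

ensemble_pred = soft_vote([model_a, model_b, model_c])
print(ensemble_pred)
```

Here `model_b` alone would pick class 1 for the first sample, but the averaged probabilities favor class 0, so the ensemble overrides it. Unlike the feature-concatenation approach, soft voting needs no additional training step.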
