學習參考來自

- 使用CNN在MNIST上實現簡單的攻擊樣本

- https://github.com/wmn7/ML_Practice/blob/master/2019_06_03/CNN_MNIST%E5%8F%AF%E8%A7%86%E5%8C%96.ipynb

文章目錄

- 在 MNIST 上實現簡單的攻擊樣本

- 1 訓練一個數字分類網絡

- 2 控制輸出的概率, 看輸入是什么

- 3 讓正確的圖片分類錯誤

在 MNIST 上實現簡單的攻擊樣本

要看某個filter在檢測什么,我們讓通過其后的輸出激活值較大。

想要一些攻擊樣本,有一個簡單的想法就是讓最后結果中是某一類的概率值變大,接著進行反向傳播(固定住網絡的參數),去修改input

原理圖:

1 訓練一個數字分類網絡

模型基礎數據、超參數的配置

import time

import csv, osimport PIL.Image

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

import cv2

from cv2 import resizeimport torch

import torch.nn as nn

import torch.nn.functional as F

from torch.utils.data.sampler import SubsetRandomSampler

from torch.autograd import Variableimport copy

import torchvision

import torchvision.transforms as transforms

os.environ["CUDA_VISIBLE_DEVICES"] = "1"# --------------------

# Device configuration

# --------------------

device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')# ----------------

# Hyper-parameters

# ----------------

num_classes = 10

num_epochs = 3

batch_size = 100

validation_split = 0.05 # 每次訓練集中選出10%作為驗證集

learning_rate = 0.001# -------------

# MNIST dataset

# -------------

train_dataset = torchvision.datasets.MNIST(root='./',train=True,transform=transforms.ToTensor(),download=True)test_dataset = torchvision.datasets.MNIST(root='./',train=False,transform=transforms.ToTensor())

# -----------

# Data loader

# -----------

test_len = len(test_dataset) # 計算測試集的個數 10000

test_loader = torch.utils.data.DataLoader(dataset=test_dataset,batch_size=batch_size,shuffle=False)for (inputs, labels) in test_loader:print(inputs.size()) # [100, 1, 28, 28]print(labels.size()) # [100]break# ------------------

# 下面切分validation

# ------------------

dataset_len = len(train_dataset) # 60000

indices = list(range(dataset_len))

# Randomly splitting indices:

val_len = int(np.floor(validation_split * dataset_len)) # validation的長度

validation_idx = np.random.choice(indices, size=val_len, replace=False) # validatiuon的index

train_idx = list(set(indices) - set(validation_idx)) # train的index

## Defining the samplers for each phase based on the random indices:

train_sampler = SubsetRandomSampler(train_idx)

validation_sampler = SubsetRandomSampler(validation_idx)

train_loader = torch.utils.data.DataLoader(dataset=train_dataset,sampler=train_sampler,batch_size=batch_size)

validation_loader = torch.utils.data.DataLoader(train_dataset,sampler=validation_sampler,batch_size=batch_size)

train_dataloaders = {"train": train_loader, "val": validation_loader} # 使用字典的方式進行保存

train_datalengths = {"train": len(train_idx), "val": val_len} # 保存train和validation的長度

搭建簡單的神經網絡

# -------------------------------------------------------

# Convolutional neural network (two convolutional layers)

# -------------------------------------------------------

class ConvNet(nn.Module):def __init__(self, num_classes=10):super(ConvNet, self).__init__()self.layer1 = nn.Sequential(nn.Conv2d(in_channels=1, out_channels=16, kernel_size=5, stride=1, padding=2),nn.BatchNorm2d(16),nn.ReLU(),nn.MaxPool2d(kernel_size=2, stride=2))self.layer2 = nn.Sequential(nn.Conv2d(in_channels=16, out_channels=32, kernel_size=5, stride=1, padding=2),nn.BatchNorm2d(32),nn.ReLU(),nn.MaxPool2d(kernel_size=2, stride=2))self.fc = nn.Linear(7*7*32, num_classes)def forward(self, x):out = self.layer1(x)out = self.layer2(out)out = out.reshape(out.size(0), -1)out = self.fc(out)return out

繪制損失和精度變化曲線

# -----------

# draw losses

# -----------

def draw_loss_acc(train_list, validation_list, mode="loss"):plt.style.use("seaborn")# set interdata_len = len(train_list)x_ticks = np.arange(1, data_len + 1)plt.xticks(x_ticks)if mode == "Loss":plt.plot(x_ticks, train_list, label="Train Loss")plt.plot(x_ticks, validation_list, label="Validation Loss")plt.xlabel("Epoch")plt.ylabel("Loss")plt.legend()plt.savefig("Epoch_loss.jpg")elif mode == "Accuracy":plt.plot(x_ticks, train_list, label="Train AccuracyAccuracy")plt.plot(x_ticks, validation_list, label="Validation Accuracy")plt.xlabel("Epoch")plt.ylabel("Accuracy")plt.legend()plt.savefig("Epoch_Accuracy.jpg")

定義訓練模型的函數

# ---------------

# Train the model

# ---------------

def train_model(model, criterion, optimizer, dataloaders, train_datalengths, scheduler=None, num_epochs=2):"""傳入的參數分別是:1. model:定義的模型結構2. criterion:損失函數3. optimizer:優化器4. dataloaders:training dataset5. train_datalengths:train set和validation set的大小, 為了計算準確率6. scheduler:lr的更新策略7. num_epochs:訓練的epochs"""since = time.time()# 保存最好一次的模型參數和最好的準確率best_model_wts = copy.deepcopy(model.state_dict())best_acc = 0.0train_loss = [] # 記錄每一個epoch后的train的losstrain_acc = []validation_loss = []validation_acc = []for epoch in range(num_epochs):print('Epoch [{}/{}]'.format(epoch + 1, num_epochs))print('-' * 10)# Each epoch has a training and validation phasefor phase in ['train', 'val']:if phase == 'train':if scheduler != None:scheduler.step()model.train() # Set model to training modeelse:model.eval() # Set model to evaluate moderunning_loss = 0.0 # 這個是一個epoch積累一次running_corrects = 0 # 這個是一個epoch積累一次# Iterate over data.total_step = len(dataloaders[phase])for i, (inputs, labels) in enumerate(dataloaders[phase]):# inputs = inputs.reshape(-1, 28*28).to(device)inputs = inputs.to(device)labels = labels.to(device)# zero the parameter gradientsoptimizer.zero_grad()# forward# track history if only in trainwith torch.set_grad_enabled(phase == 'train'):outputs = model(inputs)_, preds = torch.max(outputs, 1) # 使用output(概率)得到預測loss = criterion(outputs, labels) # 使用output計算誤差# backward + optimize only if in training phaseif phase == 'train':loss.backward()optimizer.step()# statisticsrunning_loss += loss.item() * inputs.size(0)running_corrects += torch.sum(preds == labels.data)if (i + 1) % 100 == 0:# 這里相當于是i*batch_size的樣本個數打印一次, i*100iteration_loss = loss.item() / inputs.size(0)iteration_acc = 100 * torch.sum(preds == labels.data).item() / len(preds)print('Mode {}, Epoch [{}/{}], Step [{}/{}], Accuracy: {}, Loss: {:.4f}'.format(phase, epoch + 1,num_epochs, i + 1,total_step,iteration_acc,iteration_loss))epoch_loss = running_loss / train_datalengths[phase]epoch_acc = running_corrects.double() / train_datalengths[phase]if phase == 'train':train_loss.append(epoch_loss)train_acc.append(epoch_acc)else:validation_loss.append(epoch_loss)validation_acc.append(epoch_acc)print('*' * 10)print('Mode: [{}], Loss: {:.4f}, Acc: {:.4f}'.format(phase, epoch_loss, epoch_acc))print('*' * 10)# deep copy the modelif phase == 'val' and epoch_acc > best_acc:best_acc = epoch_accbest_model_wts = copy.deepcopy(model.state_dict())print()time_elapsed = time.time() - sinceprint('*' * 10)print('Training complete in {:.0f}m {:.0f}s'.format(time_elapsed // 60, time_elapsed % 60))print('Best val Acc: {:4f}'.format(best_acc))print('*' * 10)# load best model weightsfinal_model = copy.deepcopy(model) # 最后得到的modelmodel.load_state_dict(best_model_wts) # 在驗證集上最好的modeldraw_loss_acc(train_list=train_loss, validation_list=validation_loss, mode='Loss') # 繪制Loss圖像draw_loss_acc(train_list=train_acc, validation_list=validation_acc, mode='Accuracy') # 繪制準確率圖像return (model, final_model)

開始訓練,并保存模型

if __name__ == "__main__":# 模型初始化model = ConvNet(num_classes=num_classes).to(device)# 打印模型結構#print(model)"""ConvNet((layer1): Sequential((0): Conv2d(1, 16, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))(1): BatchNorm2d(16, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(2): ReLU()(3): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False))(layer2): Sequential((0): Conv2d(16, 32, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))(1): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(2): ReLU()(3): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False))(fc): Linear(in_features=1568, out_features=10, bias=True))"""# -------------------# Loss and optimizer# ------------------criterion = nn.CrossEntropyLoss()optimizer = torch.optim.Adam(model.parameters(), lr=learning_rate)# -------------# 進行模型的訓練# -------------(best_model, final_model) = train_model(model=model, criterion=criterion, optimizer=optimizer,dataloaders=train_dataloaders, train_datalengths=train_datalengths,num_epochs=num_epochs)"""Epoch [1/3]----------Mode train, Epoch [1/3], Step [100/570], Accuracy: 96.0, Loss: 0.0012Mode train, Epoch [1/3], Step [200/570], Accuracy: 96.0, Loss: 0.0011Mode train, Epoch [1/3], Step [300/570], Accuracy: 100.0, Loss: 0.0004Mode train, Epoch [1/3], Step [400/570], Accuracy: 100.0, Loss: 0.0003Mode train, Epoch [1/3], Step [500/570], Accuracy: 96.0, Loss: 0.0010**********Mode: [train], Loss: 0.1472, Acc: 0.9579********************Mode: [val], Loss: 0.0653, Acc: 0.9810**********Epoch [2/3]----------Mode train, Epoch [2/3], Step [100/570], Accuracy: 99.0, Loss: 0.0005Mode train, Epoch [2/3], Step [200/570], Accuracy: 98.0, Loss: 0.0003Mode train, Epoch [2/3], Step [300/570], Accuracy: 98.0, Loss: 0.0003Mode train, Epoch [2/3], Step [400/570], Accuracy: 97.0, Loss: 0.0005Mode train, Epoch [2/3], Step [500/570], Accuracy: 98.0, Loss: 0.0004**********Mode: [train], Loss: 0.0470, Acc: 0.9853********************Mode: [val], Loss: 0.0411, Acc: 0.9890**********Epoch [3/3]----------Mode train, Epoch [3/3], Step [100/570], Accuracy: 99.0, Loss: 0.0006Mode train, Epoch [3/3], Step [200/570], Accuracy: 99.0, Loss: 0.0009Mode train, Epoch [3/3], Step [300/570], Accuracy: 98.0, Loss: 0.0004Mode train, Epoch [3/3], Step [400/570], Accuracy: 99.0, Loss: 0.0003Mode train, Epoch [3/3], Step [500/570], Accuracy: 100.0, Loss: 0.0002**********Mode: [train], Loss: 0.0348, Acc: 0.9890********************Mode: [val], Loss: 0.0432, Acc: 0.9867********************Training complete in 0m 32sBest val Acc: 0.989000**********"""torch.save(model, 'CNN_MNIST.pkl')

訓練完的 loss 和 acc 曲線

載入模型進行簡單的測試,以下面這張 7 為例子

model = torch.load('CNN_MNIST.pkl')

# print(test_dataset.data[0].shape) # torch.Size([28, 28])

# print(test_dataset.targets[0]) # tensor(7)

#

unload = transforms.ToPILImage()

img = unload(test_dataset.data[0])

img.save("test_data_0.jpg")# 帶入模型進行預測

inputdata = test_dataset.data[0].view(1,1,28,28).float()/255

inputdata = inputdata.to(device)

outputs = model(inputdata)

print(outputs)

"""

tensor([[ -7.4075, -3.4618, -1.5322, 1.1591, -10.6026, -5.5818, -17.4020,14.6429, -4.5378, 1.0360]], device='cuda:0',grad_fn=<AddmmBackward0>)

"""

print(torch.max(outputs, 1))

"""

torch.return_types.max(

values=tensor([14.6429], device='cuda:0', grad_fn=<MaxBackward0>),

indices=tensor([7], device='cuda:0'))

"""

OK,預測結果正常,為 7

2 控制輸出的概率, 看輸入是什么

步驟

- 初始隨機一張圖片

- 我們希望讓分類中某個數的概率最大

- 最后看一下這個網絡認為什么樣的圖像是這個數字

# hook類的寫法

class SaveFeatures():"""注冊hook和移除hook"""def __init__(self, module):self.hook = module.register_forward_hook(self.hook_fn)def hook_fn(self, module, input, output):# self.features = output.clone().detach().requires_grad_(True)self.features = output.clone()def close(self):self.hook.remove()

下面開始操作

# hook住模型

layer = 2

activations = SaveFeatures(list(model.children())[layer])# 超參數

lr = 0.005 # 學習率

opt_steps = 100 # 迭代次數

upscaling_factor = 10 # 放大的倍數(為了最后圖像的保存)# 保存迭代后的數字

true_nums = []

random_init, num_init = 1, 0 # the type of initial# 帶入網絡進行迭代

for true_num in range(0, 10):# 初始化隨機圖片(數據定義和優化器一定要在一起)# 定義數據sz = 28if random_init:img = np.uint8(np.random.uniform(0, 255, (1,sz, sz))) / 255img = torch.from_numpy(img[None]).float().to(device)img_var = Variable(img, requires_grad=True)if num_init:# 將7變成0,1,2,3,4,5,6,7,8,9img = test_dataset.data[0].view(1, 1, 28, 28).float() / 255img = img.to(device)img_var = Variable(img, requires_grad=True)# 定義優化器optimizer = torch.optim.Adam([img_var], lr=lr, weight_decay=1e-6)for n in range(opt_steps): # optimize pixel values for opt_steps timesoptimizer.zero_grad()model(img_var) # 正向傳播if random_init:loss = -activations.features[0, true_num] # 這里的loss確保某個值的輸出大if num_init:loss = -activations.features[0, true_num] + F.mse_loss(img_var, img) # 這里的loss確保某個值的輸出大, 并且與原圖不會相差很多loss.backward()optimizer.step()# 打印最后的img的樣子print(activations.features[0, true_num]) # tensor(23.8736, device='cuda:0', grad_fn=<SelectBackward0>)print(activations.features[0])"""tensor([ 23.8736, -32.0724, -5.7329, -18.6501, -16.1558, -18.9483, -0.3033,-29.1561, 14.9260, -13.5412], device='cuda:0',grad_fn=<SelectBackward0>)"""print('========')img = img_var.cpu().clone()img = img.squeeze(0)# 圖像的裁剪(確保像素值的范圍)img[img > 1] = 1img[img < 0] = 0true_nums.append(img)unloader = transforms.ToPILImage()img = unloader(img)img = cv2.cvtColor(np.asarray(img), cv2.COLOR_RGB2BGR)sz = int(upscaling_factor * sz) # calculate new image sizeimg = cv2.resize(img, (sz, sz), interpolation=cv2.INTER_CUBIC) # scale image upif random_init:cv2.imwrite('random_regonize{}.jpg'.format(true_num), img)if num_init:cv2.imwrite('real7_regonize{}.jpg'.format(true_num), img)"""========tensor(22.3530, device='cuda:0', grad_fn=<SelectBackward0>)tensor([-22.4025, 22.3530, 6.0451, -9.4460, -1.0577, -17.7650, -11.3686,-11.8474, -5.5310, -16.9936], device='cuda:0',grad_fn=<SelectBackward0>)========tensor(50.2202, device='cuda:0', grad_fn=<SelectBackward0>)tensor([-16.9364, -16.5120, 50.2202, -9.5287, -25.2837, -32.3480, -22.8569,-20.1231, 1.1174, -31.7244], device='cuda:0',grad_fn=<SelectBackward0>)========tensor(48.8004, device='cuda:0', grad_fn=<SelectBackward0>)tensor([-33.3715, -30.5732, -6.0252, 48.8004, -34.9467, -17.8136, -35.1371,-17.4484, 2.8954, -11.9694], device='cuda:0',grad_fn=<SelectBackward0>)========tensor(31.5068, device='cuda:0', grad_fn=<SelectBackward0>)tensor([-24.5204, -13.6857, -5.1833, -22.7889, 31.5068, -20.2855, -16.7245,-19.1719, 2.4699, -16.2246], device='cuda:0',grad_fn=<SelectBackward0>)========tensor(37.4866, device='cuda:0', grad_fn=<SelectBackward0>)tensor([-20.2235, -26.1013, -25.9511, -2.7806, -19.5546, 37.4866, -10.8689,-30.3888, 0.1591, -8.5250], device='cuda:0',grad_fn=<SelectBackward0>)========tensor(35.9310, device='cuda:0', grad_fn=<SelectBackward0>)tensor([ -6.1996, -31.2246, -8.3396, -21.6307, -16.9098, -9.5194, 35.9310,-33.0918, 10.2462, -28.6393], device='cuda:0',grad_fn=<SelectBackward0>)========tensor(23.6772, device='cuda:0', grad_fn=<SelectBackward0>)tensor([-21.5441, -10.3366, -4.9905, -0.9289, -6.9219, -23.5643, -23.9894,23.6772, -0.7960, -16.9556], device='cuda:0',grad_fn=<SelectBackward0>)========tensor(57.8378, device='cuda:0', grad_fn=<SelectBackward0>)tensor([-13.5191, -53.9004, -9.2996, -10.3597, -27.3806, -27.5858, -15.3235,-46.7014, 57.8378, -17.2299], device='cuda:0',grad_fn=<SelectBackward0>)========tensor(37.0334, device='cuda:0', grad_fn=<SelectBackward0>)tensor([-26.2983, -37.7131, -16.6210, -1.8686, -11.5330, -11.7843, -25.7539,-27.0036, 6.3785, 37.0334], device='cuda:0',grad_fn=<SelectBackward0>)========"""

# 移除hook

activations.close()for i in range(0,10):_ , pre = torch.max(model(true_nums[i][None].to(device)),1)print("i:{},Pre:{}".format(i,pre))"""i:0,Pre:tensor([0], device='cuda:0')i:1,Pre:tensor([1], device='cuda:0')i:2,Pre:tensor([2], device='cuda:0')i:3,Pre:tensor([3], device='cuda:0')i:4,Pre:tensor([4], device='cuda:0')i:5,Pre:tensor([5], device='cuda:0')i:6,Pre:tensor([6], device='cuda:0')i:7,Pre:tensor([7], device='cuda:0')i:8,Pre:tensor([8], device='cuda:0')i:9,Pre:tensor([9], device='cuda:0')"""

可以看到相應最高的圖片的樣子,依稀可以看見 0,1,2,3,4,5,6,7,8,9 的輪廓

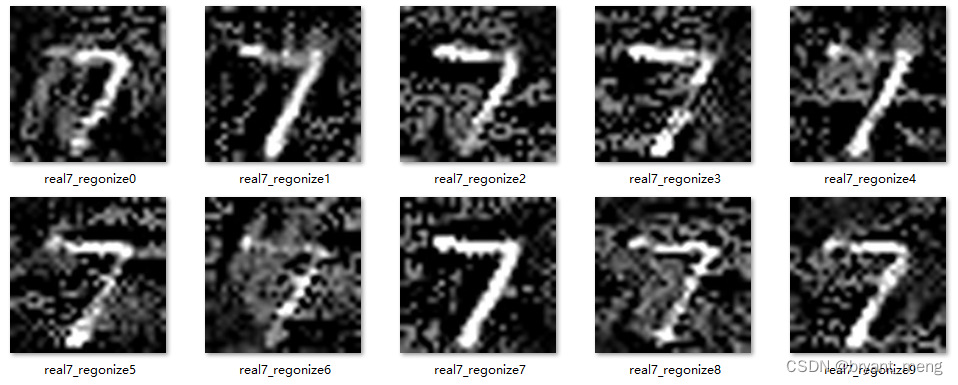

3 讓正確的圖片分類錯誤

步驟如下

- 使用特定數字的圖片,如數字7(初始化方式與前面的不一樣,是特定而不是隨機)

- 使用上面訓練好的網絡,固定網絡參數;

- 最后一層因為是10個輸出(相當于是概率), 我們 loss 設置為某個的負的概率值(利于梯度下降)

- 梯度下降, 使負的概率下降,即相對應的概率值上升,我們最終修改的是初始的圖片

- 這樣使得網絡認為這張圖片識別的數字的概率增加

簡單描述,輸入圖片數字7,固定網絡參數,讓其他數字的概率不斷變大,改變輸入圖片,最終達到迷惑網絡的目的

只要將第二節中的代碼 random_init, num_init = 1, 0 # the type of initial 改為 random_init, num_init = 0, 1 # the type of initial 即可

成功用 7 迷惑了所有類別

注意損失函數中還額外引入了 F.mse_loss(img_var, img) ,確保某個數字類別的概率輸出增大, 并且與原圖數字 7 像素上不會相差很多