Install containerd

Every node in the cluster needs a container runtime, the software responsible for running containers. This guide uses containerd.

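1. Enable IPv4 packet forwarding

# Set the required sysctl parameter; the setting persists across reboots
cat <<EOF | sudo tee /etc/sysctl.d/k8s.conf
net.ipv4.ip_forward = 1
EOF

# Apply the sysctl parameters without rebooting
sudo sysctl --system

Then run sysctl net.ipv4.ip_forward to verify that the setting took effect.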
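The verification step can also be scripted, for example as part of a node provisioning check. The sketch below is a hypothetical helper (the name ip_forward_enabled does not come from any tool). It assumes a Linux host, where the kernel exposes the live value at /proc/sys/net/ipv4/ip_forward.

```shell
#!/bin/sh
# Hypothetical helper: succeed if IPv4 forwarding is on.
# The optional argument overrides the file to read (handy for testing);
# by default the live kernel value under /proc is used.
ip_forward_enabled() {
    file="${1:-/proc/sys/net/ipv4/ip_forward}"
    [ -r "$file" ] || return 2      # file missing or unreadable
    [ "$(cat "$file")" = "1" ]      # the kernel reports 1 when forwarding is enabled
}
```

A typical call would be: ip_forward_enabled && echo "forwarding is on" || echo "forwarding is off"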

2. Install the containerd runtime

sudo apt-get update && sudo apt-get install -y containerd

3. Create the default configuration file

sudo mkdir -p /etc/containerd
containerd config default | sudo tee /etc/containerd/config.toml

4. Configure the systemd cgroup driver

In /etc/containerd/config.toml, find the following section and set SystemdCgroup to true:

[plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc]
  ...
  [plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc.options]
    SystemdCgroup = true

5. Restart containerd and enable it at boot

sudo systemctl restart containerd
sudo systemctl enable containerd

Install kubeadm, kubelet, kubectl

1. Install the required dependencies

# apt-transport-https may be a dummy package; if so, you can skip installing it
sudo apt-get install -y apt-transport-https ca-certificates curl

2. Download the public signing key for the Kubernetes package repositories

# If the `/etc/apt/keyrings` directory does not exist, create it before running curl by uncommenting the next line.
# sudo mkdir -p -m 755 /etc/apt/keyrings
curl -fsSL https://pkgs.k8s.io/core:/stable:/v1.33/deb/Release.key | sudo gpg --dearmor -o /etc/apt/keyrings/kubernetes-apt-keyring.gpg
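The minor version (v1.33 here) is part of the signing key URL and also of the apt repository URL, and the two should match. One way to keep them from drifting apart is to derive both from a single value. This is only a sketch: the function names are made up for illustration, and the URL layout is copied from the commands in this article.

```shell
#!/bin/sh
# Hypothetical helpers that build the pkgs.k8s.io URLs from one minor version.
# $1 in each function: Kubernetes minor version, e.g. v1.33
k8s_repo_url() {
    printf 'https://pkgs.k8s.io/core:/stable:/%s/deb/\n' "$1"
}

k8s_key_url() {
    # The signing key sits next to the repository as Release.key
    printf '%sRelease.key\n' "$(k8s_repo_url "$1")"
}

k8s_apt_source_line() {
    # The line written to /etc/apt/sources.list.d/kubernetes.list
    printf 'deb [signed-by=/etc/apt/keyrings/kubernetes-apt-keyring.gpg] %s /\n' "$(k8s_repo_url "$1")"
}
```

For example, the key download becomes: curl -fsSL "$(k8s_key_url v1.33)" | sudo gpg --dearmor -o /etc/apt/keyrings/kubernetes-apt-keyring.gpg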

3. Add the Kubernetes apt repository

# This overwrites any existing configuration in /etc/apt/sources.list.d/kubernetes.list.
echo 'deb [signed-by=/etc/apt/keyrings/kubernetes-apt-keyring.gpg] https://pkgs.k8s.io/core:/stable:/v1.33/deb/ /' | sudo tee /etc/apt/sources.list.d/kubernetes.list

4. Update the apt package index, install kubelet, kubeadm, and kubectl, and pin their versions:

sudo apt-get update
sudo apt-get install -y kubelet kubeadm kubectl
sudo apt-mark hold kubelet kubeadm kubectl

# Enable kubelet immediately
sudo systemctl enable --now kubelet

Initialize the control plane on the master node

The following steps only need to be run on the master node.

1. Initialize

sudo kubeadm init \
  --apiserver-advertise-address=10.150.1.90 \
  --pod-network-cidr=10.244.0.0/16

Pay attention to the --pod-network-cidr parameter. It specifies the IP address range available to the Pod network, and the right value depends on the network plugin you install. The 10.244.0.0/16 used here matches the Network value in Flannel's net-conf.json (see the appendix).

When the command succeeds, you get output similar to the following:

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

  mkdir -p $HOME/.kube
  sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
  sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a Pod network to the cluster.
Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
  /docs/concepts/cluster-administration/addons/

You can now join any number of machines by running the following on each node
as root:

  kubeadm join <control-plane-host>:<control-plane-port> --token <token> --discovery-token-ca-cert-hash sha256:<hash>

Make a note of the final kubeadm join line; it is the command worker nodes use to join the cluster.

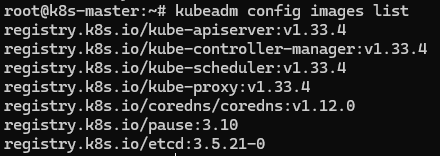

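During kubeadm init, a set of images has to be downloaded from the official registry. You can list them with kubeadm config images list.

Depending on your network, you may well be unable to pull these images. You can look for a mirror and point kubeadm at it with the --image-repository parameter, but I could not find a suitable one. Instead, I pulled the official images to a local machine through a proxy, retagged them, and pushed them to my private Nexus repository. For example, registry.k8s.io/kube-apiserver:v1.33.4 is retagged as walli.nexus.repo/kube-apiserver:v1.33.4. The init command then becomes:

sudo kubeadm init \
  --image-repository=walli.nexus.repo \
  --apiserver-advertise-address=10.150.1.90 \
  --pod-network-cidr=10.244.0.0/16

2. Configure kubectl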

As a non-root user:

mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config

As root:

export KUBECONFIG=/etc/kubernetes/admin.conf

Once kubeadm init has finished, run kubectl get pods -n kube-system to see that some pods are already up. The coredns pods will not be running yet, because no network plugin is installed; they only start properly once the network plugin is in place.
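To spot pods that are not up yet, such as coredns before the network plugin is installed, the kubectl output can be filtered. This is a minimal sketch with a hypothetical function name. It assumes the default column layout of kubectl get pods for a single namespace (NAME, READY, STATUS, RESTARTS, AGE).

```shell
#!/bin/sh
# Hypothetical filter: read `kubectl get pods` output on stdin and print
# the name and status of every pod whose STATUS column is not "Running".
not_running_pods() {
    # NR > 1 skips the header row; $3 is the STATUS column
    awk 'NR > 1 && $3 != "Running" { print $1, $3 }'
}
```

It would be used as: kubectl get pods -n kube-system | not_running_pods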

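Install a Pod network plugin (CNI)

Kubernetes supports many network plugins; see "Installing Addons" in the Kubernetes documentation for the list.

This article uses Flannel, one of the simplest options:

kubectl apply -f https://github.com/flannel-io/flannel/releases/latest/download/kube-flannel.yml

Note that this yml also references several images. I pulled them through a proxy as well and deployed them under private tags. For the resulting yml, see the appendix "kube-flannel.yml contents".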
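The pull, retag, and push routine used for both the control-plane images and the Flannel images can be sketched as follows. The function only prints the commands, so they can be reviewed before anything runs. Two assumptions are baked in: Docker is the tool on the machine that can reach the upstream registries (the article does not say which tool was used), and the private name is the private registry host plus the last path component of the upstream image, as in the kube-apiserver example. The function name is made up for illustration.

```shell
#!/bin/sh
# Hypothetical helper: read upstream image references on stdin (one per line)
# and print the docker commands that mirror each one into a private registry.
# $1: private registry host, e.g. walli.nexus.repo
mirror_commands() {
    registry="$1"
    while IFS= read -r image; do
        [ -n "$image" ] || continue           # skip blank lines
        target="$registry/${image##*/}"       # keep only name:tag after the last slash
        printf 'docker pull %s\n' "$image"
        printf 'docker tag %s %s\n' "$image" "$target"
        printf 'docker push %s\n' "$target"
    done
}
```

For the control-plane images this could be driven by kubeadm config images list | mirror_commands walli.nexus.repo. It is worth comparing the printed targets with the output of kubeadm config images list --image-repository=walli.nexus.repo, which shows the names kubeadm will actually request.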

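In addition, Flannel connects Pods through a bridge, and the related operating system settings may not be in place, so check the following:

- Run lsmod | grep bridge to check whether the module is loaded. No output means it is not; load it with:

sudo modprobe bridge
sudo modprobe br_netfilter

- Configure sysctl: edit /etc/sysctl.d/k8s.conf and add the following settings:

net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1

Then run sudo sysctl --system to reload the settings. Note that this bridge setup must be done on the master node and on every worker node.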
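Modules loaded with modprobe do not survive a reboot. On systemd-based distributions, listing them in a file under /etc/modules-load.d/ loads them at boot. The sketch below uses a hypothetical function name, and the file name k8s.conf in the usage line is an assumption.

```shell
#!/bin/sh
# Hypothetical helper: print the contents for a modules-load.d file,
# one kernel module name per line.
bridge_modules_conf() {
    printf '%s\n' bridge br_netfilter
}
```

To persist the modules: bridge_modules_conf | sudo tee /etc/modules-load.d/k8s.conf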

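If an earlier attempt to install the network plugin failed, remove it with kubectl delete -f kube-flannel.yml before installing again.

After the Pod network plugin is installed, kubectl get pods -n kube-system shows coredns running normally as well.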

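Join worker nodes to the cluster

On each worker node, run the join command printed by kubeadm init:

sudo kubeadm join 10.150.1.90:6443 --token <your-token> --discovery-token-ca-cert-hash sha256:<your-hash>

If you have lost this command, run kubeadm token create --print-join-command on the master node to generate a new one. The token is valid for 24 hours; if another node needs to join later, generate a new command the same way.

After the node has joined, run kubectl get node to see the nodes in the cluster.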

Appendix

kube-flannel.yml contents

apiVersion: v1
kind: Namespace
metadata:
  labels:
    k8s-app: flannel
    pod-security.kubernetes.io/enforce: privileged
  name: kube-flannel
---
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: flannel
  name: flannel
  namespace: kube-flannel
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    k8s-app: flannel
  name: flannel
rules:
- apiGroups:
  - ""
  resources:
  - pods
  verbs:
  - get
- apiGroups:
  - ""
  resources:
  - nodes
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - nodes/status
  verbs:
  - patch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    k8s-app: flannel
  name: flannel
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: flannel
subjects:
- kind: ServiceAccount
  name: flannel
  namespace: kube-flannel
---
apiVersion: v1
data:
  cni-conf.json: |
    {
      "name": "cbr0",
      "cniVersion": "0.3.1",
      "plugins": [
        {
          "type": "flannel",
          "delegate": {
            "hairpinMode": true,
            "isDefaultGateway": true
          }
        },
        {
          "type": "portmap",
          "capabilities": {
            "portMappings": true
          }
        }
      ]
    }
  net-conf.json: |
    {
      "Network": "10.244.0.0/16",
      "EnableNFTables": false,
      "Backend": {
        "Type": "vxlan"
      }
    }
kind: ConfigMap
metadata:
  labels:
    app: flannel
    k8s-app: flannel
    tier: node
  name: kube-flannel-cfg
  namespace: kube-flannel
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  labels:
    app: flannel
    k8s-app: flannel
    tier: node
  name: kube-flannel-ds
  namespace: kube-flannel
spec:
  selector:
    matchLabels:
      app: flannel
      k8s-app: flannel
  template:
    metadata:
      labels:
        app: flannel
        k8s-app: flannel
        tier: node
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: kubernetes.io/os
                operator: In
                values:
                - linux
      containers:
      - args:
        - --ip-masq
        - --kube-subnet-mgr
        command:
        - /opt/bin/flanneld
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        - name: EVENT_QUEUE_DEPTH
          value: "5000"
        - name: CONT_WHEN_CACHE_NOT_READY
          value: "false"
        image: walli.nexus.repo/flannel:v0.27.2 # changed to the private image
        name: kube-flannel
        resources:
          requests:
            cpu: 100m
            memory: 50Mi
        securityContext:
          capabilities:
            add:
            - NET_ADMIN
            - NET_RAW
          privileged: false
        volumeMounts:
        - mountPath: /run/flannel
          name: run
        - mountPath: /etc/kube-flannel/
          name: flannel-cfg
        - mountPath: /run/xtables.lock
          name: xtables-lock
      hostNetwork: true
      initContainers:
      - args:
        - -f
        - /flannel
        - /opt/cni/bin/flannel
        command:
        - cp
        image: walli.nexus.repo/flannel-cni-plugin:v1.7.1-flannel1 # changed to the private image
        name: install-cni-plugin
        volumeMounts:
        - mountPath: /opt/cni/bin
          name: cni-plugin
      - args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        command:
        - cp
        image: walli.nexus.repo/flannel:v0.27.2 # changed to the private image
        name: install-cni
        volumeMounts:
        - mountPath: /etc/cni/net.d
          name: cni
        - mountPath: /etc/kube-flannel/
          name: flannel-cfg
      priorityClassName: system-node-critical
      serviceAccountName: flannel
      tolerations:
      - effect: NoSchedule
        operator: Exists
      volumes:
      - hostPath:
          path: /run/flannel
        name: run
      - hostPath:
          path: /opt/cni/bin
        name: cni-plugin
      - hostPath:
          path: /etc/cni/net.d
        name: cni
      - configMap:
          name: kube-flannel-cfg
        name: flannel-cfg
      - hostPath:
          path: /run/xtables.lock
          type: FileOrCreate
        name: xtables-lock
