TiDB從0到1系列

- TiDB-從0到1-體系結構

- TiDB-從0到1-分布式存儲

- TiDB-從0到1-分布式事務

- TiDB-從0到1-MVCC

- TiDB-從0到1-部署篇

- TiDB-從0到1-配置篇

- TiDB-從0到1-集群擴縮容

一、數據導出

TiDB中通過Dumpling來實現數據導出,與MySQL中的mysqldump類似,其屬于邏輯備份,備份出的文件格式為SQL或CSV。

同樣與MySQL中的mysqldump應用場景類似,Dumping最好使用于對小規模的數據備份(導出)

二、Dumpling實操

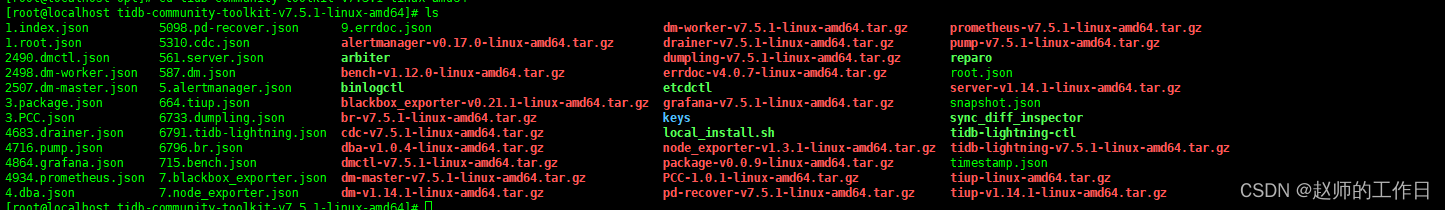

1、下載安裝

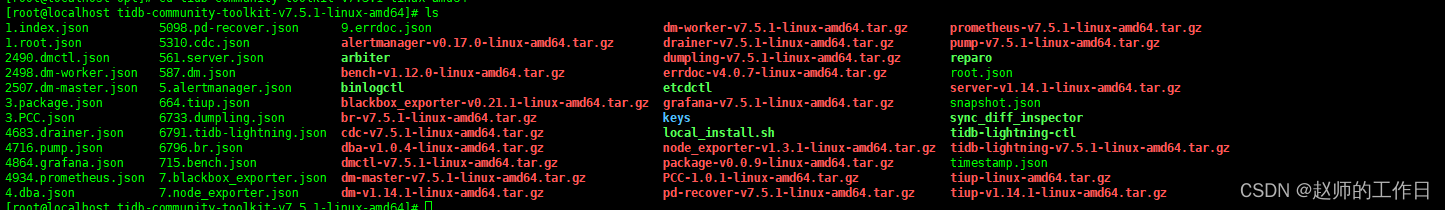

wget https://download.pingcap.org/tidb-community-toolkit-v7.5.1-linux-amd64.tar.gz

------

tar -xvf tidb-community-toolkit-v7.5.1-linux-amd64.tar.gz

2、解壓需要的工具包

tar xvf dumpling-v7.5.1-linux-amd64.tar.gz

2、權限控制

使用dumpling的用戶需要有對應的權限

- select

- reload

- lock tables

- replication client

- process

3、參數

./dumpling --help

-----------------

Flags:--allow-cleartext-passwords Allow passwords to be sent in cleartext (warning: don't use without TLS)--azblob.access-tier string Specify the storage class for azblob--azblob.account-key string Specify the account key for azblob--azblob.account-name string Specify the account name for azblob--azblob.encryption-key string Specify the server side encryption customer provided key--azblob.encryption-scope string Specify the server side encryption scope--azblob.endpoint string (experimental) Set the Azblob endpoint URL--azblob.sas-token string Specify the SAS (shared access signatures) for azblob--ca string The path name to the certificate authority file for TLS connection--case-sensitive whether the filter should be case-sensitive--cert string The path name to the client certificate file for TLS connection--complete-insert Use complete INSERT statements that include column names-c, --compress string Compress output file type, support 'gzip', 'snappy', 'zstd', 'no-compression' now--consistency string Consistency level during dumping: {auto|none|flush|lock|snapshot} (default "auto")--csv-delimiter string The delimiter for values in csv files, default '"' (default "\"")--csv-line-terminator string The line terminator for csv files, default '\r\n' (default "\r\n")--csv-null-value string The null value used when export to csv (default "\\N")--csv-separator string The separator for csv files, default ',' (default ",")-B, --database strings Databases to dump--dump-empty-database whether to dump empty database (default true)--escape-backslash use backslash to escape special characters (default true)-F, --filesize string The approximate size of output file--filetype string The type of export file (sql/csv)-f, --filter strings filter to select which tables to dump (default [*.*,!/^(mysql|sys|INFORMATION_SCHEMA|PERFORMANCE_SCHEMA|METRICS_SCHEMA|INSPECTION_SCHEMA)$/.*])--gcs.credentials-file string (experimental) Set the GCS credentials file path--gcs.endpoint string (experimental) Set the GCS endpoint URL--gcs.predefined-acl string (experimental) Specify the GCS predefined acl for objects--gcs.storage-class string (experimental) Specify the GCS storage class for objects--help Print help message and quit-h, --host string The host to connect to (default "127.0.0.1")--key string The path name to the client private key file for TLS connection-L, --logfile path Log file path, leave empty to write to console--logfmt format Log format: {text|json} (default "text")--loglevel string Log level: {debug|info|warn|error|dpanic|panic|fatal} (default "info")-d, --no-data Do not dump table data--no-header whether not to dump CSV table header-m, --no-schemas Do not dump table schemas with the data--no-sequences Do not dump sequences (default true)-W, --no-views Do not dump views (default true)--order-by-primary-key Sort dump results by primary key through order by sql (default true)-o, --output string Output directory (default "./export-2024-06-26T11:19:24+08:00")--output-filename-template string The output filename template (without file extension)--params stringToString Extra session variables used while dumping, accepted format: --params "character_set_client=latin1,character_set_connection=latin1" (default [])-p, --password string User password-P, --port int TCP/IP port to connect to (default 4000)-r, --rows uint If specified, dumpling will split table into chunks and concurrently dump them to different files to improve efficiency. For TiDB v3.0+, specify this will make dumpling split table with each file one TiDB region(no matter how many rows is).If not specified, dumpling will dump table without inner-concurrency which could be relatively slow. default unlimited--s3.acl string (experimental) Set the S3 canned ACLs, e.g. authenticated-read--s3.endpoint string (experimental) Set the S3 endpoint URL, please specify the http or https scheme explicitly--s3.external-id string (experimental) Set the external ID when assuming the role to access AWS S3--s3.provider string (experimental) Set the S3 provider, e.g. aws, alibaba, ceph--s3.region string (experimental) Set the S3 region, e.g. us-east-1--s3.role-arn string (experimental) Set the ARN of the IAM role to assume when accessing AWS S3--s3.sse string Set S3 server-side encryption, e.g. aws:kms--s3.sse-kms-key-id string KMS CMK key id to use with S3 server-side encryption.Leave empty to use S3 owned key.--s3.storage-class string (experimental) Set the S3 storage class, e.g. STANDARD--snapshot string Snapshot position (uint64 or MySQL style string timestamp). Valid only when consistency=snapshot-s, --statement-size uint Attempted size of INSERT statement in bytes (default 1000000)--status-addr string dumpling API server and pprof addr (default ":8281")-T, --tables-list strings Comma delimited table list to dump; must be qualified table names-t, --threads int Number of goroutines to use, default 4 (default 4)--tidb-mem-quota-query uint The maximum memory limit for a single SQL statement, in bytes.-u, --user string Username with privileges to run the dump (default "root")-V, --version Print Dumpling version--where string Dump only selected records

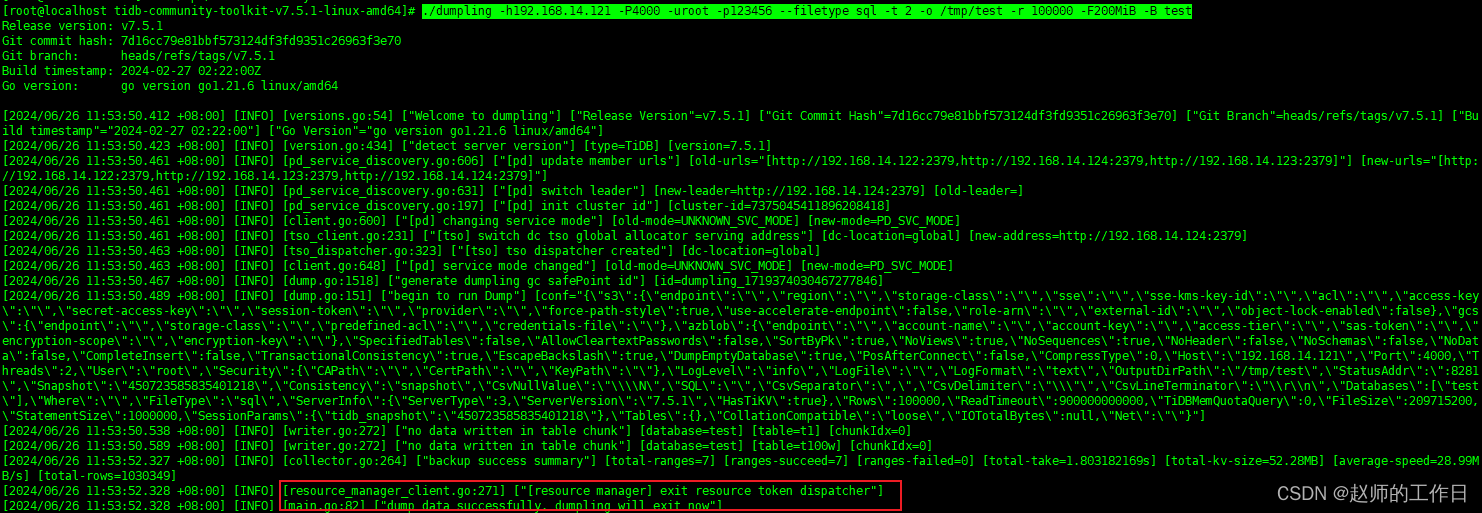

4、導出數據

導出test庫下的所有數據,同時指定導出文件為sql,導出目錄為/tmp/test,導出線程2,每10w行數據切換一次文件,每200MB切換一次文件

./dumpling -h192.168.14.121 -P4000 -uroot -p123456 --filetype sql -t 2 -o /tmp/test -r 100000 -F200MiB -B test

(備份成功)

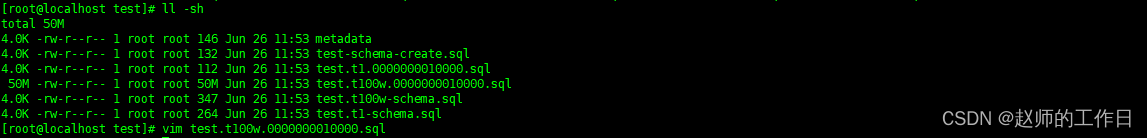

查看導出的內容

其中

- metadata:數據導出時的時間,binlog位置點

- xxx.schema.sql:建庫建表語句

- xxx.000000100.sql:數據

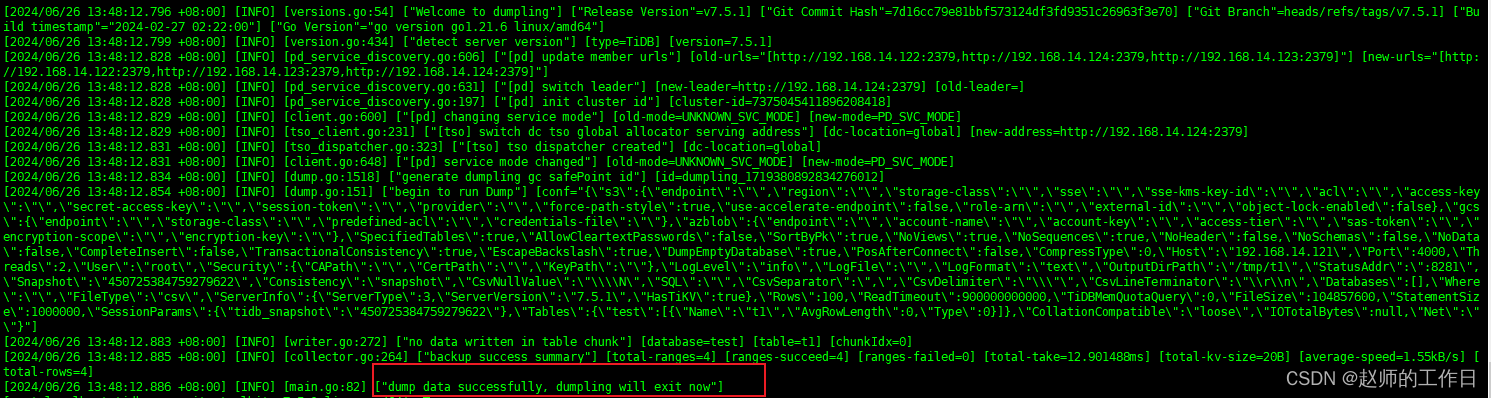

導出test庫下t1的表中id>10的數據,同時指定導出文件為CSV,導出目錄為/tmp/t1,導出線程2,每100行數據切換一次文件,每100MB切換一次文件

./dumpling -h192.168.14.121 -P4000 -uroot -p123456 --filetype csv -t 2 -o /tmp/t1 -r 100 -F100MiB -T test.t1 --where "id>10"

(備份成功)

查看導出的內容

建庫\建表語句依舊是SQL文件

不過數據為CSV格式

同時Dumpling默認也是一致性備份,通過MVCC機制備份出某個時間點的快照數據

三、數據導入

TiDB中提供了一種叫TiDB Lightning(Physical Import Mode模式)的數據導入方式,因為其導入過程TiDB是不能對外提供服務的,而且數據是從本地直接導入到TiKV,所以應用場景更適合TiDB集群初始化。

整個Lightning原理如下

- 將集群切換為導入模式

- 創建對應庫表

- 分割導入數據源

- 讀取數據源文件

- 將源數據文件寫入本地臨時文件

- 導入臨時文件到TiKV集群

- 檢驗與分析

- 將集群切換回正常模式

四、Lightning實操

1、下載安裝

wget https://download.pingcap.org/tidb-community-toolkit-v7.5.1-linux-amd64.tar.gz

------

tar -xvf tidb-community-toolkit-v7.5.1-linux-amd64.tar.gz

2、解壓需要的工具包

tar xvf tidb-lightning-v7.5.1-linux-amd64.tar.gz

3、準備配置文件

vim tidb-lighning.toml

-----------------

[lightning]

#邏輯cpu數量

#region-concurrency =

#日志

level = "info"

file = "tidb-lighning.log"[tikv-importer]

#開啟并行導入

incremental-import = true

#設置為local模式

backend = "local"

#設置本地臨時存儲路徑

sorted-kv-dir = "/tmp/sorted-kv-dir"[mydumper]

#源數據目錄

data-source-dir = "/tmp/test"[tidb]

#tidb-server監聽地址

host = "192.168.14.121"

port = 4000

user = "root"

password = ""

#表架構信息

status-port = 10080

#pd地址

pd-addr = "192.168.14.122:2379"

4、導入數據

我這里就將原集群清空,然后將上面-B導出的test庫恢復回去

./tidb-lightning --config /opt/tidb-lighning.toml

(導入成功)

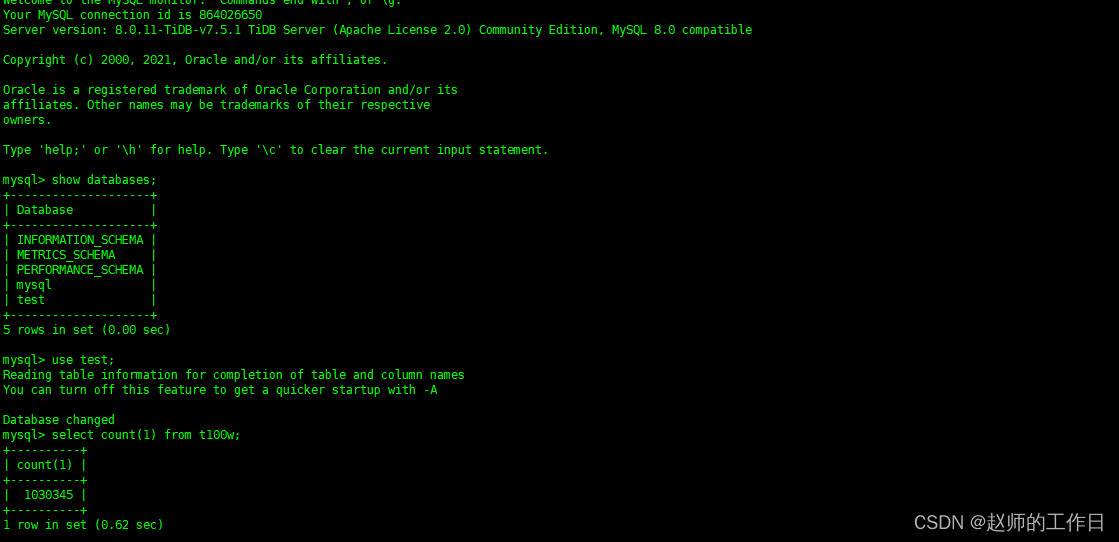

5、進入數據庫校驗

(驗證無誤)

彩蛋

TiDB-Lightning功能強大,可以通過配置文件過濾導入指定的表,同時也支持將MySQL中分庫分表數據導入到TiDB中合并為一張表,還有斷點續傳等功能。

具體可以參考官方文檔