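🚀 This series collects and keeps updating questions about bounding box regression (BBR). If you spot errors or omissions, corrections are welcome, and so is discussion!

SCYLLA-IoU (SIoU) comes from a 2022 arXiv paper: "SIoU Loss: More Powerful Learning for Bounding Box Regression".

The paper introduces a new loss function, SIoU, for training bounding box regression. Traditional BBR losses rely on metrics such as the distance between the predicted box and the ground-truth box, their overlap area, and their aspect ratio, but they ignore the direction of the mismatch between the two boxes. SIoU therefore adds an angle-sensitive penalty so that, during training, the predicted box first moves quickly toward the nearest coordinate axis. This reduces the degrees of freedom and improves training speed and accuracy.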

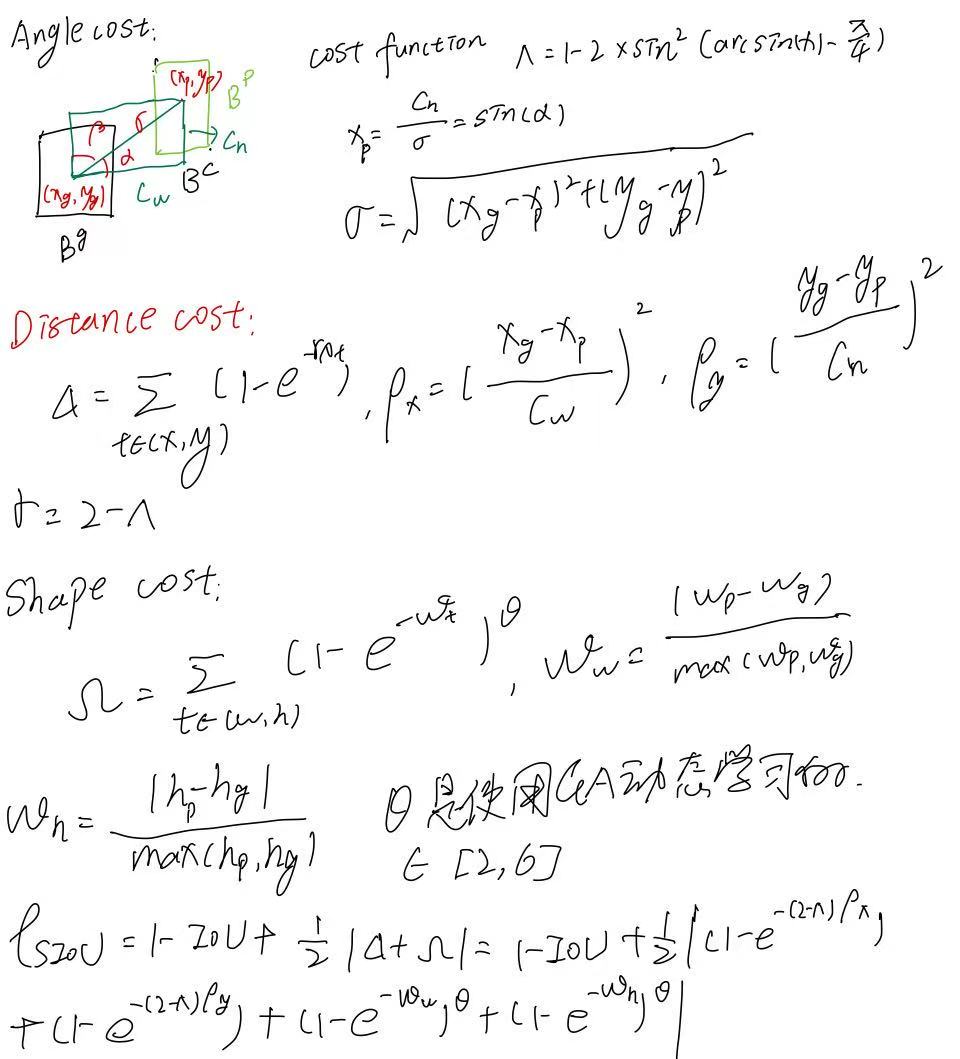

The SIoU loss is built from four costs: angle cost, distance cost, shape cost, and IoU cost.

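Angle Cost

The goal is to make the predicted box align with the X or Y axis first, which reduces the degrees of freedom in which it can "drift". An angle penalty function is added to the formulation for this purpose.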
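As a sketch of the angle cost, read off the `SIoU` branch of the `bbox_iou` implementation quoted in this post (the symbols $\Lambda$, $\sigma$, $\Delta c_x$, $\Delta c_y$ are notation chosen here for illustration and should be checked against the paper):

$$
\Lambda = \cos\!\left(2\arcsin(x) - \frac{\pi}{2}\right) = \sin(2\alpha),
\qquad
x = \sin\alpha = \frac{\min\left(|\Delta c_x|,\ |\Delta c_y|\right)}{\sigma},
\qquad
\sigma = \sqrt{\Delta c_x^{2} + \Delta c_y^{2}}
$$

Here $\Delta c_x$ and $\Delta c_y$ are the offsets between the two box centers and $\alpha$ is the angle between the center-to-center line and the nearer axis. Under this reading, $\Lambda = 0$ when the centers are already aligned with an axis and $\Lambda = 1$ at $45^\circ$.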

Distance Cost

The center-distance loss is redefined to take the angle penalty into account, so that it fits the angle optimization better.
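A possible way to write this down, again following the `SIoU` branch of the code quoted in this post rather than quoting the paper directly (symbol names are assumptions made here):

$$
\Delta = \sum_{t \in \{x,\,y\}} \left(1 - e^{-\gamma \rho_t}\right),
\qquad
\rho_x = \left(\frac{\Delta c_x}{c_w}\right)^{2},
\quad
\rho_y = \left(\frac{\Delta c_y}{c_h}\right)^{2},
\qquad
\gamma = 2 - \Lambda
$$

where $\Delta c_x, \Delta c_y$ are the center offsets, $c_w, c_h$ are the width and height of the smallest enclosing box, and $\Lambda$ is the angle cost. Since $\Lambda \in [0, 1]$, $\gamma$ ranges from 2 (centers aligned with an axis) down to 1 (centers at $45^\circ$).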

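Shape Cost

Penalizes deviations in the predicted box's aspect ratio and size, which improves the fit of scale and proportion. The parameter θ controls the strength of the penalty and is optimized for each dataset with a genetic algorithm.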
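In the same spirit, the shape cost as it appears in the `SIoU` branch of the code quoted in this post can be sketched as follows (notation chosen here; that code hard-codes the exponent as $\theta = 4$):

$$
\Omega = \sum_{t \in \{w,\,h\}} \left(1 - e^{-\omega_t}\right)^{\theta},
\qquad
\omega_w = \frac{|w - w^{gt}|}{\max(w,\ w^{gt})},
\quad
\omega_h = \frac{|h - h^{gt}|}{\max(h,\ h^{gt})}
$$

where $(w, h)$ and $(w^{gt}, h^{gt})$ are the sizes of the predicted and ground-truth boxes.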

IoU Cost

Uses the standard IoU definition to measure the overlap between the predicted box and the target box.
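To make the four costs concrete, here is a minimal scalar sketch in plain Python. It mirrors the `SIoU` branch of the `bbox_iou` code quoted in this post with `alpha=1` and no Focal weighting, where the returned value `iou - 0.5 * (distance_cost + shape_cost)` corresponds to a loss of `1 - IoU + (distance + shape) / 2`. The function name `siou_loss_xyxy`, the `(x1, y1, x2, y2)` box format, and returning the angle cost next to the loss are choices made here for illustration only. It assumes boxes with positive width and height.

```python
import math


def siou_loss_xyxy(box1, box2, theta=4, eps=1e-7):
    """Scalar SIoU loss for two boxes given as (x1, y1, x2, y2).

    Illustrative helper (not part of any library). Returns (loss, angle_cost).
    """
    b1_x1, b1_y1, b1_x2, b1_y2 = box1
    b2_x1, b2_y1, b2_x2, b2_y2 = box2
    w1, h1 = b1_x2 - b1_x1, b1_y2 - b1_y1
    w2, h2 = b2_x2 - b2_x1, b2_y2 - b2_y1

    # IoU cost: plain intersection over union.
    inter = (max(0.0, min(b1_x2, b2_x2) - max(b1_x1, b2_x1))
             * max(0.0, min(b1_y2, b2_y2) - max(b1_y1, b2_y1)))
    union = w1 * h1 + w2 * h2 - inter + eps
    iou = inter / union

    # Smallest enclosing box and center offsets.
    cw = max(b1_x2, b2_x2) - min(b1_x1, b2_x1)
    ch = max(b1_y2, b2_y2) - min(b1_y1, b2_y1)
    dx = (b2_x1 + b2_x2 - b1_x1 - b1_x2) * 0.5
    dy = (b2_y1 + b2_y2 - b1_y1 - b1_y2) * 0.5

    # Angle cost: 0 when the centers are axis-aligned, 1 at 45 degrees.
    sigma = math.hypot(dx, dy) + eps
    sin_alpha = min(abs(dx), abs(dy)) / sigma
    angle_cost = math.cos(2 * math.asin(sin_alpha) - math.pi / 2)

    # Distance cost, modulated by the angle cost through gamma.
    gamma = 2 - angle_cost
    rho_x, rho_y = (dx / cw) ** 2, (dy / ch) ** 2
    distance_cost = 2 - math.exp(-gamma * rho_x) - math.exp(-gamma * rho_y)

    # Shape cost: relative width/height mismatch raised to theta.
    omega_w = abs(w1 - w2) / max(w1, w2)
    omega_h = abs(h1 - h2) / max(h1, h2)
    shape_cost = ((1 - math.exp(-omega_w)) ** theta
                  + (1 - math.exp(-omega_h)) ** theta)

    loss = 1 - iou + 0.5 * (distance_cost + shape_cost)
    return loss, angle_cost


same = siou_loss_xyxy((0, 0, 2, 2), (0, 0, 2, 2))      # identical boxes
aligned = siou_loss_xyxy((0, 0, 2, 2), (1, 0, 3, 2))   # shifted along X only
diagonal = siou_loss_xyxy((0, 0, 2, 2), (1, 1, 3, 3))  # shifted at 45 degrees
print(same, aligned, diagonal)
```

Identical boxes give a loss close to zero, a purely horizontal shift gives an angle cost close to zero, and a 45-degree shift gives an angle cost close to one.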

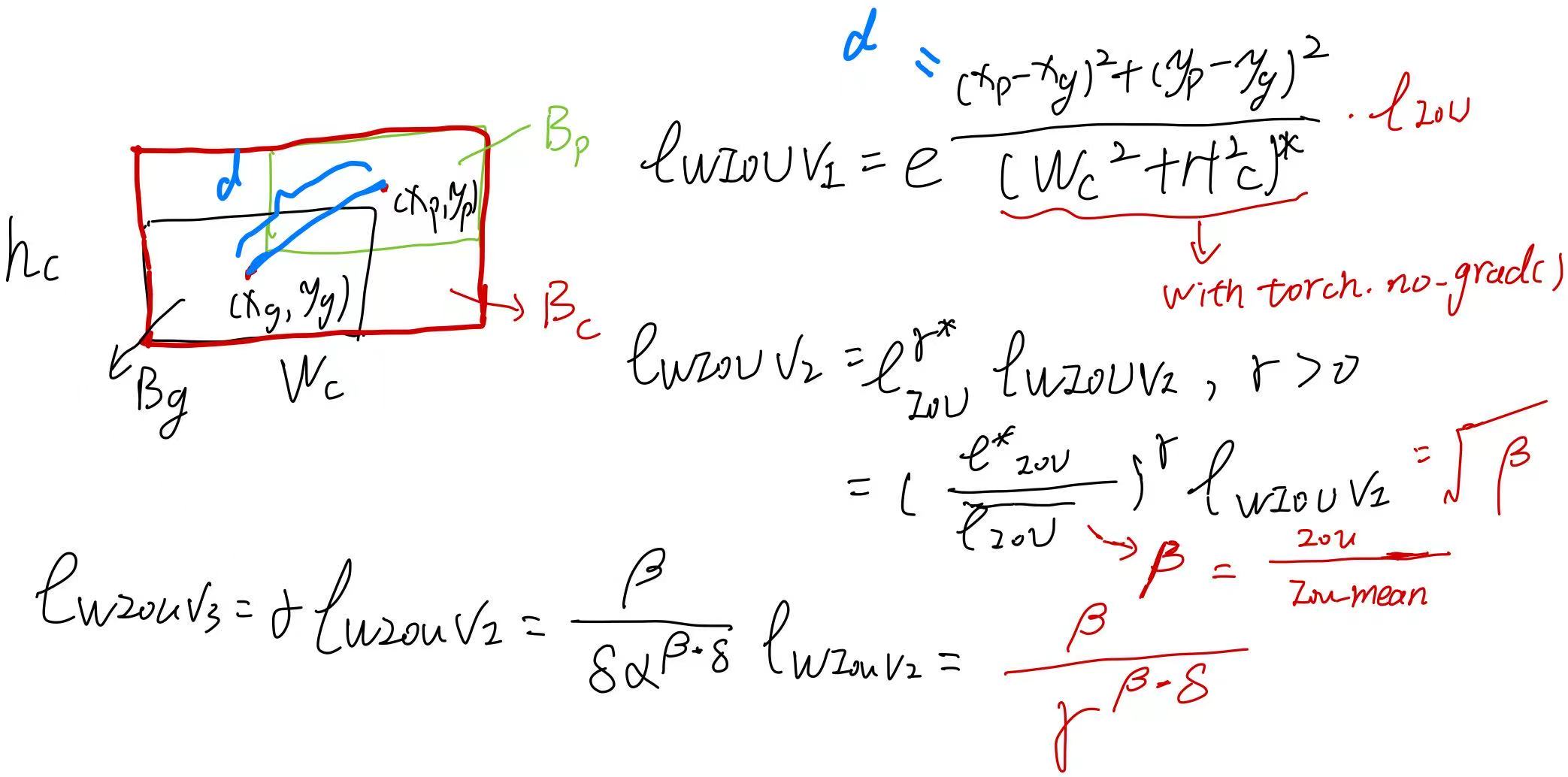

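Wise-IoU (WIoU) comes from a 2023 arXiv paper: "Wise-IoU: Bounding Box Regression Loss with Dynamic Focusing Mechanism".

The paper presents WIoU, an IoU-based BBR loss built on a dynamic non-monotonic focusing mechanism (FM). WIoU uses an outlier degree to assess the quality of anchor boxes and provides a wise gradient gain allocation strategy, so that training focuses on ordinary-quality anchor boxes and the detector's overall performance improves.

The paper also describes the widely used existing IoU losses in detail and analyzes their problems.

The paper's author has written a detailed walkthrough: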

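[Wise-IoU author's guide: a bounding box loss based on a dynamic non-monotonic focusing mechanism (CSDN blog)](https://blog.csdn.net/qq_55745968/article/details/128888122?spm=1001.2014.3001.5502)

Summary from the linked post: Object detection is a core problem in computer vision, and its performance depends on the design of the loss function. The bounding box loss is an important part of the detection loss, and a well-defined one brings a significant performance gain. Most recent work assumes that the training examples are of high quality and concentrates on strengthening the fitting ability of the bounding box loss. However, detection training sets contain low-quality examples, and blindly strengthening regression on them clearly harms detection performance. Focal-EIoU v1 was proposed to address this, but because its focusing mechanism is static, it does not fully exploit the potential of non-monotonic focusing.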

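🌍 WIoU's motivation: given that object detection training sets contain low-quality data, how should bounding box regression be done so that it still works well?

That said, the paper does lack many of the experiments needed to justify the hyperparameter settings and to show whether this approach really delivers good performance.

There are four main hyperparameters: alpha, delta, t, and n.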
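The implementation quoted in this post keeps a running mean of the IoU loss with momentum `1 - 0.5 ** (1 / 7000)`, and a comment there mentions the alternative `1 - pow(0.05, 1 / (890 * 34))`. Assuming that `t` and `n` stand for a number of epochs and the number of batches per epoch (my reading of that comment, not something this post states), the sketch below shows what such a momentum means in practice: after `t * n` updates, the initial value of the running mean retains a weight of 0.05. The helper names are made up for this example, and only the product `t * n` matters.

```python
def running_mean_momentum(t, n, residual=0.05):
    """Momentum m such that (1 - m) ** (t * n) equals `residual`."""
    return 1 - residual ** (1 / (t * n))


def remaining_weight(momentum, steps):
    """Weight still carried by the initial value after `steps` updates."""
    mean = 1.0
    for _ in range(steps):
        # Same update rule as WIoU_Scale._update, fed a constant 0.0.
        mean = (1 - momentum) * mean + momentum * 0.0
    return mean


m_comment = running_mean_momentum(t=34, n=890)  # value from the code comment
m_code = 1 - 0.5 ** (1 / 7000)                  # value actually assigned

print(remaining_weight(m_comment, 34 * 890))  # close to 0.05
print(remaining_weight(m_code, 7000))         # close to 0.5
```

In other words, the assigned value gives the running mean a half-life of 7000 updates, which is arguably easier to reason about than a 5% residual after `t * n` updates.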

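Innovations:

- Unlike earlier strategies (such as Focal Loss and Focal-EIoU), WIoU proposes a dynamic, non-monotonic gradient weighting function.
- It computes a dynamic indicator of each anchor box's relative quality from the outlier degree and adjusts the gradient weight accordingly.

🎯 Problems addressed:

- Avoids blindly strengthening learning on high-quality or low-quality samples.
- Reduces the "harmful gradients" caused by low-quality samples.
- Increases the model's attention to "ordinary-quality samples", which helps generalization.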
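As a rough illustration of the non-monotonic gradient weight, the sketch below reuses the formula from the non-monotonic branch of `WIoU_Scale._scaled_loss` in the implementation quoted in this post (defaults `gamma=1.9`, `delta=3`), rewritten in plain Python. Here `beta` is the outlier degree, i.e. a box's IoU loss divided by the running mean of the IoU loss. The function name `wiou_gain` is made up for this example.

```python
import math


def wiou_gain(beta, gamma=1.9, delta=3.0):
    """Gradient gain r = beta / (delta * gamma ** (beta - delta))."""
    return beta / (delta * gamma ** (beta - delta))


# Setting the derivative of the gain to zero gives beta = 1 / ln(gamma).
peak_beta = 1 / math.log(1.9)

for beta in (0.1, 0.5, peak_beta, 3.0, 6.0):
    print(f"beta={beta:.3f}  gain={wiou_gain(beta):.3f}")
```

The gain is small for very low `beta` (high-quality boxes), peaks at `beta = 1 / ln(gamma)`, equals 1 at `beta = delta`, and decays for large `beta` (low-quality boxes). That is the sense in which the weighting focuses on ordinary-quality boxes.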

Implementation in ultralytics-main/ultralytics/utils/metrics.py:

```python
import math

import torch


class WIoU_Scale:
    '''monotonous: {
            None: origin v1
            True: monotonic FM v2
            False: non-monotonic FM v3
        }
        momentum: The momentum of running mean'''

    iou_mean = 1.
    monotonous = False
    # The paper uses 0.05; the author says the value below is easier to interpret
    # 1 - pow(0.05, 1 / (890 * 34))
    _momentum = 1 - 0.5 ** (1 / 7000)  # 1e-2
    _is_train = True

    def __init__(self, iou):
        self.iou = iou
        self._update(self)

    @classmethod
    def _update(cls, self):
        # Running mean of the IoU loss, shared across all instances
        if cls._is_train:
            cls.iou_mean = (1 - cls._momentum) * cls.iou_mean + \
                           cls._momentum * self.iou.detach().mean().item()

    @classmethod
    def _scaled_loss(cls, self, gamma=1.9, delta=3):
        if isinstance(self.monotonous, bool):
            if self.monotonous:
                return (self.iou.detach() / self.iou_mean).sqrt()
            else:
                beta = self.iou.detach() / self.iou_mean  # outlier degree
                alpha = delta * torch.pow(gamma, beta - delta)
                return beta / alpha
        return 1


def bbox_iou(box1, box2, xywh=True, GIoU=False, DIoU=False, CIoU=False, SIoU=False, EIoU=False, WIoU=False,
             Focal=False, alpha=1, gamma=0.5, scale=False, eps=1e-7):
    # Returns Intersection over Union (IoU) of box1(1,4) to box2(n,4)

    # Get the coordinates of bounding boxes
    if xywh:  # transform from xywh to xyxy
        (x1, y1, w1, h1), (x2, y2, w2, h2) = box1.chunk(4, -1), box2.chunk(4, -1)
        w1_, h1_, w2_, h2_ = w1 / 2, h1 / 2, w2 / 2, h2 / 2
        b1_x1, b1_x2, b1_y1, b1_y2 = x1 - w1_, x1 + w1_, y1 - h1_, y1 + h1_
        b2_x1, b2_x2, b2_y1, b2_y2 = x2 - w2_, x2 + w2_, y2 - h2_, y2 + h2_
    else:  # x1, y1, x2, y2 = box1
        b1_x1, b1_y1, b1_x2, b1_y2 = box1.chunk(4, -1)
        b2_x1, b2_y1, b2_x2, b2_y2 = box2.chunk(4, -1)
        w1, h1 = b1_x2 - b1_x1, (b1_y2 - b1_y1).clamp(eps)
        w2, h2 = b2_x2 - b2_x1, (b2_y2 - b2_y1).clamp(eps)

    # Intersection area
    inter = (b1_x2.minimum(b2_x2) - b1_x1.maximum(b2_x1)).clamp(0) * \
            (b1_y2.minimum(b2_y2) - b1_y1.maximum(b2_y1)).clamp(0)

    # Union Area
    union = w1 * h1 + w2 * h2 - inter + eps
    if scale:
        self = WIoU_Scale(1 - (inter / union))

    # IoU
    # iou = inter / union # ori iou
    iou = torch.pow(inter / (union + eps), alpha)  # alpha iou
    if CIoU or DIoU or GIoU or EIoU or SIoU or WIoU:
        cw = b1_x2.maximum(b2_x2) - b1_x1.minimum(b2_x1)  # convex (smallest enclosing box) width
        ch = b1_y2.maximum(b2_y2) - b1_y1.minimum(b2_y1)  # convex height
        if CIoU or DIoU or EIoU or SIoU or WIoU:  # Distance or Complete IoU https://arxiv.org/abs/1911.08287v1
            c2 = (cw ** 2 + ch ** 2) ** alpha + eps  # convex diagonal squared
            rho2 = (((b2_x1 + b2_x2 - b1_x1 - b1_x2) ** 2 + (b2_y1 + b2_y2 - b1_y1 - b1_y2) ** 2) / 4) ** alpha  # center dist ** 2
            if CIoU:  # https://github.com/Zzh-tju/DIoU-SSD-pytorch/blob/master/utils/box/box_utils.py#L47
                v = (4 / math.pi ** 2) * (torch.atan(w2 / h2) - torch.atan(w1 / h1)).pow(2)
                with torch.no_grad():
                    alpha_ciou = v / (v - iou + (1 + eps))
                if Focal:
                    return iou - (rho2 / c2 + torch.pow(v * alpha_ciou + eps, alpha)), torch.pow(inter / (union + eps), gamma)  # Focal_CIoU
                else:
                    return iou - (rho2 / c2 + torch.pow(v * alpha_ciou + eps, alpha))  # CIoU
            elif EIoU:
                rho_w2 = ((b2_x2 - b2_x1) - (b1_x2 - b1_x1)) ** 2
                rho_h2 = ((b2_y2 - b2_y1) - (b1_y2 - b1_y1)) ** 2
                cw2 = torch.pow(cw ** 2 + eps, alpha)
                ch2 = torch.pow(ch ** 2 + eps, alpha)
                if Focal:
                    return iou - (rho2 / c2 + rho_w2 / cw2 + rho_h2 / ch2), torch.pow(inter / (union + eps), gamma)  # Focal_EIoU
                else:
                    return iou - (rho2 / c2 + rho_w2 / cw2 + rho_h2 / ch2)  # EIoU
            elif SIoU:
                # SIoU Loss https://arxiv.org/pdf/2205.12740.pdf
                s_cw = (b2_x1 + b2_x2 - b1_x1 - b1_x2) * 0.5 + eps
                s_ch = (b2_y1 + b2_y2 - b1_y1 - b1_y2) * 0.5 + eps
                sigma = torch.pow(s_cw ** 2 + s_ch ** 2, 0.5)
                sin_alpha_1 = torch.abs(s_cw) / sigma
                sin_alpha_2 = torch.abs(s_ch) / sigma
                threshold = pow(2, 0.5) / 2
                sin_alpha = torch.where(sin_alpha_1 > threshold, sin_alpha_2, sin_alpha_1)
                angle_cost = torch.cos(torch.arcsin(sin_alpha) * 2 - math.pi / 2)
                rho_x = (s_cw / cw) ** 2
                rho_y = (s_ch / ch) ** 2
                # Kept in its own name so it does not shadow the focal exponent `gamma`
                angle_gamma = angle_cost - 2
                distance_cost = 2 - torch.exp(angle_gamma * rho_x) - torch.exp(angle_gamma * rho_y)
                omiga_w = torch.abs(w1 - w2) / torch.max(w1, w2)
                omiga_h = torch.abs(h1 - h2) / torch.max(h1, h2)
                shape_cost = torch.pow(1 - torch.exp(-1 * omiga_w), 4) + torch.pow(1 - torch.exp(-1 * omiga_h), 4)
                if Focal:
                    return iou - torch.pow(0.5 * (distance_cost + shape_cost) + eps, alpha), torch.pow(inter / (union + eps), gamma)  # Focal_SIoU
                else:
                    return iou - torch.pow(0.5 * (distance_cost + shape_cost) + eps, alpha)  # SIoU
            elif WIoU:
                if Focal:
                    raise RuntimeError("WIoU do not support Focal.")
                elif scale:
                    # WIoU_Scale._scaled_loss(self)
                    return getattr(WIoU_Scale, '_scaled_loss')(self), (1 - iou) * torch.exp((rho2 / c2)), iou  # WIoU https://arxiv.org/abs/2301.10051
                else:
                    return iou, torch.exp((rho2 / c2))  # WIoU v1
            if Focal:
                return iou - rho2 / c2, torch.pow(inter / (union + eps), gamma)  # Focal_DIoU
            else:
                return iou - rho2 / c2  # DIoU
        c_area = cw * ch + eps  # convex area
        if Focal:
            return iou - torch.pow((c_area - union) / c_area + eps, alpha), torch.pow(inter / (union + eps), gamma)  # Focal_GIoU https://arxiv.org/pdf/1902.09630.pdf
        else:
            return iou - torch.pow((c_area - union) / c_area + eps, alpha)  # GIoU https://arxiv.org/pdf/1902.09630.pdf
    if Focal:
        return iou, torch.pow(inter / (union + eps), gamma)  # Focal_IoU
    else:
        return iou  # IoU
```

The call in ultralytics-main/ultralytics/utils/loss.py, replacing the original two lines of code:

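```python
iou = bbox_iou(pbox, tbox[i], WIoU=True, scale=True).squeeze()
if type(iou) is tuple:
    lbox += (iou[1].detach() * (1.0 - iou[0])).mean()
else:
    lbox += (1.0 - iou).mean()
```

Written this way, the code raises an error, because with `WIoU=True` the function `bbox_iou` returns a tuple and `.squeeze()` is called on that tuple:

```
AttributeError: 'tuple' object has no attribute 'squeeze'
```

It needs to be written like this instead:

```python
iou = bbox_iou(pbox, tbox[i], WIoU=True, scale=True)
if type(iou) is tuple:
    if len(iou) == 2:
        # WIoU v1: (iou, distance factor)
        lbox += (iou[1].detach().squeeze() * (1 - iou[0].squeeze())).mean()
        iou = iou[0].squeeze()
    else:
        # Scaled WIoU: (gain, weighted IoU loss, iou)
        lbox += (iou[0] * iou[1]).mean()
        iou = iou[2].squeeze()
else:
    lbox += (1.0 - iou.squeeze()).mean()
    iou = iou.squeeze()
```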

The implementation above comes from: https://github.com/z1069614715/objectdetection_script/blob/master/yolo-improve/iou.py
