1. Introduction

The previous articles all accessed the dma-buf from kernel space. In this article we look at how to access a dma-buf from user space. User-space access to a dma-buf is, of course, also a form of CPU access.

2. mmap

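To let applications read and write dma-buf memory directly from user space, dma_buf_ops provides an mmap callback. It maps the dma-buf's physical memory directly into user space, so an application can access that memory much as it would access an ordinary file.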
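As a point of comparison, the sketch below shows the same mmap() pattern on an ordinary temporary file instead of a dma-buf. It is only an illustration: the function name read_via_mmap and the /tmp path are assumptions made for this example and have nothing to do with the dma-buf API.

```c
#include <assert.h>
#include <stdlib.h>
#include <string.h>
#include <sys/mman.h>
#include <unistd.h>

/*
 * Write `msg` into a fresh temporary file, map the file with mmap(), and
 * copy the mapped bytes into `out`. Returns 0 on success, -1 on failure.
 * (Hypothetical helper, used only for illustration.)
 */
int read_via_mmap(const char *msg, char *out, size_t out_len)
{
    char path[] = "/tmp/mmap_demo_XXXXXX";
    size_t len = strlen(msg) + 1;   /* include the terminating NUL */
    int fd, ret = -1;
    char *map;

    assert(out_len >= len);

    fd = mkstemp(path);
    if (fd < 0)
        return -1;
    unlink(path);                   /* the file disappears once fd is closed */

    if (write(fd, msg, len) != (ssize_t)len)
        goto out_close;

    /* Same call shape as in the dma-buf examples, but on a regular file. */
    map = mmap(NULL, len, PROT_READ, MAP_SHARED, fd, 0);
    if (map == MAP_FAILED)
        goto out_close;

    memcpy(out, map, len);          /* read the file through the mapping */
    munmap(map, len);
    ret = 0;

out_close:
    close(fd);
    return ret;
}
```

Calling read_via_mmap("hello world", buf, sizeof(buf)) with a large enough buffer should leave the string in buf. With a dma-buf, the only difference on the user-space side is which fd is handed to mmap().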

In Linux device drivers, most drivers implement their mmap operation by calling remap_pfn_range(), and dma-buf is no exception.

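Besides the mmap callback in dma_buf_ops, dma-buf also provides the dma_buf_mmap() kernel API. Other device drivers can use it to reuse the dma-buf mmap implementation directly, and in that way implement their own mmap file operation indirectly.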


Next, we use two examples to show how to access the dma-buf's physical memory from user space.

- Example 1: call mmap() directly on the dma-buf fd

- Example 2: call mmap() on the exporter's fd

3. Calling mmap() directly on the dma-buf fd

This example shows how to implement the dma-buf mmap callback in the driver, and how to call mmap() directly on the dma-buf fd from user space.

export_test.c

#include <linux/dma-buf.h>
#include <linux/err.h>
#include <linux/fs.h>
#include <linux/miscdevice.h>
#include <linux/mm.h>
#include <linux/module.h>
#include <linux/scatterlist.h>
#include <linux/slab.h>
#include <linux/uaccess.h>

struct dma_buf *dmabuf_export;
EXPORT_SYMBOL(dmabuf_export);

static int exporter_attach(struct dma_buf *dmabuf,
                           struct dma_buf_attachment *attachment)
{
    pr_info("dmabuf attach device: %s\n", dev_name(attachment->dev));
    return 0;
}

static void exporter_detach(struct dma_buf *dmabuf,
                            struct dma_buf_attachment *attachment)
{
    pr_info("dmabuf detach device: %s\n", dev_name(attachment->dev));
}

static struct sg_table *exporter_map_dma_buf(struct dma_buf_attachment *attachment,
                                             enum dma_data_direction dir)
{
    struct sg_table *table;
    int ret;

    table = kmalloc(sizeof(*table), GFP_KERNEL);
    if (!table)
        return ERR_PTR(-ENOMEM);

    ret = sg_alloc_table(table, 1, GFP_KERNEL);
    if (ret) {
        pr_err("sg_alloc_table err\n");
        kfree(table);
        return ERR_PTR(ret);
    }

    sg_dma_len(table->sgl) = PAGE_SIZE;
    pr_info("sg_dma_len: %u\n", sg_dma_len(table->sgl));

    /*
     * The DMA mapping stays disabled, as in the original listing:
     * sg_dma_address(table->sgl) = dma_map_single(NULL, vaddr, PAGE_SIZE, dir);
     */
    return table;
}

static void exporter_unmap_dma_buf(struct dma_buf_attachment *attachment,
                                   struct sg_table *table,
                                   enum dma_data_direction dir)
{
    /* Nothing was DMA-mapped in exporter_map_dma_buf(), so only free the table. */
    sg_free_table(table);
    kfree(table);
}

static void exporter_release(struct dma_buf *dmabuf)
{
    kfree(dmabuf->priv);
}

/*
static void *exporter_kmap_atomic(struct dma_buf *dmabuf, unsigned long page_num)
{
    return NULL;
}

static void *exporter_kmap(struct dma_buf *dmabuf, unsigned long page_num)
{
    return NULL;
}
*/

static void *exporter_vmap(struct dma_buf *dmabuf)
{
    return dmabuf->priv;
}

static int exporter_mmap(struct dma_buf *dmabuf, struct vm_area_struct *vma)
{
    void *vaddr = dmabuf->priv;
    struct page *page_ptr = virt_to_page(vaddr);

    /* Map the single page behind the dma-buf into the caller's address space. */
    return remap_pfn_range(vma, vma->vm_start, page_to_pfn(page_ptr),
                           PAGE_SIZE, vma->vm_page_prot);
}

static const struct dma_buf_ops exp_dmabuf_ops = {
    .attach = exporter_attach,
    .detach = exporter_detach,
    .map_dma_buf = exporter_map_dma_buf,
    .unmap_dma_buf = exporter_unmap_dma_buf,
    .release = exporter_release,
    // .map_atomic = exporter_kmap_atomic,
    // .map = exporter_kmap,
    .vmap = exporter_vmap,
    .mmap = exporter_mmap,
};

static struct dma_buf *exporter_alloc_page(void)
{
    DEFINE_DMA_BUF_EXPORT_INFO(exp_info);
    struct dma_buf *dmabuf;
    void *vaddr;

    vaddr = kzalloc(PAGE_SIZE, GFP_KERNEL);
    if (!vaddr)
        return ERR_PTR(-ENOMEM);
    sprintf(vaddr, "hello world");

    exp_info.ops = &exp_dmabuf_ops;
    exp_info.size = PAGE_SIZE;
    exp_info.flags = O_CLOEXEC;
    exp_info.priv = vaddr;

    /* dma_buf_export() reports failure with an ERR_PTR, not with NULL. */
    dmabuf = dma_buf_export(&exp_info);
    if (IS_ERR(dmabuf)) {
        pr_err("DMA buf export error\n");
        kfree(vaddr);
    }
    return dmabuf;
}

static long exporter_ioctl(struct file *filp, unsigned int cmd, unsigned long arg)
{
    /* Create a new fd bound to the dma-buf's file and hand it to user space. */
    int fd = dma_buf_fd(dmabuf_export, O_CLOEXEC);

    if (fd < 0)
        return fd;
    if (unlikely(copy_to_user((void __user *)arg, &fd, sizeof(fd))))
        return -EFAULT;
    return 0;
}

static struct file_operations exporter_fops = {
    .owner = THIS_MODULE,
    .unlocked_ioctl = exporter_ioctl,
};

static struct miscdevice mdev = {
    .minor = MISC_DYNAMIC_MINOR,
    .name = "exporter",
    .fops = &exporter_fops,
};

static int __init exporter_init(void)
{
    dmabuf_export = exporter_alloc_page();
    if (IS_ERR(dmabuf_export))
        return PTR_ERR(dmabuf_export);
    return misc_register(&mdev);
}

static void __exit exporter_exit(void)
{
    misc_deregister(&mdev);
}

module_init(exporter_init);
module_exit(exporter_exit);

MODULE_LICENSE("GPL");
MODULE_AUTHOR("ZWQ");
MODULE_DESCRIPTION("zwq dma used buffer");

As the example shows, besides implementing the dma-buf mmap callback, we also introduce a misc driver. Its ioctl interface passes the dma-buf fd up to the application, which can then call mmap() directly on that dma-buf fd.

Note:

static int exporter_mmap(struct dma_buf *dmabuf, struct vm_area_struct *vma)
{
    void *vaddr = dmabuf->priv;
    struct page *page_ptr = virt_to_page(vaddr);

    return remap_pfn_range(vma, vma->vm_start, page_to_pfn(page_ptr),
                           PAGE_SIZE, vma->vm_page_prot);
}

If the virtual address vaddr above is passed to remap_pfn_range() like this:

remap_pfn_range(vma, vma->vm_start, virt_to_pfn(vaddr), PAGE_SIZE, vma->vm_page_prot);

the code fails to compile. The only option is to convert vaddr to a struct page first with virt_to_page(), and then convert that page to a page frame number with page_to_pfn().
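remap_pfn_range() takes a page frame number (PFN) rather than an address. In the kernel, virt_to_page() and page_to_pfn() perform the lookup. The plain arithmetic relation between a physical address and its PFN can be modeled in user space as in the sketch below. The DEMO_ names and the 4 KiB page size are assumptions for illustration, not kernel definitions.

```c
#include <assert.h>
#include <stdint.h>

#define DEMO_PAGE_SHIFT 12                               /* assumption: 4 KiB pages */
#define DEMO_PAGE_SIZE  (UINT64_C(1) << DEMO_PAGE_SHIFT)

/* PFN of the page that contains physical address `phys`. */
uint64_t demo_phys_to_pfn(uint64_t phys)
{
    return phys >> DEMO_PAGE_SHIFT;
}

/* Physical address of the first byte of page frame `pfn`. */
uint64_t demo_pfn_to_phys(uint64_t pfn)
{
    assert(pfn <= (UINT64_MAX >> DEMO_PAGE_SHIFT));     /* shift must not overflow */
    return pfn << DEMO_PAGE_SHIFT;
}

/* Byte offset of `phys` inside its page. */
uint64_t demo_page_offset(uint64_t phys)
{
    return phys & (DEMO_PAGE_SIZE - 1);
}
```

Under these assumptions, address 0x12345678 lies in page frame 0x12345 at offset 0x678.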

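Why must the fd be passed through ioctl? I will discuss this question in detail in the next article.

In the ioctl interface we used the dma_buf_fd() function, which creates a new fd and binds it to the dma-buf's file. I will also cover this function in detail in the next article.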

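User-space program

mmap_dmabuf.c

#include <fcntl.h>
#include <stddef.h>
#include <stdio.h>
#include <sys/ioctl.h>
#include <sys/mman.h>
#include <unistd.h>

int main(int argc, char *argv[])
{
    int fd;
    int dmabuf_fd = -1;
    char *str;

    fd = open("/dev/exporter", O_RDONLY);
    if (fd < 0) {
        perror("open");
        return 1;
    }

    /* The driver ignores the command number and returns the dma-buf fd. */
    if (ioctl(fd, 0, &dmabuf_fd) < 0) {
        perror("ioctl");
        close(fd);
        return 1;
    }
    close(fd);

    str = mmap(NULL, 4096, PROT_READ, MAP_SHARED, dmabuf_fd, 0);
    if (str == MAP_FAILED) {
        perror("mmap");
        close(dmabuf_fd);
        return 1;
    }
    printf("read from dmabuf mmap: %s\n", str);

    munmap(str, 4096);
    close(dmabuf_fd);
    return 0;
}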

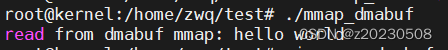

?編譯運行后,結果如下:

可以看到,userspace 程序通過?mmap()?接口成功的訪問到 dma-buf 的物理內存。
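The kernel's dma-buf documentation asks user space to bracket CPU access to a mapped dma-buf with DMA_BUF_IOCTL_SYNC calls. Whether the toy exporter in this article needs that is not something the text establishes, so treat the following as a sketch of the general pattern only. The helper names demo_dmabuf_sync and demo_read_synced are hypothetical; the ioctl, the flags, and struct dma_buf_sync come from the <linux/dma-buf.h> UAPI header.

```c
#include <linux/dma-buf.h>
#include <stddef.h>
#include <string.h>
#include <sys/ioctl.h>

/* Issue one DMA_BUF_IOCTL_SYNC call; returns 0 on success, -1 on error. */
int demo_dmabuf_sync(int dmabuf_fd, __u64 flags)
{
    struct dma_buf_sync sync = { .flags = flags };

    return ioctl(dmabuf_fd, DMA_BUF_IOCTL_SYNC, &sync);
}

/*
 * Copy `len` bytes out of an existing mapping `map` of the dma-buf,
 * bracketed by SYNC_START and SYNC_END for read access.
 */
int demo_read_synced(int dmabuf_fd, void *dst, const void *map, size_t len)
{
    if (demo_dmabuf_sync(dmabuf_fd, DMA_BUF_SYNC_START | DMA_BUF_SYNC_READ) < 0)
        return -1;

    memcpy(dst, map, len);

    return demo_dmabuf_sync(dmabuf_fd, DMA_BUF_SYNC_END | DMA_BUF_SYNC_READ);
}
```

In mmap_dmabuf.c, the printf() that reads the mapped string would sit between the START and END calls.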


4. Calling mmap() on the exporter's fd

This example shows how to use the dma_buf_mmap() kernel API to simplify the implementation of a device driver's mmap file operation.

export_test.c

Add an exporter_misc_mmap() function. The changes are as follows:

/* Everything not shown here is identical to export_test.c in Example 1. */

static int exporter_misc_mmap(struct file *file, struct vm_area_struct *vma)
{
    /* Forward the misc device's mmap to the dma-buf's own mmap callback. */
    return dma_buf_mmap(dmabuf_export, vma, 0);
}

static struct file_operations exporter_fops = {
    .owner = THIS_MODULE,
    .unlocked_ioctl = exporter_ioctl,
    .mmap = exporter_misc_mmap,
};

Compared with the driver in Example 1, the driver in Example 2 no longer needs to pass the dma-buf fd up through ioctl. Instead, it grafts the dma-buf mmap callback directly onto the misc driver's mmap file operation. When the application calls mmap() on the misc device, what actually gets mapped is the dma-buf's physical memory.
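Before dma_buf_mmap() calls the exporter's mmap callback, it also validates the requested range against the size of the buffer. The user-space model below sketches that kind of check. The function name and DEMO_PAGE_SHIFT are hypothetical, and the exact kernel code may differ between versions.

```c
#include <errno.h>

#define DEMO_PAGE_SHIFT 12   /* assumption: 4 KiB pages */

/*
 * Model of a range check for mapping `nr_pages` pages starting at page
 * offset `pgoff` of a buffer that is `buf_size` bytes long.
 * Returns 0 if the range fits, or a negative errno value otherwise.
 */
int demo_check_mmap_range(unsigned long buf_size, unsigned long pgoff,
                          unsigned long nr_pages)
{
    /* Reject a page offset so large that the addition wraps around. */
    if (pgoff + nr_pages < pgoff)
        return -EOVERFLOW;

    /* Reject a range that extends past the end of the buffer. */
    if (pgoff + nr_pages > (buf_size >> DEMO_PAGE_SHIFT))
        return -EINVAL;

    return 0;
}
```

For the one-page buffer used in this article, a request for one page at offset 0 passes this check, while a request for two pages, or for one page at offset 1, is rejected.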

User-space program

mmap_dmabuf.c

#include <fcntl.h>
#include <stddef.h>
#include <stdio.h>
#include <sys/mman.h>
#include <unistd.h>

int main(int argc, char *argv[])
{
    int fd;
    char *str;

    fd = open("/dev/exporter", O_RDONLY);
    if (fd < 0) {
        perror("open");
        return 1;
    }

    /* Unlike Example 1, no ioctl() is needed: mmap() the misc device fd itself. */
    str = mmap(NULL, 4096, PROT_READ, MAP_SHARED, fd, 0);
    if (str == MAP_FAILED) {
        perror("mmap");
        close(fd);
        return 1;
    }
    printf("read from dmabuf mmap: %s\n", str);

    munmap(str, 4096);
    close(fd);
    return 0;
}

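Compared with the user-space program in Example 1, Example 2 no longer obtains the dma-buf fd through ioctl(). It calls mmap() directly on the fd of the exporter misc device, so what runs is the misc driver's mmap file operation. The final output is, of course, the same.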
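Both user-space programs hard-code a length of 4096, while the driver sizes its buffer with PAGE_SIZE. On systems whose page size is not 4 KiB, querying the page size at run time is a safer habit. The sketch below shows one way to do that; the demo_ helper names are made up for this example.

```c
#include <assert.h>
#include <stddef.h>
#include <unistd.h>

/* Page size reported by the system, falling back to 4096 if unavailable. */
size_t demo_page_size(void)
{
    long sz = sysconf(_SC_PAGESIZE);

    return sz > 0 ? (size_t)sz : 4096;
}

/* Round `len` up to the next multiple of `page` (which must be a power of two). */
size_t demo_round_up_to_page(size_t len, size_t page)
{
    assert(page != 0 && (page & (page - 1)) == 0);
    return (len + page - 1) & ~(page - 1);
}
```

The mmap() length in mmap_dmabuf.c could then be demo_page_size() instead of the literal 4096.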
