DStream創建(kafka數據源)

1.在idea中的 pom.xml 中添加依賴

<dependency><groupId>org.apache.spark</groupId><artifactId>spark-streaming-kafka-0-10_2.12</artifactId><version>3.0.0</version>

</dependency>2.創建一個新的object,并寫入以下代碼

import org.apache.kafka.clients.consumer.ConsumerConfig

import org.apache.kafka.common.serialization.StringDeserializer

import org.apache.spark.SparkConf

import org.apache.spark.streaming.dstream.InputDStream

import org.apache.spark.streaming.kafka010.{ConsumerStrategies, KafkaUtils, LocationStrategies}

import org.apache.spark.streaming.{Seconds, StreamingContext}

import org.apache.kafka.clients.consumer.ConsumerRecord/*** 通過 DirectAPI 0 - 10 消費 Kafka 數據* 消費的 offset 保存在 _consumer_offsets 主題中*/

object DirectAPI {def main(args: Array[String]): Unit = {val sparkConf = new SparkConf().setMaster("local[*]").setAppName("direct")val ssc = new StreamingContext(sparkConf, Seconds(3))// 定義 Kafka 相關參數val kafkaPara: Map[String, Object] = Map[String, Object](ConsumerConfig.BOOTSTRAP_SERVERS_CONFIG -> "node01:9092,node02:9092,node03:9092",ConsumerConfig.GROUP_ID_CONFIG -> "kafka","key.deserializer" -> classOf[StringDeserializer],"value.deserializer" -> classOf[StringDeserializer])// 通過讀取 Kafka 數據,創建 DStreamval kafkaDStream: InputDStream[ConsumerRecord[String, String]] = KafkaUtils.createDirectStream[String, String](ssc,LocationStrategies.PreferConsistent,ConsumerStrategies.Subscribe[String, String](Set("kafka"), kafkaPara))// 提取出數據中的 value 部分val valueDStream = kafkaDStream.map(record => record.value())// WordCount 計算邏輯valueDStream.flatMap(_.split(" ")).map((_, 1)).reduceByKey(_ + _).print()ssc.start()ssc.awaitTermination()}

} 3.在虛擬機中,開啟kafka、zookeeper、yarn、dfs集群

4.創建一個新的topic---kafka,用于接下來的操作

查看所有的topic(是否創建成功)

開啟kafka生產者,用于產生數據

啟動idea中的代碼,在虛擬機中輸入數據

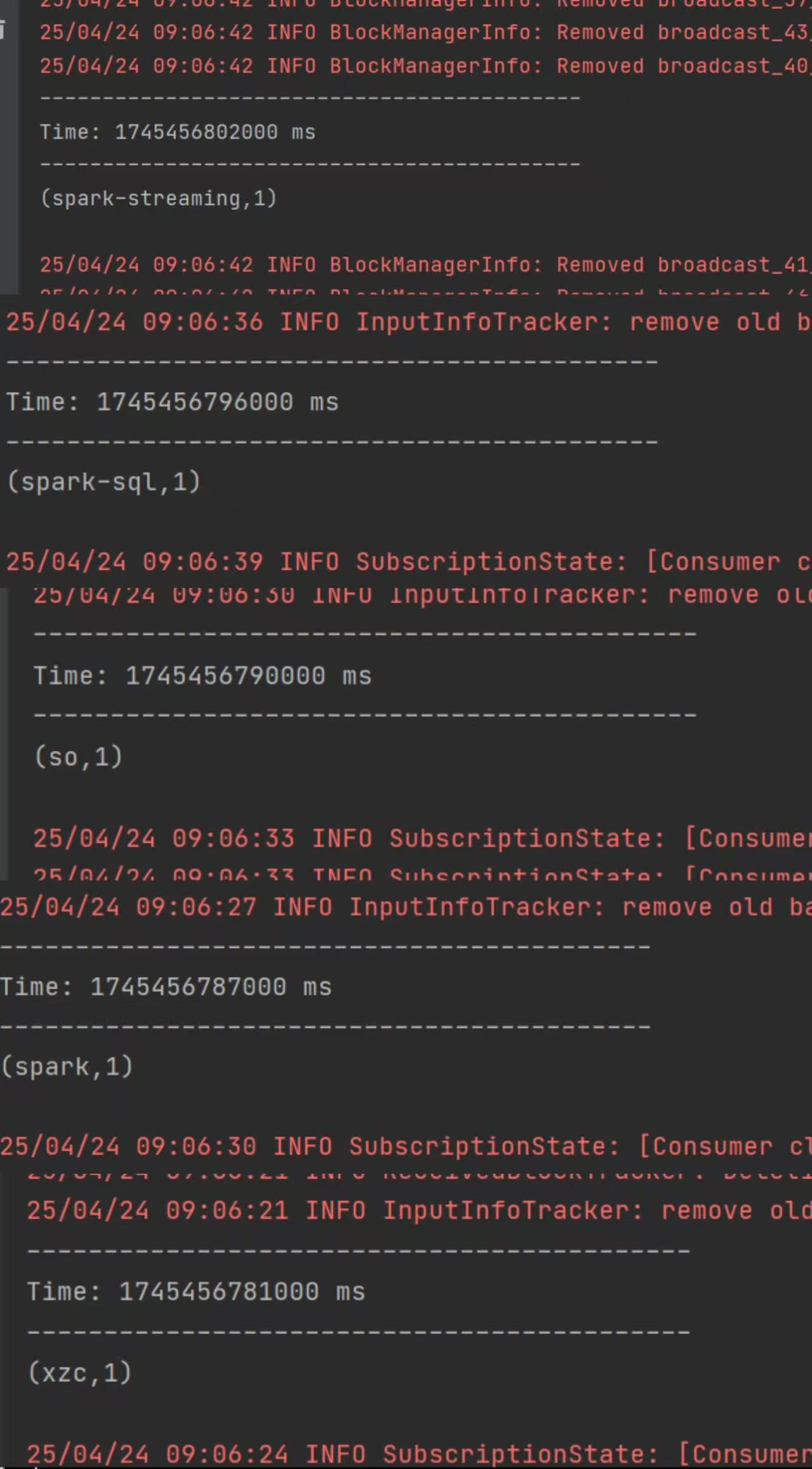

輸入后可以在idea中查看到

查看消費進度

)

- KMP算法)

)