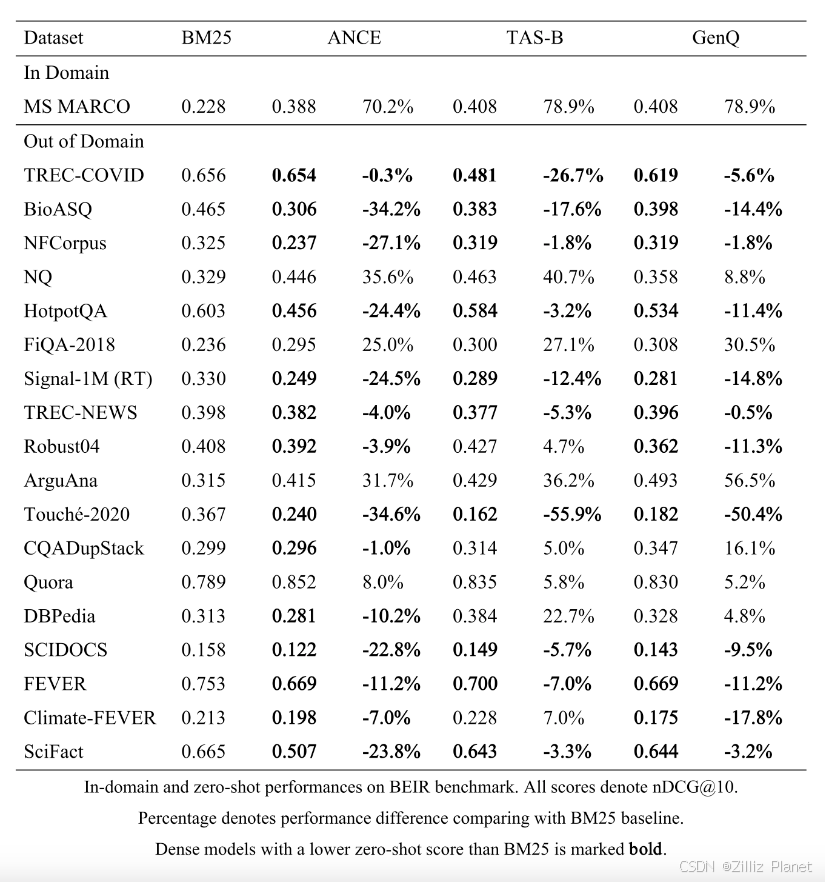

在信息檢索方法的發展歷程中,我們見證了從傳統的統計關鍵詞匹配到如 BERT 這樣的深度學習模型的轉變。雖然傳統方法提供了堅實的基礎,但往往難以精準捕捉文本的語義關系。如 BERT 這樣的稠密檢索方法通過利用高維向量捕獲文本的上下文語義,為搜索技術帶來了顯著進步。然而,由于這些方法依賴于特定領域的知識,它們在處理領域外(out-of-domain)問題時可能會遇到困難。

Learned 稀疏 Embedding 提供了一套獨特的解決方案,結合了稀疏表示的可解釋性(interpretability)和深度學習模型的語境理解能力。在本篇博客中,我們將探討 Learned 稀疏向量的底層機制、優勢以及它們在實際應用中的潛力。特別是與 Milvus 向量數據庫結合時,稀疏向量能夠改進信息檢索系統,通過提高檢索效率,提供富含上下文的答案,最終優化系統性能。

信息檢索方式演變:從關鍵詞匹配到上下文理解

早期信息檢索系統主要依靠基于統計的關鍵詞匹配方法,如 TF-IDF 和 BM25 等詞袋(Bag of Words)算法。這些方法通過分析術語的出現頻率和在整個文檔庫中的分布來評估文檔的相關性。這些簡單而又豐富的傳統方法成為了互聯網快速增長時期的流行工具。

2013 年,Google 推出了 Word2Vec,這是首次嘗試使用高維向量來表示單詞并捕捉它們細微的語義差異。這一方法標志著信息檢索方法逐漸轉向由機器學習驅動。

隨著 BERT 的出現——一種基于 Transformer 的革命性預訓練語言模型,徹底改變了信息檢索的方式。BERT 利用強大的 Transformer 中的注意力機制,捕捉文檔的復雜語境語義,并將其壓縮成稠密向量,為查詢提供強大且語義準確的檢索,從根本上優化了信息檢索過程。

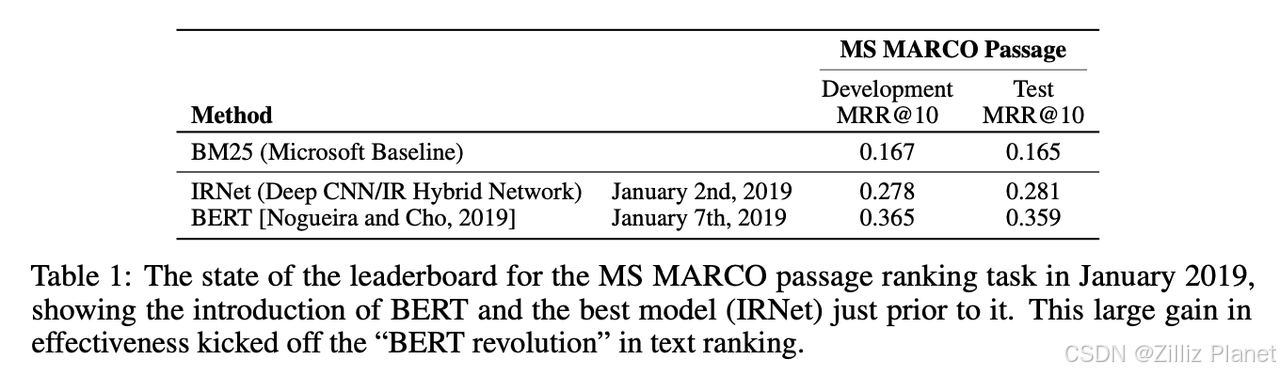

BERT 推出三個月后,Nogueira 和 Cho 將其應用于 MS MARCO 段落排名任務。與之前最佳的 IRNet 方法相比,BERT 的效果提升了 30%,是 BM25 baseline 的 2 倍。到了 2024 年 3 月,文本排名分數更是高達 0.450 (eval)和 0.463(dev)。

這些突破凸顯了信息檢索方法的不斷演變。我們自此步入了自然語言處理(NLP)的紀元。

領域外信息檢索挑戰

稠密向量技術,如 BERT,與傳統的詞袋模型相比有著其獨有的優勢——能夠精確把握文本中的復雜語境。這一特性極大地提升了信息檢索系統在處理熟悉領域查詢時的性能。

然而,當 BERT 進入非熟悉的領域進行 Out-of-Domain(OOD)檢索時,會面臨挑戰。其訓練過程本質上偏向于訓練數據的特點,這使得它在為 OOD 數據集中未見過的文本片段生成有意義的 embeddings 時表現不佳,特別是在含有豐富特定領域術語的數據集中,這一限制尤為突出。

解決這一問題的一種可能方法是微調。然而,這種解決方案有時行不通或者成本較高。微調需要訪問一個中到大型的標注數據集,包括與目標領域相關的查詢、正面和負面文檔。此外,創建這樣的數據集需要領域專家的專業知識,以確保數據質量,這一過程十分耗時且成本高昂。

另一方面,盡管傳統的詞袋算法在處理 OOD 信息檢索時面臨詞匯不匹配問題(查詢與相關文檔之間通常缺乏術語重疊),但它們在性能上仍然優于 BERT。這是因為在詞袋算法中,未被識別的術語不是被“學習”,而是被“精確匹配”的。

學習得到的稀疏向量:將傳統稀疏向量表示與上下文信息相結合

結合 Out-of-Domain 檢索的精確詞匹配技術,如詞袋模型和 BERT 等稠密向量檢索方法進行語義檢索,長期以來一直是信息檢索領域的一項主要任務。幸運的是,出現了新的解決方法:學習得到的稀疏 embedding。

那么,到底什么是學習得到的稀疏 embedding 向量呢?

學習得到的稀疏 embedding 指的是通過復雜的 ML 模型(如 SPLADE 和 BGE-M3 等)生成的稀疏向量表示。與僅依賴于統計方法(如 BM25)生成的傳統稀疏向量不同,學習得到的稀疏 embedding 在保留關鍵詞搜索能力的同時,豐富了稀疏表示的上下文信息。它們能夠辨識相鄰或相關詞語的重要性,即使這些詞語在文本中沒有明確出現。最終生成一種擅長捕捉相關關鍵詞和類別的“學習得到的”稀疏表示。

以 SPLADE 為例。在編碼給定文本時,SPLADE 生成的稀疏 embedding 形式為 token-to-weight 映射,例如:

{"hello": 0.33, "world": 0.72}乍看之下,這些 embedding 與由統計方法生成的傳統稀疏 embedding 類似。然而,它們的組成有一個關鍵區別:維度(詞匯)和權重。帶有上下文化信息的機器學習模型決定了學習型稀疏 embedding 的維度(詞匯)和權重。這種稀疏表示與學習得到的上下文的結合為信息檢索任務提供了一種強大的工具,無縫彌合了精確詞匹配和語義理解之間的鴻溝。

與稠密向量不同,學習得到的稀疏 embedding 采取更為簡潔的方式,只保留文本信息中的關鍵內容。這種簡化有助于防止模型過擬合(over-fitting)訓練數據,提高模型在遇到新的數據模式時的泛化能力,尤其是在 Out-Of-Domain 信息檢索場景中。

通過優先處理關鍵文本元素,同時舍棄不必要的細節,學習得到的稀疏 embedding 完美平衡了捕獲相關信息與避免過擬合兩個方面,從而增強了它們在各種檢索任務中的應用價值。

學習得到的稀疏 Embedding與稠密檢索方法相結合,在領域內檢索任務中展現了強大的性能。來自 BGE-M3 的研究顯示,學習得到的稀疏向量在多語言或跨語言檢索任務中優于稠密向量,在準確性方面表現更佳。結合使用這兩種 embeddings 時,檢索準確性得到了進一步提升,展現了兩種 Embedding 相輔相成的效果。

此外,這些 embedding 固有的稀疏性大大簡化了向量相似性搜索,減少了需要消耗的計算資源。此外,學習得到的稀疏向量通過匹配增強上下文理解,可以快速檢查匹配的文檔,以確定相應的匹配詞。此功能為深入了解檢索過程提供了更精確的見解,提高了系統的透明度和可用性

代碼示例

現在讓我們來看看在密集檢索效果不佳的情況下,學習得到的稀疏向量時如何表現的。

數據集:MIRACL。

查詢:What years did Zhu Xi live?

備注:

-

朱熹是宋朝時期的中國書法家、歷史學家、哲學家、詩人和政治家。

-

MIRACL 數據集是多語言的,本展示中我們僅使用英文部分的“訓練”切分。它包含 26746 篇文章,其中七篇與朱熹相關。 我們分別使用密集和稀疏檢索方法檢索了這七個與查詢相關的故事。最初,我們分別將所有故事編碼為密集或稀疏 embeddings,并將它們存儲在 Milvus 向量數據庫中。隨后,我們對查詢進行編碼,并使用 KNN 搜索識別與查詢 embedding 最接近的前 10 個故事。

Sparse Search Results(IP distance, larger is closer):Score: 26.221 Id: 244468#1 Text: Zhu Xi, whose family originated in Wuyuan County, Huizhou ...Score: 26.041 Id: 244468#3 Text: In 1208, eight years after his death, Emperor Ningzong of Song rehabilitated Zhu Xi and honored him ...Score: 25.772 Id: 244468#2 Text: In 1179, after not serving in an official capacity since 1156, Zhu Xi was appointed Prefect of Nankang Military District (南康軍) ...Score: 23.905 Id: 5823#39 Text: During the Song dynasty, the scholar Zhu Xi (AD 1130–1200) added ideas from Daoism and Buddhism into Confucianism ...Score: 23.639 Id: 337115#2 Text: ... During the Song Dynasty, the scholar Zhu Xi (AD 1130–1200) added ideas from Taoism and Buddhism into Confucianism ...Score: 23.061 Id: 10443808#22 Text: Zhu Xi was one of many critics who argued that ...Score: 22.577 Id: 55800148#11 Text: In February 1930, Zhu decided to live the life of a devoted revolutionary after leaving his family at home ...Score: 20.779 Id: 12662060#3 Text: Holding to Confucius and Mencius' conception of humanity as innately good, Zhu Xi articulated an understanding of "li" as the basic pattern of the universe ...Score: 20.061 Id: 244468#28 Text: Tao Chung Yi (around 1329~1412) of the Ming dynasty: Whilst Master Zhu inherited the orthodox teaching and propagated it to the realm of sages ...Score: 19.991 Id: 13658250#10 Text: Zhu Shugui was 66 years old (by Chinese reckoning; 65 by Western standards) at the time of his suicide ... Dense Search Results(L2 distance, smaller is closer):Score: 0.600 Id: 244468#1 Text: Zhu Xi, whose family originated in Wuyuan County, Huizhou ...Score: 0.706 Id: 244468#3 Text: In 1208, eight years after his death, Emperor Ningzong of Song rehabilitated Zhu Xi and honored him ...Score: 0.759 Id: 13658250#10 Text: Zhu Shugui was 66 years old (by Chinese reckoning; 65 by Western standards) at the time of his suicide ...Score: 0.804 Id: 6667852#0 Text: King Zhaoxiang of Qin (; 325–251 BC), or King Zhao of Qin (秦昭王), born Ying Ji ...Score: 0.818 Id: 259901#4 Text: According to the 3rd-century historical text "Records of the Three Kingdoms", Liu Bei was born in Zhuo County ...Score: 0.868 Id: 343207#0 Text: Ruzi Ying (; 5 – 25), also known as Emperor Ruzi of Han and the personal name of Liu Ying (劉嬰), was the last emperor ...Score: 0.876 Id: 31071887#1 Text: The founder of the Ming dynasty was the Hongwu Emperor (21 October 1328 – 24 June 1398), who is also known variably by his personal name "Zhu Yuanzhang" ...Score: 0.882 Id: 2945225#0 Text: Emperor Ai of Tang (27 October 89226 March 908), also known as Emperor Zhaoxuan (), born Li Zuo, later known as Li Chu ...Score: 0.890 Id: 33230345#0 Text: Li Xi or Li Qi (李谿 per the "Zizhi Tongjian" and the "History of the Five Dynasties" or 李磎 per the "Old Book of Tang" ...Score: 0.893 Id: 172890#1 Text: The Wusun originally lived between the Qilian Mountains and Dunhuang (Gansu) near the Yuezhi ...仔細查看結果:在稀疏檢索中,7 個與朱熹相關的故事都排在前 10 名。而在稠密檢索中,只有 2 個故事位于前 10。雖然稀疏和稠密檢索方法均正確識別了編號為

244468#1和244468#3的段落,但稠密檢索未能捕捉到其他相關故事。相反,稠密檢索返回的其他 8 個故事與中國的其他歷史故事相關,這些內容雖然模型認為與朱熹有關,但實際上無直接關聯。如果您對背后的原理感興趣,請繼續閱讀,我們將詳細介紹如何使用 Milvus 進行向量搜索。

如何使用 Milvus 進行向量搜索

Milvus 是一款高度可擴展、性能出色的開源向量數據庫。在最新的 2.4 版本中,Milvus 支持了稀疏和稠密向量(公測中)。本文將利用 Milvus 2.4 來存儲數據集并執行向量搜索。

接下來,我們將演示如何利用 Milvus 在 MIRACL 數據集上執行查詢“朱熹生活在哪個年代?”。

我們使用 SPLADE 和 MiniLM-L6-v2 模型,將查詢內容及 MIRACL 源數據集轉化為稀疏和稠密向量。

首先,我們需要創建一個目錄,并配置環境與 Milvus 服務,請確保您的系統中已安裝 python(>=3.8)、virtualenv、docker 以及 docker-compose。

mkdir milvus_sparse_demo && cd milvus_sparse_demo

# spin up a milvus local cluster

wget https://github.com/milvus-io/milvus/releases/download/v2.4.0-rc.1/milvus-standalone-docker-compose.yml -O docker-compose.yml

docker-compose up -d

# create a virtual environment

virtualenv -p python3.8 .venv && source .venv/bin/activate

touch milvus_sparse_demo.py從 2.4 版本開始,pymilvus(Milvus 的 Python SDK)引入了一個可選的 model 模型模塊。這個模塊簡化了使用模型將文本編碼成稀疏或稠密向量的流程。此外,我們使用 pip 來安裝 pymilvus model ,以便訪問 HuggingFace 上的數據集。

pip install "pymilvus[model]" datasets tqdm首先,使用 HuggingFace 的 Datasets 庫下載數據集,收集所有的段落。

from datasets import load_dataset

miracl = load_dataset('miracl/miracl', 'en')['train']

# collect all passages in the dataset

docs = list({doc['docid']: doc for entry in miracl for doc in entry['positive_passages'] + entry['negative_passages']}.values())

print(docs[0])

# {'docid': '2078963#10', 'text': 'The third thread in the development of quantum field theory...'}將查詢編碼。

from pymilvus import model

sparse_ef = model.sparse.SpladeEmbeddingFunction(model_name="naver/splade-cocondenser-selfdistil", device="cpu",

)

dense_ef = model.dense.SentenceTransformerEmbeddingFunction(model_name='all-MiniLM-L6-v2',device='cpu',

)query = "What years did Zhu Xi live?"query_sparse_emb = sparse_ef([query])

query_dense_emb = dense_ef([query])查看生成的稀疏向量并找到權重最高的 Token。

sparse_token_weights = sorted([(sparse_ef.model.tokenizer.decode(col), query_sparse_emb[0, col])for col in query_sparse_emb.indices[query_sparse_emb.indptr[0]:query_sparse_emb.indptr[1]]], key=lambda item: item[1], reverse=True)

print(sparse_token_weights[:7])

[('zhu', 3.0623178), ('xi', 2.4944391), ('zhang', 1.4790473), ('date', 1.4589322), ('years', 1.4154341), ('live', 1.3365831), ('china', 1.2351396)]將所有文檔進行編碼。

from tqdm import tqdm

doc_sparse_embs = [sparse_ef([doc['text']]) for doc in tqdm(docs, desc='Encoding Sparse')]

doc_dense_embs = [dense_ef([doc['text']])[0] for doc in tqdm(docs, desc='Encoding Dense')]接著,在 Milvus 中創建 Collection 以存儲文檔 id、文本、稠密和稀疏向量等。然后插入數據。

from pymilvus import (MilvusClient, FieldSchema, CollectionSchema, DataType

)

milvus_client = MilvusClient("http://localhost:19530")

collection_name = 'miracl_demo'

fields = [FieldSchema(name="doc_id", dtype=DataType.VARCHAR, is_primary=True, auto_id=False, max_length=100),FieldSchema(name="text", dtype=DataType.VARCHAR, max_length=65530),FieldSchema(name="sparse_vector", dtype=DataType.SPARSE_FLOAT_VECTOR),FieldSchema(name="dense_vector", dtype=DataType.FLOAT_VECTOR, dim=384),

]

milvus_client.create_collection(collection_name, schema=CollectionSchema(fields, "miracl demo"), timeout=5, consistency_level="Strong")batch_size = 30

n_docs = len(docs)

for i in tqdm(range(0, n_docs, batch_size), desc='Inserting documents'):milvus_client.insert(collection_name, [{"doc_id": docs[idx]['docid'],"text": docs[idx]['text'],"sparse_vector": doc_sparse_embs[idx],"dense_vector": doc_dense_embs[idx]}for idx in range(i, min(i+batch_size, n_docs))])為加速搜索,對向量字段創建索引。

index_params = milvus_client.prepare_index_params()

index_params.add_index(field_name="sparse_vector",index_type="SPARSE_INVERTED_INDEX",metric_type="IP",

)

index_params.add_index(field_name="dense_vector",index_type="FLAT",metric_type="L2",

)

milvus_client.create_index(collection_name, index_params=index_params)

milvus_client.load_collection(collection_name)進行搜索并查看結果。

k = 10

sparse_results = milvus_client.search(collection_name, query_sparse_emb, anns_field="sparse_vector", limit=k, search_params={"metric_type": "IP"}, output_fields=['doc_id', 'text'])[0]

dense_results = milvus_client.search(collection_name, query_dense_emb, anns_field="dense_vector", limit=k, search_params={"metric_type": "L2"}, output_fields=['doc_id', 'text'])[0]print(f'Sparse Search Results:')

for result in sparse_results:print(f"\tScore: {result['distance']} Id: {result['entity']['doc_id']} Text: {result['entity']['text']}")print(f'Dense Search Results:')

for result in dense_results:print(f"\tScore: {result['distance']} Id: {result['entity']['doc_id']} Text: {result['entity']['text']}")以下為輸出。稠密向量搜索結果中,僅前兩個結果為和朱熹有關的故事。示例中我們精簡了文本以提升可讀性。

Sparse Search Results:Score: 26.221 Id: 244468#1 Text: Zhu Xi, whose family originated in Wuyuan County, Huizhou ...Score: 26.041 Id: 244468#3 Text: In 1208, eight years after his death, Emperor Ningzong of Song rehabilitated Zhu Xi and honored him ...Score: 25.772 Id: 244468#2 Text: In 1179, after not serving in an official capacity since 1156, Zhu Xi was appointed Prefect of Nankang Military District (南康軍) ...Score: 23.905 Id: 5823#39 Text: During the Song dynasty, the scholar Zhu Xi (AD 1130–1200) added ideas from Daoism and Buddhism into Confucianism ...Score: 23.639 Id: 337115#2 Text: ... During the Song Dynasty, the scholar Zhu Xi (AD 1130–1200) added ideas from Taoism and Buddhism into Confucianism ...Score: 23.061 Id: 10443808#22 Text: Zhu Xi was one of many critics who argued that ...Score: 22.577 Id: 55800148#11 Text: In February 1930, Zhu decided to live the life of a devoted revolutionary after leaving his family at home ...Score: 20.779 Id: 12662060#3 Text: Holding to Confucius and Mencius' conception of humanity as innately good, Zhu Xi articulated an understanding of "li" as the basic pattern of the universe ...Score: 20.061 Id: 244468#28 Text: Tao Chung Yi (around 1329~1412) of the Ming dynasty: Whilst Master Zhu inherited the orthodox teaching and propagated it to the realm of sages ...Score: 19.991 Id: 13658250#10 Text: Zhu Shugui was 66 years old (by Chinese reckoning; 65 by Western standards) at the time of his suicide ...

Dense Search Results:Score: 0.600 Id: 244468#1 Text: Zhu Xi, whose family originated in Wuyuan County, Huizhou ...Score: 0.706 Id: 244468#3 Text: In 1208, eight years after his death, Emperor Ningzong of Song rehabilitated Zhu Xi and honored him ...Score: 0.759 Id: 13658250#10 Text: Zhu Shugui was 66 years old (by Chinese reckoning; 65 by Western standards) at the time of his suicide ...Score: 0.804 Id: 6667852#0 Text: King Zhaoxiang of Qin (; 325–251 BC), or King Zhao of Qin (秦昭王), born Ying Ji ...Score: 0.818 Id: 259901#4 Text: According to the 3rd-century historical text "Records of the Three Kingdoms", Liu Bei was born in Zhuo County ...Score: 0.868 Id: 343207#0 Text: Ruzi Ying (; 5 – 25), also known as Emperor Ruzi of Han and the personal name of Liu Ying (劉嬰), was the last emperor ...Score: 0.876 Id: 31071887#1 Text: The founder of the Ming dynasty was the Hongwu Emperor (21 October 1328 – 24 June 1398), who is also known variably by his personal name "Zhu Yuanzhang" ...Score: 0.882 Id: 2945225#0 Text: Emperor Ai of Tang (27 October 89226 March 908), also known as Emperor Zhaoxuan (), born Li Zuo, later known as Li Chu ...Score: 0.890 Id: 33230345#0 Text: Li Xi or Li Qi (李谿 per the "Zizhi Tongjian" and the "History of the Five Dynasties" or 李磎 per the "Old Book of Tang" ...Score: 0.893 Id: 172890#1 Text: The Wusun originally lived between the Qilian Mountains and Dunhuang (Gansu) near the Yuezhi ...至此,示例已完成,如果無需再使用,可以通過以下代碼刪除相關內容。

docker-compose down

cd .. && rm -rf milvus_sparse_demo總結

本文探索了復雜的 Embedding 向量空間,展現了信息檢索方法如何從傳統的稀疏向量檢索和稠密向量檢索演變為創新型的 Learned 稀疏向量檢索。我們還探究了兩種機器學習模型—— BGE-M3 和 SPLADE,介紹了它們是如何生成 Learned 稀疏向量的。

利用這些先進的 Embedding 技術,我們實現了搜索和檢索系統優化,為開發直觀且響應迅速的平臺注入了更多新的可能性。

敬請期待我們后續發布的文章!我們將展示如何在實際應用中利用這些技術,幫助您直觀了解它們是如何重新定義信息檢索的標準的。

注:

MIRACL鏈接:

https://huggingface.co/datasets/miracl/miracl

Redux@4.x(5)- 實現 createStore)

)

--引用、const、inline、nullptr)