How to Call a PyTorch Model from C++ for Inference Testing: Using the libtorch Library

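Contents

- How to Call a PyTorch Model from C++ for Inference Testing: Using the libtorch Library
- I. Environment Setup
- 1. Linux: Ubuntu 22.04 as the example
- 1. Prepare CUDA and cuDNN
- 2. Prepare the C++ environment
- 3. Download libtorch
- 4. Write a test to check that libtorch is installed correctly
- 2. Windows: Windows 10 as the example
- 1. Prepare CUDA and cuDNN
- 2. Prepare the C++ build environment
- 3. Download and install libtorch
- 4. Notes
- II. Wrapping a PyTorch Model in C++ for Testing: ResNet-18 Classification as the Example
- 1. Install OpenCV to read images
- 2. Export the trained PyTorch model with Python
- 3. Write the C++ test code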

I. Environment Setup

1. Linux: Ubuntu 22.04 as the example

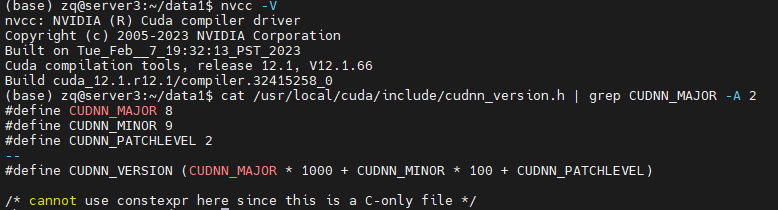

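1. Prepare CUDA and cuDNN

There are two ways to set up CUDA and cuDNN. The first is a system-wide installation; see: "Deep Learning Environment Setup: Installing CUDA and cuDNN on Ubuntu".

The second is to use the cudatoolkit-dev package inside a conda virtual environment; see: Installing-and-Test-PyTorch-C-API-on-Ubuntu-with-GPU-enabled

I chose the system-wide installation, with CUDA 12.1 and cuDNN 8.9.2.

Use the following commands to verify the installation:

nvcc -V

cat /usr/local/cuda/include/cudnn_version.h | grep CUDNN_MAJOR -A 2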

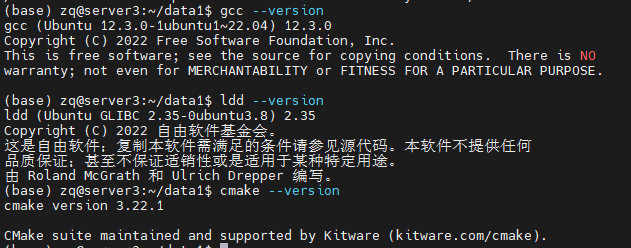

2. Prepare the C++ environment

Install gcc, cmake, and glibc; apt install is enough.

Use the following commands to verify the installation:

gcc --version

cmake --version

ldd --version

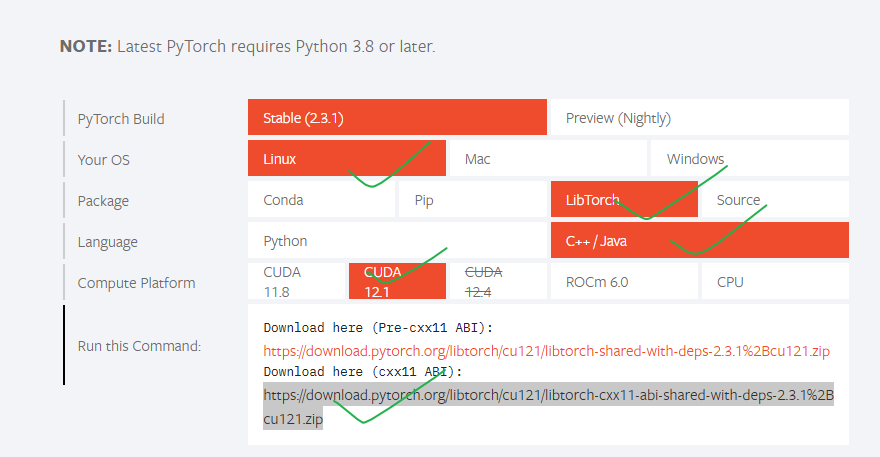

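3. Download libtorch

Download it from the PyTorch website, https://pytorch.org/.

The following commands download and unzip it:

wget https://download.pytorch.org/libtorch/cu121/libtorch-cxx11-abi-shared-with-deps-2.3.1%2Bcu121.zip

unzip libtorch-cxx11-abi-shared-with-deps-2.3.1+cu121.zip

Depending on the wget version, the saved file may keep %2B in its name instead of +. If unzip cannot find the file, use the name that ls shows.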

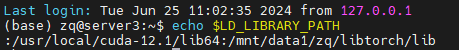

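Add the libtorch library directory to the LD_LIBRARY_PATH environment variable:

vim ~/.bashrc

Append this as the last line:

export LD_LIBRARY_PATH=/path/to/libtorch/lib:$LD_LIBRARY_PATH

Replace /path/to/libtorch with the actual path; in my case it is /mnt/data1/zq/libtorch.

Reload the shell configuration and check that the variable is set: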

source ~/.bashrc

echo $LD_LIBRARY_PATH

4. Write a test to check that libtorch is installed correctly

Create a main.cpp file with the following content:

#include <torch/torch.h>
#include <iostream>

int main() {
    if (torch::cuda::is_available()) {
        std::cout << "CUDA is available! Running on GPU." << std::endl;
        // Create a random tensor and move it to the GPU
        torch::Tensor tensor_gpu = torch::rand({2, 3}).cuda();
        std::cout << "Tensor on GPU:\n" << tensor_gpu << std::endl;
    } else {
        std::cout << "CUDA not available! Running on CPU." << std::endl;
        // Create a random tensor and keep it on the CPU
        torch::Tensor tensor_cpu = torch::rand({2, 3});
        std::cout << "Tensor on CPU:\n" << tensor_cpu << std::endl;
    }
    return 0;
}

Compile and run

Create a CMakeLists.txt file with the following content:

cmake_minimum_required(VERSION 3.10 FATAL_ERROR)
project(test_project)

# Setting the C++ standard to C++17
set(CMAKE_CXX_STANDARD 17)
set(CMAKE_CXX_STANDARD_REQUIRED ON)

# If additional compiler flags are needed
add_compile_options(-Wall -Wextra -pedantic)

# Setting the location of LibTorch
set(Torch_DIR "/path/to/libtorch/share/cmake/Torch")
find_package(Torch REQUIRED)

# Specify the name of the executable and the corresponding source file
add_executable(test_project main.cpp)

# Linking LibTorch libraries
target_link_libraries(test_project "${TORCH_LIBRARIES}")

# Set the output directory for the executable
set(EXECUTABLE_OUTPUT_PATH ${PROJECT_SOURCE_DIR}/bin)

Replace /path/to/libtorch with the actual path.

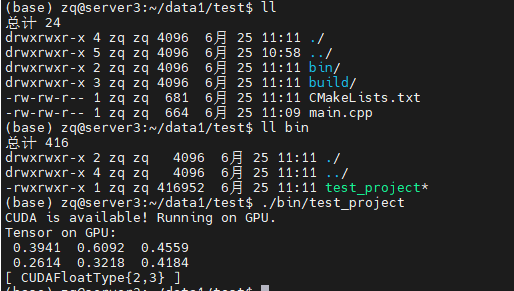

Build and test:

mkdir build

cd build

cmake ..

make

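After the build finishes, a bin directory should appear (in the project root, given the EXECUTABLE_OUTPUT_PATH setting above) containing the test_project executable; run it directly to see the output.

If the output contains CUDAFloatType, the GPU build of libtorch is installed correctly.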

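2. Windows: Windows 10 as the example

1. Prepare CUDA and cuDNN

See: "Installing CUDA and cuDNN on Windows 10"

2. Prepare the C++ build environment

This step requires setting up cmake and MinGW. See: "Setting Up a C/C++ Development Environment on Windows"

I recommend installing the Visual Studio IDE directly. See: "Deploying the GPU Version of libtorch in C++ on Windows"

3. Download and install libtorch

See these videos:

"Installing and Using LibTorch on Windows 10 (CUDA 10.1)"

"A Very Casual LibTorch Tutorial (1)"

4. Notes

I have not tested the Windows setup, so I cannot guarantee that it works. I tested the Linux setup myself, and it should be reproducible.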

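II. Wrapping a PyTorch Model in C++ for Testing: ResNet-18 Classification as the Example

1. Install OpenCV to read images

OpenCV is needed to read the image data. Install it with:

sudo apt install libopencv-dev

dpkg -l | grep libopencv # check that it is installed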

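2. Export the trained PyTorch model with Python

Before a PyTorch model can be used from C++, it must be converted to TorchScript, by either tracing or scripting. The following code exports a pretrained ResNet-18 model using tracing:

import torch
import torchvision

# Load the pretrained model (on older torchvision releases, use pretrained=True instead)
model = torchvision.models.resnet18(weights=torchvision.models.ResNet18_Weights.DEFAULT)

# Switch the model to evaluation mode
model.eval()

# Create an example input with the shape the model expects
example_input = torch.rand(1, 3, 224, 224)

# Export the model with tracing
traced_script_module = torch.jit.trace(model, example_input)
traced_script_module.save("resnet18.pt")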

3. Write the C++ test code

Create a main.cpp file with the following content:

#include <torch/script.h>
#include <torch/torch.h>
#include <opencv2/opencv.hpp>
#include <iostream>
#include <filesystem>

// Function to transform image to tensor
torch::Tensor transform_image(const cv::Mat& image) {
    cv::Mat img_transformed;
    cv::cvtColor(image, img_transformed, cv::COLOR_BGR2RGB);
    cv::resize(img_transformed, img_transformed, cv::Size(224, 224));
    img_transformed.convertTo(img_transformed, CV_32FC3, 1.0 / 255);
    // from_blob does not copy the pixels; the Normalize step below returns a new
    // tensor that owns its data, so the result outlives img_transformed.
    auto img_tensor = torch::from_blob(img_transformed.data, {img_transformed.rows, img_transformed.cols, 3}, torch::kFloat);
    img_tensor = img_tensor.permute({2, 0, 1});
    img_tensor = torch::data::transforms::Normalize<torch::Tensor>({0.485, 0.456, 0.406}, {0.229, 0.224, 0.225})(img_tensor);
    img_tensor = img_tensor.unsqueeze(0);
    return img_tensor;
}

// Load the model and classify an image
void classify_image(const std::string& model_path, const std::string& image_path) {
    // Load the model
    torch::jit::script::Module model = torch::jit::load(model_path);
    model.eval(); // Switch to evaluation mode

    // Load and transform the image
    cv::Mat image = cv::imread(image_path, cv::IMREAD_COLOR);
    if (image.empty()) {
        std::cerr << "Could not read the image: " << image_path << std::endl;
        return;
    }
    torch::Tensor tensor_image = transform_image(image);

    // Perform inference without tracking gradients
    torch::NoGradGuard no_grad;
    torch::Tensor output = model.forward({tensor_image}).toTensor();
    int64_t pred = output.argmax(1).item<int64_t>();
    std::cout << "The image is classified as class index: " << pred << std::endl;
}

int main(int argc, char* argv[]) {
    std::string model_path = "resnet18.pt"; // Default model path
    std::string image_path = "default_image.jpg"; // Default image path

    // Accept two command-line arguments, used as model_path and image_path
    if (argc >= 3) {
        model_path = argv[1];
        image_path = argv[2];
    } else {
        std::cout << "Using default model and image paths." << std::endl;
    }
    classify_image(model_path, image_path);
    return 0;
}

Create a CMakeLists.txt file with the following content:

cmake_minimum_required(VERSION 3.10 FATAL_ERROR)
project(ImageClassification)

set(CMAKE_CXX_STANDARD 17)
set(CMAKE_CXX_STANDARD_REQUIRED ON)

# Set the location of LibTorch; replace /path/to/libtorch with the actual path
set(Torch_DIR "/path/to/libtorch/share/cmake/Torch")
find_package(Torch REQUIRED)
find_package(OpenCV REQUIRED)

add_executable(ImageClassification main.cpp)
target_link_libraries(ImageClassification "${TORCH_LIBRARIES}" "${OpenCV_LIBS}")

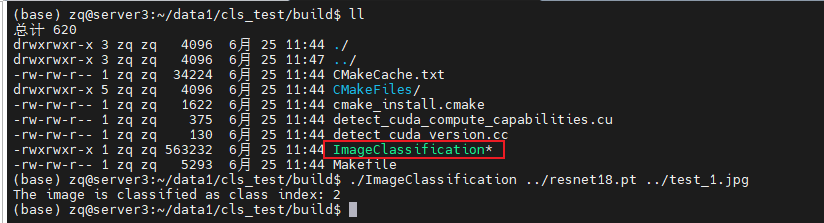

Build and run:

mkdir build && cd build

cmake ..

make

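An executable named ImageClassification will appear in the build directory. Run it with model_path and image_path as arguments, for example ./ImageClassification resnet18.pt default_image.jpg.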
