Original article:

https://lightning.ai/pages/community/tutorial/accelerating-large-language-models-with-mixed-precision-techniques/

This approach allows for efficient training while maintaining the accuracy and stability of the neural network.

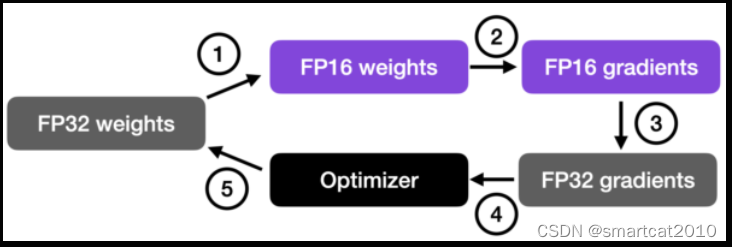

In more detail, the steps are as follows.

- Convert weights to FP16: In this step, the weights (or parameters) of the neural network, which are initially in FP32 format, are converted to lower-precision FP16 format. This reduces the memory footprint and allows for faster computation, as FP16 operations require less memory and can be processed more quickly by the hardware.

- Compute gradients: The forward and backward passes of the neural network are performed using the lower-precision FP16 weights. This step calculates the gradients (partial derivatives) of the loss function with respect to the network’s weights, which are used to update the weights during the optimization process.

- Convert gradients to FP32: After computing the gradients in FP16, they are converted back to the higher-precision FP32 format. This conversion is essential for maintaining numerical stability and avoiding issues such as vanishing or exploding gradients that can occur when using lower-precision arithmetic.

- Multiply by learning rate: Now in FP32 format, the gradients are multiplied by a learning rate (a scalar value that determines the step size during optimization).

- Update weights: The product from the previous step is then used to update the original FP32 neural network weights. The learning rate helps control the convergence of the optimization process and is crucial for achieving good performance.
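The steps above can be sketched in plain Python. This is a minimal illustration, not the article's implementation: it assumes a hypothetical one-parameter model `y = w * x` with squared-error loss, and it emulates FP16 and FP32 rounding with the standard `struct` module instead of running on real low-precision hardware. The names `to_fp16`, `to_fp32`, `train_step`, and `train` are made up for this example.

```python
import struct


def to_fp16(x: float) -> float:
    """Round x to the nearest IEEE 754 half-precision (FP16) value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def to_fp32(x: float) -> float:
    """Round x to the nearest IEEE 754 single-precision (FP32) value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


def train_step(w_master: float, x: float, y: float, lr: float) -> float:
    """One mixed-precision step for the toy model y_hat = w * x."""
    # Step 1: convert the FP32 master weight to FP16.
    w16 = to_fp16(w_master)
    # Step 2: forward and backward pass using FP16 values only.
    x16, y16 = to_fp16(x), to_fp16(y)
    y_hat = to_fp16(w16 * x16)
    err = to_fp16(y_hat - y16)
    grad16 = to_fp16(to_fp16(2.0 * err) * x16)  # d/dw of (w * x - y) ** 2
    # Step 3: convert the gradient back to FP32.
    grad32 = to_fp32(grad16)
    # Step 4: multiply by the learning rate in FP32.
    step = to_fp32(lr * grad32)
    # Step 5: update the FP32 master weight.
    return to_fp32(w_master - step)


def train(epochs: int = 50, lr: float = 0.05) -> float:
    """Fit w on hypothetical toy data generated by y = 3 * x."""
    data = [(0.5, 1.5), (1.0, 3.0), (1.5, 4.5), (2.0, 6.0)]
    w_master = to_fp32(0.0)
    for _ in range(epochs):
        for x, y in data:
            w_master = train_step(w_master, x, y, lr)
    return w_master


if __name__ == "__main__":
    print(train())  # ends close to 3.0
```

In a real framework the casts are handled by the library (for example, automatic mixed precision in PyTorch), so this sketch only serves to show which quantity lives in which precision.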

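In short:

w_old - lr * g --> w_new, where g, w_old, and w_new are all FP32;

everything else involved in computing the gradient (the weights used in the forward and backward passes, the activations, and the gradients) is FP16.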
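One way to see why the update itself is kept in FP32 is the small illustration below. It uses Python's standard `struct` module to emulate rounding; the helper `round_to` and the numbers are made up for this example, and the update is written as a gradient-descent step `w_old - lr * g`. A small update that survives in FP32 is rounded away entirely when the result is stored in FP16:

```python
import struct


def round_to(fmt: str, x: float) -> float:
    """Round x to a struct format: "<e" is FP16, "<f" is FP32."""
    return struct.unpack(fmt, struct.pack(fmt, x))[0]


# Hypothetical values; the update lr * g is about 1e-4.
w_old, g, lr = 1.0, 0.1, 1e-3

w_new_fp32 = round_to("<f", w_old - lr * g)
w_new_fp16 = round_to("<e", w_old - lr * g)

print(w_new_fp32 < w_old)   # True: FP32 keeps the small update
print(w_new_fp16 == w_old)  # True: FP16 rounds the result back to 1.0
```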

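Training results:

Training time drops to about 1/2 to 1/3 of the FP32 time.

GPU memory usage changes little. Memory goes up because an extra FP16 copy of the weights is kept, and goes down because the activations saved during the forward pass are now FP16; the two roughly cancel out.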
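The cancellation can be checked with back-of-the-envelope arithmetic. The sketch below is a simplification: it counts only weights and saved activations (gradients and optimizer state are ignored), and the parameter and activation counts are hypothetical.

```python
FP32_BYTES = 4
FP16_BYTES = 2


def fp32_training_bytes(n_params: int, n_activations: int) -> int:
    """Weights and saved activations, both stored in FP32."""
    return n_params * FP32_BYTES + n_activations * FP32_BYTES


def mixed_training_bytes(n_params: int, n_activations: int) -> int:
    """FP32 master weights plus an FP16 copy, with activations saved in FP16."""
    weights = n_params * (FP32_BYTES + FP16_BYTES)
    activations = n_activations * FP16_BYTES
    return weights + activations


# Hypothetical sizes: as many saved activation values as parameters.
n_params, n_activations = 100_000_000, 100_000_000
print(fp32_training_bytes(n_params, n_activations))   # 800000000
print(mixed_training_bytes(n_params, n_activations))  # 800000000
```

Under this simplified model, mixed precision saves memory when there are more saved activation values than parameters, and costs slightly more when there are fewer.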

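Inference results:

GPU memory is halved; inference time drops to about 1/2 of the FP32 time.

Test accuracy actually went up with FP16. Explanation: a regularization effect. The lower precision introduces noise, which helps the model generalize better and reduces overfitting.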

A likely explanation is that this is due to regularizing effects of using a lower precision. Lower precision may introduce some level of noise in the training process, which can help the model generalize better and reduce overfitting, potentially leading to higher accuracy on the validation and test sets.

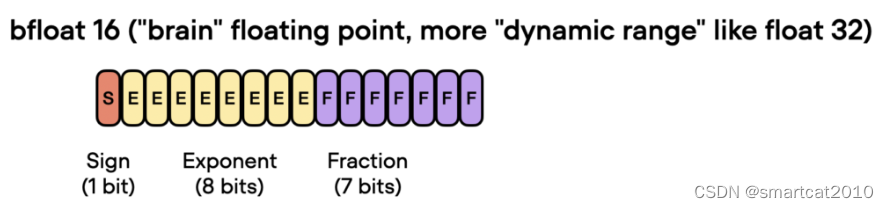

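bf16 has more exponent bits than FP16 (8 versus 5), so it covers a much larger numeric range. This makes training more robust and reduces the chance of overflow and underflow.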
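The difference in range can be illustrated with a small emulation in plain Python. This is a sketch under assumptions: `to_fp16` and `to_bf16` are helper names invented here, bf16 is emulated by rounding the FP32 bit pattern to its top 16 bits, NaN handling is ignored, and the two test values are arbitrary.

```python
import math
import struct


def to_fp16(x: float) -> float:
    """Round x to FP16; values beyond the FP16 range become infinity."""
    try:
        return struct.unpack("<e", struct.pack("<e", x))[0]
    except OverflowError:
        return math.copysign(math.inf, x)


def to_bf16(x: float) -> float:
    """Round x to bf16 by keeping the top 16 bits of its FP32 encoding."""
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    # Round to nearest, ties to even, then clear the low 16 bits.
    bits = (bits + 0x7FFF + ((bits >> 16) & 1)) & 0xFFFF0000
    return struct.unpack("<f", struct.pack("<I", bits))[0]


# 70000.0 is above the largest finite FP16 value (65504);
# 1e-8 is below the smallest positive FP16 value.
for value in (70000.0, 1e-8):
    print(value, to_fp16(value), to_bf16(value))
```

FP16 turns the large value into infinity and the small one into zero, while the bf16 emulation keeps both as finite, nonzero approximations.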
