Container Layer

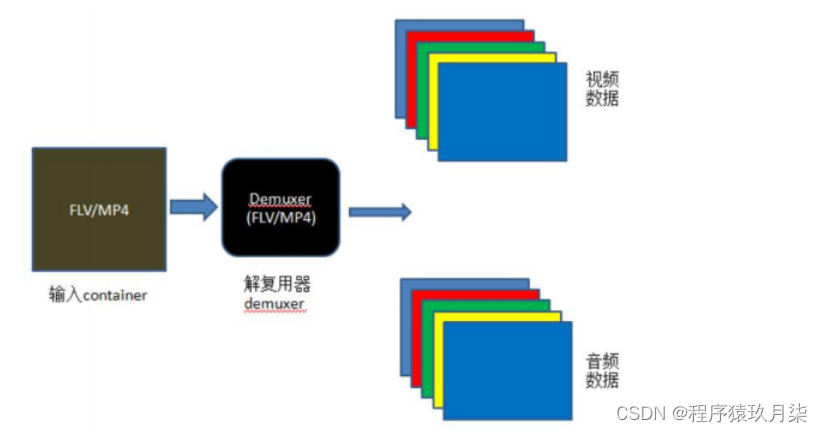

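A container format can be seen as a shell around the encoded streams (audio, video, and so on): the encoded data is stored inside a file of that container format.

"Encapsulation" and "container" name the same thing, but "container" is the more vivid term. A container is a vessel that holds content: if the beverage is the content, the bottle that holds it is the container.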
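To make the analogy concrete, here is a toy model in C. It is not FFmpeg's data model: it assumes a "container" is simply an interleaved sequence of packets, each tagged with the index of the stream it belongs to, so that demuxing amounts to routing packets by that tag. The names `ToyPacket` and `count_stream_bytes` are hypothetical.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical stand-in for a demuxed packet: the stream it belongs to
 * and the number of payload bytes it carries. Not an FFmpeg type. */
typedef struct {
    int stream_index;
    int size;
} ToyPacket;

/* Sum the payload bytes of every packet that belongs to one stream.
 * Routing by stream index is the essence of what a demuxer does. */
static long count_stream_bytes(const ToyPacket *pkts, size_t n, int stream_index)
{
    long total = 0;
    assert(pkts != NULL || n == 0);
    for (size_t i = 0; i < n; i++) {
        if (pkts[i].stream_index == stream_index)
            total += pkts[i].size;
    }
    return total;
}
```

For the interleaved packets `{0,1200}, {1,300}, {0,800}, {1,310}`, stream 0 (say, video) accounts for 2000 bytes and stream 1 (say, audio) for 610 bytes.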

- avformat_open_input()

- avformat_find_stream_info()

- av_read_frame()

- av_write_frame()

- av_interleaved_write_frame()

- avio_open()

- avformat_write_header()

- av_write_trailer()

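1. Demuxing

1. Allocate the demuxer context: avformat_alloc_context

2. Open a local file or network stream by URL: avformat_open_input

3. Read some of the media's packets to obtain stream information: avformat_find_stream_info

4. Read the stream data in a loop

4.1 Read a packet from the file: av_read_frame

4.2 Seek within the file: avformat_seek_file or av_seek_frame

5. Close the demuxer: avformat_close_input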
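Step 4.2 needs a target timestamp. `av_seek_frame` expects it in the time base of the selected stream, or in `AV_TIME_BASE` units (microseconds) when the stream index is -1. The sketch below only shows that unit conversion, using local stand-ins so it builds without FFmpeg: `ToyRational` mirrors `AVRational`, `TOY_TIME_BASE` has the same value as `AV_TIME_BASE`, and both function names are hypothetical.

```c
#include <assert.h>
#include <stdint.h>

/* Stand-in for AVRational so the sketch builds without FFmpeg. */
typedef struct { int num; int den; } ToyRational;

/* Same value as FFmpeg's AV_TIME_BASE: ticks (microseconds) per second. */
#define TOY_TIME_BASE 1000000

/* Seek target when no stream is selected (stream index -1). */
static int64_t seek_target_global(double seconds)
{
    return (int64_t)(seconds * TOY_TIME_BASE);
}

/* Seek target for one stream: seconds divided by the stream's time base,
 * that is seconds * den / num, truncated toward zero. */
static int64_t seek_target_stream(double seconds, ToyRational time_base)
{
    assert(time_base.num > 0 && time_base.den > 0);
    return (int64_t)(seconds * time_base.den / time_base.num);
}
```

In real code the result would be passed as the timestamp argument of `av_seek_frame`, commonly together with `AVSEEK_FLAG_BACKWARD` so that the seek goes to a position at or before the target.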

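This example separates the audio and video of a media file: it opens a file in a container format such as FLV, MKV, MP4, or AVI, reads the audio and video streams, and prints their information.

The process can be roughly represented by the following figure: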

#include <stdio.h>

extern "C"
{
#include <libavformat/avformat.h>
}

/**
 * @brief Convert an AVRational fraction to a double.
 * @param r An AVRational; num is the numerator and den the denominator, so its value is (double)r.num / (double)r.den. Storing the value as a fraction avoids precision loss as far as possible.
 * @return 0 if the denominator den is 0 (to avoid a division by zero); otherwise (double)r.num / (double)r.den.
 */
static double r2d(AVRational r)
{
    return r.den == 0 ? 0 : (double)r.num / (double)r.den;
}

int main()

{
    // Relative path of the local media file. The file sits in the project directory, so the bare file name is enough.
    const char *path = "ande_10s.mp4";
    // const char *path = "audio1.mp3";

    // av_register_all(); // Registers all muxers, demuxers and codecs. Old FFmpeg versions need it before avformat_open_input; it has been deprecated since FFmpeg 4.0, where registration is automatic.
    avformat_network_init(); // Initializes networking. Optional when only local files are read.
    AVDictionary *opts = NULL;
    // AVFormatContext describes the structure and basic information of a media file or stream
    AVFormatContext *ic = NULL;
    // Open the media at path. On success the function allocates a context and stores it in ic.
    int re = avformat_open_input(&ic, path, NULL, &opts);
    if (re != 0) // Opening failed: print the reason, e.g. "XXX failed!:Invalid data found when processing input"
    {
        char buf[1024] = { 0 };
        av_strerror(re, buf, sizeof(buf) - 1);
        printf("open %s failed!:%s\n", path, buf);
    }
    else // The media file was opened
    {
        printf("Opened media file %s\n", path);
        // avformat_open_input alone does not yield all of the media parameters, or always the correct ones,
        // so avformat_find_stream_info reads some audio/video data to fill in the rest.
        re = avformat_find_stream_info(ic, NULL);
        if (re < 0)
            printf("avformat_find_stream_info failed; the values below may be incomplete\n");
        // Path/name of the media that was opened (old FFmpeg versions expose it as ic->filename)
        printf("Media file name: %s\n", ic->url);
        // Number of streams: 2 for a file with one audio and one video stream, 1 for audio only
        printf("Number of streams: %u\n", ic->nb_streams);
        // Average bit rate of the file, in bps
        printf("Average bit rate: %lldbps\n", (long long)ic->bit_rate);
        // duration is an int64_t in AV_TIME_BASE units (microseconds)
        printf("duration:%lld\n", (long long)ic->duration);
        int tns, thh, tmm, tss;
        tns = (int)(ic->duration / AV_TIME_BASE);
        thh = tns / 3600;
        tmm = (tns % 3600) / 60;
        tss = (tns % 60);
        printf("Total duration: %dh %dm %ds\n", thh, tmm, tss);
        printf("\n");
        // Walk all streams and print the audio and video information.
        // Newer FFmpeg versions offer av_find_best_stream for the same purpose; the loop is kept for compatibility with old versions.
        for (unsigned int i = 0; i < ic->nb_streams; i++)
        {
            AVStream *as = ic->streams[i];
            if (AVMEDIA_TYPE_AUDIO == as->codecpar->codec_type) // Audio stream
            {
                printf("Audio info:\n");
                // Usually 1 when the file also has video, but that is not guaranteed, so rely on codecpar->codec_type
                printf("index:%d\n", as->index);
                printf("Sample rate: %dHz\n", as->codecpar->sample_rate);
                if (AV_SAMPLE_FMT_FLTP == as->codecpar->format) // Sample format
                {
                    printf("Sample format: AV_SAMPLE_FMT_FLTP\n");
                }
                else if (AV_SAMPLE_FMT_S16P == as->codecpar->format)
                {
                    printf("Sample format: AV_SAMPLE_FMT_S16P\n");
                }
                // Number of channels (FFmpeg 5.1 and later provide codecpar->ch_layout.nb_channels instead)
                printf("Channels: %d\n", as->codecpar->channels);
                if (AV_CODEC_ID_AAC == as->codecpar->codec_id) // Audio codec
                {
                    printf("Audio codec: AAC\n");
                }
                else if (AV_CODEC_ID_MP3 == as->codecpar->codec_id)
                {
                    printf("Audio codec: MP3\n");
                }
                // Audio duration in seconds. At millisecond or microsecond precision the audio and video durations are not necessarily equal.
                int DurationAudio = (int)(as->duration * r2d(as->time_base));
                printf("Audio duration: %dh %dm %ds\n", DurationAudio / 3600, (DurationAudio % 3600) / 60, (DurationAudio % 60));
                printf("\n");
            }
            else if (AVMEDIA_TYPE_VIDEO == as->codecpar->codec_type) // Video stream
            {
                printf("Video info:\n");
                // Usually 0 when the file also has audio, but that is not guaranteed, so rely on codecpar->codec_type
                printf("index:%d\n", as->index);
                printf("Frame rate: %lffps\n", r2d(as->avg_frame_rate)); // Frames per second
                if (AV_CODEC_ID_MPEG4 == as->codecpar->codec_id) // Video codec
                {
                    printf("Video codec: MPEG4\n");
                }
                printf("Frame width: %d Frame height: %d\n", as->codecpar->width, as->codecpar->height);
                // Video duration in seconds. At millisecond or microsecond precision the audio and video durations are not necessarily equal.
                int DurationVideo = (int)(as->duration * r2d(as->time_base));
                printf("Video duration: %dh %dm %ds\n", DurationVideo / 3600, (DurationVideo % 3600) / 60, (DurationVideo % 60));
                printf("\n");
            }
        }
        // av_dump_format(ic, 0, path, 0);
    }
    av_dict_free(&opts); // Releases any options that avformat_open_input did not consume
    if (ic)
    {
        avformat_close_input(&ic); // Closes the AVFormatContext; pairs with avformat_open_input()
    }
    avformat_network_deinit();
    getchar(); // Keeps the console open after the information has been printed
    return 0;
}

// Alternative version based on av_find_best_stream; rename it to main to run it.
int mainsdf3324sf(int argc, char **argv)

{
    // 1. Open the file
    const char *ifilename = "ande_10s.mp4";
    printf("in_filename = %s\n", ifilename);
    avformat_network_init();
    // AVFormatContext describes the structure and basic information of a media file or stream
    AVFormatContext *ifmt_ctx = NULL; // Demuxer context of the input file
    // Open the input. This mainly probes the protocol type and, for a network source, creates the connection.
    int ret = avformat_open_input(&ifmt_ctx, ifilename, NULL, NULL);
    if (ret < 0) {
        char buf[1024] = {0};
        av_strerror(ret, buf, sizeof (buf) - 1);
        printf("open %s failed: %s\n", ifilename, buf);
        return -1;
    }
    // 2. Read the stream information
    ret = avformat_find_stream_info(ifmt_ctx, NULL);
    if (ret < 0) // Print the reason if the stream information cannot be read
    {
        char buf[1024] = { 0 };
        av_strerror(ret, buf, sizeof(buf) - 1);
        printf("avformat_find_stream_info %s failed:%s\n", ifilename, buf);
        avformat_close_input(&ifmt_ctx);
        return -1;
    }
    // 3. Print the overall information
    printf("\n==== av_dump_format in_filename:%s ===\n", ifilename);
    av_dump_format(ifmt_ctx, 0, ifilename, 0);
    printf("\n==== av_dump_format finish =======\n\n");
    printf("media name:%s\n", ifmt_ctx->url);
    printf("stream number:%u\n", ifmt_ctx->nb_streams); // nb_streams: number of streams
    printf("media average bit rate:%lldkbps\n", (long long)(ifmt_ctx->bit_rate / 1000)); // bps / 1000 = kbps
    // duration: length of the media file in AV_TIME_BASE units (microseconds)
    int total_seconds = (int)(ifmt_ctx->duration / AV_TIME_BASE);
    printf("total duration: %02d:%02d:%02d\n",
           total_seconds / 3600, (total_seconds % 3600) / 60, (total_seconds % 60));
    printf("\n");
    // 4. Read the per-stream information
    // Audio
    int audioindex = av_find_best_stream(ifmt_ctx, AVMEDIA_TYPE_AUDIO, -1, -1, NULL, 0);
    if (audioindex < 0) {
        printf("av_find_best_stream %s error.\n", av_get_media_type_string(AVMEDIA_TYPE_AUDIO));
        avformat_close_input(&ifmt_ctx); // Do not leak the context on the error path
        return -1;
    }
    AVStream *audio_stream = ifmt_ctx->streams[audioindex];
    printf("----- Audio info:\n");
    printf("index: %d\n", audio_stream->index); // Stream index
    printf("samplerate: %d Hz\n", audio_stream->codecpar->sample_rate); // Sample rate
    printf("sampleformat: %d\n", audio_stream->codecpar->format); // Sample format, AV_SAMPLE_FMT_FLTP:8
    printf("audio codec: %d\n", audio_stream->codecpar->codec_id); // Codec, AV_CODEC_ID_MP3:86017 AV_CODEC_ID_AAC:86018
    if (audio_stream->duration != AV_NOPTS_VALUE) {
        int audio_duration = (int)(audio_stream->duration * av_q2d(audio_stream->time_base));
        printf("audio duration: %02d:%02d:%02d\n",
               audio_duration / 3600, (audio_duration % 3600) / 60, (audio_duration % 60));
    }
    // Video
    int videoindex = av_find_best_stream(ifmt_ctx, AVMEDIA_TYPE_VIDEO, -1, -1, NULL, 0);
    if (videoindex < 0) {
        printf("av_find_best_stream %s error.\n", av_get_media_type_string(AVMEDIA_TYPE_VIDEO));
        avformat_close_input(&ifmt_ctx);
        return -1;
    }
    AVStream *video_stream = ifmt_ctx->streams[videoindex];
    printf("----- Video info:\n");
    printf("index: %d\n", video_stream->index); // Stream index
    printf("fps: %lf\n", av_q2d(video_stream->avg_frame_rate)); // Frame rate
    printf("width: %d, height:%d \n", video_stream->codecpar->width, video_stream->codecpar->height);
    printf("video codec: %d\n", video_stream->codecpar->codec_id); // Codec, AV_CODEC_ID_H264: 27
    if (video_stream->duration != AV_NOPTS_VALUE) {
        int video_duration = (int)(video_stream->duration * av_q2d(video_stream->time_base));
        printf("video duration: %02d:%02d:%02d\n",
               video_duration / 3600, (video_duration % 3600) / 60, (video_duration % 60));
    }
    // 5. Extract the packets
    AVPacket *pkt = av_packet_alloc();
    int pkt_count = 0;
    int print_max_count = 100;
    printf("\n-----av_read_frame start\n");
    while (1)
    {
        ret = av_read_frame(ifmt_ctx, pkt);
        if (ret < 0) {
            printf("av_read_frame end\n");
            break;
        }
        if (pkt_count++ < print_max_count)
        {
            if (pkt->stream_index == audioindex)
            {
                printf("audio pts: %lld\n", (long long)pkt->pts);
                printf("audio dts: %lld\n", (long long)pkt->dts);
                printf("audio size: %d\n", pkt->size);
                printf("audio pos: %lld\n", (long long)pkt->pos);
                printf("audio duration: %lf\n\n",
                       pkt->duration * av_q2d(ifmt_ctx->streams[audioindex]->time_base));
            }
            else if (pkt->stream_index == videoindex)
            {
                printf("video pts: %lld\n", (long long)pkt->pts);
                printf("video dts: %lld\n", (long long)pkt->dts);
                printf("video size: %d\n", pkt->size);
                printf("video pos: %lld\n", (long long)pkt->pos);
                printf("video duration: %lf\n\n",
                       pkt->duration * av_q2d(ifmt_ctx->streams[videoindex]->time_base));
            }
            else
            {
                printf("unknown stream_index: %d\n", pkt->stream_index);
            }
        }
        av_packet_unref(pkt);
    }
    // 6. Clean up
    if (pkt)
        av_packet_free(&pkt);
    if (ifmt_ctx)
        avformat_close_input(&ifmt_ctx);
    avformat_network_deinit();
    // getchar(); // Uncomment to keep the console open after the information has been printed
    return 0;
}
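Both versions above turn a tick count into hours, minutes and seconds: the container duration is counted in microseconds, while a stream duration is counted in units of that stream's time base. The helper below is a minimal sketch of that arithmetic with local stand-in types, so it builds without FFmpeg; `ToyRational`, `ToyClock` and `split_duration` are hypothetical names, and the multiplication is assumed not to overflow for ordinary durations.

```c
#include <assert.h>
#include <stdint.h>

/* Stand-in for AVRational so the sketch builds without FFmpeg. */
typedef struct { int num; int den; } ToyRational;

/* Whole hours, minutes and seconds of a duration. */
typedef struct { int hh; int mm; int ss; } ToyClock;

/* Convert `ticks` counted in `time_base` seconds each into h/m/s,
 * dropping the fractional second as the examples above do. */
static ToyClock split_duration(int64_t ticks, ToyRational time_base)
{
    ToyClock clock;
    int64_t total_seconds;
    assert(ticks >= 0 && time_base.num > 0 && time_base.den > 0);
    total_seconds = ticks * time_base.num / time_base.den;
    clock.hh = (int)(total_seconds / 3600);
    clock.mm = (int)((total_seconds % 3600) / 60);
    clock.ss = (int)(total_seconds % 60);
    return clock;
}
```

With a time base of 1/1000000, a duration of 3725000000 ticks splits into 1 hour, 2 minutes and 5 seconds; with a time base of 1/90000, 900000 ticks are 10 seconds.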

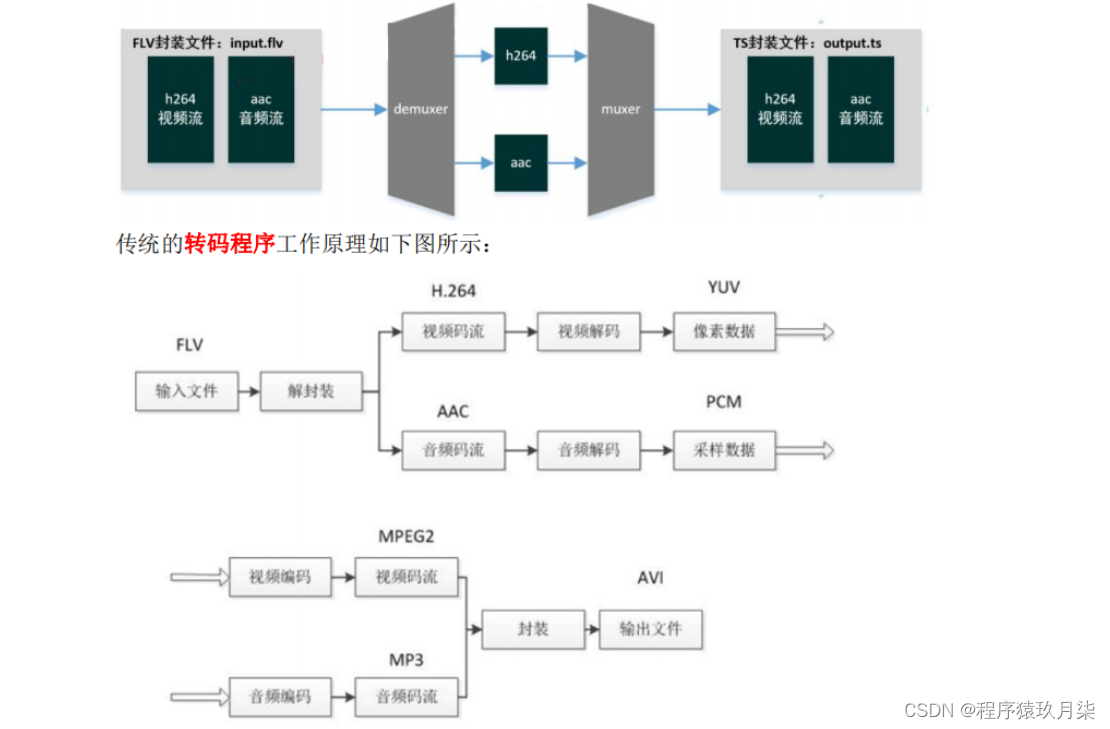

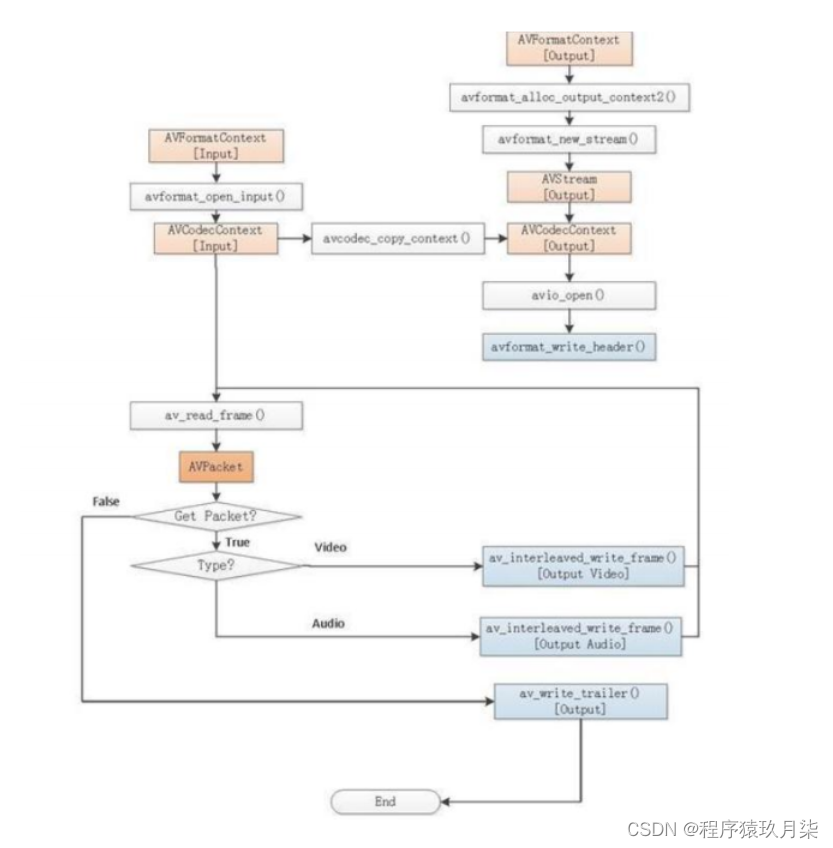

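2. Remuxing

Remuxing converts one video container into another.

Container format conversion means converting between formats such as AVI, FLV, MKV, and MP4 (the .avi, .flv, .mkv, and .mp4 files). Note that this program does not encode or decode any audio or video. It takes the compressed audio and video streams out of a file in one container format and packs them directly into a file in another container format.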
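Because the packets are copied untouched, the main bookkeeping in a remuxer is deciding which input streams are carried over and which output index each one receives. The remuxing code in this section keeps audio, video and subtitle streams and marks every other stream with -1. Here is a minimal sketch of that mapping, using a hypothetical `ToyMediaType` enum in place of FFmpeg's media types:

```c
#include <assert.h>
#include <stddef.h>

/* Stand-in for the media types a remuxer distinguishes; not FFmpeg's enum. */
typedef enum { TOY_VIDEO, TOY_AUDIO, TOY_SUBTITLE, TOY_DATA } ToyMediaType;

/* Fill mapping[i] with the output stream index of input stream i, or -1
 * when the stream is dropped. Audio, video and subtitle streams are kept.
 * Returns the number of output streams. */
static int build_stream_mapping(const ToyMediaType *types, size_t n, int *mapping)
{
    int next_out = 0;
    assert(types != NULL && mapping != NULL);
    for (size_t i = 0; i < n; i++) {
        if (types[i] == TOY_VIDEO || types[i] == TOY_AUDIO || types[i] == TOY_SUBTITLE)
            mapping[i] = next_out++;
        else
            mapping[i] = -1;
    }
    return next_out;
}
```

For an input with the streams video, data, audio, the mapping becomes `{0, -1, 1}` and the output file has two streams. Every packet read from the input is then either skipped (mapping -1) or written with its stream index replaced by the mapped value.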

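For contrast, the figure above shows an example of transcoding: FLV (video: H.264, audio: AAC) is converted to AVI (video: MPEG2, audio: MP3). Put simply, transcoding amounts to "recording" the video and audio all over again, and that decoding and re-encoding work is exactly what remuxing skips.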

/**
 * @file
 * libavformat/libavcodec demuxing and muxing API example.
 *
 * Remux streams from one container format to another.
 * @example remuxing.c
 */

#include <libavutil/timestamp.h>
#include <libavformat/avformat.h>

static void log_packet(const AVFormatContext *fmt_ctx, const AVPacket *pkt, const char *tag)
{
    AVRational *time_base = &fmt_ctx->streams[pkt->stream_index]->time_base;

    printf("%s: pts:%s pts_time:%s dts:%s dts_time:%s duration:%s duration_time:%s stream_index:%d\n",
           tag,
           av_ts2str(pkt->pts), av_ts2timestr(pkt->pts, time_base),
           av_ts2str(pkt->dts), av_ts2timestr(pkt->dts, time_base),
           av_ts2str(pkt->duration), av_ts2timestr(pkt->duration, time_base),
           pkt->stream_index);
}

int main234ssfdx(int argc, char **argv)

{
    const AVOutputFormat *ofmt = NULL; // const: recent FFmpeg versions declare oformat as a const pointer
    AVFormatContext *ifmt_ctx = NULL, *ofmt_ctx = NULL;
    AVPacket pkt;
    const char *in_filename, *out_filename;
    int ret, i;
    int stream_index = 0;
    int *stream_mapping = NULL;
    int stream_mapping_size = 0;

    if (argc < 3) {
        printf("usage: %s input output\n"
               "API example program to remux a media file with libavformat and libavcodec.\n"
               "The output format is guessed according to the file extension.\n"
               "\n", argv[0]);
        return 1;
    }
    in_filename = argv[1];
    out_filename = argv[2];

    if ((ret = avformat_open_input(&ifmt_ctx, in_filename, 0, 0)) < 0) {
        fprintf(stderr, "Could not open input file '%s'\n", in_filename);
        goto end;
    }
    if ((ret = avformat_find_stream_info(ifmt_ctx, 0)) < 0) {
        fprintf(stderr, "Failed to retrieve input stream information\n");
        goto end;
    }
    printf("\n\n-------av_dump_format:ifmt_ctx----------------\n");
    av_dump_format(ifmt_ctx, 0, in_filename, 0);

    avformat_alloc_output_context2(&ofmt_ctx, NULL, NULL, out_filename);
    if (!ofmt_ctx) {
        fprintf(stderr, "Could not create output context\n");
        ret = AVERROR_UNKNOWN;
        goto end;
    }

    stream_mapping_size = ifmt_ctx->nb_streams;
    // av_calloc replaces the deprecated av_mallocz_array
    stream_mapping = av_calloc(stream_mapping_size, sizeof(*stream_mapping));
    if (!stream_mapping) {
        ret = AVERROR(ENOMEM);
        goto end;
    }
    ofmt = ofmt_ctx->oformat;

    for (i = 0; i < ifmt_ctx->nb_streams; i++) {
        AVStream *out_stream;
        AVStream *in_stream = ifmt_ctx->streams[i];
        AVCodecParameters *in_codecpar = in_stream->codecpar;

        // Keep only audio, video and subtitle streams; mark everything else as dropped
        if (in_codecpar->codec_type != AVMEDIA_TYPE_AUDIO &&
            in_codecpar->codec_type != AVMEDIA_TYPE_VIDEO &&
            in_codecpar->codec_type != AVMEDIA_TYPE_SUBTITLE) {
            stream_mapping[i] = -1;
            continue;
        }
        stream_mapping[i] = stream_index++;

        out_stream = avformat_new_stream(ofmt_ctx, NULL);
        if (!out_stream) {
            fprintf(stderr, "Failed allocating output stream\n");
            ret = AVERROR_UNKNOWN;
            goto end;
        }
        ret = avcodec_parameters_copy(out_stream->codecpar, in_codecpar);
        if (ret < 0) {
            fprintf(stderr, "Failed to copy codec parameters\n");
            goto end;
        }
        out_stream->codecpar->codec_tag = 0;
    }
    printf("\n\n-------av_dump_format:ofmt_ctx----------------\n");
    av_dump_format(ofmt_ctx, 0, out_filename, 1);

    if (!(ofmt->flags & AVFMT_NOFILE)) {
        ret = avio_open(&ofmt_ctx->pb, out_filename, AVIO_FLAG_WRITE);
        if (ret < 0) {
            fprintf(stderr, "Could not open output file '%s'\n", out_filename);
            goto end;
        }
    }
    ret = avformat_write_header(ofmt_ctx, NULL);
    if (ret < 0) {
        fprintf(stderr, "Error occurred when opening output file\n");
        goto end;
    }

    while (1) {
        AVStream *in_stream, *out_stream;

        // Read one compressed audio/video packet
        ret = av_read_frame(ifmt_ctx, &pkt);
        if (ret < 0)
            break;
        in_stream = ifmt_ctx->streams[pkt.stream_index];
        if (pkt.stream_index >= stream_mapping_size ||
            stream_mapping[pkt.stream_index] < 0) {
            av_packet_unref(&pkt);
            continue;
        }
        // Log while stream_index still refers to the input stream
        log_packet(ifmt_ctx, &pkt, "in");
        pkt.stream_index = stream_mapping[pkt.stream_index];
        out_stream = ofmt_ctx->streams[pkt.stream_index];

        /* copy packet: rescale the timestamps to the output stream's time base */
        pkt.pts = av_rescale_q_rnd(pkt.pts, in_stream->time_base, out_stream->time_base, AV_ROUND_NEAR_INF|AV_ROUND_PASS_MINMAX);
        pkt.dts = av_rescale_q_rnd(pkt.dts, in_stream->time_base, out_stream->time_base, AV_ROUND_NEAR_INF|AV_ROUND_PASS_MINMAX);
        pkt.duration = av_rescale_q(pkt.duration, in_stream->time_base, out_stream->time_base);
        pkt.pos = -1;
        log_packet(ofmt_ctx, &pkt, "out");

        // Write the packet, interleaving audio and video
        ret = av_interleaved_write_frame(ofmt_ctx, &pkt);
        if (ret < 0) {
            fprintf(stderr, "Error muxing packet\n");
            break;
        }
        av_packet_unref(&pkt); // Release the packet's reference
    }
    av_write_trailer(ofmt_ctx);

end:
    avformat_close_input(&ifmt_ctx);
    /* close output; read the flags through ofmt_ctx because ofmt may still be NULL on an early error */
    if (ofmt_ctx && !(ofmt_ctx->oformat->flags & AVFMT_NOFILE))
        avio_closep(&ofmt_ctx->pb);
    avformat_free_context(ofmt_ctx);
    av_freep(&stream_mapping);
    if (ret < 0 && ret != AVERROR_EOF) {
        fprintf(stderr, "Error occurred: %s\n", av_err2str(ret));
        return 1;
    }
    return 0;
}
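The one transformation the remuxer applies to each packet is rescaling pts, dts and duration from the input stream's time base to the output stream's. The sketch below shows the underlying arithmetic with round-to-nearest. It is a simplified stand-in, not FFmpeg's implementation: `ToyRational` and `toy_rescale` are hypothetical names, there is no overflow protection, and negative or "no timestamp" values are not handled, whereas `av_rescale_q_rnd` with `AV_ROUND_PASS_MINMAX` passes `AV_NOPTS_VALUE` through unchanged.

```c
#include <assert.h>
#include <stdint.h>

/* Stand-in for AVRational so the sketch builds without FFmpeg. */
typedef struct { int num; int den; } ToyRational;

/* Rescale a non-negative timestamp from time base `in` to time base `out`:
 * ts * (in.num / in.den) / (out.num / out.den), rounded to nearest with
 * halves rounded up. */
static int64_t toy_rescale(int64_t ts, ToyRational in, ToyRational out)
{
    int64_t numerator, denominator;
    assert(ts >= 0 && in.num > 0 && in.den > 0 && out.num > 0 && out.den > 0);
    numerator = ts * in.num * out.den;
    denominator = (int64_t)in.den * out.num;
    return (numerator + denominator / 2) / denominator;
}
```

Assuming an input time base of 1/12800 and an output time base of 1/1000 (milliseconds), a pts of 12800 becomes 1000 and a frame duration of 512 becomes 40. From 1/90000 to 1/1000, a value of 45 is exactly half a millisecond and rounds up to 1.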
