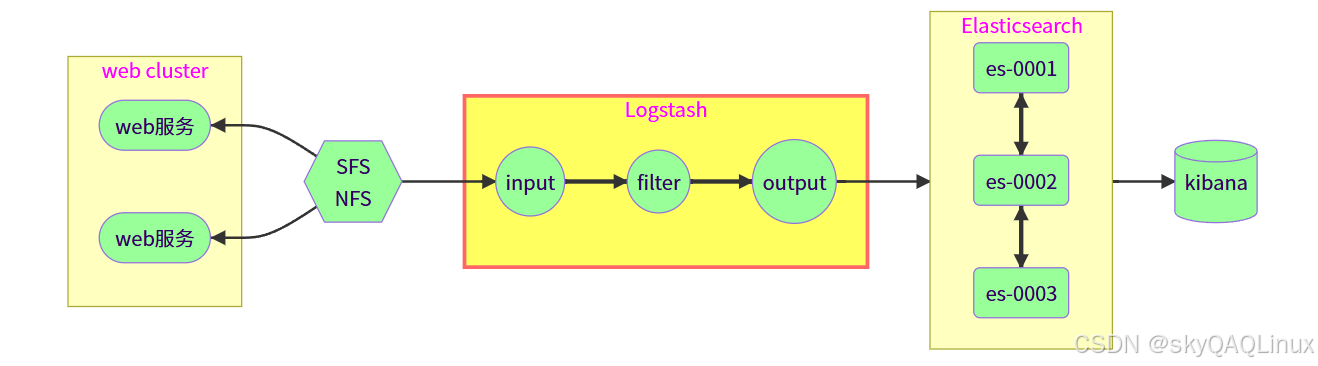

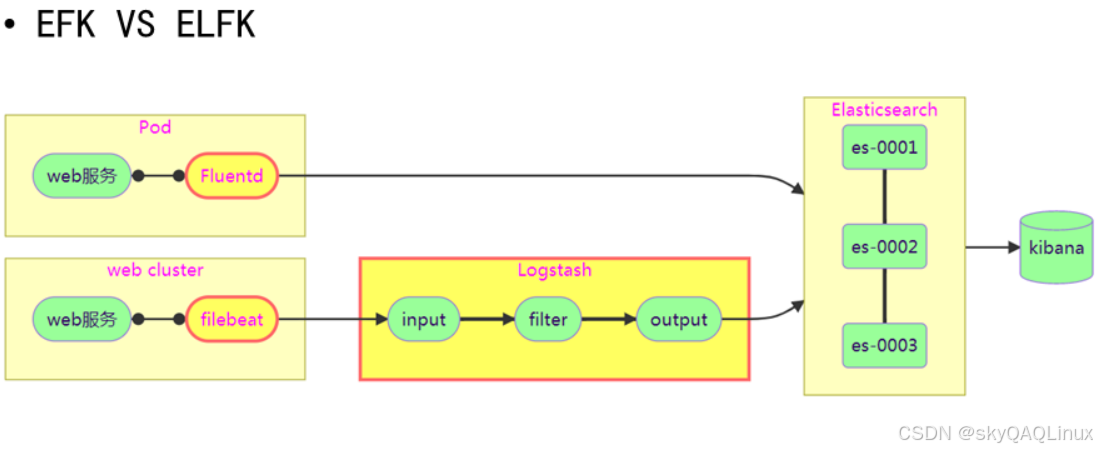

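I. ELK architecture

Elasticsearch + Logstash + Kibana

Storage and search (Elasticsearch) + log processing (Logstash) + log visualization (Kibana)

1. Using Logstash

(1) input: where events enter the pipeline

(2) filter: where events are parsed and transformed

(3) output: where processed events are sent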
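To make the three stages concrete, here is a toy Python model of the input → filter → output flow. It is not Logstash code and does not mirror its API: the function names (`line_input`, `level_filter`, `run_pipeline`) are hypothetical, and the "filter" only splits a leading log level off the message as a stand-in for grok/mutate.

```python
from typing import Callable, Dict, Iterable, Iterator, List

Event = Dict[str, str]


def line_input(lines: Iterable[str]) -> Iterator[Event]:
    """input stage: wrap each raw line in an event, as an input plugin would."""
    for line in lines:
        yield {"message": line.rstrip("\n")}


def level_filter(event: Event) -> Event:
    """filter stage: split a leading log level off the message (stand-in for grok)."""
    level, _, rest = event["message"].partition(" ")
    event["loglevel"] = level.upper()
    event["message"] = rest
    return event


def run_pipeline(
    source: Iterable[Event],
    filters: List[Callable[[Event], Event]],
    sink: Callable[[Event], None],
) -> None:
    """Send every event through each filter in order, then to the output."""
    for event in source:
        for apply_filter in filters:
            event = apply_filter(event)
        sink(event)


# Usage: the output stage here simply collects events in a list
collected: List[Event] = []
run_pipeline(line_input(["info service started", "error disk full"]), [level_filter], collected.append)
print(collected)
# [{'message': 'service started', 'loglevel': 'INFO'}, {'message': 'disk full', 'loglevel': 'ERROR'}]
```

Keeping the stages as separate, swappable pieces is the point: in Logstash you likewise change where data comes from or goes to without touching the filter logic.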

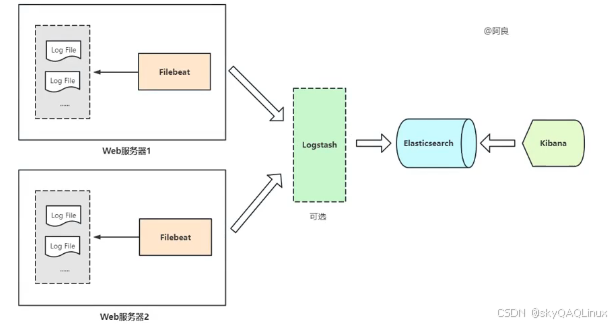

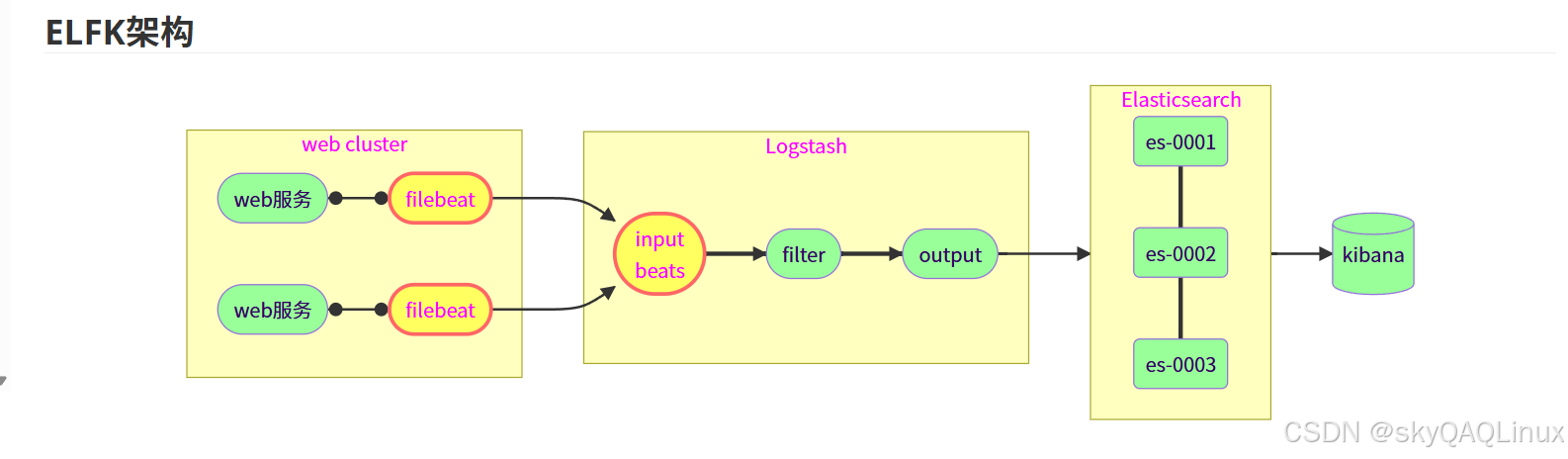

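2. ELFK architecture

Filebeat-->Logstash-->Elasticsearch-->Kibana

Filebeat runs on every node as a lightweight log collector; Logstash does the processing centrally.

Drawbacks of ELK:

Sharing log data over NFS turns into a traffic bottleneck and does not scale to large clusters.

Could Logstash simply be installed on every web server instead?

Logstash is resource-heavy, so running it on each node would compete with the applications for CPU and memory.

Advantages of ELFK:

Filebeat has a very small footprint and can run on every node.

Each web server runs its own lightweight client that ships logs over the network, so there is no single point of failure and no shared-storage traffic bottleneck.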

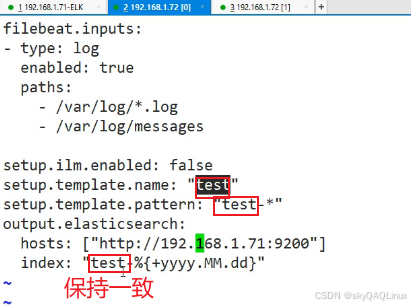

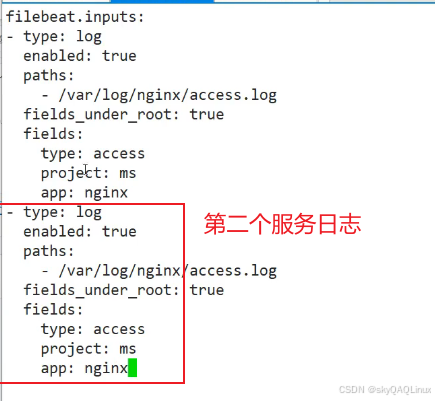

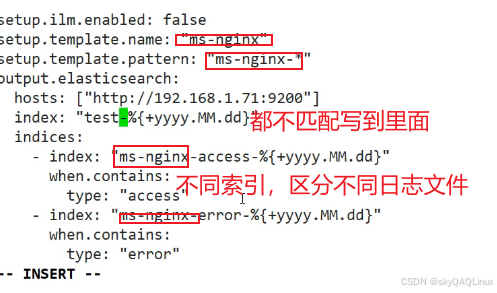

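Filebeat configuration file

`test` can be set to the name of the business service.

Filebeat can label logs from different services at the source, so they can be told apart downstream.

Example Filebeat configuration: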

    filebeat.inputs:
    - type: filestream
      id: oss-logs
      paths:
        - /var/oss/*.log
      fields:
        log_source: "oss"
      fields_under_root: true
    - type: filestream
      id: dps-logs
      paths:
        - /var/dps/*.log
      fields:
        log_source: "dps"
      fields_under_root: true

    output.logstash:                        # send to Logstash
      hosts: ["your-logstash-host:5044"]    # replace with your Logstash address

Example 2:

    # ============================== Filebeat inputs ===============================
    filebeat.inputs:
    # Collect OSS service logs
    - type: filestream
      enabled: true
      id: oss-service
      paths:
        - /var/log/oss/*.log
        # Note: Filebeat has historically not decompressed .gz files; check your
        # version's docs before keeping the *.log.gz paths (here and for DPS)
        - /var/log/oss/*.log.gz
      fields:
        log_source: "oss"            # key field that identifies the log source
        environment: "production"    # optional: environment label
      fields_under_root: true        # put the custom fields at the top level of the event
      tags: ["oss", "application"]   # optional: OSS-specific tags

    # Collect DPS service logs
    - type: filestream
      enabled: true
      id: dps-service
      paths:
        - /var/log/dps/*.log
        - /var/log/dps/*.log.gz
      fields:
        log_source: "dps"            # key field that identifies the log source
        environment: "production"
      fields_under_root: true
      tags: ["dps", "application"]

    # ================= Filebeat modules (disabled to avoid interference) =================
    filebeat.config.modules:
      path: ${path.config}/modules.d/*.yml
      reload.enabled: false

    # ================================= Outputs ====================================
    output.logstash:
      hosts: ["logstash-server-ip:5044"]   # replace with your Logstash server IP
      # Optional: enable load balancing if there are several Logstash nodes
      # loadbalance: true

    # ================================== Logging ===================================
    logging.level: info
    logging.to_files: true
    logging.files:
      path: /var/log/filebeat
      name: filebeat
      keepfiles: 7
      permissions: 0644
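Why `fields_under_root` matters downstream: the Logstash configurations that follow test `[log_source]`, which exists at the top level of the event only when `fields_under_root: true`. Without it, Filebeat nests custom fields under `fields`, and the condition would have to be `[fields][log_source]`. The Python sketch below models just that part of the event shape; `build_event` and `log_source` are hypothetical helpers, and a real Filebeat event carries many more fields (`@timestamp`, `host`, `agent`, `log`, ...).

```python
from typing import Any, Dict, List, Optional

Event = Dict[str, Any]


def build_event(message: str, fields: Dict[str, str],
                fields_under_root: bool, tags: List[str]) -> Event:
    """Hypothetical model of where Filebeat places custom `fields` and `tags`."""
    event: Event = {"message": message}
    if fields_under_root:
        # Custom fields become top-level keys (and would overwrite clashing ones)
        event.update(fields)
    else:
        # Default behaviour: custom fields are nested under "fields"
        event["fields"] = dict(fields)
    if tags:
        event["tags"] = list(tags)
    return event


def log_source(event: Event) -> Optional[str]:
    """Value a Logstash condition on [log_source] would see (None = field absent)."""
    value = event.get("log_source")
    return value if isinstance(value, str) else None


root = build_event("GET /bucket/a.txt", {"log_source": "oss"}, True, ["oss", "application"])
nested = build_event("GET /bucket/a.txt", {"log_source": "oss"}, False, ["oss", "application"])
print(log_source(root))                # oss
print(log_source(nested))              # None: the condition would need [fields][log_source]
print(nested["fields"]["log_source"])  # oss
```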

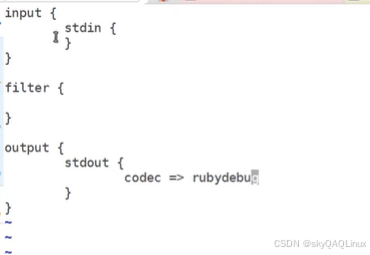

Example Logstash configuration:

    # For example, create log_processing.conf under /etc/logstash/conf.d/
    input {
      beats {
        port => 5044
      }
    }

    # Filtering is optional here
    filter {
      # Add any parsing rules here, e.g. Grok to parse the message body.
      # Every event passes through this section.
    }

    output {
      # Pick the target index from the value of the log_source field
      if [log_source] == "oss" {
        elasticsearch {
          hosts => ["http://your-es-host:9200"]   # Elasticsearch address
          index => "oss-logs-%{+YYYY.MM.dd}"      # OSS index naming pattern
        }
      } else if [log_source] == "dps" {
        elasticsearch {
          hosts => ["http://your-es-host:9200"]
          index => "dps-logs-%{+YYYY.MM.dd}"      # DPS index naming pattern
        }
      }
      # Optional: stdout { codec => rubydebug }   # print events to the terminal while debugging
    }

Example 2:

    input {
      beats {
        port => 5044
        host => "0.0.0.0"
        # Optional: more threads for processing Beats requests
        # executor_threads => 4
      }
    }

    filter {
      # Common processing: add host information.
      # [host][ip] is filled in by Filebeat's add_host_metadata processor; if it is
      # missing, the literal string "%{[host][ip]}" is stored instead.
      mutate {
        add_field => {
          "host_ip"   => "%{[host][ip]}"
          "host_name" => "%{[host][name]}"
        }
      }

      # Route and process by log_source, which is set in the Filebeat config
      if [log_source] == "oss" {
        # OSS-specific processing
        grok {
          match => { "message" => "\[%{TIMESTAMP_ISO8601:timestamp}\] %{LOGLEVEL:loglevel} %{GREEDYDATA:message}" }
          overwrite => [ "message" ]
        }
        # Parse OSS-specific fields (example). Not every line carries them,
        # so a miss is not tagged as a parse failure.
        grok {
          match => { "message" => "Bucket: %{NOTSPACE:bucket_name}, Object: %{NOTSPACE:object_key}, Action: %{WORD:action}" }
          tag_on_failure => []
        }
        date {
          match => [ "timestamp", "ISO8601" ]
          remove_field => [ "timestamp" ]
        }
        # Tag OSS events
        mutate {
          add_tag => [ "processed_oss" ]
        }
      } else if [log_source] == "dps" {
        # DPS-specific processing
        grok {
          match => { "message" => "%{TIMESTAMP_ISO8601:timestamp} %{LOGLEVEL:loglevel} \[%{NOTSPACE:thread}\] %{JAVACLASS:class} - %{GREEDYDATA:message}" }
          overwrite => [ "message" ]
        }
        # Parse DPS-specific fields (example); optional, as above
        grok {
          match => { "message" => "TransactionID: %{NOTSPACE:transaction_id}, Duration: %{NUMBER:duration}ms" }
          tag_on_failure => []
        }
        date {
          match => [ "timestamp", "ISO8601" ]
          remove_field => [ "timestamp" ]
        }
        # Convert the duration field to a number
        mutate {
          convert => { "duration" => "integer" }
          add_tag => [ "processed_dps" ]
        }
      }

      # Common post-processing: drop fields that are not needed
      mutate {
        remove_field => [ "ecs", "agent", "input", "log" ]
      }
    }

    output {
      # Events that failed to parse go to their own index first, so they are not
      # also written to the service index. (This is an ordinary index, not
      # Logstash's built-in dead letter queue.)
      if "_grokparsefailure" in [tags] or "_dateparsefailure" in [tags] {
        elasticsearch {
          hosts => ["http://elasticsearch-host:9200"]
          index => "failed-logs-%{+YYYY.MM.dd}"
        }
      } else if [log_source] == "oss" {
        elasticsearch {
          hosts => ["http://elasticsearch-host:9200"]
          index => "oss-logs-%{+YYYY.MM.dd}"   # OSS index pattern
          # Optional: separate credentials for the OSS indices
          # user => "oss_writer"
          # password => "${OSS_ES_PASSWORD}"
        }
      } else if [log_source] == "dps" {
        elasticsearch {
          hosts => ["http://elasticsearch-host:9200"]
          index => "dps-logs-%{+YYYY.MM.dd}"   # DPS index pattern
          # Do not set document_type: mapping types are deprecated in
          # Elasticsearch 7 and removed in 8
        }
      }

      # Debug output (comment out in production). To print only some events,
      # wrap it in a conditional, e.g. if "debug" in [tags] { stdout { ... } }
      stdout {
        codec => rubydebug
      }
    }
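To see what the second Logstash example does to a single event, here is a rough Python model of its filter and output sections. It is a sketch under stated assumptions, not Logstash behaviour reproduced exactly: the regexes are simplified stand-ins for the grok patterns (real `TIMESTAMP_ISO8601`, `LOGLEVEL` and `JAVACLASS` accept more forms), naive timestamps are treated as UTC here (Logstash's `date` filter uses the host's default time zone unless `timezone` is set), and the daily suffix is formatted from `@timestamp` in UTC, as `%{+YYYY.MM.dd}` does. `process`, `index_for` and `add_tag` are hypothetical names.

```python
import re
from datetime import datetime, timezone
from typing import Any, Dict, Optional

Event = Dict[str, Any]

# Simplified stand-ins for the grok patterns (the real definitions accept more forms)
TS = r"\d{4}-\d{2}-\d{2}[T ]\d{2}:\d{2}:\d{2}(?:[.,]\d+)?(?:Z|[+-]\d{2}:?\d{2})?"
LEVEL = r"(?:TRACE|DEBUG|INFO|WARN(?:ING)?|ERROR|FATAL)"

RULES = {
    # log_source -> (main line pattern, optional extra-fields pattern)
    "oss": (
        re.compile(rf"\[(?P<timestamp>{TS})\] (?P<loglevel>{LEVEL}) (?P<message>.*)"),
        re.compile(r"Bucket: (?P<bucket_name>\S+), Object: (?P<object_key>\S+), Action: (?P<action>\w+)"),
    ),
    "dps": (
        re.compile(rf"(?P<timestamp>{TS}) (?P<loglevel>{LEVEL}) \[(?P<thread>\S+)\] "
                   r"(?P<class>[\w.$]+) - (?P<message>.*)"),
        re.compile(r"TransactionID: (?P<transaction_id>\S+), Duration: (?P<duration>\d+(?:\.\d+)?)ms"),
    ),
}


def add_tag(event: Event, tag: str) -> None:
    event.setdefault("tags", []).append(tag)


def process(event: Event) -> Event:
    """Mimic the filter section for one event."""
    rule = RULES.get(event.get("log_source", ""))
    if rule is None:
        return event  # neither "oss" nor "dps": no service-specific processing
    line_re, extra_re = rule
    match = line_re.search(event["message"])
    if match:
        event.update(match.groupdict())  # overwrite => ["message"]: message becomes the remainder
    else:
        add_tag(event, "_grokparsefailure")
    extra = extra_re.search(event["message"])
    if extra:  # tag_on_failure => []: a miss is not a failure
        event.update(extra.groupdict())
    if "timestamp" in event:
        raw = event.pop("timestamp")
        try:
            parsed = datetime.fromisoformat(raw.replace(",", ".").replace("Z", "+00:00"))
        except ValueError:
            event["timestamp"] = raw  # remove_field only runs on success
            add_tag(event, "_dateparsefailure")
        else:
            # Assumption: timestamps without an offset are UTC
            event["@timestamp"] = parsed if parsed.tzinfo else parsed.replace(tzinfo=timezone.utc)
    if "duration" in event:
        event["duration"] = int(float(event["duration"]))  # convert => integer truncates
    add_tag(event, f"processed_{event['log_source']}")
    return event


def index_for(event: Event) -> Optional[str]:
    """Mimic the output section: pick the target index (None = not indexed)."""
    stamp = event.get("@timestamp") or datetime.now(timezone.utc)  # ingest time if no date parsed
    day = stamp.astimezone(timezone.utc).strftime("%Y.%m.%d")
    tags = event.get("tags", [])
    if "_grokparsefailure" in tags or "_dateparsefailure" in tags:
        return f"failed-logs-{day}"
    if event.get("log_source") in ("oss", "dps"):
        return f"{event['log_source']}-logs-{day}"
    return None


dps = process({"log_source": "dps",
               "message": "2024-05-01T10:00:00 INFO [main] com.example.Pay - "
                          "TransactionID: t-42, Duration: 120ms"})
print(dps["transaction_id"], dps["duration"], index_for(dps))  # t-42 120 dps-logs-2024.05.01
```

Checking parse failures before `log_source` in the output is what keeps a failed event from landing in both the failure index and the service index.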

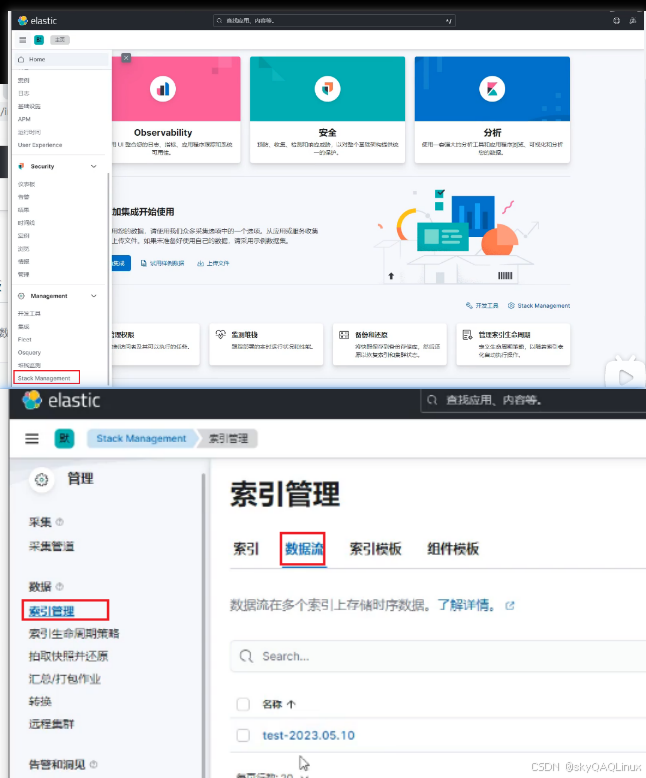

Viewing the indexed logs in Kibana

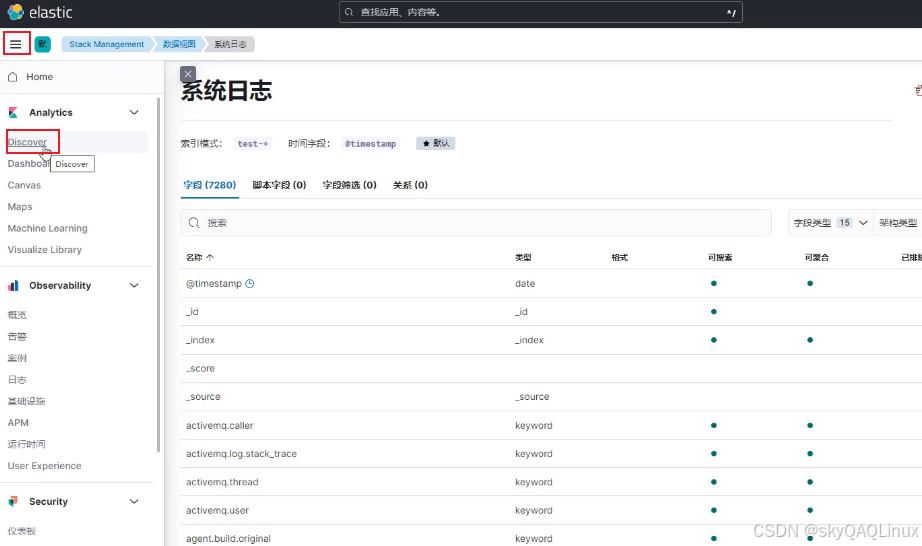

(1) Check the logs collected by Filebeat

If the indices show up, the logs have been collected successfully.

(2) Create a data view to browse the logs

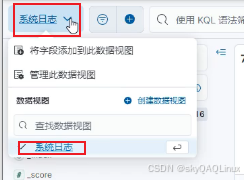

(3) Choose among the data views you have created (one per service's logs)

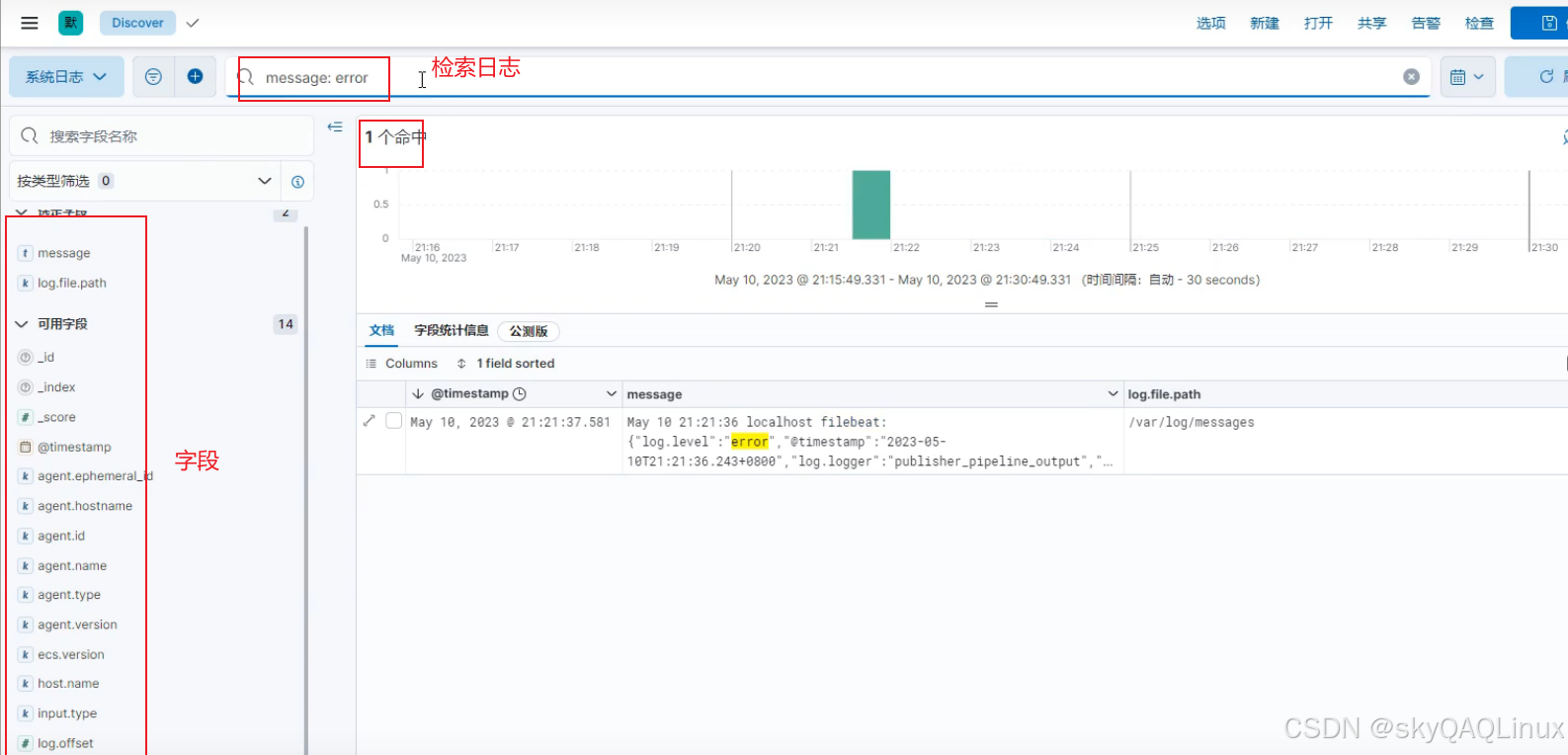

(4) Search the logs

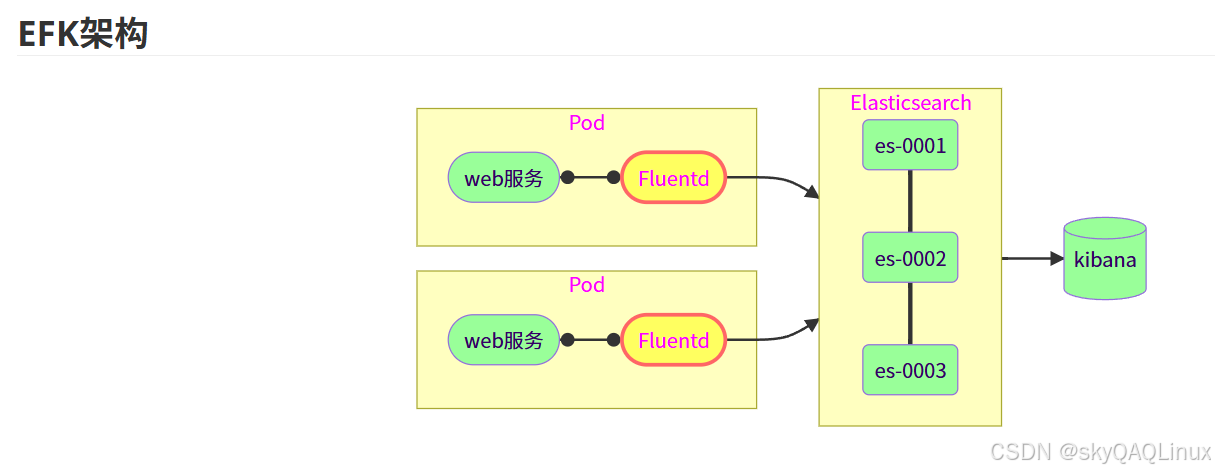
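The same kind of search can also be sent directly to Elasticsearch's `_search` API, which is handy for scripts. A minimal sketch under assumptions: `oss-logs-*` matches the daily index names configured above, the `loglevel.keyword` sub-field exists only if Elasticsearch's default dynamic mapping created it, and `http://localhost:9200` with no authentication or TLS is a placeholder. `build_search` and `search` are hypothetical helpers.

```python
import json
import urllib.request
from typing import Any, Dict, Tuple


def build_search(index_pattern: str, level: str,
                 minutes: int = 15, size: int = 20) -> Tuple[str, Dict[str, Any]]:
    """Return (path, body) for a _search request: recent events at one log level."""
    body = {
        "query": {
            "bool": {
                "filter": [
                    # Assumption: default dynamic mapping created a keyword sub-field
                    {"term": {"loglevel.keyword": level}},
                    {"range": {"@timestamp": {"gte": f"now-{minutes}m"}}},
                ]
            }
        },
        "sort": [{"@timestamp": {"order": "desc"}}],
        "size": size,
    }
    return f"/{index_pattern}/_search", body


def search(es_url: str, index_pattern: str, level: str) -> Dict[str, Any]:
    """POST the query to Elasticsearch and return the decoded JSON response."""
    path, body = build_search(index_pattern, level)
    request = urllib.request.Request(
        es_url.rstrip("/") + path,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)


path, body = build_search("oss-logs-*", "ERROR")
print(path)  # /oss-logs-*/_search
# Against a live cluster (placeholder URL):
# hits = search("http://localhost:9200", "oss-logs-*", "ERROR")["hits"]["hits"]
```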

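3. EFK architecture

Fluentd or Fluent Bit replaces Logstash. Both are extensible through plugins, and Fluent Bit in particular has a very small footprint (under 10 MB).

Fluent Bit is better suited to container environments, e.g. deployed as a DaemonSet in Kubernetes.

Drawbacks of ELFK:

ELFK has more components, and Logstash has to be configured to process the data.

Logstash is large and resource-hungry, which makes it a poor fit for running inside containers.

Advantages of EFK:

Fluentd/Fluent Bit combines the roles of Filebeat (collection) and Logstash (processing).

With fewer components and a smaller footprint, it is well suited to container deployments.

It is the preferred option for containerized deployments.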


Purpose: manages where database objects are stored (pg_default, pg_global).