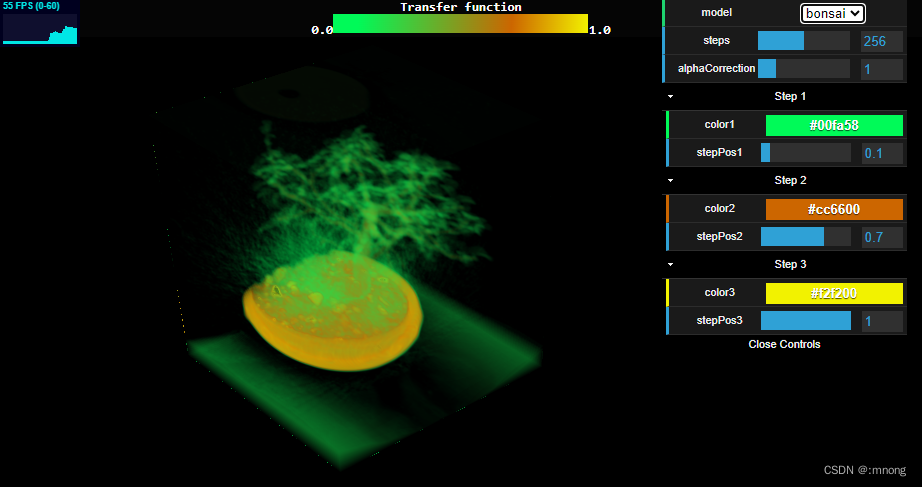

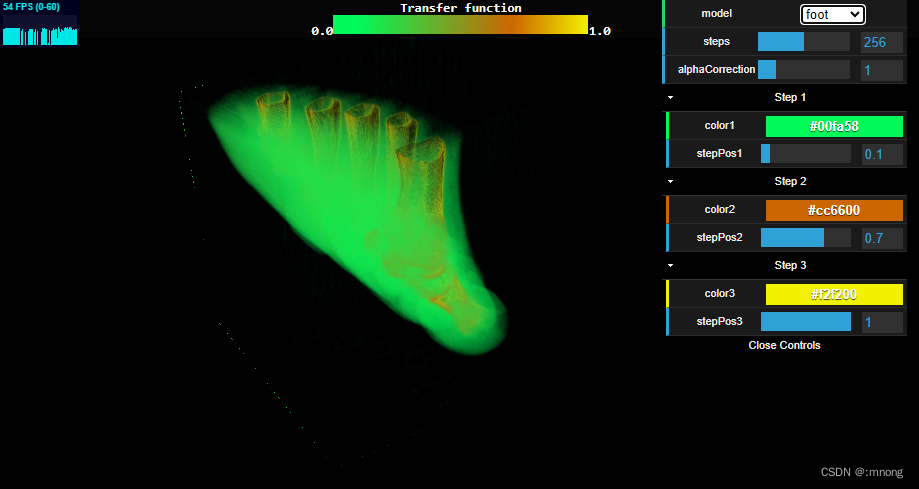

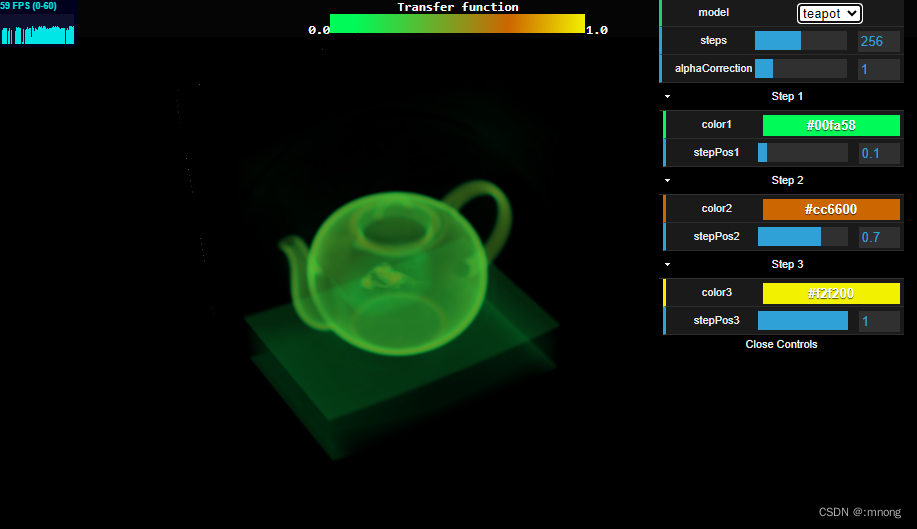

Interface preview

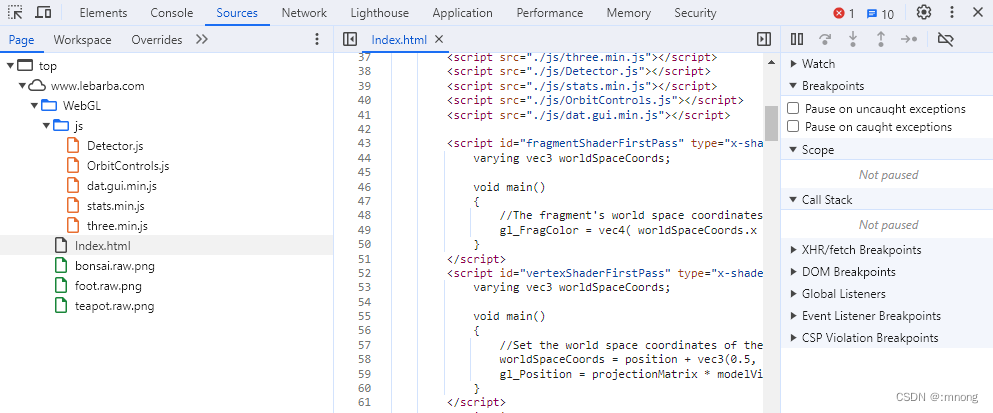

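Code structure

The model data resembles CT (Computed Tomography) data. CT uses precisely collimated X-ray beams, gamma rays, ultrasound, and similar sources, together with highly sensitive detectors, to scan one cross-section after another around a part of an object.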
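The second-pass fragment shader in this article reads the volume from a single 2D image in which 256 Z slices are tiled as a 16×16 grid. As a rough sketch of that lookup in plain JavaScript (the function name `sliceToAtlasUV` is hypothetical, and the layout constants are taken from the shader's comments rather than from the image files themselves):

```javascript
// Hypothetical helper mirroring the slice lookup done in the shader.
// Assumes 256 Z slices tiled in a 16x16 atlas, as the shader comments state.
const TILES_PER_ROW = 16;
const LAST_SLICE = 255;

// Maps a volume coordinate (x, y, z each in [0, 1]) to atlas UVs for the
// slice at or just below z. Rows are counted from the far end
// (255 - slice), matching the shader.
function sliceToAtlasUV(x, y, z) {
  const slice = Math.floor(z * LAST_SLICE);
  const u = x / TILES_PER_ROW + (slice % TILES_PER_ROW) / TILES_PER_ROW;
  const v =
    y / TILES_PER_ROW +
    Math.floor((LAST_SLICE - slice) / TILES_PER_ROW) / TILES_PER_ROW;
  return { slice, u, v };
}

console.log(sliceToAtlasUV(0.5, 0.5, 1.0));
```

The shader performs this lookup for two neighbouring slices and blends them, which approximates trilinear filtering without a real 3D texture.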

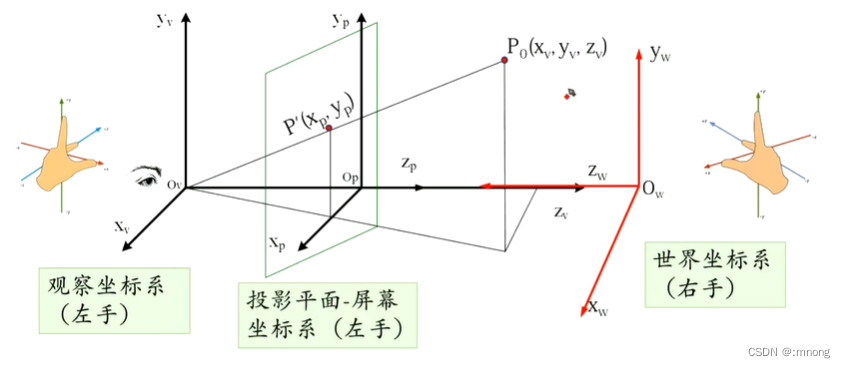

Coordinate systems

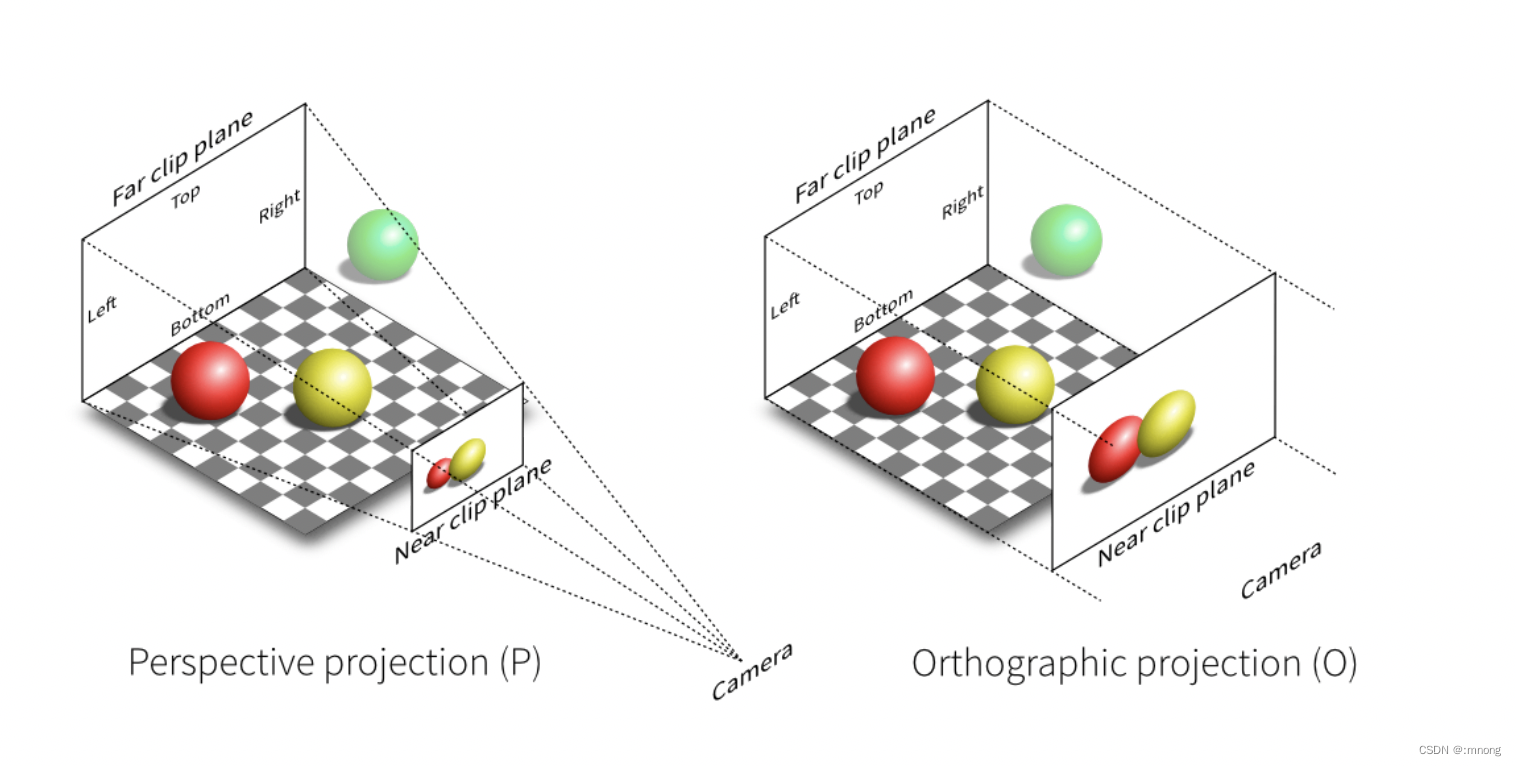

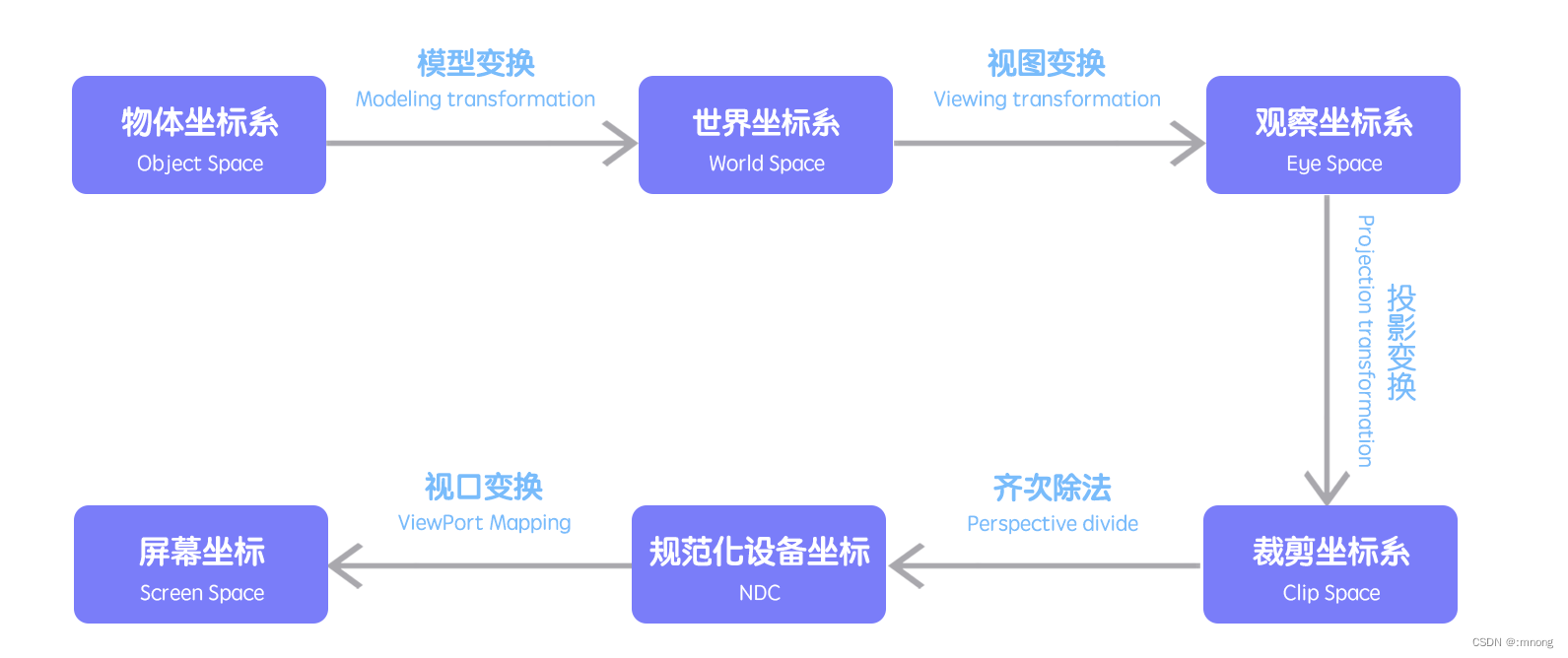

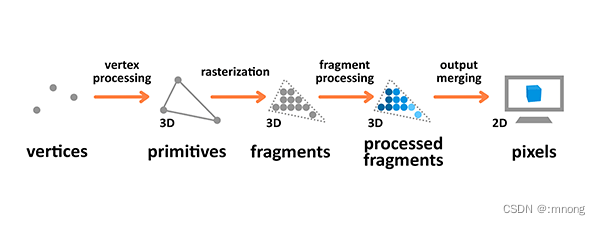

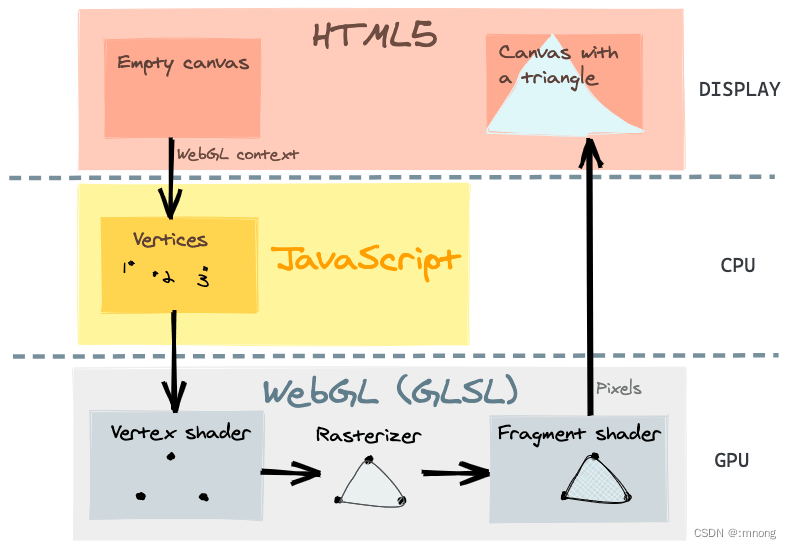

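Rendering pipeline

The rendering pipeline is the process of outputting a prepared model to the screen. The 3D rendering pipeline takes raw data that describes 3D objects by their vertices as input, computes their fragments, and renders those fragments as pixels on the screen.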
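To make the vertex-to-pixel path concrete, here is a minimal sketch in plain JavaScript. It assumes the OpenGL convention (column-major matrices, camera looking down the negative Z axis); the helper names `perspective`, `transform`, and `projectToPixel` are illustrative and do not come from Three.js.

```javascript
// Builds a column-major 4x4 perspective matrix (OpenGL convention).
function perspective(fovYDeg, aspect, near, far) {
  const f = 1 / Math.tan((fovYDeg * Math.PI) / 360);
  const nf = 1 / (near - far);
  return [
    f / aspect, 0, 0, 0,
    0, f, 0, 0,
    0, 0, (far + near) * nf, -1,
    0, 0, 2 * far * near * nf, 0,
  ];
}

// Multiplies a column-major 4x4 matrix by a 4-component vector.
function transform(m, v) {
  const out = [0, 0, 0, 0];
  for (let row = 0; row < 4; row++) {
    for (let col = 0; col < 4; col++) {
      out[row] += m[col * 4 + row] * v[col];
    }
  }
  return out;
}

// View-space vertex -> clip space -> normalized device coords -> pixels.
function projectToPixel(vertex, projection, width, height) {
  const [x, y, , w] = transform(projection, [...vertex, 1]);
  const ndcX = x / w;
  const ndcY = y / w;
  return {
    px: ((ndcX + 1) / 2) * width,
    py: ((1 - ndcY) / 2) * height, // flip Y: screen origin is top-left
  };
}

// Same camera parameters as the listing in this article (fov 40, near 0.01, far 3000).
const projection = perspective(40, 800 / 600, 0.01, 3000.0);
console.log(projectToPixel([0, 0, -2], projection, 800, 600));
```

A point straight ahead of the camera lands in the middle of the viewport; rasterization then fills in the fragments between projected vertices.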

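Shaders

Shaders are written in GLSL (OpenGL Shading Language), a special language with C-like syntax that executes directly in the graphics pipeline. There are two types of shader: vertex shaders and fragment shaders. The former transform shape positions into 3D drawing coordinates; the latter compute the final rendered colors and other attributes.

Unlike JavaScript, GLSL is strongly typed and has many built-in math functions for working with vectors and matrices. Shaders can become very complex very quickly, but creating a simple one is not hard.

A shader is essentially a function that draws something to the screen. Shaders run on the GPU, which is optimized for these operations, so much of the number crunching is offloaded from the CPU, which can then focus its processing power on running your own code.

A vertex shader operates on 3D space coordinates and is called once per vertex. Its purpose is to set the `gl_Position` variable, a special built-in global variable that stores the position of the current vertex.

A fragment (or texture) shader defines the RGBA color of each pixel being processed; it is called once per pixel. Its purpose is to set the `gl_FragColor` variable, which is also a GLSL built-in.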
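For comparison, JavaScript has no vector types, so operations that GLSL provides as built-ins have to be written by hand. The sketch below gives hand-written JavaScript counterparts of three GLSL built-ins used by the shaders in this article (`length`, `normalize`, and `mix`); it is only an illustration of the idea, not a complete math library.

```javascript
// Plain-JavaScript counterparts of three GLSL built-ins.
// Vectors are ordinary arrays of numbers.

// GLSL length(v): Euclidean length of a vector.
const length = (v) => Math.hypot(...v);

// GLSL normalize(v): same direction, length 1.
const normalize = (v) => {
  const len = length(v);
  return v.map((c) => c / len);
};

// GLSL mix(a, b, t): linear interpolation, a * (1 - t) + b * t.
const mix = (a, b, t) => a.map((c, i) => c * (1 - t) + b[i] * t);

console.log(length([3, 4, 0]), normalize([0, 0, 2]), mix([0, 0], [10, 20], 0.25));
```

In GLSL the same calls work directly on `vec2`, `vec3`, and `vec4` values, and the compiler rejects mismatched types instead of silently coercing them.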
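As a rough illustration of the per-vertex work, the following JavaScript sketch imitates two small computations from the shaders in this article: shifting a unit-cube vertex from [-0.5, 0.5] to [0, 1], and converting a clip-space position to a [0, 1] screen coordinate. The function names are hypothetical, and on the GPU this logic runs in GLSL, once per vertex or fragment.

```javascript
// First pass: shift a unit-cube vertex from [-0.5, 0.5] to [0, 1] so the
// position can later be stored as an RGB color.
function toVolumeCoords(position) {
  return position.map((c) => c + 0.5);
}

// Second pass: perspective divide, then remap from [-1, 1] to [0, 1] so
// the result can be used as a texture coordinate.
function clipToScreenUV([x, y, , w]) {
  return [(x / w + 1) / 2, (y / w + 1) / 2];
}

// The eight corners of the unit cube, processed one vertex at a time.
const corners = [];
for (const x of [-0.5, 0.5])
  for (const y of [-0.5, 0.5])
    for (const z of [-0.5, 0.5]) corners.push([x, y, z]);

console.log(corners.map(toVolumeCoords));
console.log(clipToScreenUV([1, -1, 0, 2]));
```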
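The second-pass fragment shader in this article does more than look up one color: it marches a ray through the volume and accumulates color front to back. A simplified JavaScript sketch of that accumulation is shown below. The function name `compositeFrontToBack` and the sample format are assumptions made for illustration; the 25.6 scale factor is copied from the shader, and unlike the shader this sketch tracks RGB and alpha separately.

```javascript
// Front-to-back compositing of samples taken along one ray.
// samples: array of { rgb: [r, g, b], a: opacity }, ordered front to back.
// steps: number of samples per unit distance (the "steps" uniform).
function compositeFrontToBack(samples, steps, alphaCorrection = 1.0) {
  // More steps means each sample should contribute less opacity.
  const alphaScale = 25.6 / steps;
  const color = [0, 0, 0];
  let alpha = 0;

  for (const sample of samples) {
    // Remaining transparency (1 - alpha) limits what this sample can add.
    const a = sample.a * alphaCorrection * (1 - alpha) * alphaScale;
    for (let i = 0; i < 3; i++) color[i] += sample.rgb[i] * a;
    alpha += a;
    if (alpha >= 1) break; // fully opaque: nothing behind is visible
  }
  return { color, alpha };
}

const result = compositeFrontToBack(
  [
    { rgb: [1, 0, 0], a: 0.5 },
    { rgb: [0, 1, 0], a: 0.5 },
  ],
  256
);
console.log(result);
```

Because each sample is weighted by the transparency that remains, samples nearer the camera dominate, and the loop can stop early once the accumulated alpha reaches 1.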

<script id="fragmentShaderFirstPass" type="x-shader/x-fragment">
varying vec3 worldSpaceCoords;

void main()
{
    // The fragment's world space coordinates as fragment output.
    gl_FragColor = vec4( worldSpaceCoords.x, worldSpaceCoords.y, worldSpaceCoords.z, 1.0 );
}
</script>
<script id="vertexShaderFirstPass" type="x-shader/x-vertex">
varying vec3 worldSpaceCoords;

void main()
{
    // Set the world space coordinates of the back faces vertices as output.
    worldSpaceCoords = position + vec3(0.5, 0.5, 0.5); // move it from [-0.5;0.5] to [0,1]
    gl_Position = projectionMatrix * modelViewMatrix * vec4( position, 1.0 );
}
</script>
<script id="fragmentShaderSecondPass" type="x-shader/x-fragment">
varying vec3 worldSpaceCoords;
varying vec4 projectedCoords;
uniform sampler2D tex, cubeTex, transferTex;
uniform float steps;
uniform float alphaCorrection;

// The maximum distance through our rendering volume is sqrt(3).
// The maximum number of steps we take to travel a distance of 1 is 512.
// ceil( sqrt(3) * 512 ) = 887
// This prevents the back of the image from getting cut off when steps=512 & viewing diagonally.
const int MAX_STEPS = 887;

// Acts like a texture3D using Z slices and trilinear filtering.
vec4 sampleAs3DTexture( vec3 texCoord )
{
    vec4 colorSlice1, colorSlice2;
    vec2 texCoordSlice1, texCoordSlice2;

    // The z coordinate determines which Z slice we have to look for.
    // Z slice number goes from 0 to 255.
    float zSliceNumber1 = floor(texCoord.z * 255.0);

    // As we use trilinear filtering we go to the next Z slice.
    float zSliceNumber2 = min( zSliceNumber1 + 1.0, 255.0 ); // Clamp to 255

    // The Z slices are stored in a matrix of 16x16 of Z slices.
    // The original UV coordinates have to be rescaled by the tile numbers in each row and column.
    texCoord.xy /= 16.0;

    texCoordSlice1 = texCoordSlice2 = texCoord.xy;

    // Add an offset to the original UV coordinates depending on the row and column number.
    texCoordSlice1.x += (mod(zSliceNumber1, 16.0 ) / 16.0);
    texCoordSlice1.y += floor((255.0 - zSliceNumber1) / 16.0) / 16.0;

    texCoordSlice2.x += (mod(zSliceNumber2, 16.0 ) / 16.0);
    texCoordSlice2.y += floor((255.0 - zSliceNumber2) / 16.0) / 16.0;

    // Get the opacity value from the 2D texture.
    // Bilinear filtering is done at each texture2D by default.
    colorSlice1 = texture2D( cubeTex, texCoordSlice1 );
    colorSlice2 = texture2D( cubeTex, texCoordSlice2 );

    // Based on the opacity obtained earlier, get the RGB color in the transfer function texture.
    colorSlice1.rgb = texture2D( transferTex, vec2( colorSlice1.a, 1.0) ).rgb;
    colorSlice2.rgb = texture2D( transferTex, vec2( colorSlice2.a, 1.0) ).rgb;

    // How distant is zSlice1 to zSlice2. Used to interpolate between one Z slice and the other.
    float zDifference = mod(texCoord.z * 255.0, 1.0);

    // Finally interpolate between the two intermediate colors of each Z slice.
    return mix(colorSlice1, colorSlice2, zDifference);
}

void main( void )
{
    // Transform the coordinates from [-1;1] to [0;1].
    vec2 texc = vec2( ((projectedCoords.x / projectedCoords.w) + 1.0 ) / 2.0,
                      ((projectedCoords.y / projectedCoords.w) + 1.0 ) / 2.0 );

    // The back position is the world space position stored in the texture.
    vec3 backPos = texture2D(tex, texc).xyz;

    // The front position is the world space position of the second render pass.
    vec3 frontPos = worldSpaceCoords;

    // The direction from the front position to the back position.
    vec3 dir = backPos - frontPos;
    float rayLength = length(dir);

    // Calculate how long to increment in each step.
    float delta = 1.0 / steps;

    // The increment in each direction for each step.
    vec3 deltaDirection = normalize(dir) * delta;
    float deltaDirectionLength = length(deltaDirection);

    // Start the ray casting from the front position.
    vec3 currentPosition = frontPos;

    // The color accumulator.
    vec4 accumulatedColor = vec4(0.0);

    // The alpha value accumulated so far.
    float accumulatedAlpha = 0.0;

    // How long has the ray travelled so far.
    float accumulatedLength = 0.0;

    // If we have twice as many samples, we only need ~1/2 the alpha per sample.
    // Scaling by 256/10 just happens to give a good value for the alphaCorrection slider.
    float alphaScaleFactor = 25.6 * delta;

    vec4 colorSample;
    float alphaSample;

    // Perform the ray marching iterations.
    for(int i = 0; i < MAX_STEPS; i++)
    {
        // Get the voxel intensity value from the 3D texture.
        colorSample = sampleAs3DTexture( currentPosition );

        // Allow the alpha correction customization.
        alphaSample = colorSample.a * alphaCorrection;

        // Applying this effect to both the color and alpha accumulation results in more realistic transparency.
        alphaSample *= (1.0 - accumulatedAlpha);

        // Scaling alpha by the number of steps makes the final color invariant to the step size.
        alphaSample *= alphaScaleFactor;

        // Perform the composition.
        accumulatedColor += colorSample * alphaSample;

        // Store the alpha accumulated so far.
        accumulatedAlpha += alphaSample;

        // Advance the ray.
        currentPosition += deltaDirection;
        accumulatedLength += deltaDirectionLength;

        // If the length traversed is more than the ray length, or if the alpha accumulated reaches 1.0 then exit.
        if(accumulatedLength >= rayLength || accumulatedAlpha >= 1.0 )
            break;
    }

    gl_FragColor = accumulatedColor;
}
</script>
<script id="vertexShaderSecondPass" type="x-shader/x-vertex">
varying vec3 worldSpaceCoords;
varying vec4 projectedCoords;

void main()
{
    worldSpaceCoords = (modelMatrix * vec4(position + vec3(0.5, 0.5, 0.5), 1.0 )).xyz;
    gl_Position = projectionMatrix * modelViewMatrix * vec4( position, 1.0 );
    projectedCoords = projectionMatrix * modelViewMatrix * vec4( position, 1.0 );
}
</script>

3D scene construction

<script>
if ( ! Detector.webgl ) Detector.addGetWebGLMessage();

var container, stats;
var camera, controls, sceneFirstPass, sceneSecondPass, renderer;
var clock = new THREE.Clock();
var rtTexture, transferTexture;
var cubeTextures = {}; // model name -> texture holding that model's Z slices
var histogram = [];
var guiControls;
var materialSecondPass;

init();
animate();

function init() {
    // Parameters that can be modified.
    guiControls = new function() {
        this.model = 'bonsai';
        this.steps = 256.0;
        this.alphaCorrection = 1.0;
        this.color1 = "#00FA58";
        this.stepPos1 = 0.1;
        this.color2 = "#CC6600";
        this.stepPos2 = 0.7;
        this.color3 = "#F2F200";
        this.stepPos3 = 1.0;
    };

    container = document.getElementById( 'container' );

    camera = new THREE.PerspectiveCamera( 40, window.innerWidth / window.innerHeight, 0.01, 3000.0 );
    camera.position.z = 2.0;

    controls = new THREE.OrbitControls( camera, container );
    controls.center.set( 0.0, 0.0, 0.0 );

    // Load the 2D textures containing the Z slices.
    THREE.ImageUtils.crossOrigin = 'anonymous'; // avoid cross-origin errors when loading textures
    ['bonsai', 'teapot', 'foot'].forEach(function( name ) {
        var texture = THREE.ImageUtils.loadTexture('./images/' + name + '.raw.png');
        // Don't let it generate mipmaps to save memory and apply linear filtering to prevent use of LOD.
        texture.generateMipmaps = false;
        texture.minFilter = THREE.LinearFilter;
        texture.magFilter = THREE.LinearFilter;
        cubeTextures[name] = texture;
    });

    transferTexture = updateTransferFunction();

    var screenSize = new THREE.Vector2( window.innerWidth, window.innerHeight );
    rtTexture = new THREE.WebGLRenderTarget( screenSize.x, screenSize.y, {
        minFilter: THREE.LinearFilter,
        magFilter: THREE.LinearFilter,
        wrapS: THREE.ClampToEdgeWrapping,
        wrapT: THREE.ClampToEdgeWrapping,
        format: THREE.RGBFormat,
        type: THREE.FloatType,
        generateMipmaps: false
    } );

    var materialFirstPass = new THREE.ShaderMaterial( {
        vertexShader: document.getElementById( 'vertexShaderFirstPass' ).textContent,
        fragmentShader: document.getElementById( 'fragmentShaderFirstPass' ).textContent,
        side: THREE.BackSide
    } );

    materialSecondPass = new THREE.ShaderMaterial( {
        vertexShader: document.getElementById( 'vertexShaderSecondPass' ).textContent,
        fragmentShader: document.getElementById( 'fragmentShaderSecondPass' ).textContent,
        side: THREE.FrontSide,
        uniforms: {
            tex: { type: "t", value: rtTexture },
            cubeTex: { type: "t", value: cubeTextures['bonsai'] },
            transferTex: { type: "t", value: transferTexture },
            steps: { type: "1f", value: guiControls.steps },
            alphaCorrection: { type: "1f", value: guiControls.alphaCorrection }
        }
    } );

    sceneFirstPass = new THREE.Scene();
    sceneSecondPass = new THREE.Scene();

    var boxGeometry = new THREE.BoxGeometry(1.0, 1.0, 1.0);
    boxGeometry.doubleSided = true;

    var meshFirstPass = new THREE.Mesh( boxGeometry, materialFirstPass );
    var meshSecondPass = new THREE.Mesh( boxGeometry, materialSecondPass );

    sceneFirstPass.add( meshFirstPass );
    sceneSecondPass.add( meshSecondPass );

    renderer = new THREE.WebGLRenderer();
    container.appendChild( renderer.domElement );

    stats = new Stats();
    stats.domElement.style.position = 'absolute';
    stats.domElement.style.top = '0px';
    container.appendChild( stats.domElement );

    var gui = new dat.GUI();
    var modelSelected = gui.add(guiControls, 'model', [ 'bonsai', 'foot', 'teapot' ] );
    gui.add(guiControls, 'steps', 0.0, 512.0);
    gui.add(guiControls, 'alphaCorrection', 0.01, 5.0).step(0.01);

    modelSelected.onChange(function(value) {
        materialSecondPass.uniforms.cubeTex.value = cubeTextures[value];
    } );

    // Setup transfer function steps: one folder per color stop.
    [1, 2, 3].forEach(function( i ) {
        var folder = gui.addFolder('Step ' + i);
        folder.addColor(guiControls, 'color' + i).onChange(updateTextures);
        folder.add(guiControls, 'stepPos' + i, 0.0, 1.0).onChange(updateTextures);
        folder.open();
    });

    onWindowResize();
    window.addEventListener( 'resize', onWindowResize, false );
}

// Loads a texture from a URL. The returned texture object is filled in
// asynchronously once the image has finished downloading.
function imageLoad(url) {
    var loader = new THREE.TextureLoader();
    loader.crossOrigin = '';
    return loader.load(
        // resource URL
        url,
        // Function called when the resource is loaded
        function ( texture ) {
            texture.wrapS = THREE.RepeatWrapping;
            texture.wrapT = THREE.RepeatWrapping;
            texture.offset.x = 90 / (2 * Math.PI);
        },
        // Function called while the download progresses
        function ( xhr ) {
            console.log( (xhr.loaded / xhr.total * 100) + '% loaded' );
        },
        // Function called when the download fails
        function ( xhr ) {
            console.log( 'An error happened' );
        }
    );
}

function updateTextures(value) {
    materialSecondPass.uniforms.transferTex.value = updateTransferFunction();
}

function updateTransferFunction() {
    var canvas = document.createElement('canvas');
    canvas.height = 20;
    canvas.width = 256;

    var ctx = canvas.getContext('2d');

    var grd = ctx.createLinearGradient(0, 0, canvas.width - 1, canvas.height - 1);
    grd.addColorStop(guiControls.stepPos1, guiControls.color1);
    grd.addColorStop(guiControls.stepPos2, guiControls.color2);
    grd.addColorStop(guiControls.stepPos3, guiControls.color3);

    ctx.fillStyle = grd;
    ctx.fillRect(0, 0, canvas.width - 1, canvas.height - 1);

    var img = document.getElementById("transferFunctionImg");
    img.src = canvas.toDataURL();
    img.style.width = "256px";
    img.style.height = "128px";

    transferTexture = new THREE.Texture(canvas);
    transferTexture.wrapS = transferTexture.wrapT = THREE.ClampToEdgeWrapping;
    transferTexture.needsUpdate = true;

    return transferTexture;
}

function onWindowResize( event ) {
    camera.aspect = window.innerWidth / window.innerHeight;
    camera.updateProjectionMatrix();
    renderer.setSize( window.innerWidth, window.innerHeight );
}

function animate() {
    requestAnimationFrame( animate );
    render();
    stats.update();
}

function render() {
    var delta = clock.getDelta();

    // Render first pass and store the world space coords of the back face fragments into the texture.
    // (Legacy signature; newer three.js releases use renderer.setRenderTarget instead.)
    renderer.render( sceneFirstPass, camera, rtTexture, true );

    // Render the second pass and perform the volume rendering.
    renderer.render( sceneSecondPass, camera );

    materialSecondPass.uniforms.steps.value = guiControls.steps;
    materialSecondPass.uniforms.alphaCorrection.value = guiControls.alphaCorrection;
}
// Leandro R Barbagallo - 2015 - lebarba at gmail.com

// ---- Raw WebGL example: multiplying two textures on a quad ----
const VSHADER_SOURCE = `
attribute vec4 a_Position;
attribute vec2 a_uv;
varying vec2 v_uv;
void main() {
    gl_Position = a_Position;
    v_uv = a_uv;
}`;

const FSHADER_SOURCE = `
precision mediump float;
// Declare the samplers. sampler2D is a data type, just like vec2.
uniform sampler2D u_Sampler;
uniform sampler2D u_Sampler2;
varying vec2 v_uv;
void main() {
    // texture2D(sampler2D sampler, vec2 coord) is a built-in function that
    // returns the texel of the sampler's texture at the given coordinate.
    vec4 color = texture2D(u_Sampler, v_uv);
    vec4 color2 = texture2D(u_Sampler2, v_uv);
    gl_FragColor = color * color2;
}`;

// Note: main() is not invoked anywhere in this listing.
function main() {
    const canvas = document.getElementById('webgl');
    const gl = canvas.getContext("webgl");
    if (!gl) {
        console.log('Failed to get the rendering context for WebGL');
        return;
    }

    // initShaders is an external helper that is not defined in this listing;
    // it is expected to compile the program and store it in gl.program.
    if (!initShaders(gl, VSHADER_SOURCE, FSHADER_SOURCE)) {
        console.log('Failed to initialize shaders.');
        return;
    }

    gl.clearColor(0.0, 0.5, 0.5, 1.0);

    // Positions of the quad's 4 vertices.
    const verticesOfPosition = new Float32Array([
        // Start at the bottom-left corner, counter-clockwise.
        -0.5, -0.5,
         0.5, -0.5,
         0.5,  0.5,
        -0.5,  0.5,
    ]);

    // Texture coordinates of the 4 vertices.
    const uvs = new Float32Array([
        // Start at the bottom-left corner, counter-clockwise, matching the positions.
        0.0, 0.0,
        1.0, 0.0,
        1.0, 1.0,
        0.0, 1.0,
    ]);

    initVertexBuffers(gl, verticesOfPosition);
    initUvBuffers(gl, uvs);
    initTextures(gl);
    initMaskTextures(gl);
}

// Initializes the base texture (texture unit 0).
function initTextures(gl) {
    const img = new Image();
    // Request CORS permission so a cross-origin image can be used as a texture.
    img.crossOrigin = "";
    img.src = "./images/bonsai.raw.png";
    img.onload = () => {
        // Create the texture object.
        const texture = gl.createTexture();
        // Look up the sampler uniform.
        const u_Sampler = gl.getUniformLocation(gl.program, 'u_Sampler');
        if (!u_Sampler) {
            console.log('Failed to get the storage location of u_Sampler');
            return false;
        }
        // Flip the image's Y axis while unpacking (the image itself is unchanged).
        gl.pixelStorei(gl.UNPACK_FLIP_Y_WEBGL, true);
        // Activate the texture unit.
        gl.activeTexture(gl.TEXTURE0);
        // Bind the texture object.
        gl.bindTexture(gl.TEXTURE_2D, texture);
        // Configure the texture parameters.
        gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_MIN_FILTER, gl.LINEAR);
        gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_MAG_FILTER, gl.LINEAR);
        gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_WRAP_S, gl.CLAMP_TO_EDGE);
        gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_WRAP_T, gl.MIRRORED_REPEAT);
        // Upload the image into the texture object.
        gl.texImage2D(gl.TEXTURE_2D, 0, gl.RGB, gl.RGB, gl.UNSIGNED_BYTE, img);
        // Pass the texture unit to the fragment shader.
        gl.uniform1i(u_Sampler, 0);
        gl.clear(gl.COLOR_BUFFER_BIT);
        gl.drawArrays(gl.TRIANGLE_FAN, 0, 4);
    };
}

// Initializes the mask texture (texture unit 1).
function initMaskTextures(gl) {
    const img = new Image();
    img.src = "./images/teapot.raw.png";
    img.onload = () => {
        // Create the texture object.
        const texture = gl.createTexture();
        // Look up the sampler uniform.
        const u_Sampler = gl.getUniformLocation(gl.program, 'u_Sampler2');
        if (!u_Sampler) {
            console.log('Failed to get the storage location of u_Sampler2');
            return false;
        }
        // Flip the image's Y axis while unpacking (the image itself is unchanged).
        gl.pixelStorei(gl.UNPACK_FLIP_Y_WEBGL, true);
        // Activate the texture unit.
        gl.activeTexture(gl.TEXTURE1);
        // Bind the texture object.
        gl.bindTexture(gl.TEXTURE_2D, texture);
        // Configure the texture parameters.
        gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_MIN_FILTER, gl.LINEAR);
        gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_MAG_FILTER, gl.LINEAR);
        // Upload the image into the texture object.
        gl.texImage2D(gl.TEXTURE_2D, 0, gl.RGB, gl.RGB, gl.UNSIGNED_BYTE, img);
        // Pass the texture unit to the fragment shader.
        gl.uniform1i(u_Sampler, 1);
        gl.clear(gl.COLOR_BUFFER_BIT);
        gl.drawArrays(gl.TRIANGLE_FAN, 0, 4);
    };
}

function initVertexBuffers(gl, positions) {
    const vertexBuffer = gl.createBuffer();
    if (!vertexBuffer) {
        console.log('Failed to create the vertex buffer object');
        return -1;
    }
    gl.bindBuffer(gl.ARRAY_BUFFER, vertexBuffer);
    gl.bufferData(gl.ARRAY_BUFFER, positions, gl.STATIC_DRAW);
    const a_Position = gl.getAttribLocation(gl.program, 'a_Position');
    if (a_Position < 0) {
        console.log('Failed to get the storage location of a_Position');
        return -1;
    }
    gl.vertexAttribPointer(a_Position, 2, gl.FLOAT, false, 0, 0);
    gl.enableVertexAttribArray(a_Position);
}

function initUvBuffers(gl, uvs) {
    const uvsBuffer = gl.createBuffer();
    if (!uvsBuffer) {
        console.log('Failed to create the uvs buffer object');
        return -1;
    }
    gl.bindBuffer(gl.ARRAY_BUFFER, uvsBuffer);
    gl.bufferData(gl.ARRAY_BUFFER, uvs, gl.STATIC_DRAW);
    const a_uv = gl.getAttribLocation(gl.program, 'a_uv');
    if (a_uv < 0) {
        console.log('Failed to get the storage location of a_uv');
        return -1;
    }
    gl.vertexAttribPointer(a_uv, 2, gl.FLOAT, false, 0, 0);
    gl.enableVertexAttribArray(a_uv);
}
</script>

Environment setup

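You only need to focus on the shader code. Three.js and other 3D libraries abstract a lot away for you; to build this example in pure WebGL you would have to write a lot of additional code just to get it running. Getting started with WebGL shaders does not take much, just the following three steps:

1. Make sure you are using a modern browser with good WebGL support, such as the latest Firefox or Chrome.

2. Create a directory to store your experiments.

3. Copy the minified Three.js library into that directory.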
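For the first step, browser support can also be checked from code. The sketch below is one possible way to do it; the function name `supportsWebGL` is hypothetical and this is not the `Detector` helper used in the listing above. It relies only on the standard `getContext` method of a canvas element.

```javascript
// Returns true when the given canvas can provide a WebGL context.
// Some older browsers only expose the "experimental-webgl" name.
function supportsWebGL(canvas) {
  try {
    return Boolean(
      canvas.getContext("webgl") || canvas.getContext("experimental-webgl")
    );
  } catch (err) {
    // Treat a throwing getContext as "not supported".
    return false;
  }
}

// In a browser: supportsWebGL(document.createElement("canvas"))
```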

See also:

LearnOpenGL - Coordinate Systems

WebGL model view projection - Web APIs | MDN

GLSL Shaders - Game development | MDN

Explaining basic 3D theory - Game development | MDN

Lebarba - WebGL Volume Rendering made easy
