Workflow

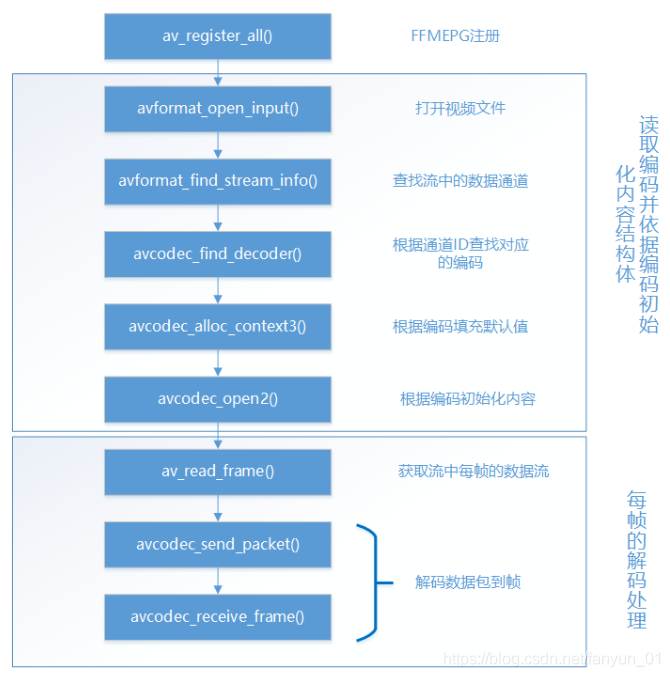

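- Goal: use FFmpeg to convert every frame of a video into an image file.

- Step 1: Open the input media file and retrieve information about the streams it contains.

- Step 2: Find the index of the video stream and use it to get the stream; read the codec ID from the stream's codec parameters, then use that ID to look up the decoder.

- Step 3: Create a decoder context (AVCodecContext) and copy the video stream's codec parameters (an AVCodecParameters struct) into it.

- Step 4: Read the video stream in a loop and decode each frame.

- Step 5: Process the decoded data: color-space conversion, resolution scaling, and optional pre/post filtering. This uses three libswscale functions: sws_getContext() to initialize, sws_scale() to convert, and sws_freeContext() to release the context.

- After conversion, add the header of the target image format and save the result to a file.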

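- References:

- "Converting video to images with FFmpeg", Jianshu: did not work for me.

- "Converting video to JPEG images in FFmpeg code", Geek.Fan's blog, CSDN: worked.

- Steps in that article: (1) open the video file; (2) get the video stream; (3) find the matching decoder; (4) initialize the decoder context; (5) set the codec parameters; (6) open the decoder; (7) read video packets; (8) send packets to the decoder; (9) receive the decoded frames.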
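Step 5 hands color conversion to sws_scale(). To show roughly what the color-space part of that step computes, here is a simplified stand-in that turns a YUV420P image into packed BGR24 (the format saveBMP() requests). It is a sketch under stated assumptions: yuv420p_to_bgr24 and clamp_u8 are hypothetical names, the input is assumed to be BT.601 limited range, each chroma sample is reused for its 2x2 block without interpolation, and no scaling or filtering is done. Real streams can use other color matrices or full range, which sws_scale() handles for you.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical helper: clamp an int to the 0..255 byte range.
static uint8_t clamp_u8(int v) {
    return static_cast<uint8_t>(std::min(255, std::max(0, v)));
}

// Hypothetical stand-in for the color-conversion part of sws_scale():
// YUV420P (three planes, chroma subsampled 2x2) -> packed BGR24.
// Assumes BT.601 limited range; coefficients are fixed-point, scaled by 256.
std::vector<uint8_t> yuv420p_to_bgr24(const uint8_t *y, int y_stride,
                                      const uint8_t *u, const uint8_t *v, int uv_stride,
                                      int width, int height) {
    assert(width > 0 && height > 0);
    std::vector<uint8_t> bgr(static_cast<size_t>(width) * height * 3);
    for (int row = 0; row < height; ++row) {
        for (int col = 0; col < width; ++col) {
            // One chroma sample covers a 2x2 block of luma samples.
            int c = y[row * y_stride + col] - 16;
            int d = u[(row / 2) * uv_stride + col / 2] - 128;
            int e = v[(row / 2) * uv_stride + col / 2] - 128;
            uint8_t *px = &bgr[(static_cast<size_t>(row) * width + col) * 3];
            px[0] = clamp_u8((298 * c + 516 * d + 128) >> 8);            // B
            px[1] = clamp_u8((298 * c - 100 * d - 208 * e + 128) >> 8);  // G
            px[2] = clamp_u8((298 * c + 409 * e + 128) >> 8);            // R
        }
    }
    return bgr;
}
```

For a 2x2 image, Y = 16 with U = V = 128 gives black (all bytes 0), and Y = 235 gives white (all bytes 255).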

Additional links

- "The meaning and use of #pragma pack(2)", Toryci's blog, CSDN

- "FFmpeg old vs. new API reference", schips, cnblogs

- FFmpeg: Image related (official documentation for av_image_get_buffer_size)

- "av_image_get_buffer_size and av_image_fill_arrays", jackuylove's blog (程序員宅基地)
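#pragma pack(2) matters for the BMP code in this note because BITMAPFILEHEADER places a 32-bit field right after a 16-bit one. With default alignment the compiler inserts 2 bytes of padding after bfType, so the struct no longer matches the 14-byte header on disk. The check below assumes a common ABI such as x86-64 or AArch64 with GCC or Clang, where uint32_t is 4-byte aligned; the struct names are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Default alignment: the compiler pads after bfType so that bfSize is 4-byte aligned.
struct FileHeaderNatural {
    uint16_t bfType;
    uint32_t bfSize;
    uint16_t bfReserved1;
    uint16_t bfReserved2;
    uint32_t bfOffBits;
};

#pragma pack(push, 2)
// Packed to 2-byte boundaries: matches the 14-byte BMP file header on disk.
struct FileHeaderPacked {
    uint16_t bfType;
    uint32_t bfSize;
    uint16_t bfReserved1;
    uint16_t bfReserved2;
    uint32_t bfOffBits;
};
#pragma pack(pop)

static_assert(sizeof(FileHeaderPacked) == 14, "BMP file header must be 14 bytes");
```

push/pop restores the previous packing afterwards; a bare #pragma pack(2) stays in effect for every struct declared after it until it is reset.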

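Background notes

Transferring files between two Ubuntu machines with scp

- Parts of the command, in order: the protocol (`scp`), the file to transfer, the receiver's account, the receiver's IP address, and the destination path:

- `scp test.mp4 root@192.168.253.131:/home/chy-cpabe/Videos`

- Enter the receiver account's password when prompted.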

avpicture_get_size

- avpicture_get_size is deprecated; use av_image_get_buffer_size() instead.

- Usage:

- old: `avpicture_get_size(AV_PIX_FMT_YUV420P, pCodecCtx->width, pCodecCtx->height);`

- new (the last argument, align, is set to 1 here, meaning rows are not padded; whether 1 is right depends on how the buffer will be used):

- `av_image_get_buffer_size(AV_PIX_FMT_YUV420P, pCodecCtx->width, pCodecCtx->height, 1);`
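To see what av_image_get_buffer_size() returns and what align changes, the arithmetic for AV_PIX_FMT_YUV420P can be reproduced by hand: each plane's row size (linesize) is rounded up to a multiple of align and multiplied by the plane's height, with the two chroma planes at half width and half height (rounded up). The function below is a hypothetical re-implementation for this one format, based on my understanding of libavutil's behavior; it does not call FFmpeg.

```cpp
#include <cassert>

// Round x up to a multiple of a (a > 0).
static int align_up(int x, int a) {
    return (x + a - 1) / a * a;
}

// Hypothetical helper: bytes needed for one YUV420P image of width x height
// when every plane's linesize is padded to a multiple of `align`.
int yuv420p_buffer_size(int width, int height, int align) {
    assert(width > 0 && height > 0 && align > 0);
    int luma_linesize = align_up(width, align);
    int chroma_w = (width + 1) / 2;   // chroma is subsampled 2x horizontally
    int chroma_h = (height + 1) / 2;  // ...and 2x vertically
    int chroma_linesize = align_up(chroma_w, align);
    // Y plane + U plane + V plane
    return luma_linesize * height + 2 * chroma_linesize * chroma_h;
}
```

For 1920x1080 this gives 3,110,400 bytes (width × height × 1.5). For an odd size such as 5x3, align = 1 gives 27 bytes, while align = 4 gives 40 because every row is padded.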

avpicture_fill

- avpicture_fill is deprecated; use av_image_fill_arrays() instead.

- Usage:

- old: `avpicture_fill((AVPicture *)pFrame, buffer, AV_PIX_FMT_YUV420P, pCodecCtx->width, pCodecCtx->height);`

- new (the last argument, align, is set to 1 here; whether 1 is right depends on how the buffer will be used):

- `av_image_fill_arrays(pFrame->data, pFrame->linesize, buffer, AV_PIX_FMT_YUV420P, pCodecCtx->width, pCodecCtx->height, 1);`
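av_image_fill_arrays() allocates nothing: it only points data[] into the caller's buffer and fills linesize[]. For YUV420P the three planes sit back to back (Y, then U, then V). A minimal sketch of that layout for this one format, assuming each linesize is rounded up to a multiple of align; PlanePointers and fill_yuv420p are hypothetical names, not FFmpeg API.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical mirror of what av_image_fill_arrays() sets up for YUV420P.
struct PlanePointers {
    uint8_t *data[3];
    int linesize[3];
};

static int round_up(int x, int a) { return (x + a - 1) / a * a; }

// Point the three planes into one contiguous buffer: Y, then U, then V.
PlanePointers fill_yuv420p(uint8_t *buffer, int width, int height, int align) {
    assert(buffer != nullptr && width > 0 && height > 0 && align > 0);
    PlanePointers p;
    p.linesize[0] = round_up(width, align);
    p.linesize[1] = p.linesize[2] = round_up((width + 1) / 2, align);
    int chroma_h = (height + 1) / 2;
    p.data[0] = buffer;                                // Y plane starts the buffer
    p.data[1] = p.data[0] + p.linesize[0] * height;    // U plane follows Y
    p.data[2] = p.data[1] + p.linesize[1] * chroma_h;  // V plane follows U
    return p;
}
```

This is also why the new call takes pFrame->data and pFrame->linesize directly instead of casting the frame to AVPicture *.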

avcodec_decode_video2

- The old decoding function has been split into two: avcodec_send_packet() and avcodec_receive_frame().

- Usage:

- old: `avcodec_decode_video2(pCodecCtx, pFrame, &got_picture, pPacket);`

- new: `avcodec_send_packet(pCodecCtx, pPacket);` followed by `avcodec_receive_frame(pCodecCtx, pFrame);`
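The new API behaves like a small state machine: avcodec_send_packet() queues input, avcodec_receive_frame() returns 0 for each available frame and AVERROR(EAGAIN) when it needs more input, and sending a NULL packet starts draining until AVERROR_EOF. To make that contract concrete without linking FFmpeg, the sketch below drives a hypothetical MockDecoder that holds each frame back by one packet, as some codecs do. The class, its Status values and the one-frame delay are assumptions for illustration, not FFmpeg's API.

```cpp
#include <cassert>
#include <deque>
#include <vector>

// Hypothetical stand-ins for 0, AVERROR(EAGAIN) and AVERROR_EOF.
enum class Status { Ok, Again, Eof };

// Hypothetical decoder: each packet becomes one frame, but a frame is only
// released once the next packet has arrived (one-frame delay) or when draining.
class MockDecoder {
public:
    void send_packet(const int *packet) {
        if (packet == nullptr) {  // NULL packet: enter draining mode
            draining_ = true;
            return;
        }
        pending_.push_back(*packet);
    }
    Status receive_frame(int &frame) {
        if (pending_.size() > 1 || (draining_ && !pending_.empty())) {
            frame = pending_.front();
            pending_.pop_front();
            return Status::Ok;
        }
        return draining_ ? Status::Eof : Status::Again;
    }

private:
    std::deque<int> pending_;
    bool draining_ = false;
};

// The loop shape from the notes: send one packet, then receive until it stops returning Ok.
std::vector<int> decode_all(const std::vector<int> &packets, bool drain) {
    MockDecoder dec;
    std::vector<int> frames;
    int frame = 0;
    for (int pkt : packets) {
        dec.send_packet(&pkt);
        while (dec.receive_frame(frame) == Status::Ok) frames.push_back(frame);
    }
    if (drain) {
        dec.send_packet(nullptr);  // flush the frames still held back
        while (dec.receive_frame(frame) == Status::Ok) frames.push_back(frame);
    }
    return frames;
}
```

With three packets, decode_all returns only frames 1 and 2 without draining, and all three with it. That is the reason for the empty packet sent after the read loop in the BMP program.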

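For example:

```cpp
ret = avcodec_send_packet(pCodecCtx, packet);
got_picture = avcodec_receive_frame(pCodecCtx, pFrame);
// got_picture == 0 means success: a frame was returned.
// Note: this is the opposite of avcodec_decode_video2(), where a non-zero got_picture meant a frame was decoded.
```

Or, receiving every frame that one packet produces:

```cpp
int ret = avcodec_send_packet(aCodecCtx, &pkt);
if (ret != 0) {
    printf("%s\n", "error");
    return;
}
// avcodec_receive_frame() returns 0 for each available frame
// and AVERROR(EAGAIN) once the decoder needs more input.
while (avcodec_receive_frame(aCodecCtx, frame) == 0) {
    // An audio or video frame was read: process the decoded frame here
}
```

Code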

```cpp
#include <cstdio>
#include <cstdlib>
#include <cstring>

extern "C" {
#include <libavformat/avformat.h>
#include <libavcodec/avcodec.h>
#include <libswscale/swscale.h>
#include <libavutil/imgutils.h>
}

// Encode one decoded frame as a JPEG file with the MJPEG encoder.
// Assumes the decoded frames are YUV420P, the format declared on the output stream below.
int SavePicture(AVFrame *pFrame, const char *out_name) {
    AVCodecContext *pCodeCtx = nullptr;
    AVFormatContext *pFormatCtx = avformat_alloc_context();
    // Set the output file format
    pFormatCtx->oformat = av_guess_format("mjpeg", NULL, NULL);
    // Create and initialize the output AVIOContext
    if (avio_open(&pFormatCtx->pb, out_name, AVIO_FLAG_READ_WRITE) < 0) {
        printf("Couldn't open output file.\n");
        return -1;
    }
    // Create the output stream
    AVStream *pAVStream = avformat_new_stream(pFormatCtx, 0);
    if (pAVStream == NULL) {
        return -1;
    }
    AVCodecParameters *parameters = pAVStream->codecpar;
    parameters->codec_id = pFormatCtx->oformat->video_codec;
    parameters->codec_type = AVMEDIA_TYPE_VIDEO;
    parameters->format = AV_PIX_FMT_YUV420P;
    parameters->width = pFrame->width;
    parameters->height = pFrame->height;

    const AVCodec *pCodec = avcodec_find_encoder(pAVStream->codecpar->codec_id);
    if (!pCodec) {
        printf("Could not find encoder\n");
        return -1;
    }
    pCodeCtx = avcodec_alloc_context3(pCodec);
    if (!pCodeCtx) {
        fprintf(stderr, "Could not allocate video codec context\n");
        exit(1);
    }
    if (avcodec_parameters_to_context(pCodeCtx, pAVStream->codecpar) < 0) {
        fprintf(stderr, "Failed to copy %s codec parameters to encoder context\n",
                av_get_media_type_string(AVMEDIA_TYPE_VIDEO));
        return -1;
    }
    pCodeCtx->time_base = AVRational{1, 25};
    if (avcodec_open2(pCodeCtx, pCodec, NULL) < 0) {
        printf("Could not open codec.\n");
        return -1;
    }
    int ret = avformat_write_header(pFormatCtx, NULL);
    if (ret < 0) {
        printf("Write_header fail\n");
        return -1;
    }
    // Encode: the encoder allocates the packet's data itself
    AVPacket *pkt = av_packet_alloc();
    ret = avcodec_send_frame(pCodeCtx, pFrame);
    if (ret < 0) {
        printf("Could not avcodec_send_frame\n");
        return -1;
    }
    // Get the encoded data
    ret = avcodec_receive_packet(pCodeCtx, pkt);
    if (ret < 0) {
        printf("Could not avcodec_receive_packet\n");
        return -1;
    }
    ret = av_write_frame(pFormatCtx, pkt);
    if (ret < 0) {
        printf("Could not av_write_frame\n");
        return -1;
    }
    av_packet_free(&pkt);
    av_write_trailer(pFormatCtx);
    avcodec_free_context(&pCodeCtx);  // frees the context (avcodec_close() alone would leak it)
    avio_close(pFormatCtx->pb);
    avformat_free_context(pFormatCtx);
    return 0;
}

int main(int argc, char **argv) {
    int ret = 0;
    const char *in_filename, *out_filename;
    AVFormatContext *fmt_ctx = NULL;
    const AVCodec *codec;
    AVCodecContext *codeCtx = NULL;
    AVStream *stream = NULL;
    int stream_index = 0;
    AVPacket avpkt;
    AVFrame *frame;
    int frame_count = 0;

    if (argc <= 2) {
        printf("Usage: %s <input file> <output directory>\n", argv[0]);
        exit(0);
    }
    in_filename = argv[1];
    out_filename = argv[2];
    if (avformat_open_input(&fmt_ctx, in_filename, NULL, NULL) < 0) {
        printf("Could not open source file %s\n", in_filename);
        exit(1);
    }
    if (avformat_find_stream_info(fmt_ctx, NULL) < 0) {
        printf("Could not find stream information\n");
        exit(1);
    }
    av_dump_format(fmt_ctx, 0, in_filename, 0);
    av_init_packet(&avpkt);
    avpkt.data = NULL;
    avpkt.size = 0;

    stream_index = av_find_best_stream(fmt_ctx, AVMEDIA_TYPE_VIDEO, -1, -1, NULL, 0);
    if (stream_index < 0) {
        fprintf(stderr, "Could not find %s stream in input file '%s'\n",
                av_get_media_type_string(AVMEDIA_TYPE_VIDEO), in_filename);
        return stream_index;
    }
    stream = fmt_ctx->streams[stream_index];
    codec = avcodec_find_decoder(stream->codecpar->codec_id);
    if (!codec) {
        return -1;
    }
    codeCtx = avcodec_alloc_context3(NULL);
    if (!codeCtx) {
        fprintf(stderr, "Could not allocate video codec context\n");
        exit(1);
    }
    if (avcodec_parameters_to_context(codeCtx, stream->codecpar) < 0) {
        fprintf(stderr, "Failed to copy %s codec parameters to decoder context\n",
                av_get_media_type_string(AVMEDIA_TYPE_VIDEO));
        return -1;
    }
    if (avcodec_open2(codeCtx, codec, NULL) < 0) {
        fprintf(stderr, "Could not open codec\n");
        return -1;
    }
    frame = av_frame_alloc();
    if (!frame) {
        fprintf(stderr, "Could not allocate video frame\n");
        exit(1);
    }

    char buf[1024];
    while (av_read_frame(fmt_ctx, &avpkt) >= 0) {
        if (avpkt.stream_index == stream_index) {
            ret = avcodec_send_packet(codeCtx, &avpkt);
            // One packet can produce zero or more frames; give each its own file
            while (ret >= 0 && avcodec_receive_frame(codeCtx, frame) == 0) {
                snprintf(buf, sizeof(buf), "%s/picture-%d.jpg", out_filename, frame_count);
                SavePicture(frame, buf);
                frame_count++;
            }
        }
        av_packet_unref(&avpkt);  // always release the packet, even if sending failed
    }
    // Drain: a NULL packet flushes the frames the decoder is still holding
    avcodec_send_packet(codeCtx, NULL);
    while (avcodec_receive_frame(codeCtx, frame) == 0) {
        snprintf(buf, sizeof(buf), "%s/picture-%d.jpg", out_filename, frame_count);
        SavePicture(frame, buf);
        frame_count++;
    }
    av_frame_free(&frame);
    avcodec_free_context(&codeCtx);
    avformat_close_input(&fmt_ctx);
    return 0;
}
```

Run results

BMP version (did not work at first)

The first version of this program did not work. Likely causes: it looked up the decoder with avcodec_find_encoder() instead of avcodec_find_decoder(); it declared biPlans/biBitCount as 32-bit LONG, which makes the info header 44 bytes instead of the required 40; it treated a non-zero return from avcodec_receive_frame() as success, although 0 means a frame was returned; and it called fclose() on a FILE * that was never opened. The listing below corrects these, and also pads BMP rows to 4 bytes and frees the RGB buffer properly.

```cpp
#include <cstdio>
#include <cstdlib>
#include <cstring>
#include <cstdint>

extern "C" {
#include <libavformat/avformat.h>
#include <libavcodec/avcodec.h>
#include <libswscale/swscale.h>
#include <libavutil/imgutils.h>
}

#define WORD uint16_t
#define DWORD uint32_t
#define LONG int32_t

#pragma pack(2)
// Bitmap file header: image type, file size, offset of the pixel data, and two unused reserved fields
typedef struct tagBITMAPFILEHEADER {
    WORD bfType;
    DWORD bfSize;
    WORD bfReserved1;
    WORD bfReserved2;
    DWORD bfOffBits;
} BITMAPFILEHEADER, *PBITMAPFILEHEADER;

// Bitmap info header: header size, width and height, color depth, compression,
// image data size and a few other parameters (40 bytes in total)
typedef struct tagBITMAPINFOHEADER {
    DWORD biSize;
    LONG biWidth;
    LONG biHeight;
    WORD biPlanes;    // 16-bit field
    WORD biBitCount;  // 16-bit field
    DWORD biCompression;
    DWORD biSizeImage;
    LONG biXPelsPerMeter;
    LONG biYPelsPerMeter;
    DWORD biClrUsed;
    DWORD biClrImportant;
} BITMAPINFOHEADER, *PBITMAPINFOHEADER;
#pragma pack()  // restore the default packing

// Convert a frame to BGR24 and save it as a BMP file
void saveBMP(struct SwsContext *img_convert_ctx, AVFrame *frame, const char *filename) {
    // 1. Convert YUV420P => BGR24 (BMP stores pixels as B, G, R)
    int w = frame->width;
    int h = frame->height;
    // BMP rows must be padded to a multiple of 4 bytes, so use align = 4
    int numBytes = av_image_get_buffer_size(AV_PIX_FMT_BGR24, w, h, 4);
    uint8_t *buffer = (uint8_t *)av_malloc(numBytes);
    AVFrame *pFrameRGB = av_frame_alloc();
    av_image_fill_arrays(pFrameRGB->data, pFrameRGB->linesize, buffer, AV_PIX_FMT_BGR24, w, h, 4);
    /* Convert.
       frame->data and pFrameRGB->data are the input and output buffers;
       frame->linesize and pFrameRGB->linesize are the bytes per row of input and output.
       The 4th argument is the first row of the slice to process,
       the 5th is the height of the source slice. */
    sws_scale(img_convert_ctx, frame->data, frame->linesize, 0, h,
              pFrameRGB->data, pFrameRGB->linesize);

    // 2. BITMAPINFOHEADER (zeroed first, so biCompression = 0, i.e. BI_RGB)
    BITMAPINFOHEADER header;
    memset(&header, 0, sizeof(header));
    header.biSize = sizeof(BITMAPINFOHEADER);
    header.biWidth = w;
    header.biHeight = -h;  // negative height: rows are stored top-down
    header.biPlanes = 1;
    header.biBitCount = 24;

    // 3. BITMAPFILEHEADER
    BITMAPFILEHEADER bmpFileHeader = {0,};
    bmpFileHeader.bfType = 0x4d42;  // 'BM' on a little-endian machine
    bmpFileHeader.bfSize = sizeof(BITMAPFILEHEADER) + sizeof(BITMAPINFOHEADER) + numBytes;
    bmpFileHeader.bfOffBits = sizeof(BITMAPFILEHEADER) + sizeof(BITMAPINFOHEADER);

    FILE *pf = fopen(filename, "wb");
    if (pf) {
        fwrite(&bmpFileHeader, sizeof(BITMAPFILEHEADER), 1, pf);
        fwrite(&header, sizeof(BITMAPINFOHEADER), 1, pf);
        fwrite(pFrameRGB->data[0], 1, numBytes, pf);
        fclose(pf);
    }
    // 4. Free resources
    av_free(buffer);
    av_frame_free(&pFrameRGB);
}

// Save one 8-bit plane (e.g. the Y plane) as a PGM image; not used by main()
static void pgm_save(unsigned char *buf, int wrap, int x_size, int y_size, const char *filename) {
    FILE *f = fopen(filename, "wb");
    if (!f) return;
    fprintf(f, "P5\n%d %d\n%d\n", x_size, y_size, 255);
    for (int i = 0; i < y_size; ++i) {
        fwrite(buf + i * wrap, 1, x_size, f);
    }
    fclose(f);
}

// Decode one packet and save every frame it produces
static int decode_write_frame(const char *outfilename, AVCodecContext *avctx,
                              struct SwsContext *img_convert_ctx, AVFrame *frame,
                              int *frame_count, AVPacket *pkt, int last) {
    char buf[1024];
    // Send the packet to the decoder (data == NULL and size == 0 starts draining)
    int ret = avcodec_send_packet(avctx, pkt);
    if (ret < 0) {
        fprintf(stderr, "Error while decoding frame %d\n", *frame_count);
        return ret;
    }
    // avcodec_receive_frame() returns 0 when a frame was returned
    while (avcodec_receive_frame(avctx, frame) == 0) {
        printf("Saving %s frame %3d\n", last ? "last" : " ", *frame_count);
        fflush(stdout);
        // The frame is owned by the decoder; no need to free it here
        snprintf(buf, sizeof(buf), "%s-%d.bmp", outfilename, *frame_count);
        // Save the decoded frame as an RGB image
        saveBMP(img_convert_ctx, frame, buf);
        (*frame_count)++;
    }
    return 0;
}

int main(int argc, char **argv) {
    int ret;
    const char *filename, *outfilename;       // input file, output file prefix
    AVFormatContext *fmt_ctx = nullptr;       // format context of the input file
    const AVCodec *codec;                     // decoder
    AVCodecContext *codec_context = nullptr;  // decoder context
    AVStream *stream = nullptr;               // the video stream
    int stream_index = 0;
    int frame_count = 0;
    AVFrame *frame;                           // decoded frame
    struct SwsContext *img_convert_ctx;       // image conversion context
    AVPacket avPacket;

    if (argc < 3) {
        fprintf(stderr, "Usage: %s <input file> <output file prefix>\n", argv[0]);
        exit(0);
    }
    filename = argv[1];
    outfilename = argv[2];
    // Open the input file and create the format context
    if (avformat_open_input(&fmt_ctx, filename, NULL, NULL) < 0) {
        fprintf(stderr, "Could not open source file %s\n", filename);
        exit(1);
    }
    // Retrieve the stream information of the media file
    if (avformat_find_stream_info(fmt_ctx, NULL) < 0) {
        fprintf(stderr, "Could not find stream information\n");
        exit(1);
    }
    // Print details of the input file
    av_dump_format(fmt_ctx, 0, filename, 0);
    av_init_packet(&avPacket);
    // Find the video stream; the return value is the stream index
    ret = av_find_best_stream(fmt_ctx, AVMEDIA_TYPE_VIDEO, -1, -1, NULL, 0);
    if (ret < 0) {
        fprintf(stderr, "Could not find %s stream in input file '%s'\n",
                av_get_media_type_string(AVMEDIA_TYPE_VIDEO), filename);
        return ret;
    }
    // Get the stream by its index
    stream_index = ret;
    stream = fmt_ctx->streams[stream_index];
    // Look up the decoder from the codec ID in the stream's codec parameters
    codec = avcodec_find_decoder(stream->codecpar->codec_id);
    if (!codec) {
        fprintf(stderr, "Failed to find %s codec\n", av_get_media_type_string(AVMEDIA_TYPE_VIDEO));
        return AVERROR(EINVAL);
    }
    // Allocate the decoder context
    codec_context = avcodec_alloc_context3(codec);
    if (!codec_context) {
        fprintf(stderr, "Could not allocate video codec context\n");
        exit(1);
    }
    // Copy the video stream's codec parameters into the decoder context
    if (avcodec_parameters_to_context(codec_context, stream->codecpar) < 0) {
        fprintf(stderr, "Failed to copy %s codec parameters to decoder context\n",
                av_get_media_type_string(AVMEDIA_TYPE_VIDEO));
        return -1;
    }
    // Open the decoder
    if (avcodec_open2(codec_context, codec, NULL) < 0) {
        fprintf(stderr, "Could not open codec\n");
        exit(1);
    }
    /* Initialize the image conversion context:
       sws_getContext(int srcW, int srcH, enum AVPixelFormat srcFormat,
                      int dstW, int dstH, enum AVPixelFormat dstFormat,
                      int flags, SwsFilter *srcFilter,
                      SwsFilter *dstFilter, const double *param);
       srcW/srcH/srcFormat: width, height and pixel format of the source image,
       e.g. AV_PIX_FMT_YUV420P or AV_PIX_FMT_RGB24.
       dstW/dstH/dstFormat: width, height and pixel format of the output image.
       flags: the scaling algorithm, e.g. SWS_BICUBIC, SWS_BICUBLIN, SWS_POINT, SWS_SINC.
       The last 3 parameters are not needed here and are all NULL. */
    img_convert_ctx = sws_getContext(codec_context->width, codec_context->height,
                                     codec_context->pix_fmt,
                                     codec_context->width, codec_context->height,
                                     AV_PIX_FMT_BGR24, SWS_BICUBIC, NULL, NULL, NULL);
    if (img_convert_ctx == NULL) {
        fprintf(stderr, "cannot initialize the conversion context\n");
        exit(1);
    }
    frame = av_frame_alloc();
    if (!frame) {
        fprintf(stderr, "Could not allocate video frame\n");
        exit(1);
    }
    // Read the media file packet by packet
    while (av_read_frame(fmt_ctx, &avPacket) >= 0) {
        // Decode only packets that belong to the video stream
        if (avPacket.stream_index == stream_index) {
            if (decode_write_frame(outfilename, codec_context, img_convert_ctx, frame,
                                   &frame_count, &avPacket, 0) < 0) {
                exit(1);
            }
        }
        av_packet_unref(&avPacket);
    }
    /* Some codecs, such as MPEG, transmit the I- and P-frames with a
       latency of one frame. You must do the following to have a
       chance to get the last frame of the video. */
    avPacket.data = NULL;
    avPacket.size = 0;
    decode_write_frame(outfilename, codec_context, img_convert_ctx, frame,
                       &frame_count, &avPacket, 1);

    avformat_close_input(&fmt_ctx);
    sws_freeContext(img_convert_ctx);
    avcodec_free_context(&codec_context);
    av_frame_free(&frame);
    return 0;
}
```
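An alternative to #pragma pack for the BMP headers is to serialize each field explicitly in little-endian order, which also sidesteps byte-order differences between machines. The sketch below builds a complete 24-bit BMP in memory from top-down BGR24 pixels and pads each row to a multiple of 4 bytes, as the format requires. make_bmp and put_le are hypothetical names, and the input is assumed to have no row padding (stride = width × 3).

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Append the low `bytes` bytes of `value` in little-endian order.
static void put_le(std::vector<uint8_t> &out, uint32_t value, int bytes) {
    for (int i = 0; i < bytes; ++i) out.push_back(static_cast<uint8_t>(value >> (8 * i)));
}

// Build a 24-bit BMP file from tightly packed, top-down BGR24 pixels.
std::vector<uint8_t> make_bmp(const uint8_t *bgr, int width, int height) {
    assert(bgr != nullptr && width > 0 && height > 0);
    const uint32_t row_bytes = static_cast<uint32_t>(width) * 3;
    const uint32_t stride = (row_bytes + 3) & ~3u;  // rows padded to 4 bytes
    const uint32_t image_size = stride * static_cast<uint32_t>(height);
    const uint32_t offset = 14 + 40;                // file header + info header

    std::vector<uint8_t> out;
    out.reserve(offset + image_size);
    // BITMAPFILEHEADER (14 bytes)
    out.push_back('B');
    out.push_back('M');
    put_le(out, offset + image_size, 4);  // bfSize
    put_le(out, 0, 4);                    // bfReserved1, bfReserved2
    put_le(out, offset, 4);               // bfOffBits
    // BITMAPINFOHEADER (40 bytes)
    put_le(out, 40, 4);                               // biSize
    put_le(out, static_cast<uint32_t>(width), 4);     // biWidth
    put_le(out, static_cast<uint32_t>(-height), 4);   // biHeight < 0: top-down rows
    put_le(out, 1, 2);                                // biPlanes
    put_le(out, 24, 2);                               // biBitCount
    put_le(out, 0, 4);                                // biCompression = BI_RGB
    put_le(out, image_size, 4);                       // biSizeImage
    put_le(out, 0, 4);                                // biXPelsPerMeter
    put_le(out, 0, 4);                                // biYPelsPerMeter
    put_le(out, 0, 4);                                // biClrUsed
    put_le(out, 0, 4);                                // biClrImportant
    // Pixel rows, each followed by zero bytes up to the stride
    for (int row = 0; row < height; ++row) {
        const uint8_t *src = bgr + static_cast<size_t>(row) * row_bytes;
        out.insert(out.end(), src, src + row_bytes);
        out.insert(out.end(), static_cast<size_t>(stride - row_bytes), uint8_t{0});
    }
    return out;
}
```

Writing the returned vector with fwrite(bmp.data(), 1, bmp.size(), pf) gives the 54-byte header plus padded rows that saveBMP() is meant to produce, without depending on struct packing. A 5x3 image, for example, has 15-byte rows padded to 16, so the file is 54 + 48 = 102 bytes.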
