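OpenCV: Introduction to the ORB Feature Extraction and Matching API, with Usage Examples

- Because ORB is fast, it is widely used for pose estimation and visual odometry in visual SLAM, for feature extraction and matching in image processing, and for image stitching.

- This article gives detailed usage examples and shows the results they produce.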

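1. API overview

- Create

    static Ptr<ORB> cv::ORB::create(
        int nfeatures = 500,        // maximum number of features to retain
        float scaleFactor = 1.2f,   // pyramid decimation ratio between levels (greater than 1)
        int nlevels = 8,            // number of pyramid levels
        int edgeThreshold = 31,     // size of the image border where no features are detected
        int firstLevel = 0,         // pyramid level that holds the source image
        int WTA_K = 2,              // number of points compared for each element of the oriented BRIEF descriptor
        ORB::ScoreType scoreType = ORB::HARRIS_SCORE,  // method used to rank the features
        int patchSize = 31,         // size of the patch used by the oriented BRIEF descriptor
        int fastThreshold = 20      // threshold of the FAST detector
    )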
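To make the roles of `scaleFactor`, `nlevels` and `firstLevel` concrete, here is a minimal standard-library sketch of how the scale of each pyramid level follows from them. It assumes the usual convention that level `firstLevel` holds the source resolution and that each further level is smaller by a factor of `scaleFactor`. The function names `pyramidScales` and `levelWidth` are hypothetical and not part of OpenCV, and the rounding may differ slightly from what the library does internally.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Scale of every pyramid level relative to the source image, assuming
// level `firstLevel` has scale 1.0 (hypothetical helper, not an OpenCV call).
std::vector<double> pyramidScales(int nlevels, double scaleFactor, int firstLevel = 0) {
    assert(nlevels > 0 && scaleFactor > 1.0);
    std::vector<double> scales(nlevels);
    for (int level = 0; level < nlevels; ++level) {
        scales[level] = std::pow(scaleFactor, level - firstLevel);
    }
    return scales;
}

// Approximate width of the image at a level: source width divided by the scale.
int levelWidth(int inputWidth, double scale) {
    return static_cast<int>(std::lround(inputWidth / scale));
}
```

With the default values (`nlevels = 8`, `scaleFactor = 1.2f`), the coarsest level is about 1.2^7 ≈ 3.58 times smaller than the source image, which gives a rough idea of the range of scales ORB can cover.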

- Detect

    void cv::Feature2D::detect(
        InputArray image,                   // input image
        std::vector<KeyPoint>& keypoints,   // output: the detected keypoints
        InputArray mask = noArray()         // optional mask restricting where keypoints are searched
    )

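- Compute descriptors (the overload shown here takes a set of images; the examples below use the single-image overload)

    void cv::Feature2D::compute(
        InputArrayOfArrays images,                        // input images
        std::vector<std::vector<KeyPoint>>& keypoints,    // keypoints of each image
        OutputArrayOfArrays descriptors                   // output: the computed descriptors
    )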

- Detect and compute in one call

    void cv::Feature2D::detectAndCompute(
        InputArray image,
        InputArray mask,
        std::vector<KeyPoint>& keypoints,
        OutputArray descriptors,
        bool useProvidedKeypoints = false
    )

- Draw keypoints

    void cv::drawKeypoints(
        InputArray image,
        const std::vector<KeyPoint>& keypoints,
        InputOutputArray outImage,
        const Scalar& color = Scalar::all(-1),
        DrawMatchesFlags flags = DrawMatchesFlags::DEFAULT
    )

- Draw matched point pairs

    void cv::drawMatches(
        InputArray img1,
        const std::vector<KeyPoint>& keypoints1,
        InputArray img2,
        const std::vector<KeyPoint>& keypoints2,
        const std::vector<DMatch>& matches1to2,
        InputOutputArray outImg,
        const Scalar& matchColor = Scalar::all(-1),
        const Scalar& singlePointColor = Scalar::all(-1),
        const std::vector<char>& matchesMask = std::vector<char>(),
        DrawMatchesFlags flags = DrawMatchesFlags::DEFAULT
    )

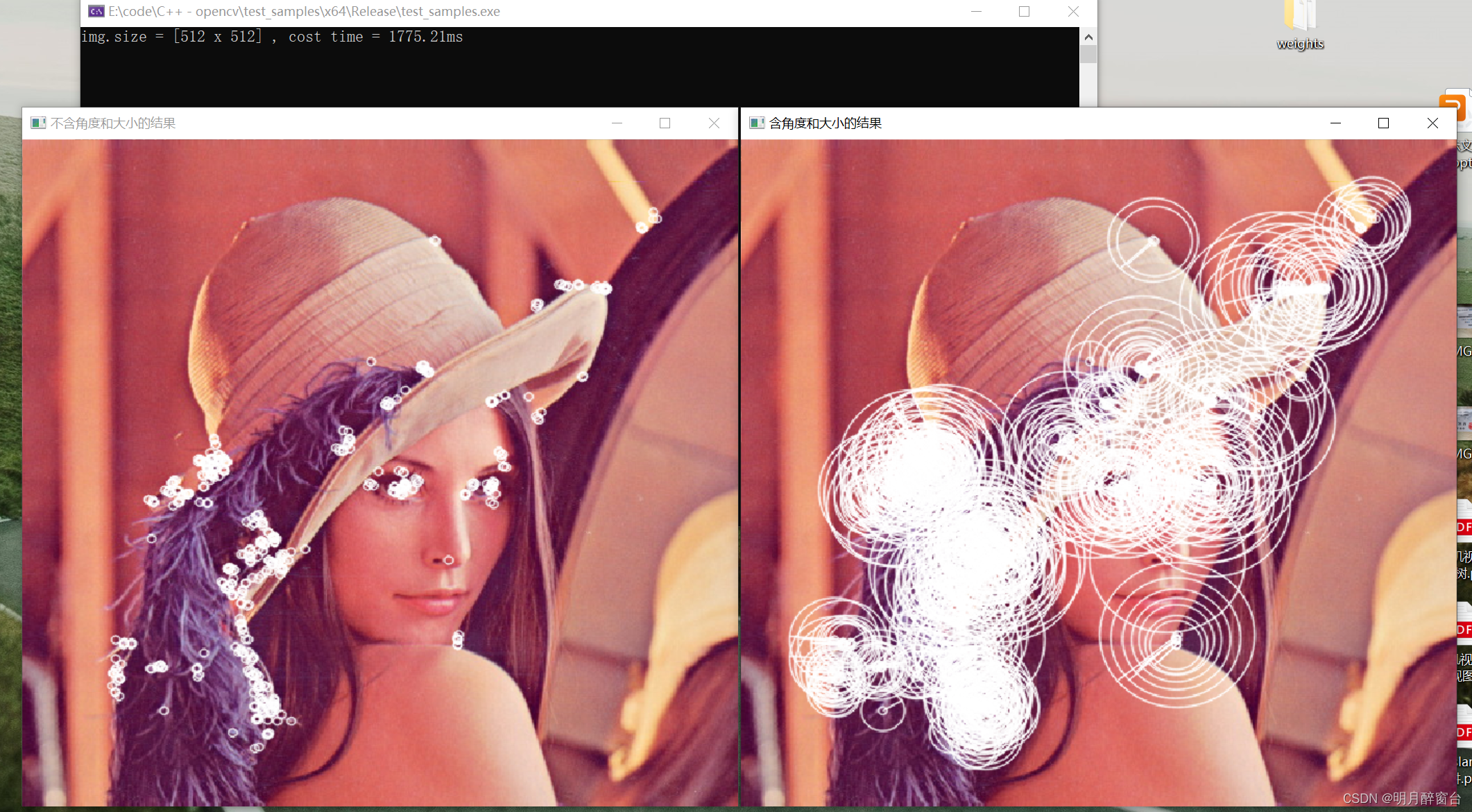

2. Feature extraction

- This section covers keypoint detection and descriptor computation. The implementation is as follows:

    #include <iostream>
    #include <string>
    #include <vector>
    #include <opencv2/opencv.hpp>

    using namespace std;
    using namespace cv;

    int main()
    {
        Mat img = imread("./data/test3/lena.png");
        if (img.empty())
        {
            cout << "Failed to read the image!" << endl;
            return -1;
        }

        // Create the ORB detector
        Ptr<ORB> orb = ORB::create(500, 1.2f);
        int64 t1 = getTickCount();
        vector<KeyPoint> Keypoints;
        Mat descriptions;
    #if 0
        // Detect the ORB keypoints
        orb->detect(img, Keypoints);
        // Compute the ORB descriptors
        orb->compute(img, Keypoints, descriptions);
    #else
        // Detect and compute in a single call
        orb->detectAndCompute(img, cv::Mat(), Keypoints, descriptions);
    #endif
        double t2 = (getTickCount() - t1) / getTickFrequency() * 1000;
        cout << "img.size = " << img.size() << " , cost time = " << t2 << "ms\n";

        // Draw into two separate outputs so the second drawing does not
        // start from an image that already has keypoints on it
        Mat imgPlain, imgAngle;
        // Result without orientation and size
        drawKeypoints(img, Keypoints, imgPlain, Scalar(255, 255, 255));
        // Result with orientation and size
        drawKeypoints(img, Keypoints, imgAngle, Scalar(255, 255, 255), DrawMatchesFlags::DRAW_RICH_KEYPOINTS);

        // Show the results
        string wname1 = "Without orientation and size", wname2 = "With orientation and size";
        namedWindow(wname1, WINDOW_NORMAL);
        namedWindow(wname2, WINDOW_NORMAL);
        imshow(wname1, imgPlain);
        imshow(wname2, imgAngle);
        waitKey(0);
        return 0;
    }

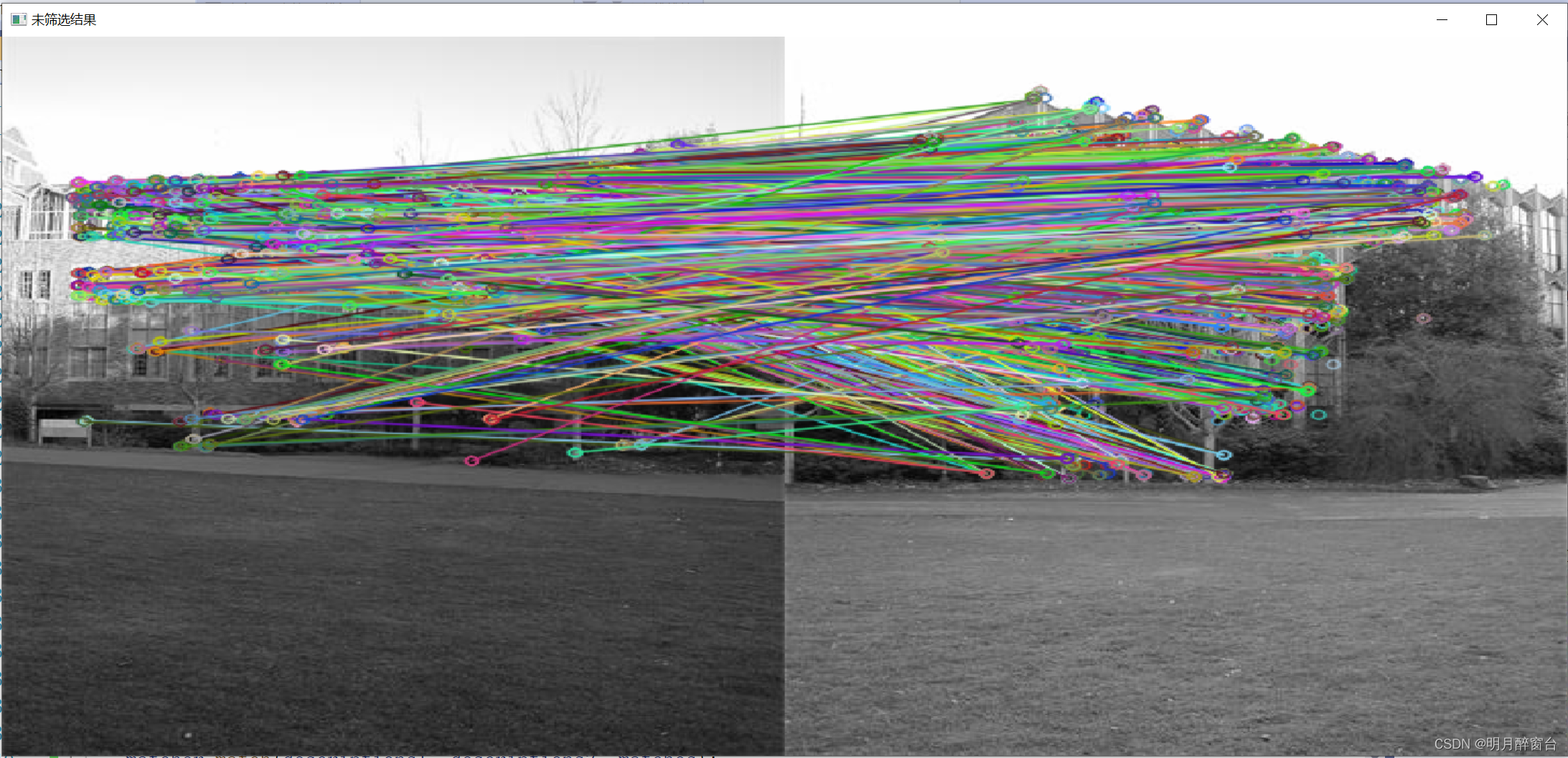
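The orientation drawn by `DRAW_RICH_KEYPOINTS` comes from ORB's intensity-centroid idea: the angle of a keypoint is the direction from the patch center to the intensity-weighted centroid of the patch. The following is only a simplified sketch of that idea using the standard library. It assumes a square patch (ORB itself uses a circular one) and the function name `patchAngleDegrees` is hypothetical. Angles are in degrees in [0, 360), with the y axis pointing down as in image coordinates.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Orientation of a square grayscale patch by the intensity-centroid method.
// `patch` is row-major with side * side pixels and `side` must be odd.
// Simplified illustration, not OpenCV's implementation.
double patchAngleDegrees(const std::vector<unsigned char>& patch, int side) {
    assert(side > 0 && side % 2 == 1);
    assert(patch.size() == static_cast<std::size_t>(side) * side);
    const int half = side / 2;
    double m10 = 0.0, m01 = 0.0;  // first-order moments about the patch center
    for (int y = -half; y <= half; ++y) {
        for (int x = -half; x <= half; ++x) {
            const double intensity = patch[(y + half) * side + (x + half)];
            m10 += x * intensity;
            m01 += y * intensity;
        }
    }
    const double kPi = std::acos(-1.0);
    double angle = std::atan2(m01, m10) * 180.0 / kPi;
    if (angle < 0.0) angle += 360.0;
    return angle;
}
```

For example, a patch whose only bright pixel lies to the right of the center has an angle of 0 degrees, and one whose bright pixel lies below the center has an angle of 90 degrees.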

3. Feature matching

- Brute-force matching:

    #include <algorithm>
    #include <iostream>
    #include <string>
    #include <vector>
    #include <opencv2/opencv.hpp>

    using namespace std;
    using namespace cv;

    // The original listing calls orb_features without showing it. This definition
    // is an assumption based on section 2: detect keypoints and compute descriptors.
    void orb_features(const Mat& img, vector<KeyPoint>& keypoints, Mat& descriptions)
    {
        Ptr<ORB> orb = ORB::create(500, 1.2f);
        orb->detectAndCompute(img, noArray(), keypoints, descriptions);
    }

    int main()
    {
        // Read both images as grayscale
        Mat img1 = imread("./data/test3/1.jpg", IMREAD_GRAYSCALE);
        Mat img2 = imread("./data/test3/2.jpg", IMREAD_GRAYSCALE);
        if (img1.empty() || img2.empty())
        {
            cout << "img.empty!!!\n";
            return -1;
        }

        // Extract the ORB keypoints and descriptors
        vector<KeyPoint> Keypoints1, Keypoints2;
        Mat descriptions1, descriptions2;
        orb_features(img1, Keypoints1, descriptions1);
        orb_features(img2, Keypoints2, descriptions2);

        // Match the descriptors
        vector<DMatch> matches;
        BFMatcher matcher(NORM_HAMMING);  // brute-force matcher using the Hamming distance
        matcher.match(descriptions1, descriptions2, matches);
        cout << "matches = " << matches.size() << endl;

        // Find the smallest and largest Hamming distance among the matches
        double min_dist = 10000, max_dist = 0;
        for (size_t i = 0; i < matches.size(); ++i)
        {
            double dist = matches[i].distance;
            min_dist = min_dist < dist ? min_dist : dist;
            max_dist = max_dist > dist ? max_dist : dist;
        }
        cout << "min_dist = " << min_dist << endl;
        cout << "max_dist = " << max_dist << endl;

        // Reject matches whose distance is too large
        vector<DMatch> goodmatches;
        for (size_t i = 0; i < matches.size(); ++i)
        {
            if (matches[i].distance <= std::max(1.8 * min_dist, 30.0))  // needs tuning
            {
                goodmatches.push_back(matches[i]);
            }
        }
        cout << "good_min = " << goodmatches.size() << endl;

        // Draw the results
        Mat outimg, outimg1;
        drawMatches(img1, Keypoints1, img2, Keypoints2, matches, outimg);
        drawMatches(img1, Keypoints1, img2, Keypoints2, goodmatches, outimg1);
        string wname1 = "Unfiltered matches", wname2 = "Filtered by minimum Hamming distance";
        namedWindow(wname1, WINDOW_NORMAL);
        namedWindow(wname2, WINDOW_NORMAL);
        imshow(wname1, outimg);
        imshow(wname2, outimg1);
        waitKey(0);
        return 0;
    }

The result is shown below:

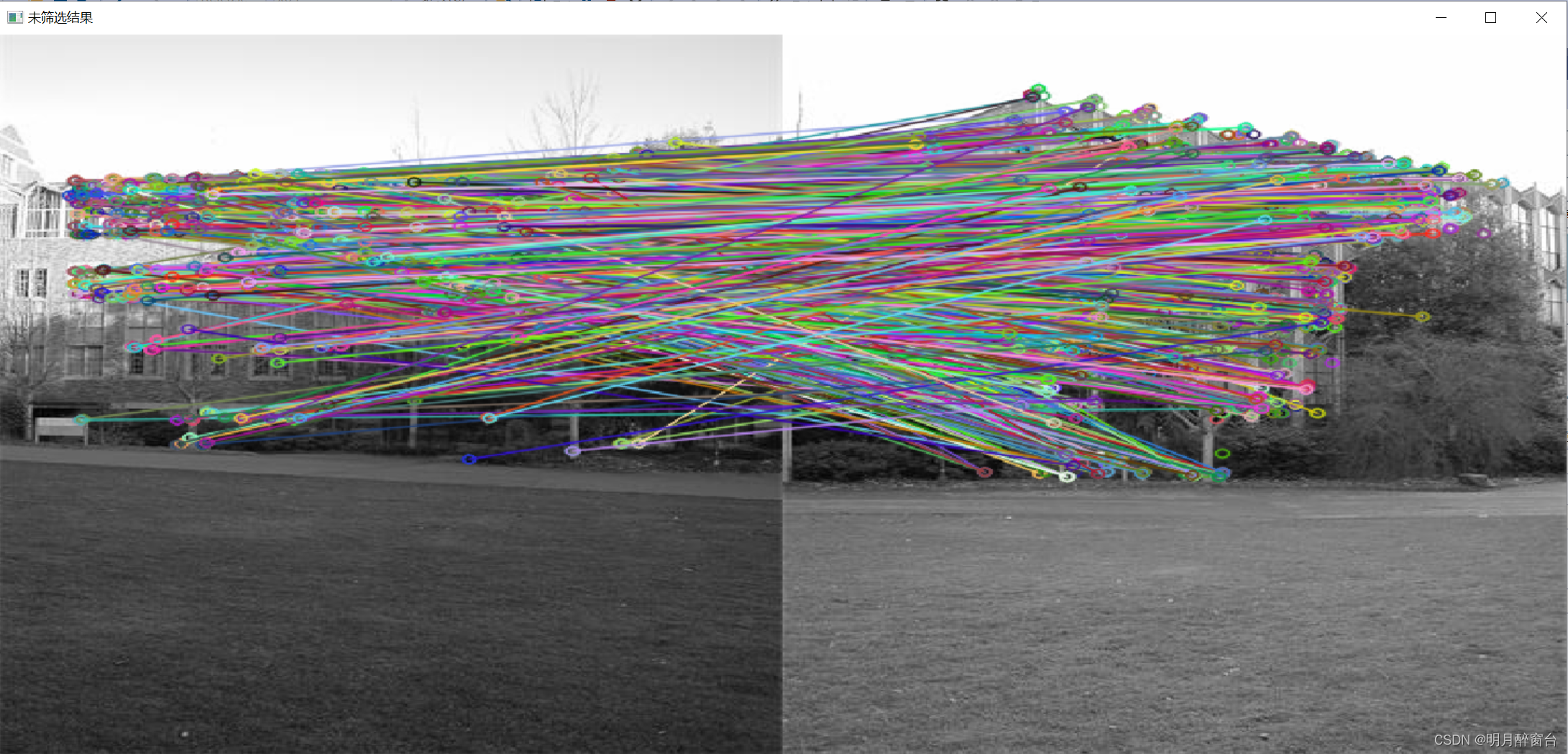
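`BFMatcher(NORM_HAMMING)` compares ORB descriptors by Hamming distance, that is, the number of bits in which two binary descriptors differ. An ORB descriptor is 32 bytes (256 bits), so the distance lies between 0 and 256, which puts the floor of 30 used in the filter above into perspective. As a minimal standard-library sketch of the measure itself (the function name `hammingDistance` is hypothetical, and OpenCV's own implementation is optimized differently):

```cpp
#include <bitset>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Number of differing bits between two binary descriptors of equal length.
int hammingDistance(const std::vector<std::uint8_t>& a,
                    const std::vector<std::uint8_t>& b) {
    assert(a.size() == b.size());
    int distance = 0;
    for (std::size_t i = 0; i < a.size(); ++i) {
        // XOR leaves a 1 in every bit position where the two bytes differ
        distance += static_cast<int>(std::bitset<8>(a[i] ^ b[i]).count());
    }
    return distance;
}
```

Two identical descriptors have distance 0, and two 32-byte descriptors that differ in every bit have distance 256.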

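- Nearest-neighbor (FLANN) matching. Only the matching step changes; the rest of the program is the same as in the brute-force example: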

    // The default FLANN index expects floating-point descriptors,
    // so convert ORB's 8-bit descriptors first (each one checked separately)
    if (descriptions1.type() != CV_32F)
    {
        descriptions1.convertTo(descriptions1, CV_32F);
    }
    if (descriptions2.type() != CV_32F)
    {
        descriptions2.convertTo(descriptions2, CV_32F);
    }

    // Match the descriptors
    vector<DMatch> matches;
    FlannBasedMatcher matcher;  // default index; on CV_32F data the distance is Euclidean, not Hamming
    matcher.match(descriptions1, descriptions2, matches);
    cout << "matches = " << matches.size() << endl;

    // Find the smallest and largest distance among the matches
    double min_dist = 10000, max_dist = 0;
    for (size_t i = 0; i < matches.size(); ++i)
    {
        double dist = matches[i].distance;
        min_dist = min_dist < dist ? min_dist : dist;
        max_dist = max_dist > dist ? max_dist : dist;
    }
    cout << "min_dist = " << min_dist << endl;
    cout << "max_dist = " << max_dist << endl;

    // Reject matches whose distance is too large
    vector<DMatch> goodmatches;
    for (size_t i = 0; i < matches.size(); ++i)
    {
        if (matches[i].distance <= 0.6 * max_dist)  // needs tuning
        {
            goodmatches.push_back(matches[i]);
        }
    }
    cout << "good_min = " << goodmatches.size() << endl;

The result is shown below:

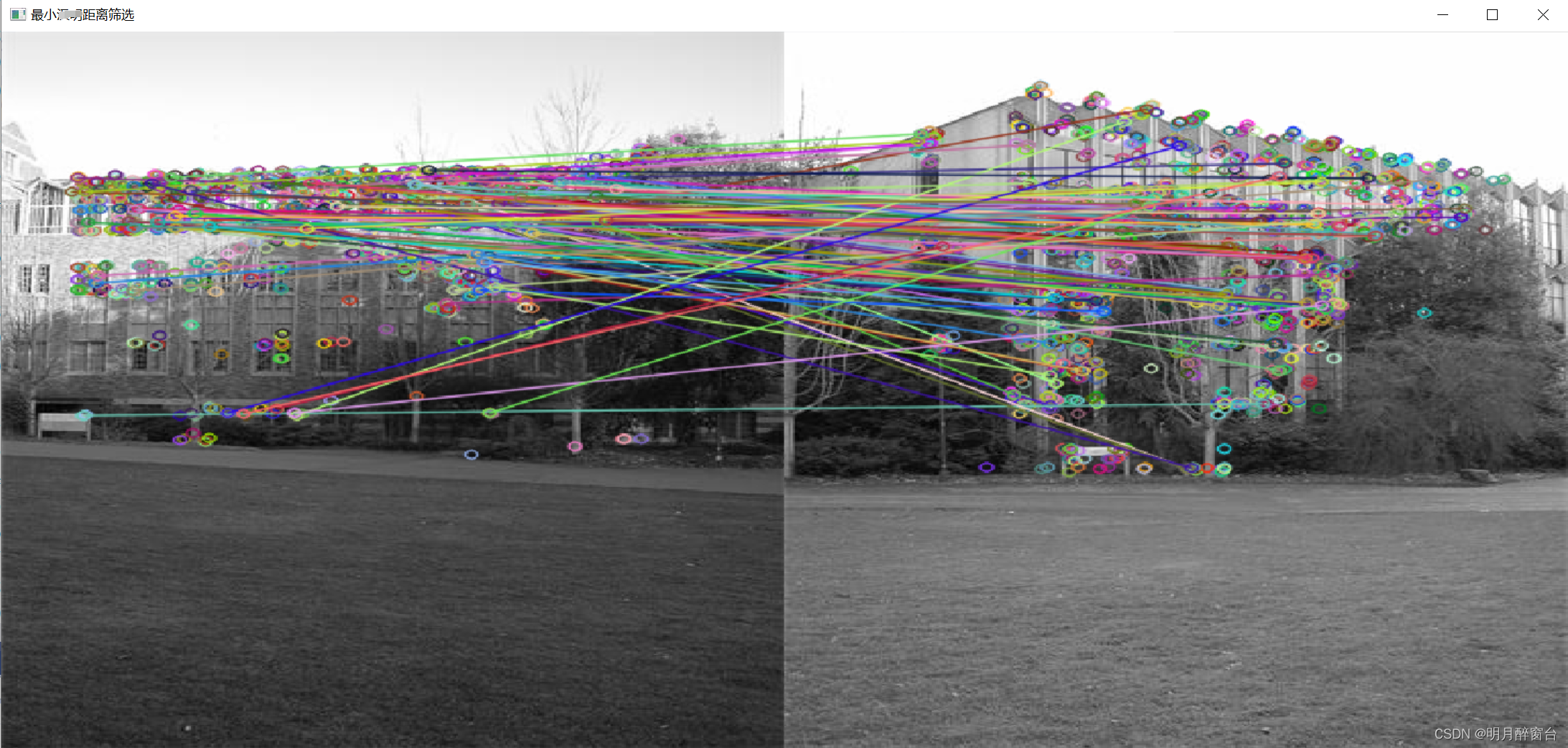

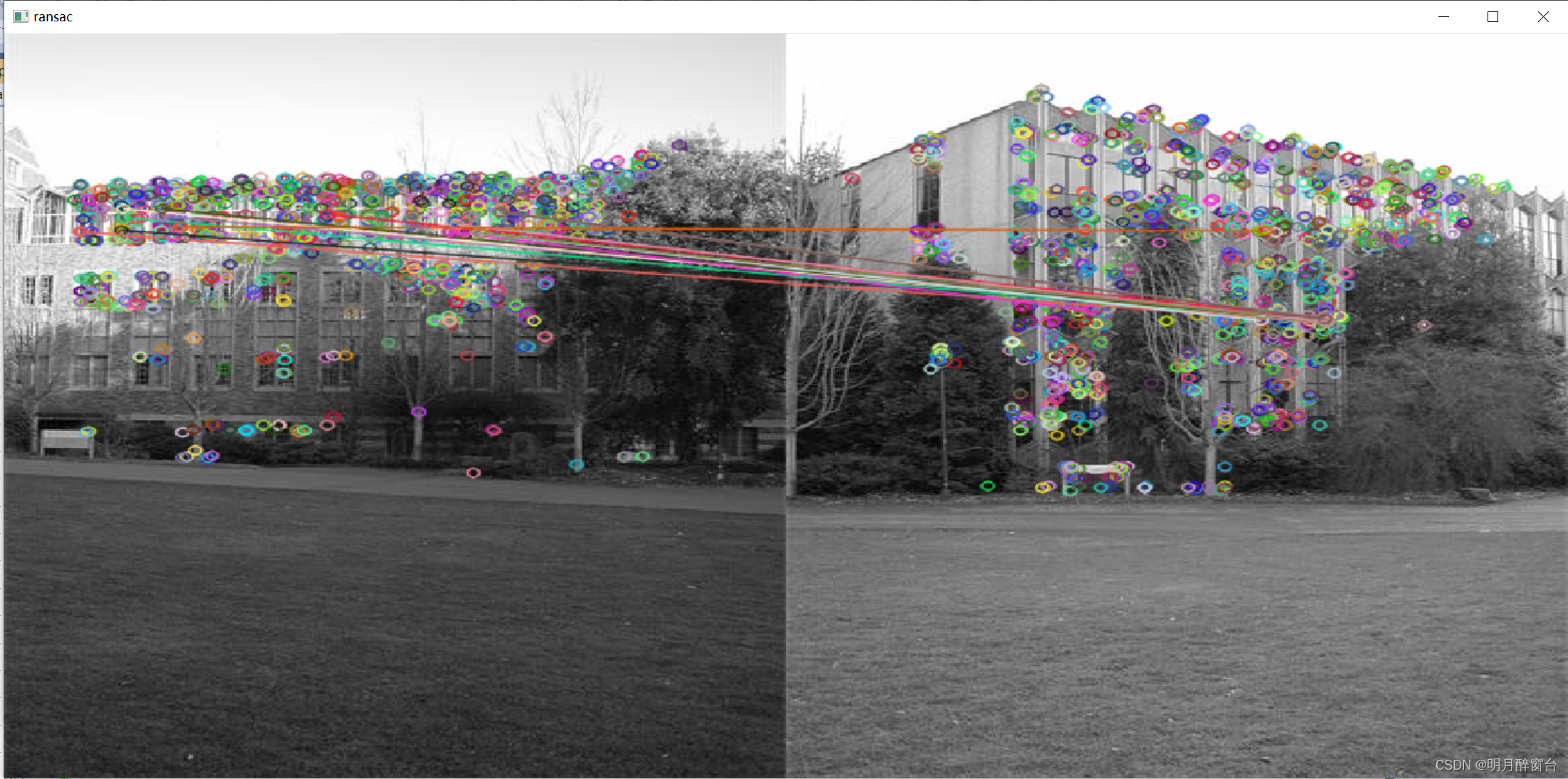
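The two examples filter matches with different rules: the brute-force version keeps matches with distance at most `max(1.8 * min_dist, 30)`, while the FLANN version keeps those at most `0.6 * max_dist`. To compare the two rules on the same data without OpenCV, here is a small sketch that works on a plain list of distances. The function names `keepBelow`, `thresholdFromMin` and `thresholdFromMax` are hypothetical, and the default factors are simply the values used in the listings, which the article marks as needing tuning.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Threshold used in the brute-force example: max(factor * min_dist, lowerBound).
double thresholdFromMin(const std::vector<float>& distances,
                        double factor = 1.8, double lowerBound = 30.0) {
    assert(!distances.empty());
    const double minDist = *std::min_element(distances.begin(), distances.end());
    return std::max(factor * minDist, lowerBound);
}

// Threshold used in the FLANN example: factor * max_dist.
double thresholdFromMax(const std::vector<float>& distances, double factor = 0.6) {
    assert(!distances.empty());
    const double maxDist = *std::max_element(distances.begin(), distances.end());
    return factor * maxDist;
}

// Indices of the matches whose distance does not exceed the threshold.
std::vector<std::size_t> keepBelow(const std::vector<float>& distances, double threshold) {
    std::vector<std::size_t> kept;
    for (std::size_t i = 0; i < distances.size(); ++i) {
        if (distances[i] <= threshold) kept.push_back(i);
    }
    return kept;
}
```

The lower bound in the first rule matters when the best match is very good: with `min_dist` near 0, `1.8 * min_dist` alone would reject almost everything. The second rule depends on the worst match, so a single very poor match loosens the threshold for all the others.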

- Refining the matches with RANSAC

    // Keep only the matches that are inliers of a homography estimated with RANSAC
    void ransac(const vector<DMatch>& matches, const vector<KeyPoint>& queryKeyPoint,
                const vector<KeyPoint>& trainKeyPoint, vector<DMatch>& matches_ransac)
    {
        // A homography needs at least 4 point pairs
        if (matches.size() < 4)
        {
            return;
        }

        // Collect the coordinates of the matched point pairs
        vector<Point2f> srcPoints(matches.size()), dstPoints(matches.size());
        for (size_t i = 0; i < matches.size(); ++i)
        {
            srcPoints[i] = queryKeyPoint[matches[i].queryIdx].pt;
            dstPoints[i] = trainKeyPoint[matches[i].trainIdx].pt;
        }

        // RANSAC filtering with a reprojection threshold of 5 pixels;
        // inlierMask[i] is nonzero when pair i is an inlier
        vector<uchar> inlierMask;
        Mat H = findHomography(srcPoints, dstPoints, RANSAC, 5, inlierMask);
        if (H.empty())
        {
            return;
        }

        // Keep the matches that survived RANSAC
        for (size_t i = 0; i < inlierMask.size(); ++i)
        {
            if (inlierMask[i])
            {
                matches_ransac.push_back(matches[i]);
            }
        }
    }

In main, the RANSAC step goes after the brute-force matching and distance filtering:

    // Match the descriptors
    vector<DMatch> matches;
    BFMatcher matcher(NORM_HAMMING);  // brute-force matcher using the Hamming distance
    matcher.match(descriptions1, descriptions2, matches);
    cout << "matches = " << matches.size() << endl;

    // Find the smallest and largest Hamming distance among the matches
    double min_dist = 10000, max_dist = 0;
    for (size_t i = 0; i < matches.size(); ++i)
    {
        double dist = matches[i].distance;
        min_dist = min_dist < dist ? min_dist : dist;
        max_dist = max_dist > dist ? max_dist : dist;
    }
    cout << "min_dist = " << min_dist << endl;
    cout << "max_dist = " << max_dist << endl;

    // Reject matches whose distance is too large
    vector<DMatch> goodmatches;
    for (size_t i = 0; i < matches.size(); ++i)
    {
        if (matches[i].distance <= std::max(1.8 * min_dist, 30.0))  // needs tuning
        {
            goodmatches.push_back(matches[i]);
        }
    }
    cout << "good_min = " << goodmatches.size() << endl;

    // RANSAC refinement
    vector<DMatch> good_ransac;
    ransac(goodmatches, Keypoints1, Keypoints2, good_ransac);
    cout << "good_ransac = " << good_ransac.size() << endl;
    Mat output_;
    drawMatches(img1, Keypoints1, img2, Keypoints2, good_ransac, output_);
    namedWindow("ransac", WINDOW_NORMAL);
    imshow("ransac", output_);
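The value 5 passed to `findHomography` is the reprojection threshold in pixels: a point pair counts as an inlier when the source point, mapped through the estimated homography, lands within that distance of its matched destination point. The following standard-library sketch shows only this inlier test, not the RANSAC estimation itself. The names `Point2`, `Homography`, `project` and `isInlier` are hypothetical and stand in for OpenCV's own types.

```cpp
#include <array>
#include <cassert>
#include <cmath>

struct Point2 { double x; double y; };
using Homography = std::array<double, 9>;  // 3x3 matrix in row-major order

// Map a point through the homography and divide by the homogeneous coordinate.
Point2 project(const Homography& h, const Point2& p) {
    const double w = h[6] * p.x + h[7] * p.y + h[8];
    assert(std::fabs(w) > 1e-12);
    return { (h[0] * p.x + h[1] * p.y + h[2]) / w,
             (h[3] * p.x + h[4] * p.y + h[5]) / w };
}

// A pair is an inlier when its reprojection error is within the threshold (pixels).
bool isInlier(const Homography& h, const Point2& src, const Point2& dst,
              double reprojThreshold = 5.0) {
    const Point2 q = project(h, src);
    return std::hypot(q.x - dst.x, q.y - dst.y) <= reprojThreshold;
}
```

A smaller threshold keeps fewer but more geometrically consistent matches. A larger one is more tolerant of keypoint localization noise but lets more wrong matches through.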
