Task orchestration tools and workflows

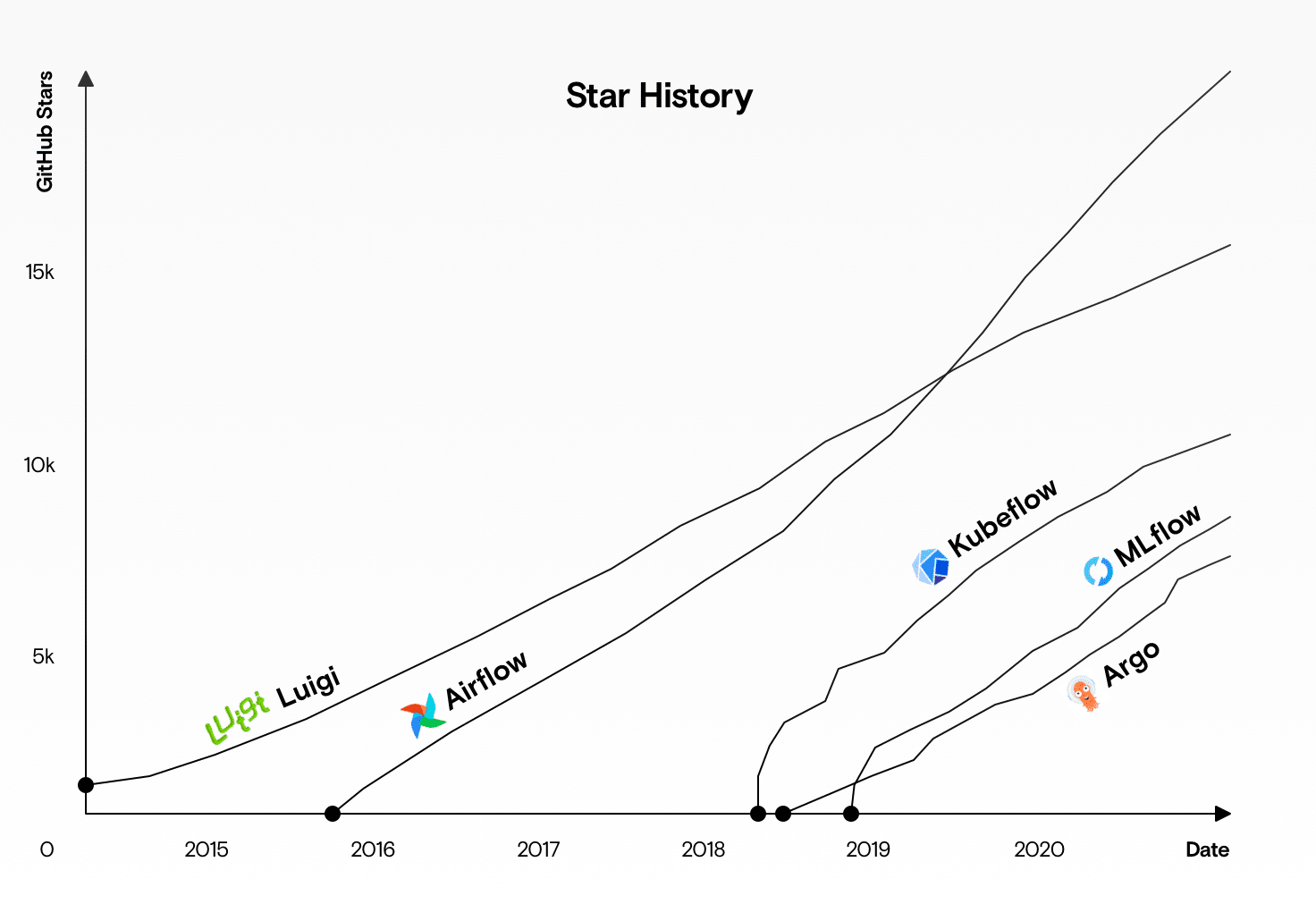

Recently there’s been an explosion of new tools for orchestrating task and data workflows (sometimes referred to as “MLOps”). The sheer number of these tools can make it hard to choose which ones to use and to understand how they overlap, so we decided to compare some of the most popular ones head-to-head.

Overall, Apache Airflow is both the most popular tool and the one with the broadest range of features, but Luigi is a similar tool that’s simpler to get started with. Argo is the one teams often turn to when they’re already using Kubernetes, while Kubeflow and MLFlow serve more niche requirements related to deploying machine learning models and tracking experiments.

Before we dive into a detailed comparison, it’s useful to understand some broader concepts related to task orchestration.

What is task orchestration and why is it useful?

Smaller teams usually start out by managing tasks manually — such as cleaning data, training machine learning models, tracking results, and deploying the models to a production server. As the size of the team and the solution grows, so does the number of repetitive steps. It also becomes more important that these tasks are executed reliably.

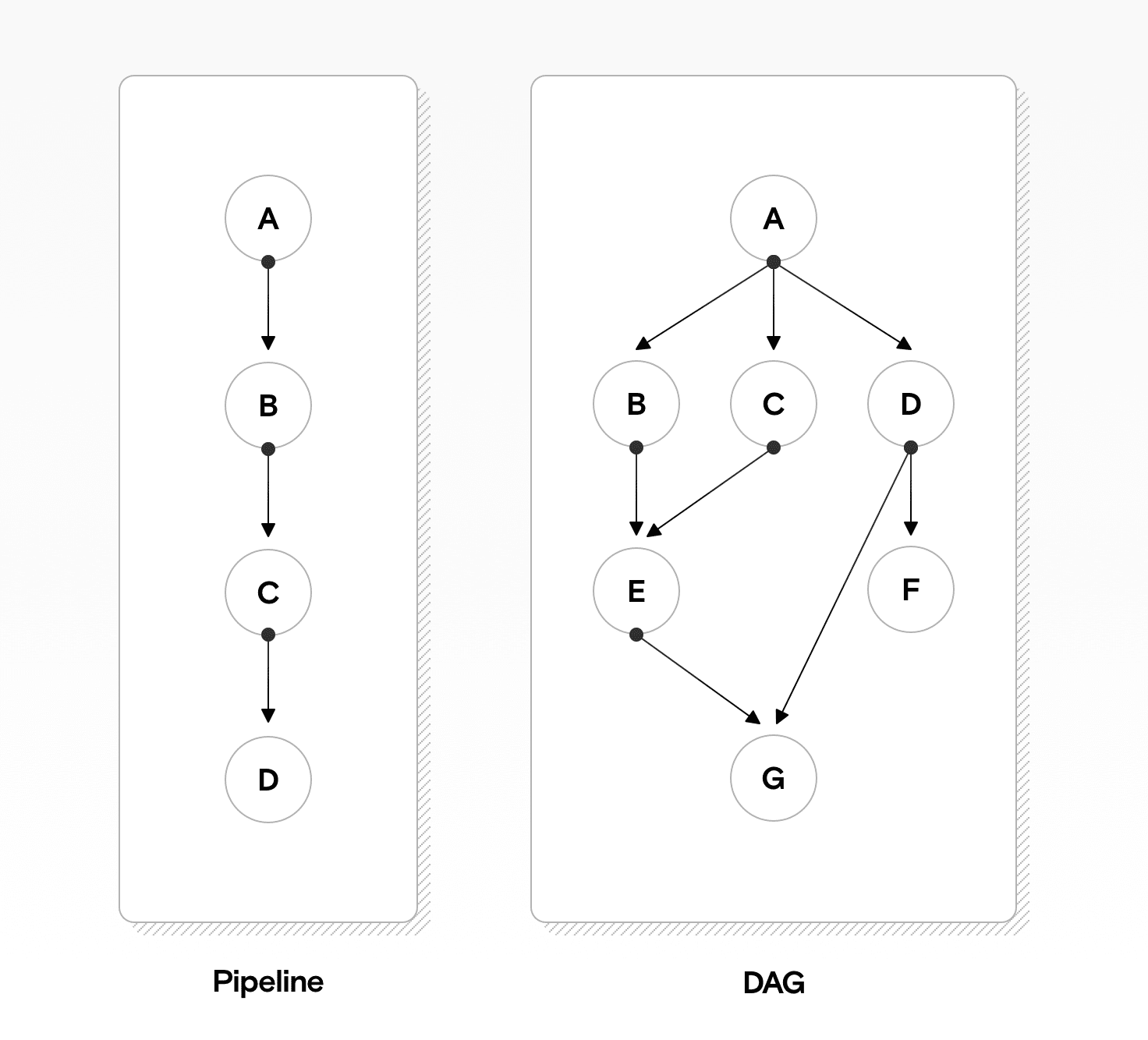

The complexity of how these tasks depend on each other also increases. When you start out, you might have a pipeline of tasks that needs to run once a week or once a month, with the tasks executed in a specific order. As you grow, this pipeline becomes a network with dynamic branches: in certain cases, some tasks set off other tasks, and these might in turn depend on several other tasks having run first.

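This network can be modelled as a DAG (directed acyclic graph). Each node is a task, and each edge is a dependency between two tasks. “Acyclic” means that no task can depend on itself, either directly or indirectly. As a result, there is always at least one valid order in which to run everything.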
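
To make the idea concrete, here is a minimal sketch that uses Python’s standard-library `graphlib` module (Python 3.9+). The task names are hypothetical and taken from the examples above. The dictionary maps each task to the tasks it depends on, and a topological sort produces an order in which every task runs after its dependencies.

```python
from graphlib import CycleError, TopologicalSorter


def execution_order(dag):
    """Return the tasks of `dag` in an order that respects every dependency.

    `dag` maps each task name to the set of task names it depends on.
    Raises graphlib.CycleError if the graph contains a cycle.
    """
    return list(TopologicalSorter(dag).static_order())


# Hypothetical pipeline built from the tasks mentioned earlier.
pipeline = {
    "clean_data": set(),
    "train_model": {"clean_data"},
    "track_results": {"train_model"},
    "deploy_model": {"train_model"},
}

print(execution_order(pipeline))

# A cycle (a needs b, b needs a) has no valid order, which is why the graph must be acyclic.
try:
    execution_order({"a": {"b"}, "b": {"a"}})
except CycleError:
    print("not a DAG: the tasks depend on each other in a cycle")
```

Either `track_results` or `deploy_model` may come third, because neither depends on the other. An orchestrator is free to run those two in parallel.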

Workflow orchestration tools allow you to define DAGs by specifying all of your tasks and how they depend on each other. The tool then executes these tasks on schedule, in the correct order, retrying any that fail before running the next ones. It also monitors the progress and notifies your team when failures happen.
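
The core loop of such a tool can be sketched in a few lines of Python. This is a hypothetical toy runner, not how any of the tools below are implemented. It leaves out scheduling, parallelism and persistence, and `run_dag`, `max_retries` and `notify` are names we made up for illustration. It does show three of the behaviours described above: running tasks in dependency order, retrying failures, and sending an alert when a task keeps failing.

```python
import time
from graphlib import TopologicalSorter


def run_dag(dag, actions, max_retries=2, notify=print, retry_delay=0.0):
    """Run every task after its dependencies, retrying failures.

    dag:         maps task name -> set of dependency names
    actions:     maps task name -> zero-argument callable that does the work
    max_retries: extra attempts allowed per task after the first failure
    notify:      called with a message when a task fails for good
    Returns the names of the tasks that succeeded, in the order they ran.
    """
    succeeded = []
    for task in TopologicalSorter(dag).static_order():
        for attempt in range(1, max_retries + 2):
            try:
                actions[task]()
            except Exception as exc:
                if attempt > max_retries:
                    notify(f"task {task!r} failed after {attempt} attempts: {exc}")
                    # Stop here so nothing runs on top of a failed dependency.
                    return succeeded
                time.sleep(retry_delay)
            else:
                succeeded.append(task)
                break
    return succeeded


attempts = {"train": 0}


def flaky_train():
    # Fails on the first call only, like a transient network error.
    attempts["train"] += 1
    if attempts["train"] == 1:
        raise RuntimeError("transient error")


dag = {"clean": set(), "train": {"clean"}, "deploy": {"train"}}
actions = {"clean": lambda: None, "train": flaky_train, "deploy": lambda: None}
print(run_dag(dag, actions))  # ['clean', 'train', 'deploy']
```

This sketch deliberately stops at the first permanent failure. Real orchestrators typically let independent branches continue and skip only the tasks downstream of the failure.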

CI/CD tools such as Jenkins are commonly used to automatically test and deploy code, and there is a strong parallel between these tools and task orchestration tools — but there are important distinctions too. Even though in theory you can use these CI/CD tools to orchestrate dynamic, interlinked tasks, at a certain level of complexity you’ll find it easier to use more general tools like Apache Airflow instead.

Overall, the focus of any orchestration tool is ensuring centralized, repeatable, reproducible, and efficient workflows: a virtual command center for all of your automated tasks. With that context in mind, let’s see how some of the most popular workflow tools stack up.

Just tell me which one to use

You should probably use:

Apache Airflow if you want the most full-featured, mature tool and you can dedicate time to learning how it works, setting it up, and maintaining it.

Luigi if you need something with an easier learning curve than Airflow. It has fewer features, but it’s easier to get off the ground.

Argo if you’re already deeply invested in the Kubernetes ecosystem and want to manage all of your tasks as pods, defining them in YAML instead of Python.

Kubeflow if you want to use Kubernetes but still define your tasks with Python instead of YAML.

MLFlow if you care more about tracking experiments or tracking and deploying models using MLFlow’s predefined patterns than about finding a tool that can adapt to your existing custom workflows.

Comparison table

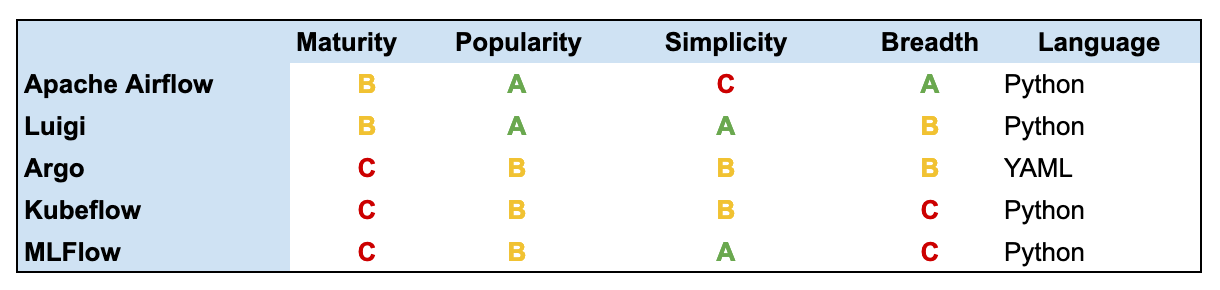

For a quick overview, we’ve compared the tools on the following criteria:

Maturity: based on the age of the project and the number of fixes and commits;

Popularity: based on adoption and GitHub stars;

Simplicity: based on ease of onboarding and adoption;

Breadth: based on how specialized vs. how adaptable each project is;

Language: based on the primary way you interact with the tool.

These are not rigorous or scientific benchmarks, but they’re intended to give you a quick overview of how the tools overlap and how they differ from each other. For more details, see the head-to-head comparison below.

Luigi vs. Airflow

Luigi and Airflow solve similar problems, but Luigi is far simpler. It’s contained in a single component, while Airflow has multiple modules which can be configured in different ways. Airflow has a larger community and some extra features, but a much steeper learning curve. Specifically, Airflow is far more powerful when it comes to scheduling, and it provides a calendar UI to help you set up when your tasks should run. With Luigi, you need to write more custom code to run tasks on a schedule.
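
To illustrate the scheduling logic Airflow handles for you, the sketch below works out which daily runs are still pending. It follows the spirit of Airflow’s catch-up (backfill) behaviour: a daily interval runs only once it has fully elapsed, and any intervals missed since the start date are run oldest first. The function name and signature are hypothetical. With Luigi, you would typically write something like this yourself and trigger it from an external scheduler such as cron.

```python
from datetime import date, timedelta


def pending_daily_runs(start, today, completed):
    """Return the daily logical dates that still need a run, oldest first.

    A daily interval beginning on `day` only finishes at the start of the
    next day, so only days strictly before `today` are due. Days listed in
    `completed` have already run and are skipped; everything else since
    `start` is caught up.
    """
    due = []
    day = start
    while day < today:
        if day not in completed:
            due.append(day)
        day += timedelta(days=1)
    return due


# Hypothetical history: the 2nd already ran; the 1st, 3rd and 4th were missed.
print(pending_daily_runs(date(2024, 1, 1), date(2024, 1, 5), {date(2024, 1, 2)}))
```

Real schedulers add much more on top of this, such as cron expressions, time zones, concurrency limits and a record of which runs succeeded. That extra machinery is a large part of what Airflow’s scheduler provides.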

Both tools use Python and DAGs to define tasks and dependencies. Use Luigi if you have a small team and need to get started quickly. Use Airflow if you have a larger team and can take an initial productivity hit in exchange for more power once you’ve gotten over the learning curve.
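
Luigi defines tasks around their outputs. Each task declares what it requires and what it produces, and it counts as complete once its output exists, so rerunning a pipeline skips work that is already done. In Luigi itself you would subclass `luigi.Task` and implement `requires()`, `output()` and `run()`. To keep the example dependency-free, the sketch below uses a hypothetical stand-in that mimics that pattern with plain files. `FileTask` and `build` are our names, not Luigi’s API.

```python
import os
import tempfile


class FileTask:
    """Hypothetical stand-in for Luigi's task pattern (not Luigi's API).

    Subclasses declare dependencies in requires(), the file they produce in
    output_path(), and the work itself in run(). A task counts as complete
    once its output file exists.
    """

    def requires(self):
        return []

    def output_path(self):
        raise NotImplementedError

    def run(self):
        raise NotImplementedError

    def complete(self):
        return os.path.exists(self.output_path())


def build(task, ran=None):
    """Build missing dependencies depth-first, then the task itself.

    Tasks whose output already exists are skipped. Returns the class names
    of the tasks that actually ran, in order.
    """
    ran = [] if ran is None else ran
    if task.complete():
        return ran
    for dependency in task.requires():
        build(dependency, ran)
    task.run()
    ran.append(type(task).__name__)
    return ran


WORKDIR = tempfile.mkdtemp()  # scratch directory for this example


class CleanData(FileTask):
    def output_path(self):
        return os.path.join(WORKDIR, "clean.csv")

    def run(self):
        with open(self.output_path(), "w") as f:
            f.write("x,y\n1,2\n")  # placeholder "cleaned" data


class TrainModel(FileTask):
    def requires(self):
        return [CleanData()]

    def output_path(self):
        return os.path.join(WORKDIR, "model.txt")

    def run(self):
        with open(CleanData().output_path()) as src:
            rows = len(src.readlines()) - 1  # minus the header line
        with open(self.output_path(), "w") as f:
            f.write(f"trained on {rows} rows\n")  # placeholder "model"


print(build(TrainModel()))  # ['CleanData', 'TrainModel']
print(build(TrainModel()))  # [] because both outputs already exist
```

With real Luigi tasks, you would instead pass the final task to `luigi.build([...], local_scheduler=True)`, and Luigi’s scheduler would resolve the dependencies.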

Luigi vs. Argo

Argo is built on top of Kubernetes, and each task is run as a separate Kubernetes pod. This can be convenient if you’re already using Kubernetes for most of your infrastructure, but it will add complexity if you’re not. Luigi is a Python library and can be installed with Python package management tools, such as pip and conda. Argo is a Kubernetes extension and is installed using Kubernetes. While both tools let you define your tasks as DAGs, with Luigi you’ll use Python to write these definitions, and with Argo you’ll use YAML.
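
An Argo workflow is a Kubernetes resource, so you declare the DAG as data rather than as code. The sketch below builds the rough shape of such a manifest as a Python dictionary: a resource with `kind: Workflow` under `apiVersion: argoproj.io/v1alpha1`, whose entrypoint is a `dag` template, plus one container template per task. It then prints the manifest as JSON, which YAML parsers also accept. The images, script names and the `dag_workflow` helper are placeholders for illustration. Check the Argo Workflows documentation for the full schema before submitting anything.

```python
import json


def container_template(name, image, command):
    """An Argo template that runs a single container, i.e. one pod per task."""
    return {"name": name, "container": {"image": image, "command": command}}


def dag_workflow(tasks):
    """Build an Argo Workflow manifest whose entrypoint is a DAG template.

    `tasks` maps task name -> (image, command, list of dependency names).
    """
    dag_tasks, templates = [], []
    for name, (image, command, deps) in tasks.items():
        task = {"name": name, "template": f"{name}-tmpl"}
        if deps:
            task["dependencies"] = list(deps)
        dag_tasks.append(task)
        templates.append(container_template(f"{name}-tmpl", image, command))
    return {
        "apiVersion": "argoproj.io/v1alpha1",
        "kind": "Workflow",
        "metadata": {"generateName": "ml-pipeline-"},
        "spec": {
            "entrypoint": "main",
            "templates": [{"name": "main", "dag": {"tasks": dag_tasks}}] + templates,
        },
    }


# Hypothetical scripts; each task runs in its own pod.
manifest = dag_workflow({
    "clean-data": ("python:3.11", ["python", "clean.py"], []),
    "train-model": ("python:3.11", ["python", "train.py"], ["clean-data"]),
    "deploy-model": ("python:3.11", ["python", "deploy.py"], ["train-model"]),
})
print(json.dumps(manifest, indent=2))
```

In practice, most Argo users write this structure directly in YAML. Generating it from code, as here, just makes the DAG’s shape explicit.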

Use Argo if you’re already invested in Kubernetes and know that all of your tasks will be pods. You should also consider it if the developers who’ll be writing the DAG definitions are more comfortable with YAML than Python. Use Luigi if you’re not running on Kubernetes and have Python expertise on the team.

Luigi vs. Kubeflow

Luigi is a Python-based library for general task orchestration. Kubeflow, by contrast, is a Kubernetes-based tool built specifically for machine learning workflows, with prebuilt patterns for experiment tracking, hyperparameter optimization, and serving Jupyter notebooks. Kubeflow consists of two distinct components: Kubeflow and Kubeflow Pipelines. The latter is focused on model deployment and CI/CD, and it can be used independently of the main Kubeflow features.

Use Luigi if you need to orchestrate a variety of different tasks, from data cleaning through model deployment. Use Kubeflow if you already use Kubernetes and want to orchestrate common machine learning tasks such as experiment tracking and model training.

Luigi vs. MLFlow

Luigi is a general task orchestration system, while MLFlow is a more specialized tool to help manage and track your machine learning lifecycle and experiments. You can use Luigi to define general tasks and dependencies (such as training and deploying a model). With MLFlow, you instead import the library directly into your machine learning code and use its helper functions to log information (such as the parameters you’re using) and artifacts (such as the trained models). You can also use MLFlow as a command-line tool to serve models built with common tools (such as scikit-learn) or deploy them to common platforms (such as AzureML or Amazon SageMaker).
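
To show what “logging parameters and artifacts” amounts to, here is a hypothetical, dependency-free stand-in for an experiment tracker. It is not MLflow’s API. In MLflow itself you would call functions such as `mlflow.start_run()`, `mlflow.log_param()`, `mlflow.log_metric()` and `mlflow.log_artifact()`. The sketch simply stores each run’s parameters, metric history and artifact files in a directory of its own, so that runs can be compared later.

```python
import json
import os
import tempfile
import time
import uuid


class RunLogger:
    """Hypothetical, minimal experiment tracker (not MLflow's API).

    Each run gets its own directory containing params.json, metrics.json
    and any artifact files written during the run.
    """

    def __init__(self, root):
        self.run_id = uuid.uuid4().hex
        self.dir = os.path.join(root, self.run_id)
        os.makedirs(self.dir)
        self.params, self.metrics = {}, {}

    def log_param(self, key, value):
        self.params[key] = value

    def log_metric(self, key, value):
        # Keep every value with a timestamp so a metric can be followed over training.
        self.metrics.setdefault(key, []).append({"value": value, "time": time.time()})

    def log_artifact(self, name, text):
        with open(os.path.join(self.dir, name), "w") as f:
            f.write(text)

    def __enter__(self):
        return self

    def __exit__(self, *exc_info):
        # Persist params and metrics when the run ends, even if it raised.
        for name, data in (("params.json", self.params), ("metrics.json", self.metrics)):
            with open(os.path.join(self.dir, name), "w") as f:
                json.dump(data, f)
        return False


root = tempfile.mkdtemp()
with RunLogger(root) as run:
    run.log_param("learning_rate", 0.01)
    for loss in [0.9, 0.5, 0.3]:  # placeholder losses, one per epoch
        run.log_metric("loss", loss)
    run.log_artifact("model.txt", "placeholder model")

print(sorted(os.listdir(run.dir)))  # ['metrics.json', 'model.txt', 'params.json']
```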

Airflow vs. Argo

Argo and Airflow both allow you to define your tasks as DAGs, but in Airflow you do this with Python, while in Argo you use YAML. Argo runs each task as a Kubernetes pod, while Airflow lives within the Python ecosystem. Canva evaluated both options before settling on Argo, and you can watch this talk to get their detailed comparison and evaluation.

Use Airflow if you want a more mature tool and don’t care about Kubernetes. Use Argo if you’re already invested in Kubernetes and want to run a wide variety of tasks written in different stacks.

Airflow vs. Kubeflow

Airflow is a generic task orchestration platform, while Kubeflow focuses specifically on machine learning tasks, such as experiment tracking. Both tools allow you to define tasks using Python, but Kubeflow runs tasks on Kubernetes. Kubeflow is split into Kubeflow and Kubeflow Pipelines: the latter component allows you to specify DAGs, but it’s more focused on deployment and model serving than on general tasks.

Use Airflow if you need a mature, broad ecosystem that can run a variety of different tasks. Use Kubeflow if you already use Kubernetes and want more out-of-the-box patterns for machine learning solutions.

Airflow vs. MLFlow

Airflow is a generic task orchestration platform, while MLFlow is specifically built to optimize the machine learning lifecycle. MLFlow therefore has the functionality to run and track experiments and to train and deploy machine learning models. Airflow has a broader range of use cases: you could use it to run any set of tasks. Airflow is a set of components and plugins for managing and scheduling tasks. MLFlow is both a Python library that you can import into your existing machine learning code and a command-line tool. You can use that tool to train machine learning models written in scikit-learn and deploy them to Amazon SageMaker or AzureML.

Use MLFlow if you want an opinionated, out-of-the-box way of managing your machine learning experiments and deployments. Use Airflow if you have more complicated requirements and want more control over how you manage your machine learning lifecycle.

Argo vs. Kubeflow

Parts of Kubeflow (like Kubeflow Pipelines) are built on top of Argo. The difference is scope: Argo is built to orchestrate any task, while Kubeflow focuses on tasks specific to machine learning, such as experiment tracking, hyperparameter tuning, and model deployment. Kubeflow Pipelines is a separate component of Kubeflow which focuses on model deployment and CI/CD, and can be used independently of Kubeflow’s other features. Both tools rely on Kubernetes and are likely to be more interesting to you if you’ve already adopted it. With Argo, you define your tasks using YAML, while Kubeflow allows you to use a Python interface instead.

Use Argo if you need to manage a DAG of general tasks running as Kubernetes pods. Use Kubeflow if you want a more opinionated tool focused on machine learning solutions.

Argo vs. MLFlow

Argo is a task orchestration tool that allows you to define your tasks as Kubernetes pods and run them as a DAG, defined with YAML. MLFlow is a more specialized tool that doesn’t allow you to define arbitrary tasks or the dependencies between them. Instead, you can import MLFlow into your existing (Python) machine learning code base as a Python library and use its helper functions to log artifacts and parameters to help with analysis and experiment tracking. You can also use MLFlow’s command-line tool to train scikit-learn models and deploy them to Amazon SageMaker or Azure ML, as well as to manage your Jupyter notebooks.

Use Argo if you need to manage generic tasks and want to run them on Kubernetes. Use MLFlow if you want an opinionated way to manage your machine learning lifecycle with managed cloud platforms.

Kubeflow vs. MLFlow

Kubeflow and MLFlow are both smaller, more specialized tools than general task orchestration platforms such as Airflow or Luigi. Kubeflow relies on Kubernetes, while MLFlow is a Python library that helps you add experiment tracking to your existing machine learning code. Kubeflow lets you build a full DAG where each step is a Kubernetes pod, whereas MLFlow has built-in functionality to deploy your scikit-learn models to Amazon SageMaker or Azure ML.

Use Kubeflow if you want to track your machine learning experiments and deploy your solutions in a more customized way, backed by Kubernetes. Use MLFlow if you want a simpler approach to experiment tracking and want to deploy to managed platforms such as Amazon SageMaker.

No silver bullet

While all of these tools have different focus points and different strengths, no tool is going to give you a headache-free process straight out of the box. Before sweating over which tool to choose, it’s usually important to ensure you have good processes, including a good team culture, blame-free retrospectives, and long-term goals. If you’re struggling with any machine learning problems, get in touch. We love talking shop, and you can schedule a free call with our CEO.

Source: https://towardsdatascience.com/airflow-vs-luigi-vs-argo-vs-mlflow-vs-kubeflow-b3785dd1ed0c
