What is Exploratory Data Analysis (EDA)?

In simple terms, EDA means getting to know the given data well enough to make sense of it.

Wikipedia gives a more formal definition:

In statistics, exploratory data analysis is an approach to analyzing data sets to summarize their main characteristics, often with visual methods. A statistical model can be used or not, but primarily EDA is for seeing what the data can tell us beyond the formal modeling or hypothesis testing task.

EDA in Python uses data visualization to draw meaningful patterns and insights. It also involves the preparation of data sets for analysis by removing irregularities in the data.

Companies also base business decisions on the results of EDA, and those decisions can have repercussions later.

- If EDA is not done properly, it can hamper the later steps of building a machine learning model.

- If done well, it can improve the effectiveness of everything we do next.

This article covers the following topics:

- Data Sourcing

- Data Cleaning

- Univariate analysis

- Bivariate analysis

- Multivariate analysis

1. Data Sourcing

Data Sourcing is the process of finding data and loading it into our system. Broadly, data comes from two kinds of sources.

- Private Data

- Public Data

Private Data

As the name suggests, private data is provided by private organizations, and it comes with security and privacy concerns. This type of data is mainly used for an organization's internal analysis.

Public Data

This type of data is available to everyone. We can find it on the websites of governments, public organizations, etc. Anyone can access this data; no special permission or approval is needed.

We can get public data on the following sites.

https://data.gov

https://data.gov.uk

https://data.gov.in

https://www.kaggle.com/

https://archive.ics.uci.edu/ml/index.php

https://github.com/awesomedata/awesome-public-datasets

Data Sourcing is the very first step of EDA, and we have now seen where to find data and load it into our system. The next step is cleaning the data.

2. Data Cleaning

After Data Sourcing, the next step in the process of EDA is Data Cleaning. Once the data is loaded into our system, it is very important to get rid of the irregularities in it.

Irregularities come in several types:

- Missing Values

- Incorrect Format

- Incorrect Headers

- Anomalies/Outliers

To perform the data cleaning we are using a sample data set, which can be found here.

We are using Jupyter Notebook for the analysis.

First, let's import the necessary libraries and load the data into our system for analysis.

#import the useful libraries.

import numpy as np

import pandas as pd

import seaborn as sns

import matplotlib.pyplot as plt

%matplotlib inline

# Read the "Marketing Analysis" data set into data.

data = pd.read_csv("marketing_analysis.csv")

# Print the data.

data

Now, the data set looks like this:

In the data set above, the column header is wrong because of the first two rows of the file; the correct data starts at index number 1. So we have to fix the first two rows.

This is called Fixing the Rows and Columns. Let's ignore the first two rows and load the data again.

#import the useful libraries.

import numpy as np

import pandas as pd

import seaborn as sns

import matplotlib.pyplot as plt

%matplotlib inline

# Read the file into data, skipping the first two rows as they are of no use.

data = pd.read_csv("marketing_analysis.csv", skiprows=2)

# Print the head of the data frame.

data.head()

Now, the dataset looks like this, and it makes more sense.
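As a minimal sketch of what `skiprows` does, the snippet below parses a small in-memory CSV whose first two lines are junk. The CSV text and its values are made up for illustration and are not the real marketing data set.

```python
import io

import pandas as pd

# Hypothetical CSV text: two junk rows, then the real header and data.
raw = (
    "banking marketing,,\n"
    "customer details,,\n"
    "customerid,age,salary\n"
    "1,58,100000\n"
    "2,44,60000\n"
)

# Without skiprows, the first junk row is taken as the header.
bad = pd.read_csv(io.StringIO(raw))

# Skipping the two junk rows makes the third line the header.
good = pd.read_csv(io.StringIO(raw), skiprows=2)

print(list(bad.columns))
print(list(good.columns))
```

Comparing the two column lists makes it easy to confirm that the right number of rows was skipped before going any further.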

The following steps are taken while Fixing Rows and Columns:

- Delete summary rows and columns in the dataset.

- Delete header and footer rows repeated on every page.

- Delete extra rows like blank rows, page numbers, etc.

- Merge different columns if that makes the data easier to understand.

- Similarly, split one column into multiple columns based on our requirements or understanding.

- Add column names; it is very important for the dataset to have them.

Now if we observe the above dataset, the customerid column is of no importance to our analysis, and the jobedu column holds both job and education information.

So we'll drop the customerid column and split the jobedu column into two new columns, job and education. After that, we'll drop the jobedu column as well.

# Drop the customer id as it is of no use.

data.drop('customerid', axis=1, inplace=True)

# Extract job and education into new columns from the "jobedu" column.

data['job'] = data["jobedu"].apply(lambda x: x.split(",")[0])

data['education'] = data["jobedu"].apply(lambda x: x.split(",")[1])

# Drop the "jobedu" column from the dataframe.

data.drop('jobedu', axis=1, inplace=True)

# Print the dataset.

data

Now, the dataset looks like this:
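The same split can also be written with pandas' vectorized string methods. This is only a sketch on a made-up three-row frame, and it assumes every `jobedu` value contains a comma; it is an alternative to the `apply` calls, not the approach used in this article.

```python
import pandas as pd

# Toy frame standing in for the marketing data (values are invented).
df = pd.DataFrame({
    "customerid": [1, 2, 3],
    "jobedu": ["management,tertiary", "technician,secondary", "services,primary"],
})

# Split once on the comma and expand the pieces into two new columns.
df[["job", "education"]] = df["jobedu"].str.split(",", n=1, expand=True)

# Drop the columns that are no longer needed.
df = df.drop(columns=["customerid", "jobedu"])

print(df)
```

Using `n=1` keeps any further commas inside the second piece, which may or may not be what a given data set needs.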

Figure: customerid and jobedu columns dropped, job and education columns added

Missing Values

If the dataset contains missing values, we need to handle them before doing any statistical analysis.

There are mainly three types of missing values.

- MCAR (Missing completely at random): whether a value is missing does not depend on any other feature.

- MAR (Missing at random): whether a value is missing may depend on some other, observed features.

- MNAR (Missing not at random): there is a reason why the value is missing, typically related to the missing value itself.
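To make the three mechanisms concrete, here is a small simulation on synthetic data. The column names, probabilities, and thresholds are all assumptions chosen for illustration; they do not come from the marketing data set.

```python
import numpy as np
import pandas as pd

rng = np.random.default_rng(0)
n = 1000

# Synthetic, fully observed data.
df = pd.DataFrame({
    "age": rng.integers(20, 70, size=n),
    "salary": rng.normal(50_000, 15_000, size=n),
})

# MCAR: every salary has the same 10% chance of being missing.
mcar = df["salary"].mask(rng.random(n) < 0.10)

# MAR: missingness depends on another observed column (age), not on salary.
mar = df["salary"].mask((df["age"] > 50) & (rng.random(n) < 0.30))

# MNAR: missingness depends on the value itself (the highest salaries are withheld).
mnar = df["salary"].mask(df["salary"] > df["salary"].quantile(0.90))

print(mcar.isna().mean(), mar.isna().mean(), mnar.isna().mean())
```

In real data the mechanism is not labeled like this, so deciding which case applies usually takes domain knowledge as well as inspection.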

Let's see which columns have missing values in the dataset.

# Checking the missing values

data.isnull().sum()

The output will be:

As we can see, three columns contain missing values. Let's see how to handle them. We can handle missing values either by dropping the affected records or by imputing the values.

Drop the Missing Values

Let's handle the missing values in the age column.

# Drop the records with age missing in the data dataframe.

data = data[~data.age.isnull()].copy()

# Check the missing values in the dataset.

data.isnull().sum()

Let's check the missing values in the dataset now.

Next, let's impute the missing values in the month column.

Since the month column is of object type, we'll compute the mode of that column and fill the missing values with it.

# Find the mode of month in data.

month_mode = data.month.mode()[0]

# Fill the missing values with the mode of month (assign the result back rather than using inplace=True on a column).

data['month'] = data['month'].fillna(month_mode)

# Let's see the null values in the month column.

data.month.isnull().sum()

Now the output is:

# Mode of month is

'may, 2017'

# Null values in month column after imputing with mode

0

Now let's handle the missing values in the response column. Since response is our target column, imputing values into it would affect our analysis. So it is better to drop the records where response is missing.

# Drop the records with response missing in data.

data = data[~data.response.isnull()].copy()

# Calculate the missing values in each column of the data frame.

data.isnull().sum()

Let's check whether the missing values in the dataset have been handled:

We can also leave missing values marked as 'NaN'. pandas' statistical functions skip NaN by default, so the missing entries do not distort the results.
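A short sketch of why marking values as NaN matters. The column and the `-1` placeholder are invented for illustration; the point is only that a placeholder is counted as real data while NaN is skipped.

```python
import numpy as np
import pandas as pd

# Toy column where -1 was used as a placeholder for "unknown" (invented data).
age = pd.Series([30, 40, -1, 50])

# Treating the placeholder as a real value distorts the mean.
print(age.mean())

# Replacing it with NaN lets pandas skip it (skipna=True is the default).
age_clean = age.replace(-1, np.nan)
print(age_clean.mean())
```

The first mean is pulled down by the placeholder, while the second is computed over the three known ages only.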

Handling Outliers

We have seen how to fix missing values; now let's see how to handle outliers in the dataset.

Outliers are values that lie far beyond the next nearest data points.

There are two types of outliers:

Univariate outliers: data points whose values lie beyond the expected range of a single variable.

Multivariate outliers: data points whose values look unremarkable for one variable on its own, but lie far from the expected values when plotted against another variable.

So, after understanding the causes of these outliers, we can handle them by dropping those records, by imputing replacement values, or by leaving them as is, whichever makes more sense.
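The article does not prescribe a detection rule, so the sketch below uses a common rule of thumb (Tukey's 1.5 × IQR fences) as an assumption. The balances are invented, and capping is shown as just one of the possible treatments.

```python
import pandas as pd

# Toy balances with one extreme value (invented data).
balance = pd.Series([120, 150, 130, 160, 140, 155, 5000])

q1 = balance.quantile(0.25)
q3 = balance.quantile(0.75)
iqr = q3 - q1

# Flag points more than 1.5 * IQR outside the quartiles.
lower, upper = q1 - 1.5 * iqr, q3 + 1.5 * iqr
is_outlier = (balance < lower) | (balance > upper)
print(balance[is_outlier])

# One possible treatment: cap values at the fences instead of dropping rows.
capped = balance.clip(lower=lower, upper=upper)
print(capped)
```

Whether to drop, cap, or keep a flagged value still depends on why it is extreme, which the rule itself cannot tell us.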

Standardizing Values

To analyze a set of values, we have to make sure that all the values in a column are on the same scale. For example, if the data contains the top speeds of different companies' cars, then the whole column should be either in meters/sec or in miles/sec, not a mix of the two.
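As a sketch of bringing a column onto one scale, the snippet below converts top speeds recorded in mixed units to metres per second. The frame, the `unit` column, and the chosen target unit are assumptions made for this example.

```python
import pandas as pd

# Toy data: top speeds recorded in mixed units (invented values).
cars = pd.DataFrame({
    "model": ["A", "B", "C"],
    "top_speed": [180.0, 120.0, 50.0],
    "unit": ["km/h", "mph", "m/s"],
})

# Conversion factors from each unit to metres per second.
to_mps = {"km/h": 1000 / 3600, "mph": 1609.344 / 3600, "m/s": 1.0}

# Look up the factor for each row and apply it.
cars["top_speed_mps"] = cars["top_speed"] * cars["unit"].map(to_mps)

print(cars[["model", "top_speed_mps"]])
```

After the conversion the three cars turn out to be much closer in speed than the raw numbers suggested.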

Now that we are clear on how to source and clean the data, let's see how we can analyze it.

3. Univariate Analysis

Analyzing a single variable/column of a dataset is known as Univariate Analysis.

Categorical Unordered Univariate Analysis:

An unordered variable is a categorical variable that has no defined order. In our data, for example, the job column is divided into many sub-categories like technician, blue-collar, services, management, etc. No weight or measure is attached to any value in the 'job' column.

Now, let's analyze the job category using plots. Since job is a categorical variable, we will draw a bar plot.

# Let's calculate the percentage of each job status category.

data.job.value_counts(normalize=True)

# Plot the bar graph of percentage job categories.

data.job.value_counts(normalize=True).plot.barh()

plt.show()

The output looks like this:

From the above bar plot, we can infer that the data set contains more blue-collar workers than any other category.

Categorical Ordered Univariate Analysis:

Ordered variables are those that have a natural order. Some examples of categorical ordered variables from our dataset are:

- Month: Jan, Feb, March, ...

- Education: Primary, Secondary, ...
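One way to make such an order explicit in pandas is an ordered categorical. The sketch below uses an invented education column and an assumed ordering of levels; it is not part of the article's own workflow.

```python
import pandas as pd

# Toy education column (invented values).
raw = pd.Series(
    ["secondary", "primary", "tertiary", "secondary", "primary", "secondary"]
)

# Declare the natural order explicitly.
levels = ["primary", "secondary", "tertiary"]
education = pd.Series(pd.Categorical(raw, categories=levels, ordered=True))

# sort_index() now follows the declared order rather than frequency.
shares = education.value_counts(normalize=True).sort_index()
print(shares)

# Ordered categories also support comparisons.
at_least_secondary = int((education >= "secondary").sum())
print(at_least_secondary)
```

With the order declared, tables and plots of the shares come out from the lowest level to the highest instead of by count.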

Now, let's analyze the education variable from the dataset. Since we've already seen a bar plot, let's see what a pie chart looks like.

# Calculate the percentage of each education category.

data.education.value_counts(normalize=True)

# Plot the pie chart of education categories.

data.education.value_counts(normalize=True).plot.pie()

plt.show()

The output will be:

From the above analysis, we can infer that most people in the data set have secondary education, followed by tertiary and then primary. For a very small percentage, the education level is unknown.

This is how we analyze a single categorical variable. If the column or variable is numerical, we instead analyze it by calculating its mean, median, standard deviation, etc. We can get those values with the describe function.

data.salary.describe()

The output will be:

4. Bivariate Analysis

Analyzing two variables/columns of a dataset together is known as Bivariate Analysis.

a) Numeric-Numeric Analysis:

Analyzing two numeric variables from a dataset is known as numeric-numeric analysis. We can do it in three different ways.

- Scatter Plot

- Pair Plot

- Correlation Matrix

Scatter Plot

Let's take three columns, 'Balance', 'Age' and 'Salary', from our dataset and see what we can infer from scatter plots of salary against balance and of age against balance.

# Plot the scatter plot of the balance and salary variables in data.

plt.scatter(data.salary, data.balance)

plt.show()

# Plot the scatter plot of the balance and age variables in data.

data.plot.scatter(x="age", y="balance")

plt.show()

Now, the scatter plots look like this:

Pair Plot

Now, let's draw pair plots for the three columns we used in the scatter plots. We'll use the seaborn library for this.

# Plot the pair plot of salary, balance and age in the data dataframe.

sns.pairplot(data=data, vars=['salary','balance','age'])

plt.show()

The pair plot looks like this:

Correlation Matrix

Since a scatter plot, or a single panel of a pair plot, can show only two variables, one on the x-axis and one on the y-axis, it is difficult to see the relationship between three numerical variables in a single graph. In those cases, we'll use the correlation matrix.

# Create a matrix using age, salary and balance as rows and columns.

data[['age','salary','balance']].corr()

# Plot the correlation matrix of salary, balance and age in the data dataframe.

sns.heatmap(data[['age','salary','balance']].corr(), annot=True, cmap='Reds')

plt.show()

First, we created a correlation matrix from age, salary, and balance. After that, we plotted the matrix as a heatmap using the seaborn library.
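To get a feel for how to read such a matrix, here is a sketch on synthetic data in which the relationships are known in advance. The numbers are generated, not taken from the marketing data set: balance is built to depend on salary, while age is generated independently.

```python
import numpy as np
import pandas as pd

rng = np.random.default_rng(42)
n = 500

# Synthetic columns with a known structure.
salary = rng.normal(60_000, 20_000, size=n)
age = rng.integers(20, 65, size=n)
balance = 0.05 * salary + rng.normal(0, 500, size=n)

df = pd.DataFrame({"age": age, "salary": salary, "balance": balance})

# DataFrame.corr() computes Pearson correlation by default; values lie in [-1, 1].
corr = df.corr()
print(corr.round(2))
```

The salary/balance cell should come out strongly positive and the age cells close to zero, which is the pattern a heatmap would then highlight.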

b) Numeric - Categorical Analysis

Analyzing one numeric variable and one categorical variable from a dataset is known as numeric-categorical analysis. We analyze them mainly using the mean, the median, and box plots.

Let's take the salary and response columns from our dataset.

First, check the mean value using groupby:

# Group by response to find the mean salary for response no and yes separately.

data.groupby('response')['salary'].mean()

The output will be:

There is not much of a difference in mean salary between the yes and no responses.

Let's calculate the median:

# Group by response to find the median salary for response no and yes separately.

data.groupby('response')['salary'].median()

The output will be:

Going by both the mean and the median, the yes and no responses look the same irrespective of a person's salary. But is that truly the case? Let's draw a box plot for them and check.

# Plot the box plot of salary for yes and no responses.

sns.boxplot(x='response', y='salary', data=data)

plt.show()

The box plot looks like this:

As we can see, the box plot paints a very different picture from the mean and median. The IQR for customers who gave a positive response sits on the higher salary side.
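The quartiles that a box plot draws can also be computed directly. The sketch below uses invented salaries, deliberately chosen so that both groups share the same mean and median while their quartiles differ; it illustrates the effect rather than reproducing the article's numbers.

```python
import pandas as pd

# Toy data (invented): identical mean and median, different quartiles.
df = pd.DataFrame({
    "response": ["no"] * 5 + ["yes"] * 5,
    "salary": [20, 40, 60, 70, 110, 10, 55, 60, 85, 90],
})

# Mean and median per group look the same.
summary = df.groupby("response")["salary"].agg(["mean", "median"])
print(summary)

# The 25th and 75th percentiles (the edges of the box) do not.
quartiles = df.groupby("response")["salary"].quantile([0.25, 0.75]).unstack()
print(quartiles)
```

Here the box for "yes" spans 55 to 85 while the box for "no" spans 40 to 70, even though the summary table cannot tell the groups apart.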

This is how we analyze numeric-categorical variables: we use the mean, the median, and box plots to draw conclusions.

c) Categorical - Categorical Analysis

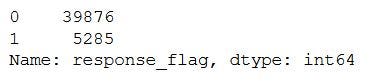

Since our target variable/column is response, we'll see how different categories like education, marital status, etc., are associated with it. To do that, we convert 'yes' and 'no' into '1' and '0'; the mean of the converted column then gives us the response rate.

# Create response_rate of numerical data type where response "yes" = 1, "no" = 0.

data['response_rate'] = np.where(data.response=='yes', 1, 0)

data.response_rate.value_counts()

The output looks like this:

Let’s see how the response rate varies for different categories in marital status.

# Plot the bar graph of marital status with the average value of response_rate.

data.groupby('marital')['response_rate'].mean().plot.bar()

plt.show()

The graph looks like this:

From the above graph, we can infer that the positive response rate is higher for single members in the data set. Similarly, we can plot graphs for Loan vs Response rate, Housing Loans vs Response rate, etc.
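The reason the group mean works as a rate is that the mean of a 0/1 column equals the share of ones. A minimal sketch on invented rows, with a cross-tabulation added as an independent check (the cross-check is an addition here, not something the article does):

```python
import numpy as np
import pandas as pd

# Toy data (invented values).
df = pd.DataFrame({
    "marital": ["single", "single", "married", "married", "married", "divorced"],
    "response": ["yes", "no", "no", "no", "yes", "no"],
})

# Encode yes/no as 1/0; the mean of a 0/1 column is the share of ones.
df["response_rate"] = np.where(df["response"] == "yes", 1, 0)
rate_by_marital = df.groupby("marital")["response_rate"].mean()
print(rate_by_marital)

# Cross-check: row-normalized counts of yes/no per marital status.
ct = pd.crosstab(df["marital"], df["response"], normalize="index")
print(ct)
```

The "yes" column of the cross-tabulation matches the grouped means, and it also shows the "no" share alongside.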

5. Multivariate Analysis

Analyzing more than two variables/columns of a dataset together is known as Multivariate Analysis.

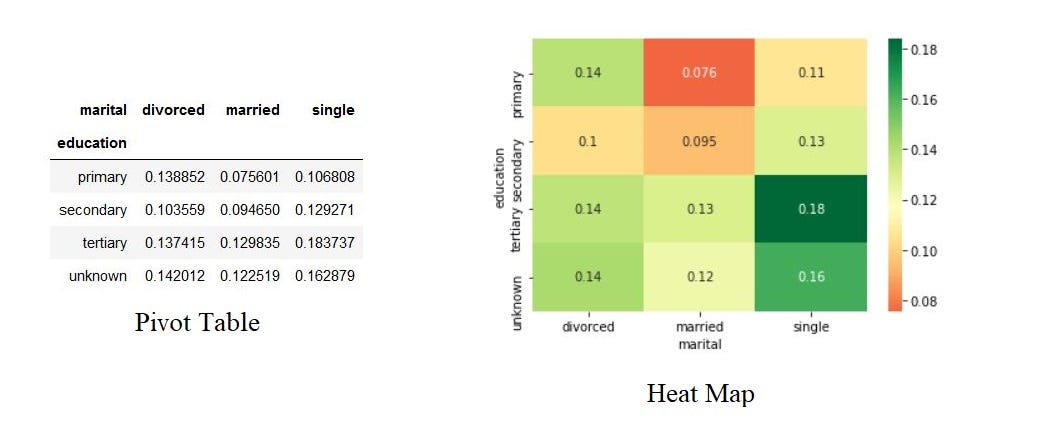

Let's see how 'Education', 'Marital', and 'Response_rate' vary with each other.

First, we'll create a pivot table from the three columns, and after that, we'll create a heatmap.

result = pd.pivot_table(data=data, index='education', columns='marital', values='response_rate')

print(result)

# Create a heat map of education vs marital vs response_rate.

sns.heatmap(result, annot=True, cmap='RdYlGn', center=0.117)

plt.show()

The pivot table and heatmap look like this:

Based on the heatmap, we can infer that married people with primary education are less likely to respond positively to the survey, while single people with tertiary education are the most likely to respond positively.
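To see what each cell of such a pivot table holds, here is a sketch on six invented rows. `pd.pivot_table` aggregates with the mean by default, so each cell is the response rate for one education/marital combination; the `groupby` version is shown only as an equivalent way to get the same numbers.

```python
import pandas as pd

# Toy data (invented values).
df = pd.DataFrame({
    "education": ["primary", "primary", "tertiary", "tertiary", "tertiary", "primary"],
    "marital": ["married", "single", "married", "single", "single", "married"],
    "response_rate": [0, 1, 0, 1, 0, 0],
})

# aggfunc defaults to the mean, so each cell is a response rate.
result = pd.pivot_table(
    df, index="education", columns="marital", values="response_rate"
)
print(result)

# The same table via groupby + unstack.
same = df.groupby(["education", "marital"])["response_rate"].mean().unstack()
print(same)
```

With so few rows per cell the rates are very coarse, which is a reminder to check group sizes before reading much into a heatmap.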

Similarly, we can plot graphs for Job vs marital vs response, Education vs poutcome vs response, etc.

Conclusion

This is how we do Exploratory Data Analysis. EDA helps us look beyond the data. The more we explore the data, the more insights we draw from it. As data analysts, we will spend almost 80% of our time understanding data and solving various business problems through EDA.

Thank you for reading and Happy Coding!!!

Check out my previous articles about Python here

Indexing in Pandas Dataframe using Python

Seaborn: Python

Pandas: Python

Matplotlib: Python

NumPy: Python

Data Visualization and its Importance: Python

Time Complexity and Its Importance in Python

Python Recursion or Recursive Function in Python

Translated from: https://towardsdatascience.com/exploratory-data-analysis-eda-python-87178e35b14
