Detecting coordination in disinformation campaigns

Coordination is one of the central features of information operations and disinformation campaigns, which can be defined as concerted efforts to target people with false or misleading information, often with some strategic objective (political, social, financial), while using the affordances provided by social media platforms.

Since 2017, platforms like Facebook have developed new framings, policies, and entire teams to counter bad actors who use them to interfere in elections, drive political polarization, or manipulate public opinion. After several iterations, Facebook has settled on describing malicious activity like this as “coordinated inauthentic behavior,” referring to a group of actors “working in concert to engage in inauthentic behavior…where the use of fake accounts is central to the operation.”

More broadly, Twitter uses the phrase “platform manipulation,” which includes a range of actions, but also focuses on “coordinated activity…that attempts to artificially influence conversations through the use of multiple accounts, fake accounts, automation and/or scripting.”

Unfortunately, open source researchers have limited data at their disposal to assess some of the characteristics of disinformation campaigns. Determining the authenticity of an account requires far more information than is publicly available (data like browser usage, IP logs, device IDs, account e-mails, etc.). And while we might be able to figure out when a group of accounts is using stock photos, we can’t say anything about the origin of the people using these accounts.

Even the terms “coordinated” and “inauthentic” are not straightforward or neutral judgments, a problem highlighted by scholars like Kate Starbird and Evelyn Douek. Starbird has used multiple case studies to show that there are no clear distinctions between “orchestrated” and “organic” activity:

In particular, our work reveals entanglements between orchestrated action and organic activity, including the proliferation of authentic accounts (real people, sincerely participating) within activities that are guided by and/or integrated into disinformation campaigns.

In this post, I focus specifically on the digital artifacts of “coordination” to show how this behavior can be used to identify accounts that may be part of a disinformation campaign. But I also show how coming to this conclusion always requires additional analysis, especially to distinguish between “orchestrated action and organic activity.”

We’ll look at original data I collected prior to Facebook’s takedown of pro-Trump groups associated with the media site “The Beauty of Life” (TheBL), which was reportedly tied to the Epoch Media Group. The removal of these accounts came with great fanfare in late 2019, in part because some of the accounts used AI-generated photos to populate their profiles. But another behavioral trait of the accounts, and one more visible in digital traces, was their coordinated amplification of URLs pointing to thebl.com and to other assets within the network of accounts. We’ll also look at data from QAnon accounts on Instagram to get a better sense of what coordination can look like within a highly active online community.

Note: I’ll be using the programming language R in my analysis and things get a bit technical from here.

Pairwise correlations, clustering, and {tidygraph}

One method I’ve used consistently to help identify coordination comes from David Robinson’s {widyr} package, which has functions that allow you to compute pairwise correlations, distance, cosine similarity, and counts, as well as k-means and hierarchical clustering (in development).

Using some of the functions from {widyr}, we can answer a question like: which accounts have a tendency to link to the same domains? This kind of question can be applied to other features as well, like sequences of hashtags (an example we’ll explore later), mentions, shared posts, URLs, text, and even content.

Here, I’ve extracted the domains from URLs shared by Facebook groups in TheBL takedown, filtered out domains that occurred fewer than 20 times, removed social media links, and put it all into a dataframe called domains_shared.
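The preprocessing code itself appears only as a screenshot, so here is a minimal base-R sketch of those steps. It assumes a hypothetical posts data frame with group_name and url columns; the helper names (extract_domain, prepare_domains) and the social-media exclusion list are illustrative, not the author’s.

```r
# Hypothetical list of social media domains to exclude (illustrative only)
social_domains <- c("facebook.com", "instagram.com", "twitter.com",
                    "youtube.com", "youtu.be")

# Reduce a URL to its host name, lower-cased and without a leading "www."
extract_domain <- function(url) {
  host <- sub("^[a-zA-Z][a-zA-Z0-9+.-]*://", "", url)  # drop the scheme
  host <- sub("[/?#:].*$", "", host)                   # drop port, path, query
  sub("^www\\.", "", tolower(host))
}

# posts: one row per post, with columns group_name and url
prepare_domains <- function(posts, min_n = 20, exclude = social_domains) {
  posts$domain <- extract_domain(posts$url)
  posts <- posts[!posts$domain %in% exclude, ]
  # Keep only domains that occur at least min_n times overall
  counts <- table(posts$domain)
  posts <- posts[posts$domain %in% names(counts)[counts >= min_n], ]
  # Count how often each group shared each remaining domain
  posts$n <- 1L
  aggregate(n ~ group_name + domain, data = posts, FUN = sum)
}
```

With the default min_n = 20, prepare_domains(posts) yields a group_name/domain/n table of the kind the correlation step works from.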

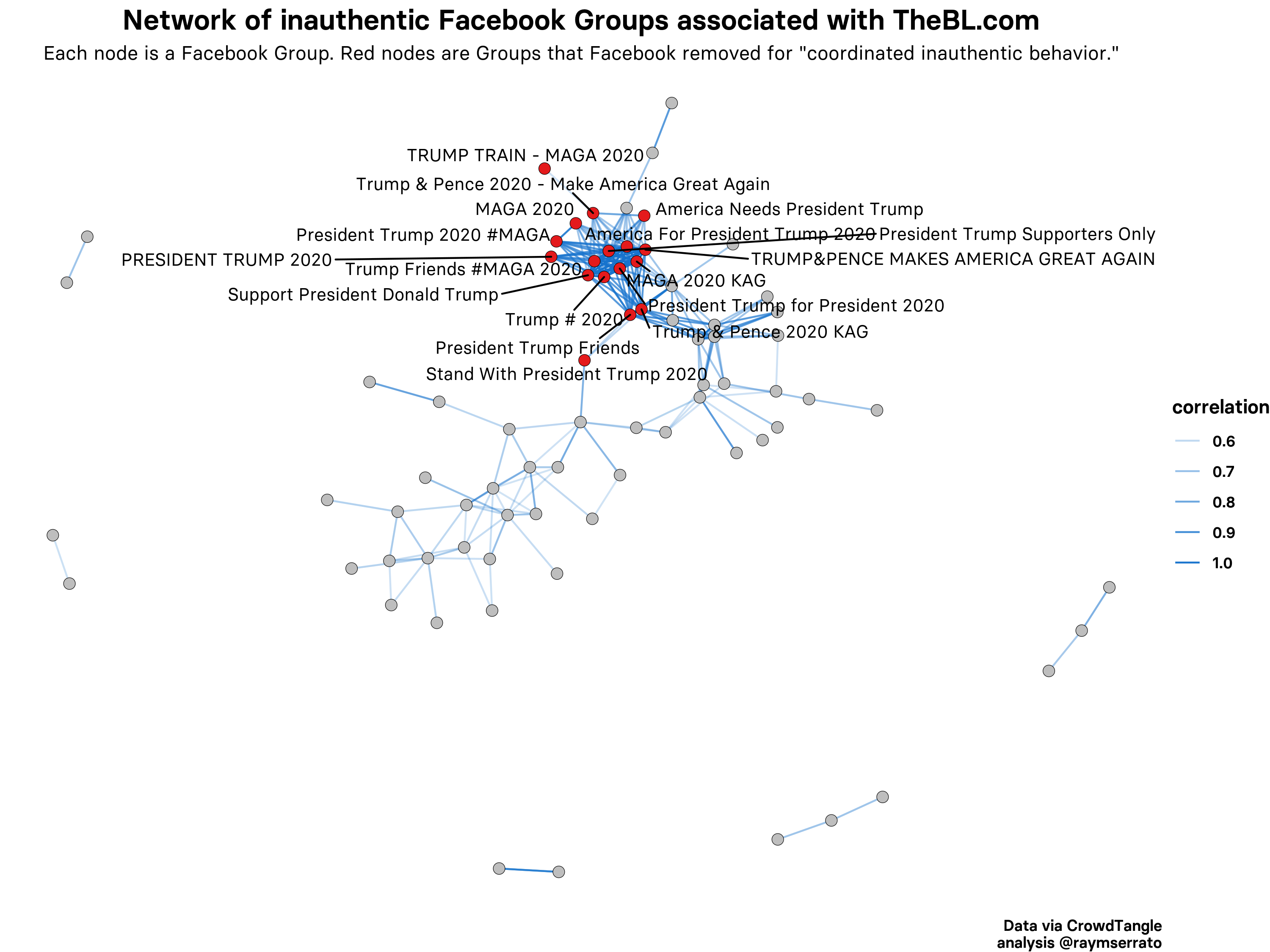

Using {tidygraph} and {ggraph}, we can then visualize the relationships between these groups. In the graph below, I’ve filtered the data to show relationships with a correlation above 0.5 and highlighted the groups (nodes) that I know were removed for inauthentic behavior. The graph shows that the strongest correlations (phi coefficients) are between the groups engaged in coordinated behavior, because they had a much higher tendency to share URLs pointing to content on thebl.com. An analysis focusing on the page-sharing behavior of the groups would yield a similar graph, showing the groups that were more likely to share posts from the TheBLcom page and other assets.
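The phi coefficient is simply the Pearson correlation between two 0/1 vectors, here recording which domains each group shared (the same quantity {widyr}’s pairwise_cor() reports for presence/absence data). The sketch below computes it in base R rather than with {widyr}, assuming a domains_shared-style data frame with group_name and domain columns; pairwise_phi is a hypothetical helper, and the 0.5 cutoff mirrors the filter used for the graph.

```r
# df: one row per (group_name, domain) pair, as in domains_shared
pairwise_phi <- function(df, threshold = 0.5) {
  # 0/1 incidence matrix: rows are groups, columns are domains
  m <- unclass(table(df$group_name, df$domain))
  m <- (m > 0) * 1
  # Pearson correlation between two 0/1 vectors is the phi coefficient.
  # Groups with zero variance (e.g. sharing every domain) yield NA and are skipped.
  phi <- suppressWarnings(cor(t(m)))
  keep <- upper.tri(phi) & !is.na(phi) & phi > threshold
  idx <- which(keep, arr.ind = TRUE)
  data.frame(item1 = rownames(phi)[idx[, 1]],
             item2 = colnames(phi)[idx[, 2]],
             correlation = phi[idx],
             stringsAsFactors = FALSE)
}
```

The resulting item1/item2/correlation edge list is the kind of table that can be turned into a graph with {tidygraph} and drawn with {ggraph}.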

This method can quickly help an investigator cluster accounts based on behaviors (domain-sharing in this case), but it still requires further examination of the accounts and the content they shared. This may sound familiar to disinformation researchers because it’s essentially the ABCs of Disinformation, by Camille François, which focuses on manipulative actors, behaviors, and content.

In the graph, you’ll see many other groups (unlabeled in gray) that are linked based on their domain-sharing behavior, but with lower correlations. For each group an analyst would need to examine other details like: administrators and their associated information (profile photos, friends, timelines, tagged photos, etc.); other groups managed by the administrators; creation date of the groups; which specific domains linked the groups together; shared posting patterns within the groups, etc., all in order to determine if they too could have been inauthentic.

In the case of TheBL takedown, group administrators showed many signals of inauthenticity, but almost none of those signals were available in digital trace data. (This is well illustrated in independent analysis by Graphika and the Atlantic Council’s Digital Forensic Research Lab (DFRLab), who were able to review a list of accounts prior to Facebook’s takedown).

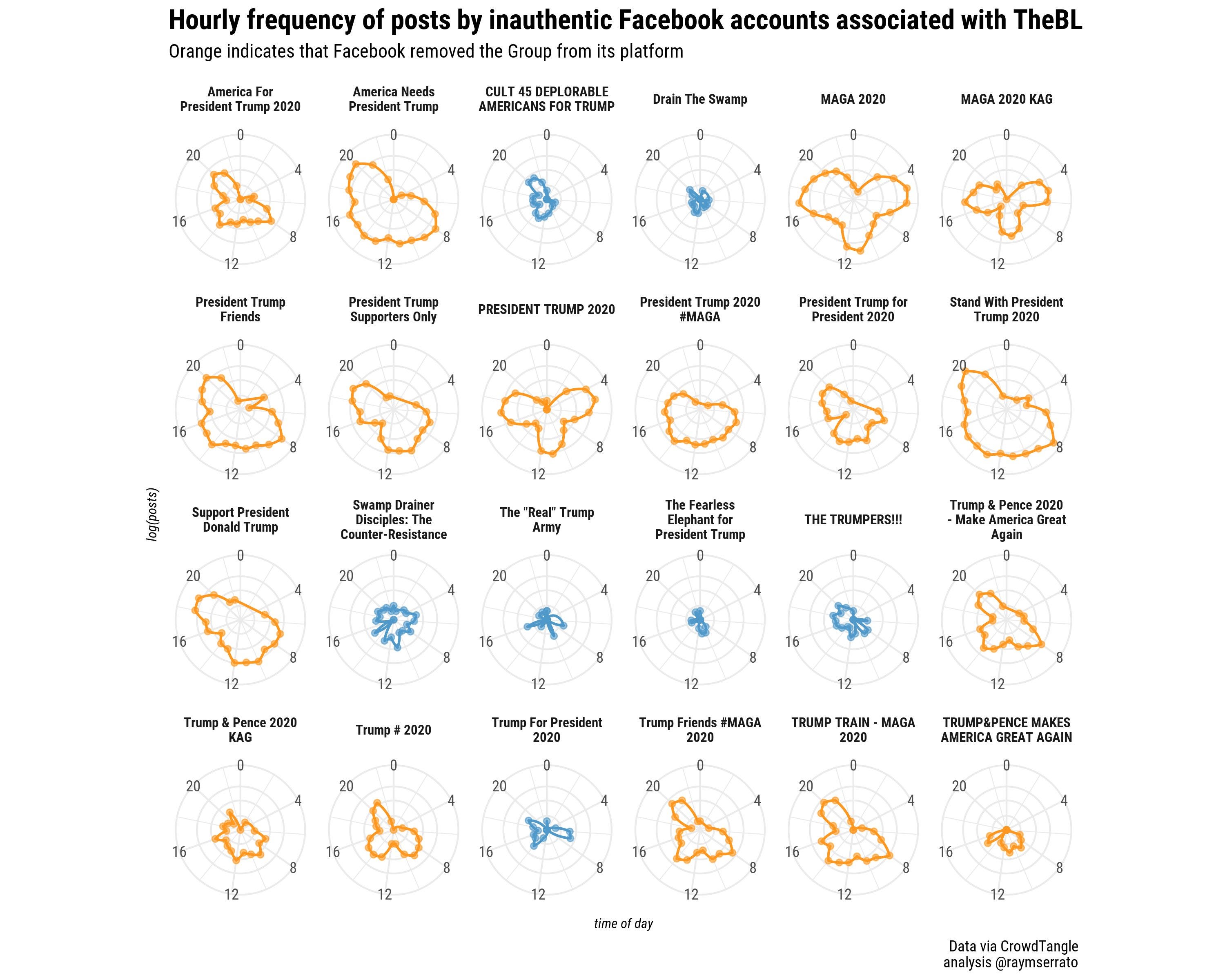

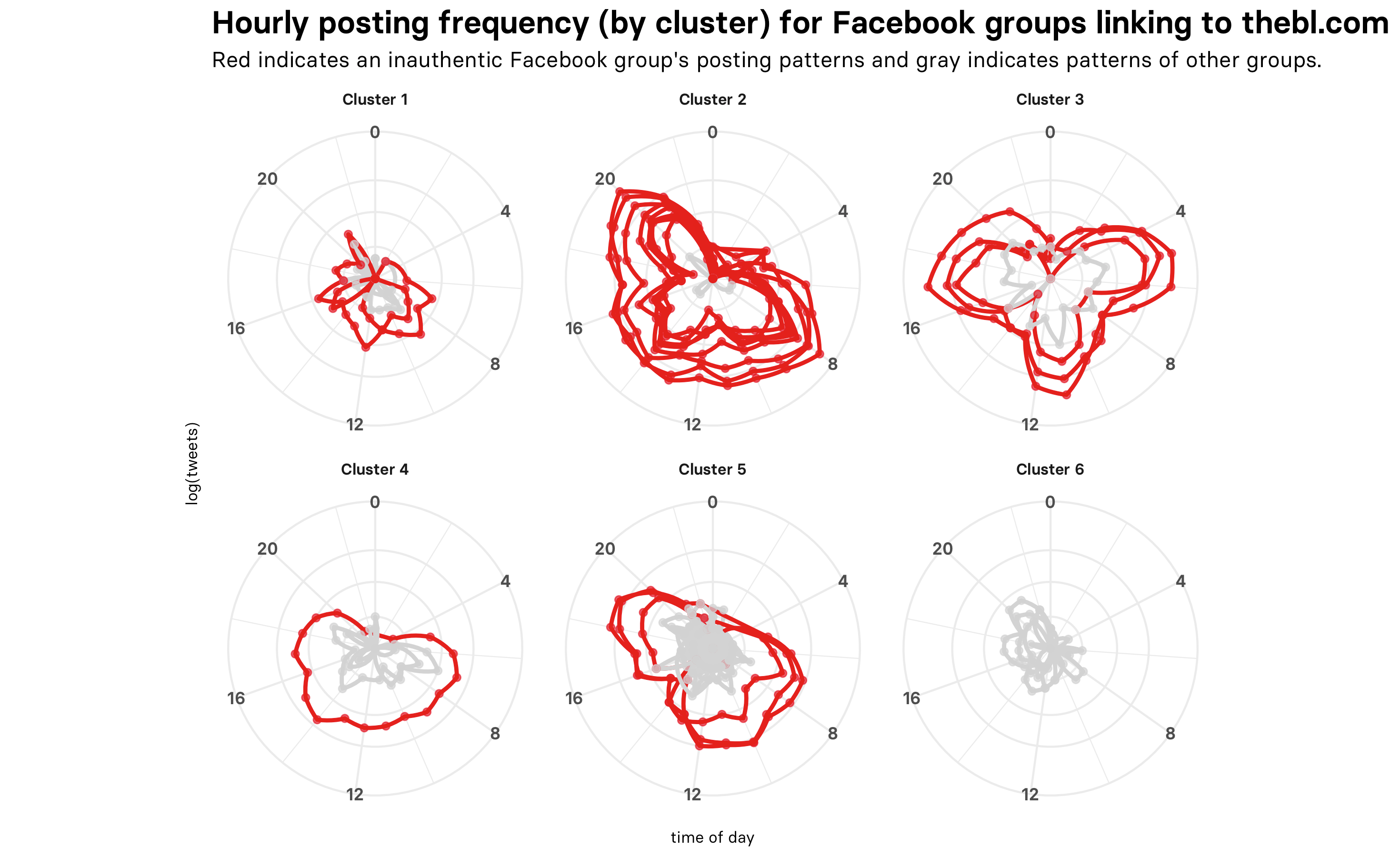

Post timestamps, however, are in the data, and using those we can visualize the groups’ temporal “signatures” for posts linking to thebl.com. The graph below shows that some groups had distinct hourly signatures, with some variation in their frequency of posting:

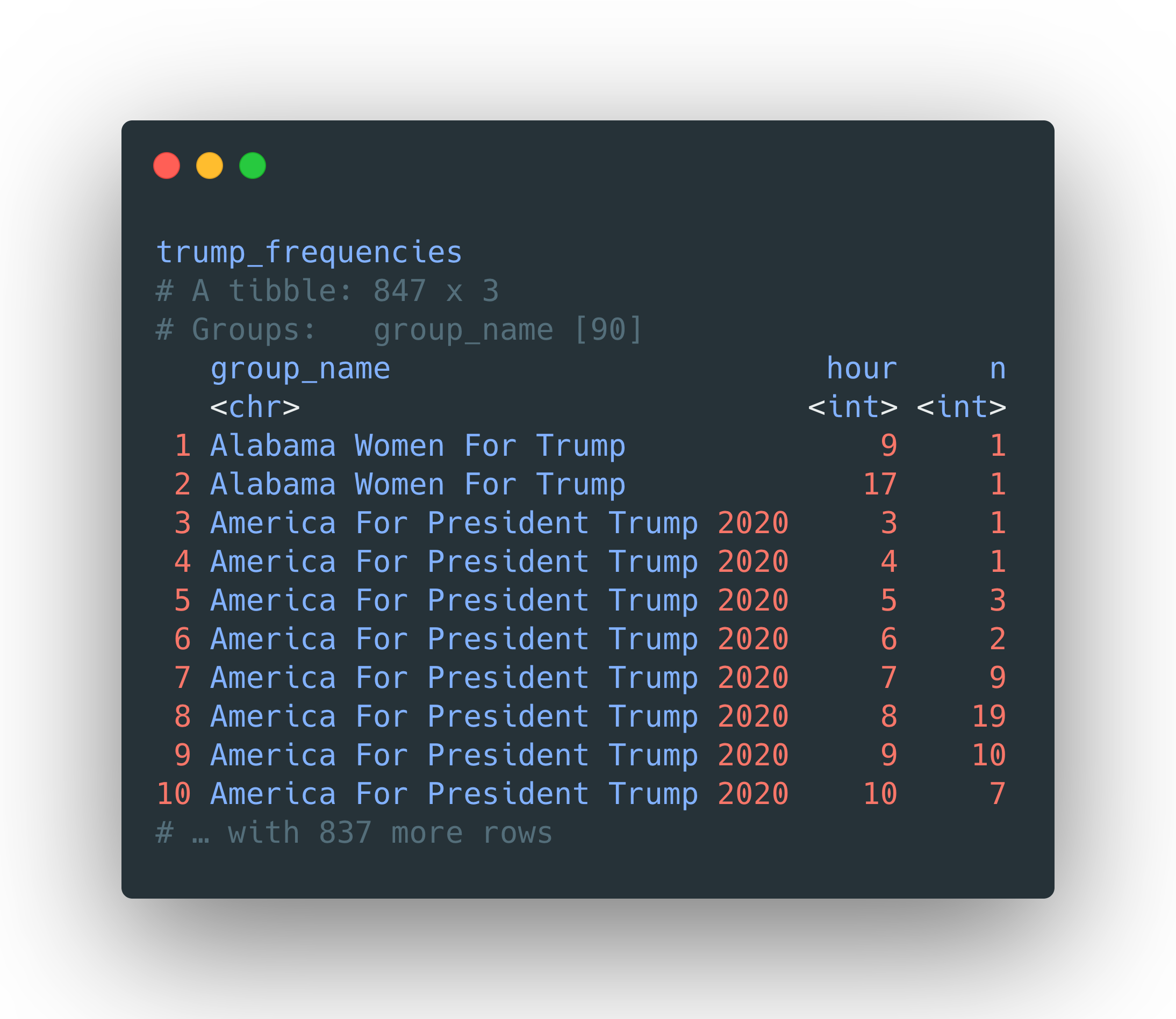
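A temporal signature of this kind is essentially a count of posts per group for each hour of the day. Here is a minimal base-R sketch, assuming post timestamps are stored as POSIXct values; reading the hour off in UTC is an assumption, and choosing a different time zone will shift every signature.

```r
# Count posts per group for each hour of the day (0-23).
# timestamp: POSIXct vector; hours are read off in time zone tz (UTC assumed).
hourly_signature <- function(group_name, timestamp, tz = "UTC") {
  hour <- as.integer(format(timestamp, "%H", tz = tz))
  # Fixing the levels to 0:23 keeps hours with no posts as explicit zeros
  tab <- table(group_name = group_name, hour = factor(hour, levels = 0:23))
  out <- as.data.frame(tab, stringsAsFactors = FALSE, responseName = "n")
  out$hour <- as.integer(out$hour)
  out
}
```

The output is a long table of group_name, hour, and n, which can be plotted per group or fed into a clustering step.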

We can also use k-means clustering to try to distinguish groups better by their posting patterns. The {widyr} package has a function, widely_kmeans, that makes this straightforward. First, we start with a dataframe where I’ve aggregated, for all groups, the number of posts linking to thebl.com in each hour, shown here as trump_frequencies.

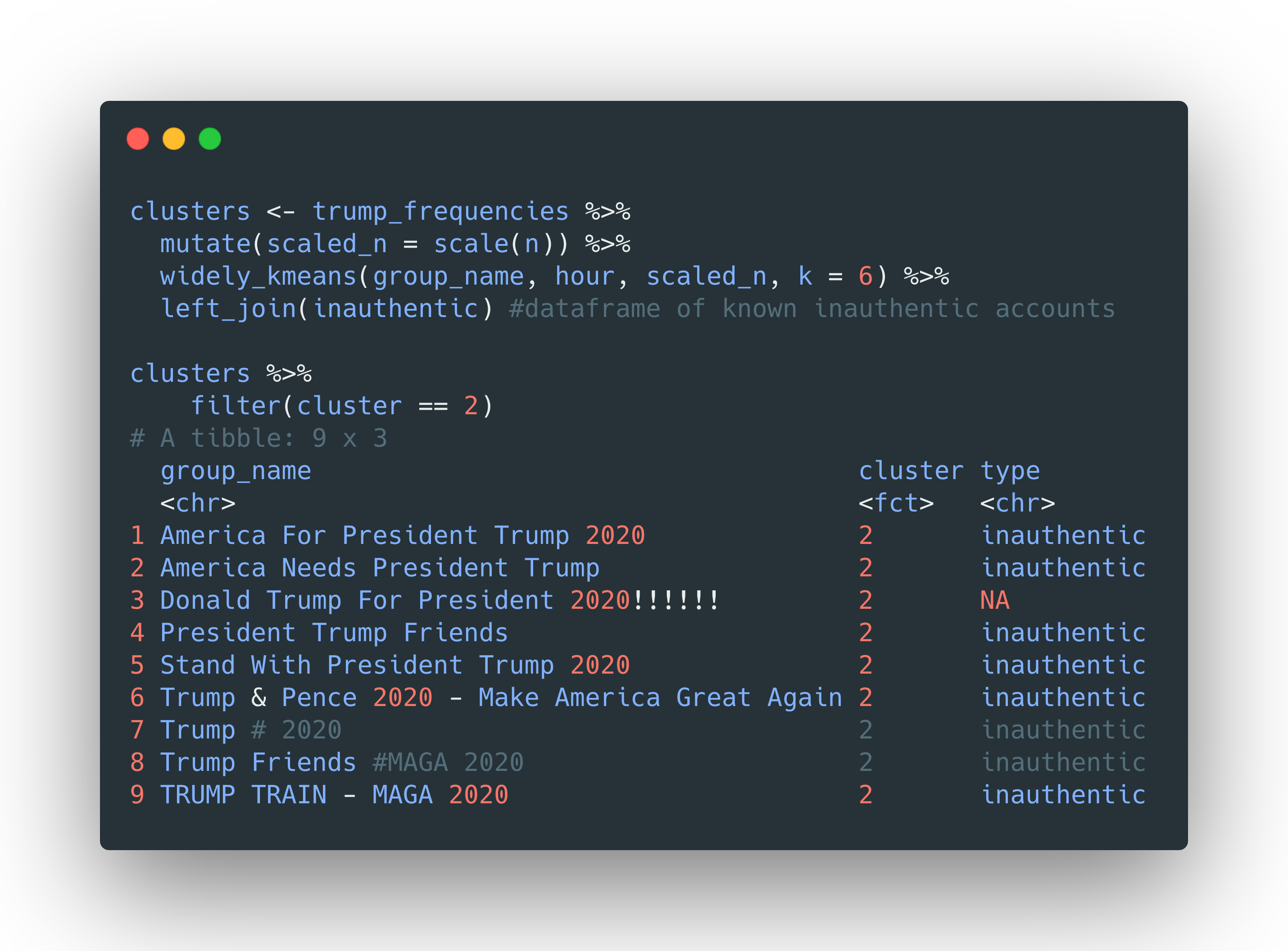

We then scale n and use widely_kmeans(), where group_name is our item, hour is our feature, and scaled_n our value. We can inspect one of the clusters and see how well it grouped inauthentic accounts together.
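For readers without {widyr}, the same idea can be approximated with base R’s kmeans(): spread the hourly counts into a group-by-hour matrix, scale it, and cluster the rows. This is a sketch of the workflow, not the author’s code. In particular, the post doesn’t say exactly how n was scaled, so z-scoring each group’s row (to compare the shape of the daily pattern rather than posting volume) is an assumption, and cluster_signatures is a hypothetical helper.

```r
# freq: long data frame with columns group_name, hour (0-23), and n (post count)
cluster_signatures <- function(freq, k, seed = 1) {
  freq$hour <- factor(freq$hour, levels = 0:23)
  # Wide matrix: one row per group, one column per hour, missing cells = 0
  m <- unclass(xtabs(n ~ group_name + hour, data = freq))
  # Z-score each group's row so clusters reflect the shape of its daily
  # pattern rather than its volume (assumption: original scaling not shown)
  scaled <- t(apply(m, 1, function(r) {
    if (sd(r) > 0) (r - mean(r)) / sd(r) else r * 0
  }))
  set.seed(seed)  # k-means uses random starts
  km <- kmeans(scaled, centers = k, nstart = 25)
  data.frame(group_name = rownames(m), cluster = unname(km$cluster),
             stringsAsFactors = FALSE)
}
```

Using several random starts (nstart) makes the assignment less sensitive to initialization; as the text notes, the clusters still need manual checking.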

We can visualize the temporal signatures again, this time faceting by clusters. This graph shows the signatures of inauthentic groups in red and authentic groups in gray. We can clearly see the similarity of temporal signatures in each cluster — the inauthentic groups have very distinct signatures that set them apart from the rest. Even so, some inauthentic groups are clustered with many other authentic groups (Cluster 5), illustrating the need for manual verification.

Coordinated link sharing behavior

Another method I’ve used comes from {CooRnet}, an R library created by researchers at the University of Urbino Carlo Bo and IT University of Copenhagen. This method focuses entirely on “coordinated link sharing behavior,” which “refers to a specific coordinated activity performed by a network of Facebook pages, groups and verified public profiles (Facebook public entities) that repeatedly shared the same news articles in a very short time from each other.” The package uses an algorithm to determine the time period in which coordinated link sharing is occurring (or you can specify it yourself) and groups accounts together based on this behavior. There are some false positives, especially for link-sharing in groups, but it’s a useful tool and it can be used to look at other kinds of coordinated behavior.
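To make the core idea concrete, here is a simplified base-R sketch of coordinated co-sharing: two accounts are linked each time they share the same item within a fixed interval of each other, and only pairs that do so repeatedly are kept. It is not {CooRnet}’s implementation (which, for instance, can determine the interval from the data); the function name, column names, and the min_repeat threshold are hypothetical.

```r
# shares: data frame with columns account, item (e.g. a URL) and time (POSIXct)
find_coordinated_pairs <- function(shares, interval = 300, min_repeat = 2) {
  found <- list()
  for (it in unique(shares$item)) {
    s <- shares[shares$item == it, ]
    s <- s[order(s$time), ]
    n <- nrow(s)
    if (n < 2) next
    for (i in 1:(n - 1)) {
      for (j in (i + 1):n) {
        gap <- as.numeric(difftime(s$time[j], s$time[i], units = "secs"))
        # Shares are sorted by time, so every later j is even further away
        if (gap > interval) break
        if (s$account[i] != s$account[j]) {
          a <- sort(c(s$account[i], s$account[j]))
          found[[length(found) + 1]] <- data.frame(
            account1 = a[1], account2 = a[2], item = it,
            stringsAsFactors = FALSE)
        }
      }
    }
  }
  if (length(found) == 0) {
    return(data.frame(account1 = character(), account2 = character(),
                      n_items = integer()))
  }
  # Count each item at most once per pair, then count items per pair
  p <- unique(do.call(rbind, found))
  p$n_items <- 1L
  out <- aggregate(n_items ~ account1 + account2, data = p, FUN = sum)
  out[out$n_items >= min_repeat, ]
}
```

The pairwise loop is quadratic in the number of shares per item, which is fine for a sketch but would need a smarter windowing approach on large datasets.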

Recently, I adapted code to use {CooRnet}’s get_coord_shares function (the primary way to detect “networks of entities” engaged in coordination) on data from Instagram. A working paper out of the Center for Complex Networks and Systems Research lays out a network-based framework for uncovering accounts engaged in coordination, relying on data from content, temporal activity, handle-sharing, and other digital traces.

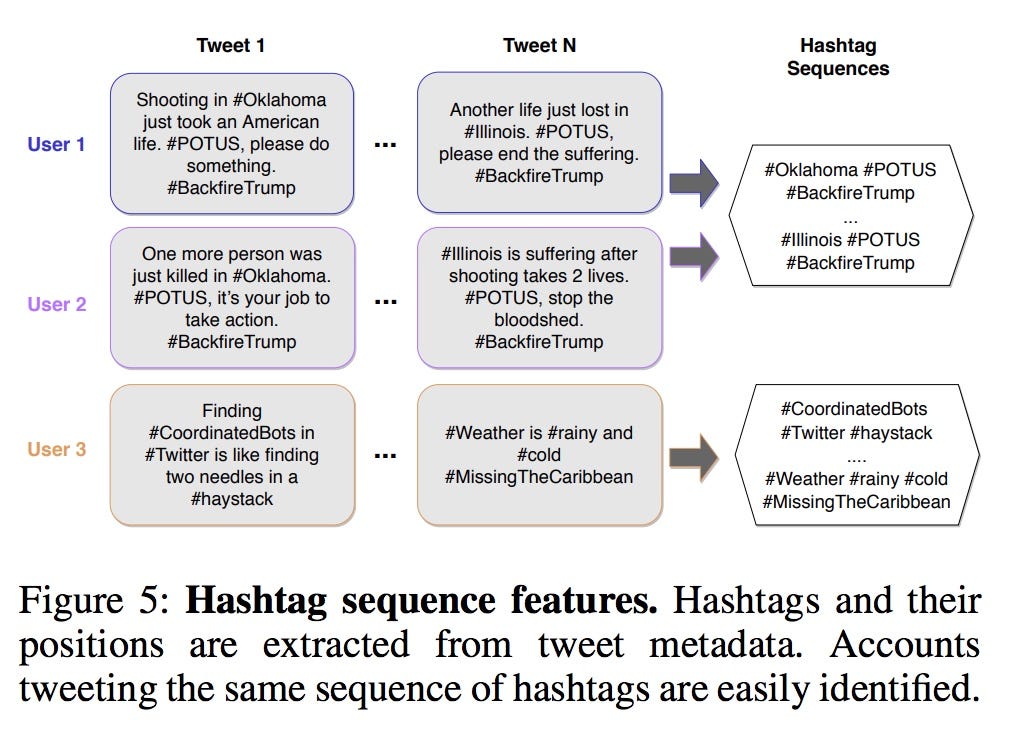

In one case study the authors propose identifying coordinated accounts using highly similar sequences of hashtags across messages (see Figure 5, from their paper); they theorize that while assets may try to obfuscate their coordination by paraphrasing similar text in messages, “even paraphrased text is likely to include the same hashtags based on the targets of a coordinated campaign.”

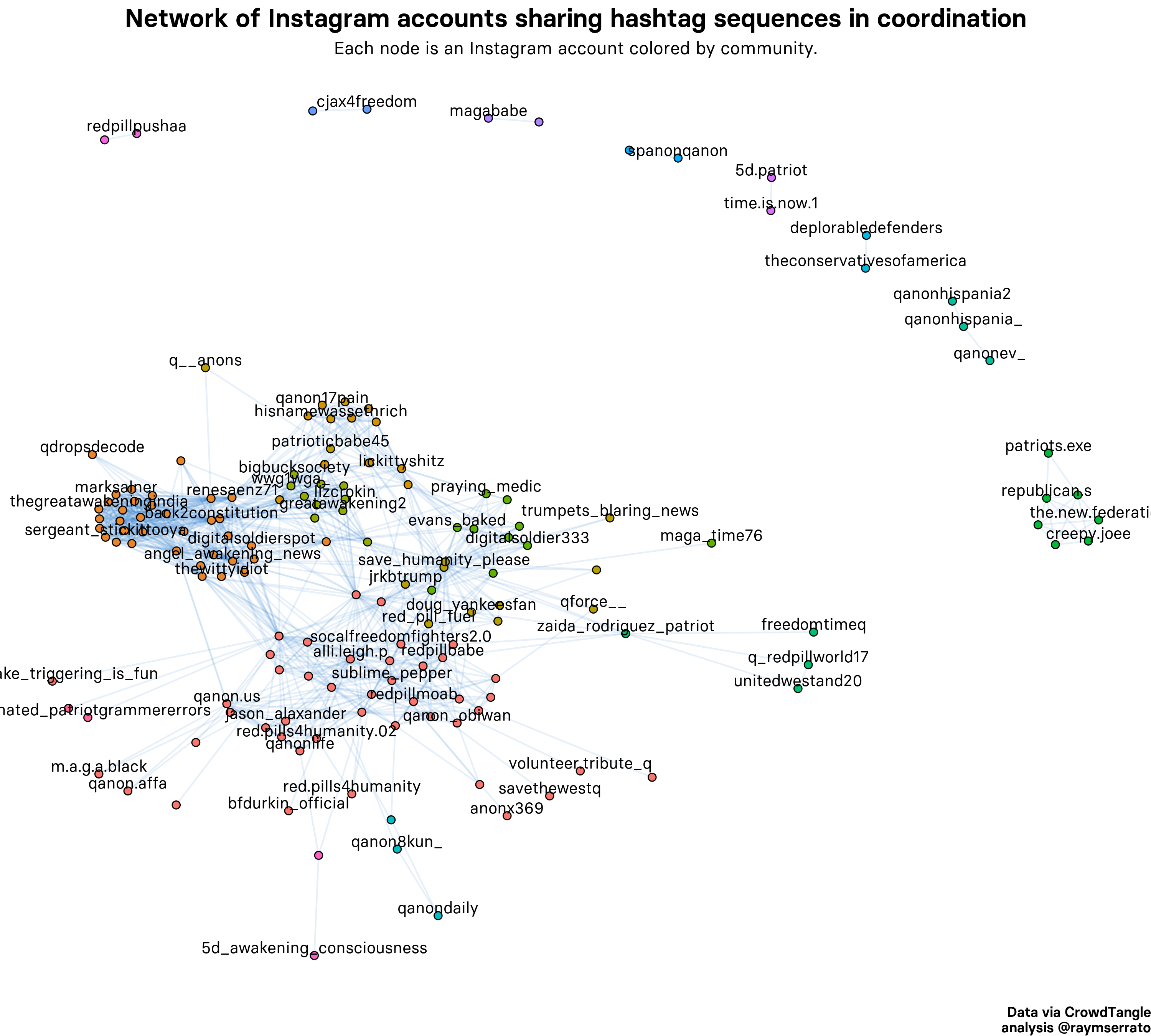

Given the content of Instagram messages, I thought this would be a good opportunity to test this method with {CooRnet}. Using CrowdTangle, I retrieved 166,808 Instagram messages mentioning “QAnon” or “wg1wga” posted since January 1, 2020. I then extracted the sequence of hashtags used in each message and removed sequences that had not been used more than 20 times. This resulted in hashtag sequences that look like this (the QAnon community isn’t exactly known for its brevity):

QAnon WWG1WGA UnitedNotDivided TheGreatAwakening Spexit España EspañaViva VivaEspaña ArribaEspaña NWO Bilderberg Rothschild MakeSpainGreatAgain AnteTodoEspaña Comunismo Marxismo Feminismo Socialismo PSOE UnidasPodemos FaseLibertad Masones Satanismo ObamaGate Pizzagate Pedogate QAnonEspaña DV1VTq qanon wwg1wga darktolight panicindc sheepnomore patriotshavenoskincolor secretspaceprogram thegreatawakeningasleepnomore savethechildren itsallaboutthekids protectthechildren momlife familyiseverything wqke wakeupamerica wakeupsheeple wwg1wga qanon🇺🇸 qanonarmy redpill stormiscoming stormishere trusttheplan darktolight freedomisnotfree q whitehats conspiracyrealist

Once I had my hashtag sequences, I could write code to feed data into the get_coord_shares function, setting a coordination interval of 300 seconds, or 5 minutes. This means that the algorithm would look for accounts that deployed identical hashtag sequences within a 5-minute window of one another. The algorithm detected 157 highly coordinated accounts, whose relationships I’ve visualized in the network graph below.
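The hashtag-sequence preprocessing can be sketched in base R as follows, assuming a hypothetical messages data frame with a text column. Each message is reduced to its hashtags in order of appearance, joined into a single string that can stand in for a URL as the shared item; sequences used 20 times or fewer are dropped, matching the threshold described above. The min_tags argument is an illustrative addition for dropping one-hashtag sequences, and the regex is deliberately simple (trailing punctuation, for example, stays attached to a tag).

```r
# messages: data frame with a text column (one row per message)
prepare_hashtag_sequences <- function(messages, min_uses = 20, min_tags = 1) {
  # Hashtags in order of appearance; the pattern is deliberately simple
  tags <- regmatches(messages$text,
                     gregexpr("#[^\\s#]+", messages$text, perl = TRUE))
  messages$n_tags <- lengths(tags)
  # Join the tags (without "#") into a single key per message
  messages$item <- vapply(tags,
                          function(t) paste(sub("^#", "", t), collapse = " "),
                          character(1))
  # Drop messages without hashtags (and, optionally, very short sequences)
  messages <- messages[messages$n_tags >= max(1, min_tags), ]
  # Keep only sequences used more than min_uses times
  uses <- table(messages$item)
  messages[messages$item %in% names(uses)[uses > min_uses], ]
}
```

Case is preserved, as in the sample above, so “QAnon” and “qanon” count as different tags; lower-casing the tags first would be a reasonable alternative design choice.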

First, we can see small isolates that are clustered together and, even at first glance, are clearly related as backup accounts of one another: qanonhispania_ and qanonhispania2; redpillpusha and redpillpushaa; and other isolates like deplorabledefenders and theconservativesofamerica. We also find a larger isolate in the right-middle of the graph, consisting of accounts like creepy.joee, the.new.federation, republican.s, and others.
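Isolates like these are the connected components of the coordination network. Here is a small base-R sketch that finds them with breadth-first search, assuming an edge list with account1 and account2 columns (find_components is a hypothetical helper); igraph’s components() function does the same job in a single call.

```r
# edges: data frame with columns account1 and account2 (one row per link)
find_components <- function(edges) {
  nodes <- sort(unique(c(edges$account1, edges$account2)))
  comp <- setNames(rep(NA_integer_, length(nodes)), nodes)
  id <- 0L
  for (start in nodes) {
    if (!is.na(comp[[start]])) next  # already assigned to a component
    id <- id + 1L
    comp[[start]] <- id
    queue <- start
    # Breadth-first search from start, labelling every reachable account
    while (length(queue) > 0) {
      v <- queue[1]
      queue <- queue[-1]
      nbrs <- c(edges$account2[edges$account1 == v],
                edges$account1[edges$account2 == v])
      nbrs <- unique(nbrs[is.na(comp[nbrs])])
      comp[nbrs] <- id
      queue <- c(queue, nbrs)
    }
  }
  data.frame(account = nodes, component = unname(comp),
             stringsAsFactors = FALSE)
}
```

Tabulating the component column gives component sizes, which is a quick way to separate two-account pairs from larger isolates before inspecting each one by hand.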

After inspecting each account in this isolate, I found that the accounts are tied to an organization called “Red Elephant Marketing LLC.” The accounts are clearly coordinated, but it’s also obvious why this is the case. (After more open source research, I tied the accounts to a network of conservative media outlets and LLCs, including Facebook pages with no transparency about ownership, all of them likely linked to a single individual.)

The dense cluster in the center of the graph looks like a giant soup of coordination (if we accept the algorithm’s output as gospel), but after inspecting the hashtag sequences there, I found that it is actually driven by single-hashtag sequences like “QAnon” and “wg1wga.” These were my initial search queries, and many QAnon accounts use them frequently. When I filtered out these single-hashtag sequences, the number of coordinated accounts dropped dramatically. We can’t rule out that this cluster is highly coordinated, but it is less likely; to be certain, we’d need additional analysis (e.g., adjusting the coordination interval, checking the results again, and examining other data like content).

What is “coordination”, anyway?

Part of what makes disinformation campaigns so difficult to detect is that so much of our online activity appears, and indeed is, coordinated. Hashtags can summon entire movements into being in just a few hours and diverse groups of people can appear in your replies in minutes. The crowdsourced mobilization of the #MeToo movement and #BlackLivesMatter are good examples of such events.

But these networked publics are also playgrounds for a growing number of adversaries: scammers, hyper-partisan media, fringe conspirators, political consultants, state-backed baddies, and more. All of these actors usually engage in some level of coordination and inauthenticity, some more obliquely than others, and they are constantly trying to hide among sincere activists. Evelyn Douek makes this point well in “What Does ‘Coordinated Inauthentic Behavior’ Actually Mean?”:

Coordination and authenticity are not binary states but matters of degree, and this ambiguity will be exploited by actors of all stripes.

A recent working paper by researchers at the University of Florida also sheds light on why this distinction is so difficult. The researchers analyzed 51 million tweets from Twitter’s information operations archive in an attempt to distinguish the coordination found in state-backed campaigns from the coordination found in online communities.

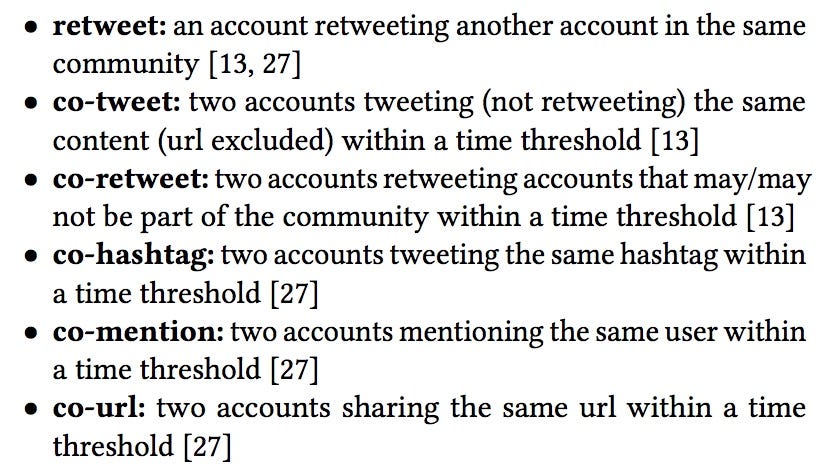

Using six previously established “coordination patterns” from other disinformation studies, the researchers built a network-based classifier and applied it to 10 state-backed campaigns and 4 online communities, ranging from political communities to academics and security researchers. They found that coordination is indeed not uncommon on Twitter, and that highly political communities are more likely to show patterns similar to those used by strategic information operations.

These findings suggest that identifying coordination patterns alone is not enough to detect disinformation campaigns. Analysts need to consider coordination alongside other pieces of evidence suggesting that a group of accounts might be connected. Do the accounts have a similar or identical temporal signature? Do they have the same number of page administrators or similar locations? Is there additional information on domain registrants? Do the accounts deploy similar narratives, images, or other content? How else might they be connected, and are there reasonable explanations for those connections? As researchers in a field burgeoning with disinformation, we must reach conclusions that rest on multiple pieces of evidence, that have been weighed against alternative hypotheses, and that we are reliably confident we have tested and can defend.

Source: https://medium.com/swlh/detecting-coordination-in-disinformation-campaigns-7e9fa4ca44f3
