bigquery

BigQuery is a fantastic tool! It lets you do really powerful analytics work using SQL-like syntax.

But it lacks a way to chain SQL queries: we cannot run one query right after another one completes. In many real-life applications, the execution of one query depends on the output of another, and we want to run multiple queries in sequence to get the result.

Here is one scenario: suppose you are doing RFM analysis using BigQuery ML. First you have to calculate the RFM values for all users, then apply k-means clustering to the result of the first query, and then merge the output of the first and second queries to generate the final data table.

In the above scenario, every query depends on the output of the previous one, and the output of each query also needs to be stored in a table for other uses.

In this guide I will show how to execute as many SQL queries as you want in BigQuery, one after another, creating a chaining effect to get the desired results.

Methods

I will demonstrate two approaches to chaining the queries:

The first uses Cloud Pub/Sub and Cloud Functions: This is the more sophisticated method, as it ensures that the current query has finished executing before the next one starts. It also requires a bit of programming experience, so it is better to reach out to someone with a technical background in your company.

The second uses BigQuery’s own scheduler: However, the query scheduler cannot ensure that one query has completed before the next is triggered, so we will have to hack it using the query execution time. More on this later.

And if you want to get your hands dirty yourself, then here is an excellent course to start with.

Note: We will continue with the RFM example discussed above to give you an idea of the process, but the same approach can be applied to any scenario where multiple SQL queries need to be triggered.

Method 1

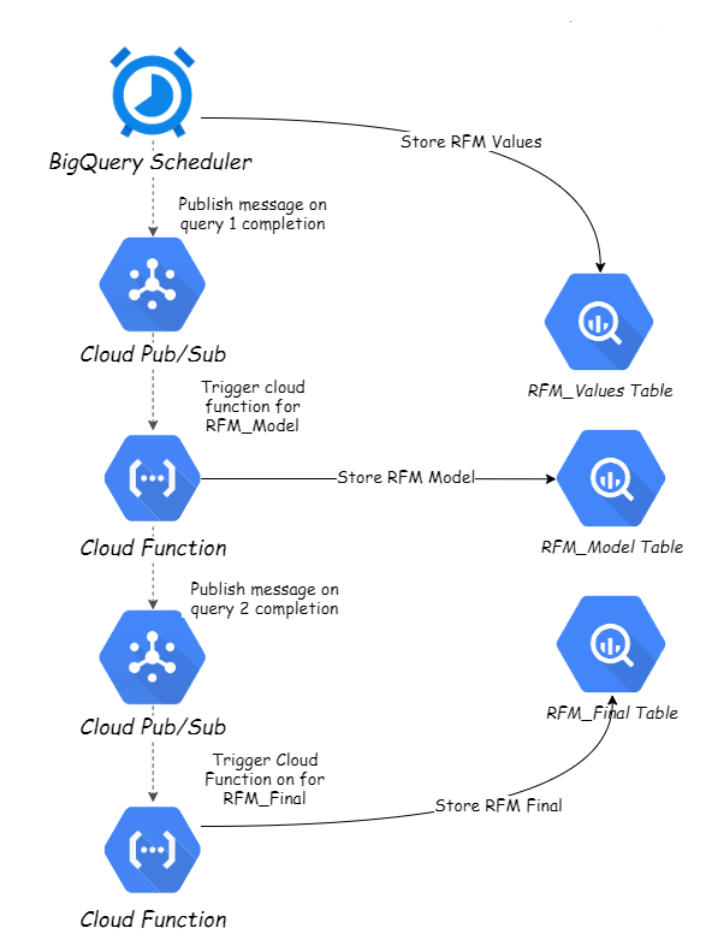

Method 1 uses a combination of Cloud Functions and Pub/Sub to chain the entire flow. The process starts with the query scheduler, which, after executing the first query, sends a message to a Pub/Sub topic. That message triggers a cloud function that runs the second query and, once it completes, sends a message to another Pub/Sub topic to start yet another cloud function. The process continues until the last query is executed by a cloud function.

Let’s understand the process with our RFM analysis use case.

Suppose we have three queries that need to be run one after another to perform RFM analysis. The first calculates RFM values; we will call it RFM Values. The second creates the model; we will call it RFM Model. The third merges the model output with the users' RFM values; we will call it RFM Final.

Here is what the data pipeline looks like:

Note: I will assume that the tables for all three queries have already been created.

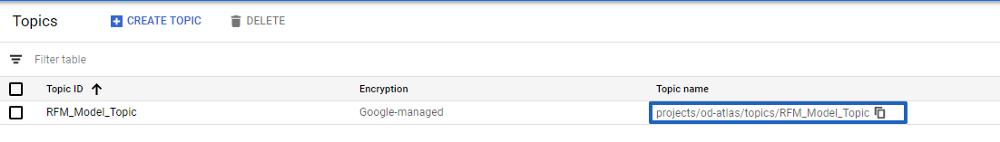

1- We start by creating a Pub/Sub topic, as it will be needed when creating the RFM Values query scheduler. I have named it RFM_Model_Topic because it will trigger the cloud function responsible for executing our model query (i.e. RFM Model).

Pub/Sub topic, by Muffaddal

Copy the topic name; it is needed when creating the RFM Values scheduler.

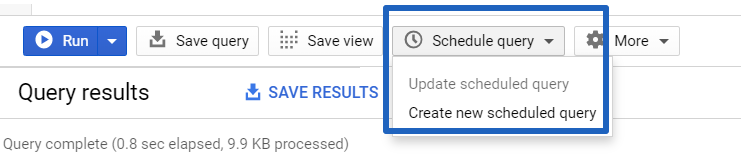

2- Next, go to BigQuery, paste the RFM Values query, which calculates RFM values for our users, into the query editor, and click the ‘Schedule query’ button to create a new query scheduler.

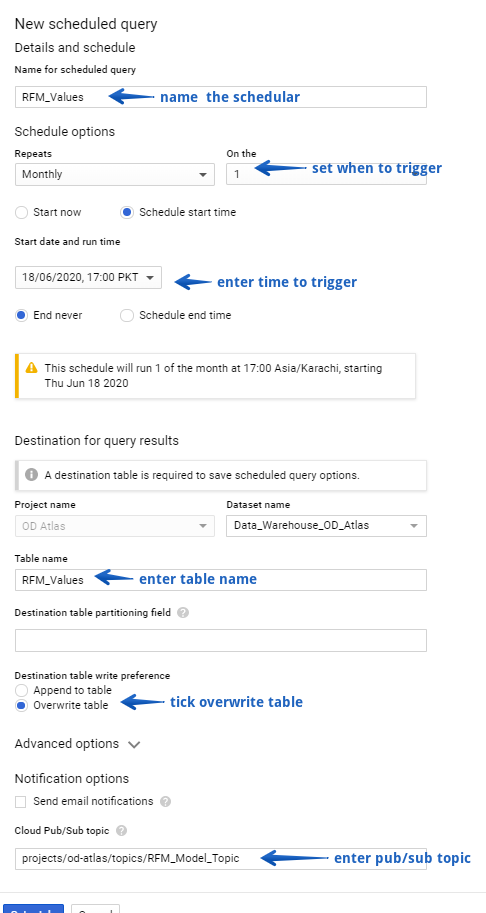

3- Enter the required values in the scheduler creation menu to create the scheduler.

This scheduler will run at the specified time, calculate users' recency, frequency, and monetary values, and store them in the specified BigQuery table. Once the scheduler has finished executing the query, it sends a message to our RFM_Model_Topic, which triggers a cloud function that runs our model query. So next, let’s create a cloud function.
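Because the scheduler publishes a message when a run finishes, the cloud function can inspect that message before starting the next query. The sketch below assumes the message data is a base64-encoded, JSON-serialized transfer run whose `state` field is `SUCCEEDED` on success; verify the actual payload in your own project. The function name `shouldRunNextQuery` is hypothetical.

```javascript
// Decide whether the upstream scheduled query finished successfully.
// Assumption: `message.data` is base64-encoded JSON with a `state` field.
function shouldRunNextQuery(message) {
  if (!message || !message.data) return false;
  let run;
  try {
    run = JSON.parse(Buffer.from(message.data, 'base64').toString('utf8'));
  } catch (err) {
    return false; // payload is not valid JSON: do not start the next query
  }
  return Boolean(run) && run.state === 'SUCCEEDED';
}
```

Calling this at the top of the cloud function lets it skip the model query when the RFM Values run failed, instead of building a model on stale data.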

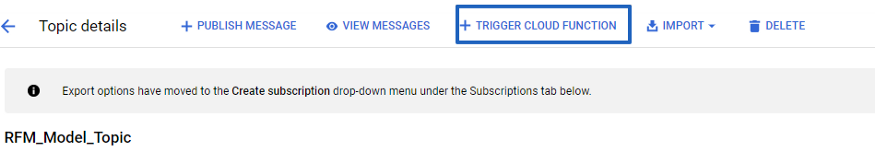

4- Go to the RFM_Model_Topic Pub/Sub topic and click the ‘Trigger Cloud Function’ button at the top of the screen.

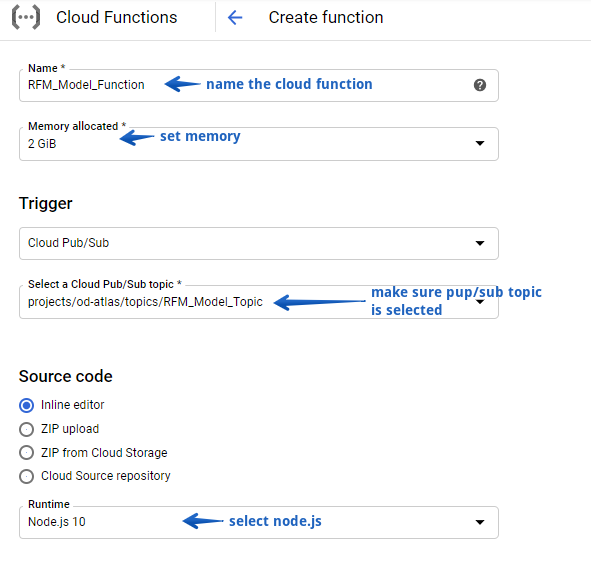

5- Enter the settings as shown below and name the cloud function RFM_Model_Function.

6- Paste the code below into the index.js file.

Once the query has executed, the cloud function publishes a message to a new Pub/Sub topic named RFM_Final, which triggers the cloud function responsible for the last query, the one that combines the RFM values and the model results into one data set.

7- Therefore, next, create the RFM_Final topic in Pub/Sub and a cloud function for it, as we did in the previous steps. Paste the code below into the cloud function so that it can run the last query.

And that is it!

We can use as many Pub/Sub topics and cloud functions as we need to chain as many SQL queries as we want.
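When the chain grows, it can help to write the wiring down before creating anything in the console. The following sketch plans which topic triggers each query and which topic each query publishes to. The `<Query_Name>_Topic` naming convention and the function name `planChain` are assumptions made for illustration.

```javascript
// Plan the Pub/Sub wiring for an ordered list of queries.
// Topic names follow a hypothetical "<Query_Name>_Topic" convention.
function planChain(queryNames) {
  const topicFor = (name) => name.replace(/\s+/g, '_') + '_Topic';
  return queryNames.map((name, i) => ({
    query: name,
    // The first query is started by the scheduler, the rest by a topic.
    triggeredBy: i === 0 ? 'query scheduler' : topicFor(name),
    // Every query except the last publishes to the next query's topic.
    publishesTo: i + 1 < queryNames.length ? topicFor(queryNames[i + 1]) : null,
  }));
}

console.table(planChain(['RFM Values', 'RFM Model', 'RFM Final']));
```

Each row of the plan corresponds to one scheduler or one cloud function, so adding a fourth query only means adding one more name to the list.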

Method 2

Now, the first approach is robust but requires a bit of programming background. Say that is not your strong suit: you can use method 2 to chain the BigQuery queries.

BigQuery’s query scheduler can be used to run the queries one after another.

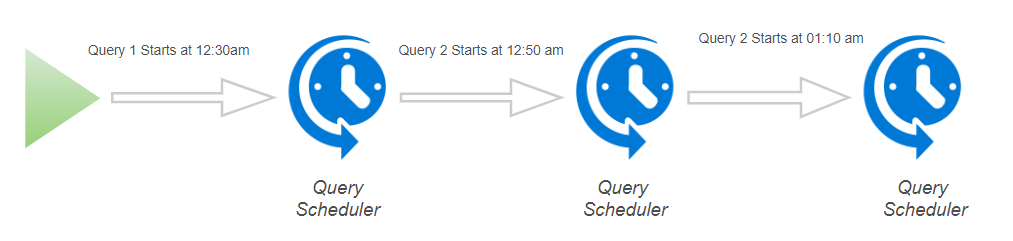

The idea is that we start the process the same way as in method 1, i.e. trigger the first query using a scheduler, and estimate its completion time. Let’s say the first query takes 5 minutes to complete. We then trigger the second query 10 minutes after the first query's start time. This leaves a buffer so that the second query is triggered only after the first one has finished, as long as the estimate holds.

Let’s understand this with an example.

Suppose we schedule the first query at 12:30 am and it takes 10 minutes to complete, so we know the first query should be done by 12:40 am. We set the second query's scheduler to execute at 12:50 am (keeping a 10-minute gap between the two schedulers, just in case). We then trigger the third query at 1:10 am, and so on.
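The arithmetic above can be written as a small helper: each start time is the previous start plus the previous query's estimated duration plus a safety buffer. This is only a sketch; the function names are hypothetical, and the 10-minute duration of the second query is an assumption chosen so that the result matches the 1:10 am start of the third query.

```javascript
// Compute start times for queries chained by schedule alone.
// estimatedMinutes[i] is the expected run time of query i; the next query
// starts after that run time plus bufferMinutes.
function chainStartTimes(firstStart, estimatedMinutes, bufferMinutes) {
  const [h, m] = firstStart.split(':').map(Number);
  let minutes = h * 60 + m;
  const starts = [formatTime(minutes)];
  for (const duration of estimatedMinutes) {
    minutes += duration + bufferMinutes;
    starts.push(formatTime(minutes));
  }
  return starts;
}

// Format minutes since midnight as 24-hour HH:MM, wrapping past midnight.
function formatTime(totalMinutes) {
  const wrapped = totalMinutes % (24 * 60);
  const hh = String(Math.floor(wrapped / 60)).padStart(2, '0');
  const mm = String(wrapped % 60).padStart(2, '0');
  return `${hh}:${mm}`;
}

console.log(chainStartTimes('00:30', [10, 10], 10));
// prints [ '00:30', '00:50', '01:10' ]
```

A larger buffer makes the chain safer but slower; if a query ever runs longer than its estimate plus the buffer, the next query will start on incomplete data.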

Note: Since the query scheduler doesn’t work with BigQuery ML, method 2 won’t work for our RFM analysis case, but it should give you an idea of how to use the scheduler to chain queries.

Summary

Executing queries one after another helps to achieve really great results, especially when the result of one query depends on the output of another and every query's result also needs to be stored as a table. BigQuery doesn’t support this out of the box, but using GCP’s components we can streamline the process to achieve the results.

In this article, we went through two methods for doing this: the first using Cloud Pub/Sub and Cloud Functions, and the second using BigQuery’s own query scheduler.

With this article, I hope I was able to convey the idea of the process so that you can pick it up and tailor it to your particular business case.

Similar Reads You Would Like:

Automate the RFM analysis using BigQuery ML.

Store Standard Google Analytics Hit Level Data in BigQuery.

Automate Data Import to Google Analytics using GCP.

Translated from: https://towardsdatascience.com/chaining-multiple-sql-queries-in-bigquery-8498d8885da5
