我們考慮SVM的對偶問題,我們通常是在對偶空間中進行求解的。

1、Lagrange Multipliers

對于一個很一般的問題

Minimize?f(x)subject?to?{a(x)≥0b(x)≤0c(x)=0\begin{aligned} \text { Minimize } & f(x) \\ \text { subject to } \quad & \left\{\begin{array}{l} a(x) \geq 0 \\ b(x) \leq 0 \\ c(x)=0 \end{array}\right. \end{aligned} ?Minimize??subject?to??f(x)????a(x)≥0b(x)≤0c(x)=0??

構造拉氏函數

L(x,α)=f(x)?α1a(x)?α2b(x)?α3c(x){α1≥0α2≤0α3is?unconstrained?\begin{aligned} L(x, \alpha)= & f(x)-\alpha_1 a(x)-\alpha_2 b(x)-\alpha_3 c(x) \\ & \left\{\begin{array}{l} \alpha_1 \geq 0 \\ \alpha_2 \leq 0 \\ \alpha_3 \text { is unconstrained } \end{array}\right. \end{aligned} L(x,α)=?f(x)?α1?a(x)?α2?b(x)?α3?c(x)????α1?≥0α2?≤0α3??is?unconstrained???

我們對拉氏函數關于拉格朗日乘子求最大

max?αL(x,α)={f(x),if?{a(x)≥0b(x)≤0c(x)=0+∞,otherwise?\max _\alpha L(x, \alpha)=\left\{\begin{array}{lr} f(x), & \text { if }\left\{\begin{array}{l} a(x) \geq 0 \\ b(x) \leq 0 \\ c(x)=0 \end{array}\right. \\ +\infty, & \text { otherwise } \end{array}\right. αmax?L(x,α)=????f(x),+∞,??if?????a(x)≥0b(x)≤0c(x)=0??otherwise??

于是我們的優化目標變為

min?xmax?αL(x,α)subject?to?{a(x)≥0b(x)≤0c(x)=0\begin{aligned} \min _x &\max _\alpha L(x, \alpha)\\ \text { subject to } \quad & \left\{\begin{array}{l} a(x) \geq 0 \\ b(x) \leq 0 \\ c(x)=0 \end{array}\right. \end{aligned} xmin??subject?to??αmax?L(x,α)????a(x)≥0b(x)≤0c(x)=0??

進一步的,我們又有

min?xmax?αL(x,α)=max?αmin?xL(x,α)\min _x \max _\alpha L(x, \alpha)=\max _\alpha \min _x L(x, \alpha) xmin?αmax?L(x,α)=αmax?xmin?L(x,α)

當我們在內層把xxx消掉后,我們最終的優化問題將與樣本無關,只與拉格朗日乘子有關,SVM似乎不會受樣本的維數影響

2、KKT條件

Stationarity??f(x?)?α1?a(x?)?α2?b(x?)?α3?c(x?)=0Primal?feasibility?{a(x?)≥0b(x?)≤0c(x?)=0Dual?feasibility?{α1≥0α2≤0α3is?unconstrained?Complementary?slackness?{α1a(x?)=0α2b(x?)=0α3c(x?)=0\begin{aligned} & \text { Stationarity } \nabla f\left(x^*\right)-\alpha_1 \nabla a\left(x^*\right)-\alpha_2 \nabla b\left(x^*\right)-\alpha_3 \nabla c\left(x^*\right)=0 \\ & \text { Primal feasibility }\left\{\begin{array}{l} a\left(x^*\right) \geq 0 \\ b\left(x^*\right) \leq 0 \\ c\left(x^*\right)=0 \end{array}\right. \\ & \text { Dual feasibility }\left\{\begin{array}{l} \alpha_1 \geq 0 \\ \alpha_2 \leq 0 \\ \alpha_3 \text { is unconstrained } \end{array}\right. \\ & \text { Complementary slackness }\left\{\begin{array}{l} \alpha_1 a\left(x^*\right)=0 \\ \alpha_2 b\left(x^*\right)=0 \\ \alpha_3 c\left(x^*\right)=0 \end{array}\right. \end{aligned} ??Stationarity??f(x?)?α1??a(x?)?α2??b(x?)?α3??c(x?)=0?Primal?feasibility?????a(x?)≥0b(x?)≤0c(x?)=0??Dual?feasibility?????α1?≥0α2?≤0α3??is?unconstrained???Complementary?slackness?????α1?a(x?)=0α2?b(x?)=0α3?c(x?)=0??

3、Hard Margin SVM 對偶問題

回到我們的Hard Margin SVM

Minimize 12∥w∥2\frac{1}{2}\|\mathbf{w}\|^221?∥w∥2

subject to 1?yi(wTxi+b)≤01-y_i\left(\mathbf{w}^T \mathbf{x}_i+b\right) \leq 0 \quad1?yi?(wTxi?+b)≤0 for i=1,…,ni=1, \ldots, ni=1,…,n

構造拉格朗日函數

L=12wTw+∑i=1nαi(1?yi(wTxi+b))\mathcal{L}=\frac{1}{2} \mathbf{w}^T \mathbf{w}+\sum_{i=1}^n \alpha_i\left(1-y_i\left(\mathbf{w}^T \mathbf{x}_i+b\right)\right) L=21?wTw+i=1∑n?αi?(1?yi?(wTxi?+b))

分別對權重和偏置求偏導

w+∑i=1nαi(?yi)xi=0?w=∑i=1nαiyixi∑i=1nαiyi=0αi≥0\begin{aligned} \mathbf{w}+\sum_{i=1}^n \alpha_i\left(-y_i\right) \mathbf{x}_i&=\mathbf{0} \quad \Rightarrow \quad \mathbf{w}=\sum_{i=1}^n \alpha_i y_i \mathbf{x}_i \\ \sum_{i=1}^n \alpha_i y_i&=0 \quad \alpha_i \geq 0 \\ & \end{aligned} w+i=1∑n?αi?(?yi?)xi?i=1∑n?αi?yi??=0?w=i=1∑n?αi?yi?xi?=0αi?≥0?

因此將Hard Margin SVM轉化為對偶問題(把求得的w\mathbf{w}w代入)

max?.W(α)=∑i=1nαi?12∑i=1,j=1nαiαjyiyjxiTxjsubject?to?αi≥0,∑i=1nαiyi=0\begin{aligned} & \max . \quad W(\boldsymbol{\alpha})=\sum_{i=1}^n \alpha_i-\frac{1}{2} \sum_{i=1, j=1}^n \alpha_i \alpha_j y_i y_j \mathbf{x}_i^T \mathbf{x}_j \\ & \text { subject to } \alpha_i \geq 0, \sum_{i=1}^n \alpha_i y_i=0 \end{aligned} ?max.W(α)=i=1∑n?αi??21?i=1,j=1∑n?αi?αj?yi?yj?xiT?xj??subject?to?αi?≥0,i=1∑n?αi?yi?=0?

特別注意到:

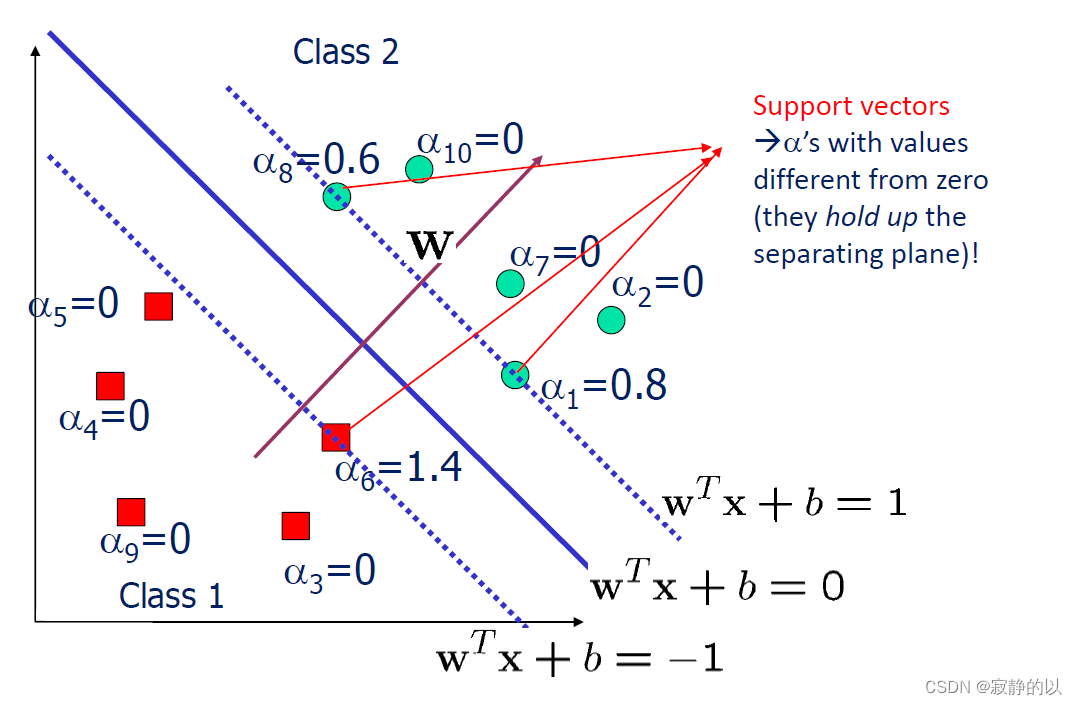

w=∑i=1nαiyixi\mathbf{w}=\sum_{i=1}^n \alpha_i y_i \mathbf{x}_i w=i=1∑n?αi?yi?xi?

- 由于標簽的值為+1或-1,所以上式隱含正負樣本對分解面的貢獻是大致相同的。正負樣本規模大致相當

- 對于每一個樣本xi\mathbf{x}_ixi?,都有一個αi\alpha_iαi?,而當αi\alpha_iαi?為000時,該樣本對分類器沒有貢獻,事實確實如此。而那些對分類器有貢獻的樣本又叫支撐向量Support Vectors

)

)

-- 配置文件之Gzip)