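Histogram back projection uses a given histogram to find the regions of an image whose pixel distribution matches it. OpenCV provides two algorithms: one pixel-based and one patch-based.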
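To make the pixel-based variant concrete, here is a minimal sketch in plain C with no OpenCV calls. It assumes a single-channel 8-bit image stored as a flat array and equal-width bins over [0, 256). The function names `gray_histogram` and `back_project_gray` are made up for illustration, and this is not meant to mirror how OpenCV implements it internally.

```c
#include <stddef.h>

/* Count how many pixels fall into each of `bins` equal-width bins over [0, 256). */
void gray_histogram(const unsigned char *pixels, size_t n, int bins, float *hist)
{
    for (int b = 0; b < bins; ++b)
        hist[b] = 0.0f;
    for (size_t i = 0; i < n; ++i)
        hist[pixels[i] * bins / 256] += 1.0f;
}

/* Pixel-based back projection: every output pixel receives the value of the
   histogram bin that the corresponding input pixel falls into, clamped to 255. */
void back_project_gray(const unsigned char *pixels, size_t n, int bins,
                       const float *hist, unsigned char *out)
{
    for (size_t i = 0; i < n; ++i) {
        float v = hist[pixels[i] * bins / 256];
        out[i] = (unsigned char)(v > 255.0f ? 255.0f : v);
    }
}
```

Build the histogram from the template with `gray_histogram`, then pass it to `back_project_gray` together with the target image. Pixels whose value is common in the template come out bright, and pixels whose value never occurs in the template come out 0.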

I won't cover usage here; see the following references:

Histogram back projection reference 1

Histogram reference 2

Test example 1: grayscale histogram back projection

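// Template image: its histogram is what gets back-projected
IplImage * image= cvLoadImage("22.jpg");

// Target image: the image searched with that histogram
IplImage * image2= cvLoadImage("2.jpg");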

int hist_size=256;

// The upper bound is exclusive, so 256 is needed to count pixels with value 255
float range[] = {0,256};

float* ranges[]={range};

IplImage* gray_plane = cvCreateImage(cvGetSize(image),8,1);

cvCvtColor(image,gray_plane,CV_BGR2GRAY);

CvHistogram* gray_hist = cvCreateHist(1,&hist_size,CV_HIST_ARRAY,ranges,1);

cvCalcHist(&gray_plane,gray_hist,0,0);

//cvNormalizeHist(gray_hist,1.0);

IplImage* gray_plane2 = cvCreateImage(cvGetSize(image2),8,1);

cvCvtColor(image2,gray_plane2,CV_BGR2GRAY);

//CvHistogram* gray_hist2 = cvCreateHist(1,&hist_size,CV_HIST_ARRAY,ranges,1);

//cvCalcHist(&gray_plane2,gray_hist2,0,0);

//cvNormalizeHist(gray_hist2,1.0);

IplImage* dst = cvCreateImage(cvGetSize(gray_plane2),IPL_DEPTH_8U,1);

cvCalcBackProject(&gray_plane2, dst ,gray_hist);

// The resulting image is too dark, so apply histogram equalization
cvEqualizeHist(dst,dst);

cvNamedWindow( "dst");

cvShowImage("dst",dst);

cvNamedWindow( "src");

cvShowImage( "src", image2 );

cvNamedWindow( "templ");

cvShowImage( "templ", image );

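cvWaitKey(0);

Results:

The first image is the source image. The small one in the middle is the image that supplied the histogram used for back projection. The last one is the result after histogram equalization. As you can see, the pixel locations of the apple have largely been found.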
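One likely reason the raw result is dark is that the values written into the output are raw pixel counts, which stay small when the template is small and the histogram has 256 bins. An alternative to equalizing afterwards is to rescale the histogram so that its largest bin becomes 255 before back projecting. A rough sketch in plain C follows; the helper name `scale_hist_to_255` is hypothetical and not part of OpenCV.

```c
#include <stddef.h>

/* Rescale the bins in place so that the largest one becomes 255.
   An all-zero histogram is left unchanged. Returns the original maximum. */
float scale_hist_to_255(float *hist, size_t bins)
{
    float max = 0.0f;
    for (size_t b = 0; b < bins; ++b)
        if (hist[b] > max)
            max = hist[b];
    if (max > 0.0f)
        for (size_t b = 0; b < bins; ++b)
            hist[b] = hist[b] * 255.0f / max;
    return max;
}
```

After this step the most frequent template value maps to full brightness in the back projection, and the other values scale proportionally.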


Test example 2: color histogram back projection

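// Target image to search
IplImage*src= cvLoadImage("myhand2.jpg", 1);

// Template image that supplies the histogram
IplImage*templ=cvLoadImage("myhand3.jpg",1);

cvNamedWindow( "Source" );

cvShowImage( "Source", src );

// Single-channel planes for the H, S and V channels of the target
IplImage* h_plane2 = cvCreateImage( cvGetSize(src), 8, 1 );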

IplImage* s_plane2 = cvCreateImage( cvGetSize(src), 8, 1 );

IplImage* v_plane2 = cvCreateImage( cvGetSize(src), 8, 1);

IplImage* planes2[] = { h_plane2, s_plane2,v_plane2 };

IplImage* hsv2 = cvCreateImage( cvGetSize(src), 8, 3 );

cvCvtColor( src, hsv2, CV_BGR2HSV );

cvSplit( hsv2, h_plane2, s_plane2, v_plane2, 0 );

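// Print the row stride of each target plane
printf("h%d\n",h_plane2->widthStep);

printf("s%d\n",s_plane2->widthStep);

printf("v%d\n",v_plane2->widthStep);

// Single-channel planes for the H, S and V channels of the template
IplImage* h_plane = cvCreateImage( cvGetSize(templ), 8, 1 );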

IplImage* s_plane = cvCreateImage( cvGetSize(templ), 8, 1 );

IplImage* v_plane = cvCreateImage( cvGetSize(templ), 8, 1);

IplImage* planes[] = { h_plane, s_plane,v_plane };

IplImage* hsv = cvCreateImage( cvGetSize(templ), 8, 3 );

cvCvtColor( templ, hsv, CV_BGR2HSV );

cvSplit( hsv, h_plane, s_plane, v_plane, 0 );

printf("h%d\n",h_plane->widthStep);

printf("s%d\n",s_plane->widthStep);

printf("v%d\n",v_plane->widthStep);

int h_bins = 16, s_bins = 16,v_bins=16;

int hist_size[] = {h_bins, s_bins,v_bins};

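// Upper bounds are exclusive, so 256 is needed to count the value 255.
// Note: for 8-bit images H only spans 0..179, so {0,180} would use the H bins more fully.
float h_ranges[] = {0,256};

float s_ranges[] = {0,256};

float v_ranges[] = {0,256};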

float* ranges[] = { h_ranges, s_ranges,v_ranges};

CvHistogram* hist;

hist = cvCreateHist( 3, hist_size, CV_HIST_ARRAY, ranges, 1 );

cvCalcHist( planes, hist, 0, 0 );

// double a=1.f;

// cvNormalizeHist(hist,a);

//templ's hist is just calculateIplImage*back_project=cvCreateImage(cvGetSize(src),8,1);//!!歸一,把改成,就彈出對話框,說planes的steps不是一致的!cvZero(back_project); //但是我去掉歸一,改成就可以顯示//NOW we begin calculate back projectcvCalcBackProject(planes2,back_project,hist);cvNamedWindow( "back_project" );

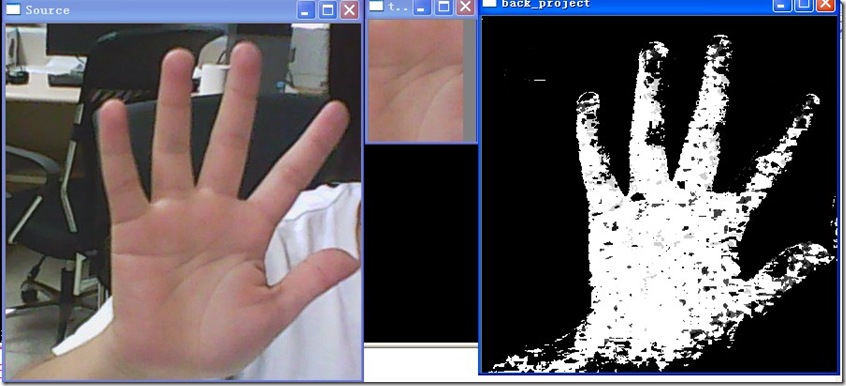

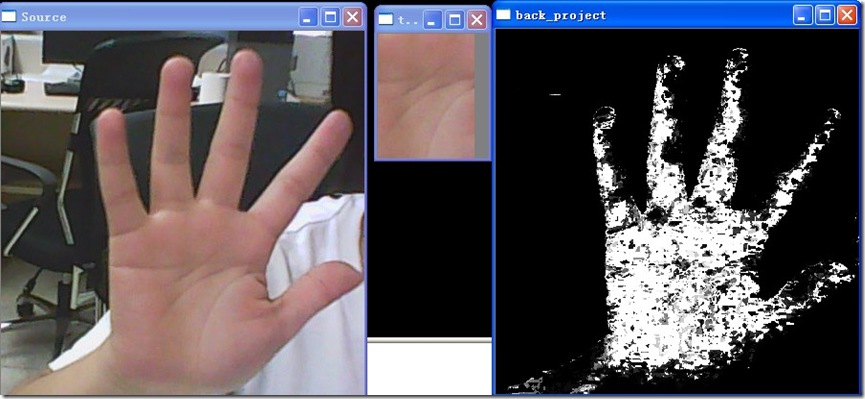

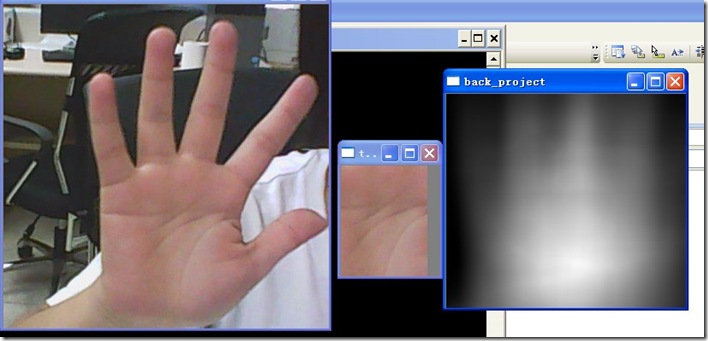

cvShowImage( "back_project", back_project ); cvWaitKey(0); 測試結果:


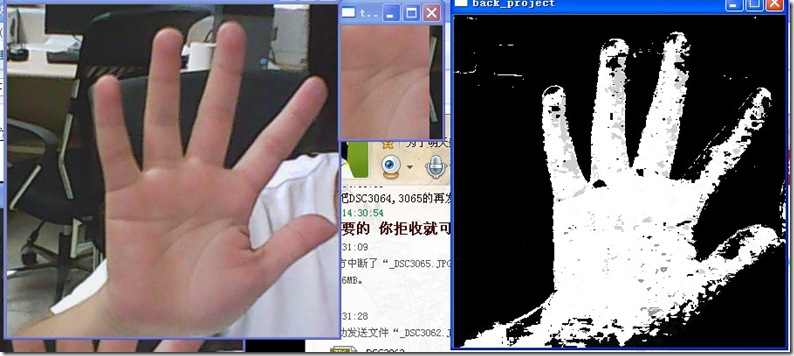

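The skin-colored regions of the hand are mostly found. One thing to note, though: the histogram used for back projection has 16 bins per channel, not 256. The images below show the results with 32 bins and with 8 bins: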

(Figures: 32 bins, 8 bins)

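The program uses the HSV components, so it also serves as an example of skin-color detection. The colors in the image are clearly distinct, so gathering statistics over coarser bins locates the skin-colored regions better. With very fine bins, variations caused by lighting would be captured as well.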
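The effect of the bin count can be seen from how a value is mapped to a bin. Here is a small sketch in plain C, assuming equal-width bins over [0, 256); the helpers `bin_index` and `same_bin` are hypothetical names used only for illustration.

```c
/* Index of the equal-width bin over [0, 256) that an 8-bit value falls into. */
int bin_index(unsigned char value, int bins)
{
    return value * bins / 256;
}

/* Nonzero when two values are counted in the same bin for the given bin count. */
int same_bin(unsigned char a, unsigned char b, int bins)
{
    return bin_index(a, bins) == bin_index(b, bins);
}
```

With 16 bins, the values 100 and 104 (say, the same skin tone under slightly different lighting) land in the same bin, so `same_bin(100, 104, 16)` is nonzero. With 256 bins they are counted separately, so a pixel only matches if the template contains exactly that value.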

Test example 3: patch-based histogram back projection

This method is very slow, so don't make the template image too large.

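// Target image to search
IplImage*src= cvLoadImage("2.jpg", 1);

// Template image that supplies the histogram and the patch size
IplImage*templ=cvLoadImage("22.jpg",1);

cvNamedWindow( "Source" );

cvShowImage( "Source", src );

// Single-channel planes for the H, S and V channels of the target
IplImage* h_plane2 = cvCreateImage( cvGetSize(src), 8, 1 );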

IplImage* s_plane2 = cvCreateImage( cvGetSize(src), 8, 1 );

IplImage* v_plane2 = cvCreateImage( cvGetSize(src), 8, 1);

IplImage* planes2[] = { h_plane2, s_plane2,v_plane2 };

IplImage* hsv2 = cvCreateImage( cvGetSize(src), 8, 3 );

cvCvtColor( src, hsv2, CV_BGR2HSV );

cvSplit( hsv2, h_plane2, s_plane2, v_plane2, 0 );

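// Print the row stride of each target plane
printf("h%d\n",h_plane2->widthStep);

printf("s%d\n",s_plane2->widthStep);

printf("v%d\n",v_plane2->widthStep);

// Single-channel planes for the H, S and V channels of the template
IplImage* h_plane = cvCreateImage( cvGetSize(templ), 8, 1 );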

IplImage* s_plane = cvCreateImage( cvGetSize(templ), 8, 1 );

IplImage* v_plane = cvCreateImage( cvGetSize(templ), 8, 1);

IplImage* planes[] = { h_plane, s_plane,v_plane };

IplImage* hsv = cvCreateImage( cvGetSize(templ), 8, 3 );

cvCvtColor( templ, hsv, CV_BGR2HSV );

cvSplit( hsv, h_plane, s_plane, v_plane, 0 );

printf("h%d\n",h_plane->widthStep);

printf("s%d\n",s_plane->widthStep);

printf("v%d\n",v_plane->widthStep);

int h_bins = 16, s_bins = 16,v_bins=16;

int hist_size[] = {h_bins, s_bins,v_bins};

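// Upper bounds are exclusive, so 256 is needed to count the value 255.
// Note: for 8-bit images H only spans 0..179, so {0,180} would use the H bins more fully.
float h_ranges[] = {0,256};

float s_ranges[] = {0,256};

float v_ranges[] = {0,256};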

float* ranges[] = { h_ranges, s_ranges,v_ranges};

CvHistogram* hist;

hist = cvCreateHist( 3, hist_size, CV_HIST_ARRAY, ranges, 1 );

cvCalcHist( planes, hist, 0, 0 );

// The output holds one value per patch position, so it is smaller than src
CvSize temp ;

temp.height = src->height - templ->height + 1;

temp.width = src->width - templ->width + 1;

IplImage*back_project=cvCreateImage(temp,IPL_DEPTH_32F,1);
// !! With normalization enabled and this value changed, a dialog pops up saying the steps of the planes are not consistent!

cvZero(back_project);
// But with normalization removed and this value changed, the result displays fine

cvCalcBackProjectPatch(planes2, back_project, cvGetSize(templ), hist,CV_COMP_INTERSECT ,1);

cvNamedWindow( "back_project" );

cvShowImage( "back_project", back_project );

cvWaitKey(0);

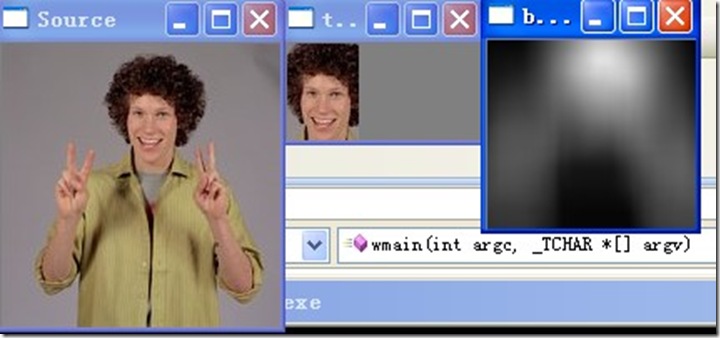

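Test images:

When the template image is smaller than the target, the method acts as a region detector. The test is shown below: the hand region can be found.

When the template is the same size as the target, the test is shown below: in the output image, the brighter areas mark the approximate location of the person's head.

Patch-based back projection is just too slow.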
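The slowness is easy to see from a naive version of the idea. Below is a minimal sketch in plain C that uses a single 8-bit channel, 16 equal-width bins, and histogram intersection as the comparison. The names `window_hist`, `intersect`, and `back_project_patch` are hypothetical, and this is only an illustration of the technique under those assumptions, not OpenCV's actual implementation.

```c
#define BINS 16

/* Normalized histogram (bins sum to 1) of the w x h window whose
   top-left corner is (x0, y0) in an image with `stride` pixels per row. */
void window_hist(const unsigned char *img, int stride,
                 int x0, int y0, int w, int h, float *hist)
{
    for (int b = 0; b < BINS; ++b)
        hist[b] = 0.0f;
    for (int y = 0; y < h; ++y)
        for (int x = 0; x < w; ++x)
            hist[img[(y0 + y) * stride + (x0 + x)] * BINS / 256] += 1.0f;
    for (int b = 0; b < BINS; ++b)
        hist[b] /= (float)(w * h);
}

/* Histogram intersection: the sum of per-bin minima.
   It is 1 for identical normalized histograms and 0 for disjoint ones. */
float intersect(const float *a, const float *b)
{
    float s = 0.0f;
    for (int i = 0; i < BINS; ++i)
        s += a[i] < b[i] ? a[i] : b[i];
    return s;
}

/* Patch-based back projection of a W x H image against a template histogram.
   `out` must hold (W - w + 1) * (H - h + 1) floats, stored row by row. */
void back_project_patch(const unsigned char *img, int W, int H,
                        const float *templ_hist, int w, int h, float *out)
{
    float hist[BINS];
    int ow = W - w + 1, oh = H - h + 1;
    for (int y = 0; y < oh; ++y)
        for (int x = 0; x < ow; ++x) {
            window_hist(img, W, x, y, w, h, hist);
            out[y * ow + x] = intersect(hist, templ_hist);
        }
}
```

Every output value requires a fresh pass over a w x h window, so the total work in this naive form grows as (W - w + 1) x (H - h + 1) x w x h. That is why a large template makes it so slow.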
