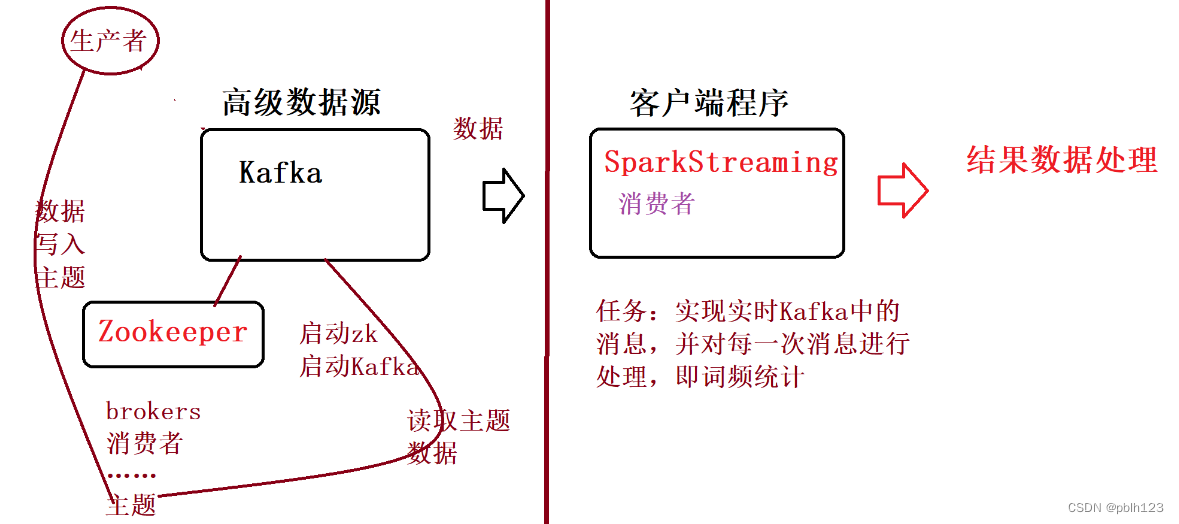

SparkStreaming讀取Kafka數據源:使用Direct方式

一、前提工作

-

安裝了zookeeper

-

安裝了Kafka

-

實驗環境:kafka + zookeeper + spark

-

實驗流程

二、實驗內容

實驗要求:實現的從kafka讀取實現wordcount程序

啟動zookeeper

zk.sh start# zk.sh腳本 參考教程 https://blog.csdn.net/pblh123/article/details/134730738?spm=1001.2014.3001.5502

啟動Kafka

kf.sh start# kf.sh 參照教程 https://blog.csdn.net/pblh123/article/details/134730738?spm=1001.2014.3001.5502

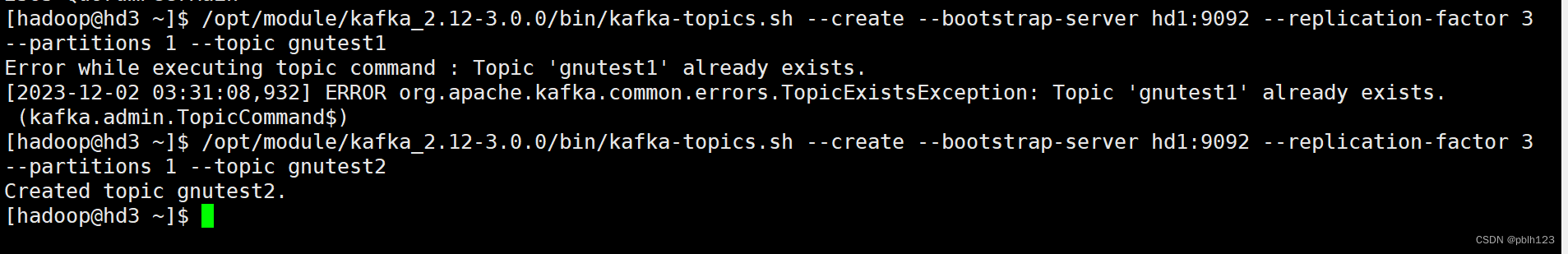

?(測試用,實驗不做)創建Kafka主題,如test,可參考:Kafka的安裝與基本操作

--topic 定義topic名

--replication-factor? 定義副本數

--partitions? 定義分區數

--bootstrap-server??連接的Kafka Broker主機名稱和端口號

--create?創建主題

--describe?查看主題詳細描述

# 創建kafka主題測試

/opt/module/kafka_2.12-3.0.0/bin/kafka-topics.sh --create --bootstrap-server hd1:9092 --replication-factor 3 --partitions 1 --topic gnutest2# 再次查看first主題的詳情

/opt/module/kafka_2.12-3.0.0/bin/kafka-topics.sh --bootstrap-server hd1:9092 --describe --topic gnutest2

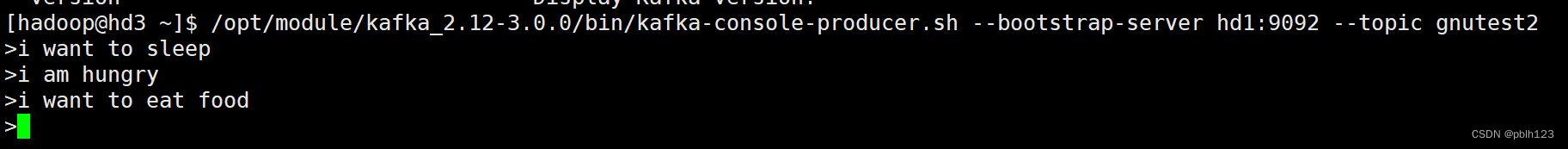

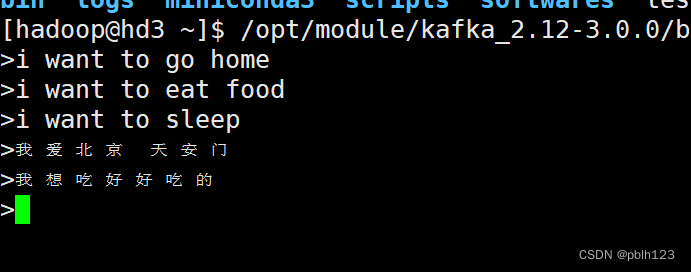

啟動Kafka控制臺生產者,可參考:Kafka的安裝與基本操作

# 創建kafka生產者

/opt/module/kafka_2.12-3.0.0/bin/kafka-console-producer.sh --bootstrap-server hd1:9092 --topic gnutest2

創建maven項目

添加kafka依賴

<!--- 添加streaming依賴 ---><dependency><groupId>org.apache.spark</groupId><artifactId>spark-streaming_2.13</artifactId><version>${spark.version}</version></dependency><!--- 添加streaming kafka依賴 ---><dependency><groupId>org.apache.spark</groupId><artifactId>spark-streaming-kafka-0-10_2.13</artifactId><version>3.4.1</version></dependency>編寫程序,如下所示:

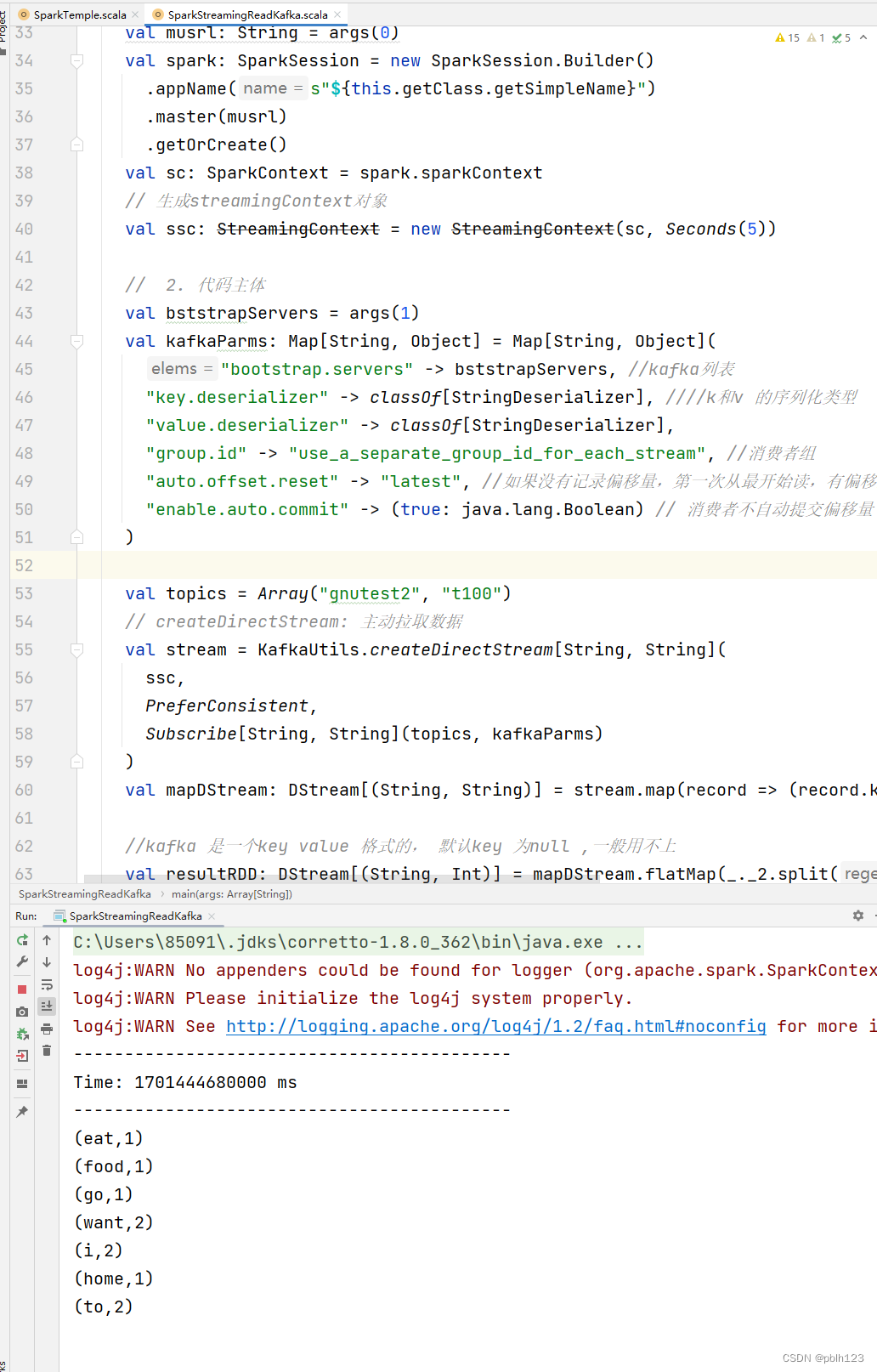

package examsimport org.apache.kafka.common.serialization.StringDeserializer

import org.apache.spark.SparkContext

import org.apache.spark.sql.SparkSession

import org.apache.spark.streaming.dstream.DStream

import org.apache.spark.streaming.kafka010._

import org.apache.spark.streaming.kafka010.LocationStrategies.PreferConsistent

import org.apache.spark.streaming.{Seconds, StreamingContext}

import org.apache.spark.streaming.kafka010.ConsumerStrategies.Subscribeimport java.lang/*** @projectName SparkLearning2023 * @package exams * @className exams.SparkStreamingReadKafka * @description ${description} * @author pblh123* @date 2023/12/1 15:19* @version 1.0**/object SparkStreamingReadKafka {def main(args: Array[String]): Unit = {// 1. 創建spark,sc對象if (args.length != 2) {println("您需要輸入一個參數")System.exit(5)}val musrl: String = args(0)val spark: SparkSession = new SparkSession.Builder().appName(s"${this.getClass.getSimpleName}").master(musrl).getOrCreate()val sc: SparkContext = spark.sparkContext// 生成streamingContext對象val ssc: StreamingContext = new StreamingContext(sc, Seconds(5))// 2. 代碼主體val bststrapServers = args(1)val kafkaParms: Map[String, Object] = Map[String, Object]("bootstrap.servers" -> bststrapServers, //kafka列表"key.deserializer" -> classOf[StringDeserializer], k和v 的序列化類型"value.deserializer" -> classOf[StringDeserializer],"group.id" -> "use_a_separate_group_id_for_each_stream", //消費者組"auto.offset.reset" -> "latest", //如果沒有記錄偏移量,第一次從最開始讀,有偏移量,接著偏移量讀"enable.auto.commit" -> (true: java.lang.Boolean) // 消費者不自動提交偏移量)val topics = Array("gnutest2", "t100")// createDirectStream: 主動拉取數據val stream = KafkaUtils.createDirectStream[String, String](ssc,PreferConsistent,Subscribe[String, String](topics, kafkaParms))val mapDStream: DStream[(String, String)] = stream.map(record => (record.key, record.value))//kafka 是一個key value 格式的, 默認key 為null ,一般用不上val resultRDD: DStream[(String, Int)] = mapDStream.flatMap(_._2.split(" ")).map((_, 1)).reduceByKey(_ + _)// 打印resultRDD.print()// 3. 關閉sc,spark對象ssc.start()ssc.awaitTermination()ssc.stop()sc.stop()spark.stop()}

}

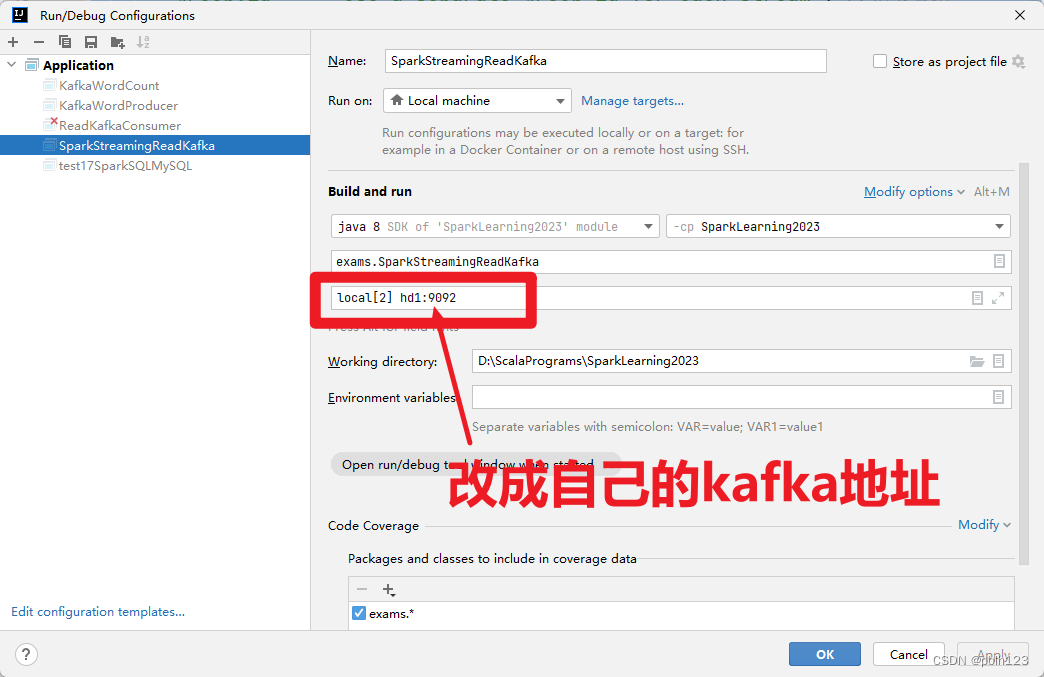

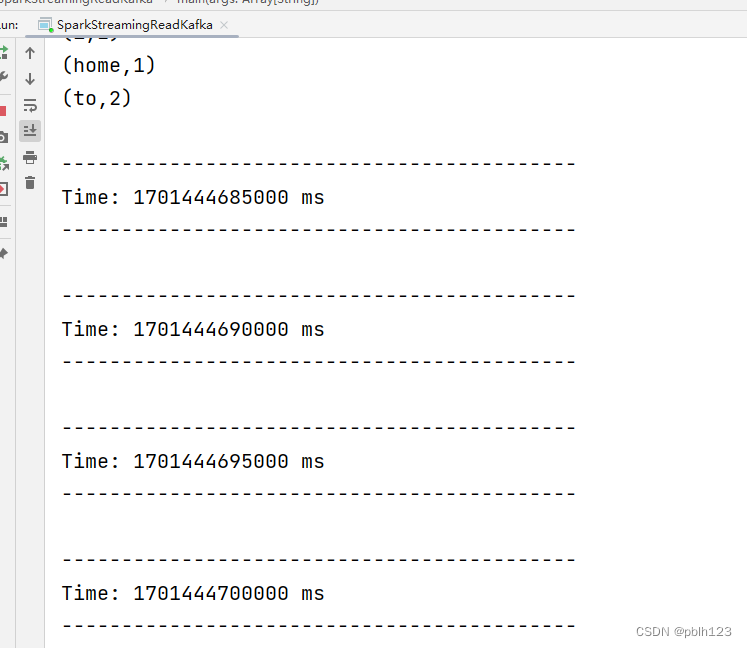

配置輸入參數

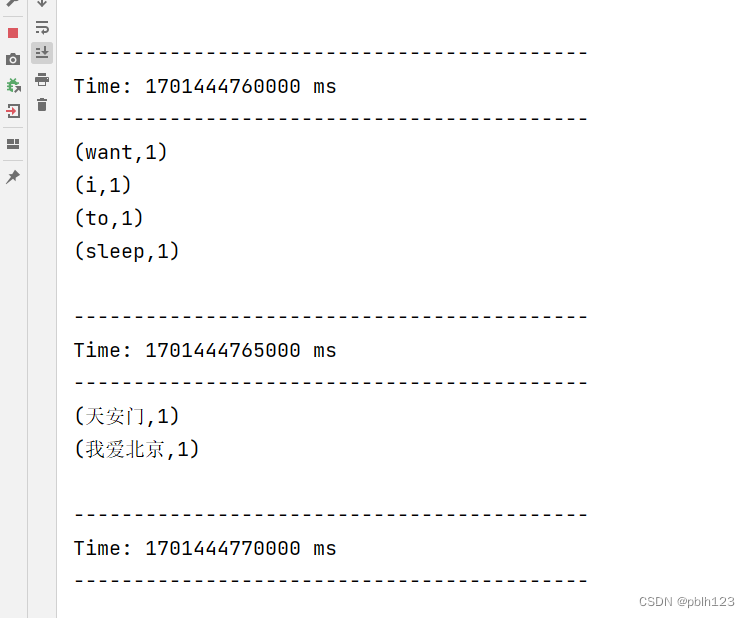

生產者追加數據

)

)

)

)