kube-prometheus

Installing from GitHub

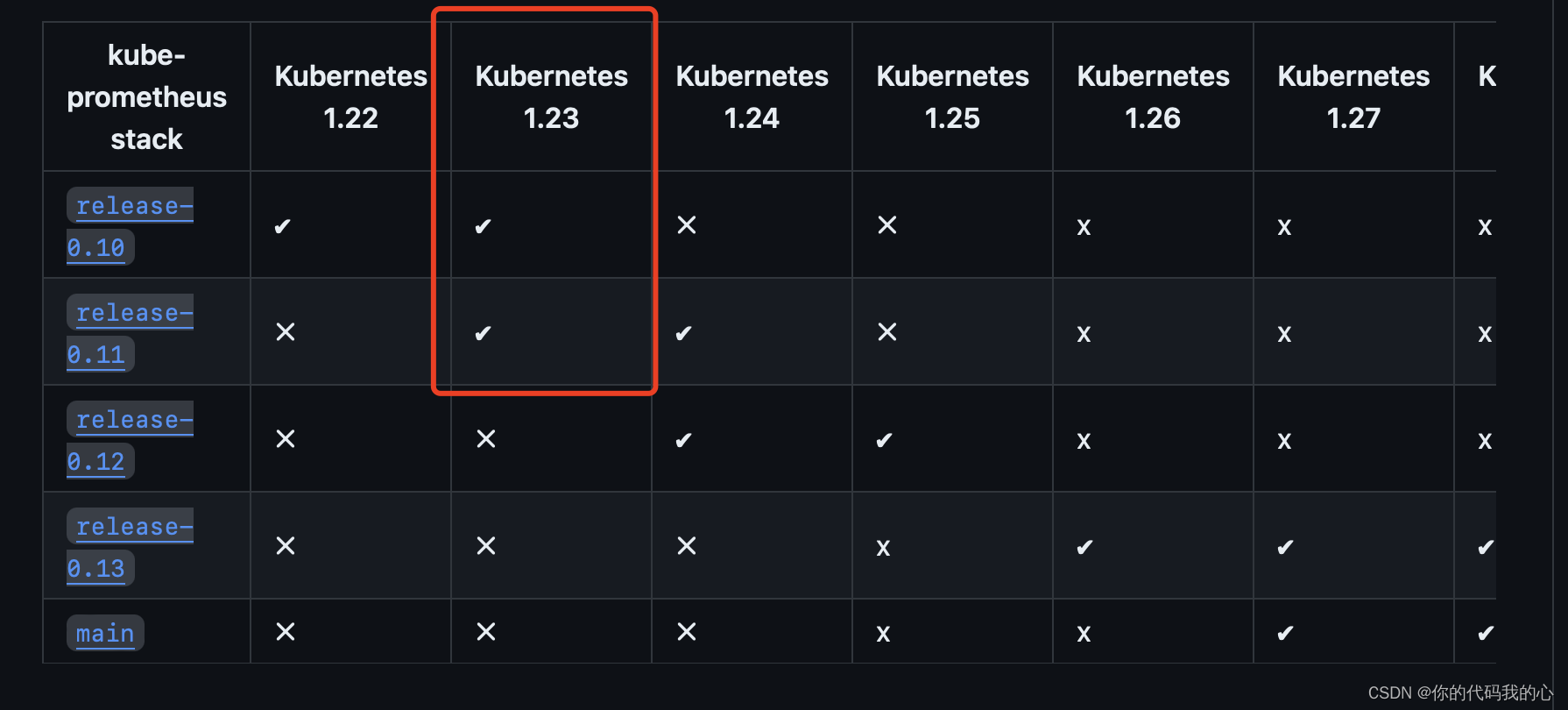

Pick the release that matches your Kubernetes version (the repository README has a compatibility matrix).

Here I chose https://github.com/prometheus-operator/kube-prometheus/tree/release-0.11 (the cluster below runs Kubernetes v1.23.6).
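If you prefer the command line to the GitHub web page, a shallow clone of the chosen branch gets the same manifests. A minimal sketch, assuming `git` is installed and github.com is reachable; the function name `fetch_kube_prometheus` and the default target directory are my own choices:

```shell
# Hypothetical helper: shallow-clone one release branch of kube-prometheus.
# Defaults: branch release-0.11 (chosen above), target directory ./kube-prometheus.
fetch_kube_prometheus() {
  local branch="${1:-release-0.11}"
  local dest="${2:-kube-prometheus}"
  # --depth 1 skips the history; only the files of this branch are needed.
  git clone --depth 1 --branch "$branch" \
    https://github.com/prometheus-operator/kube-prometheus.git "$dest"
}
```

Usage: `fetch_kube_prometheus && cd kube-prometheus`; the manifests are then under `./manifests`.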

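After downloading, switch the image registries to mirrors that are reachable from mainland China:

Change `image: quay.io` to `quay.mirrors.ustc.edu.cn`.

Change `image: k8s.gcr.io` to `lank8s.cn`.

Create `prometheus-ingress.yaml`: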

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  namespace: monitoring
  name: prometheus-ingress
spec:
  ingressClassName: nginx
  rules:
  - host: grafana.wolfcode.cn
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: grafana
            port:
              number: 3000
  - host: prometheus.wolfcode.cn
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: prometheus-k8s
            port:
              number: 9090
  - host: alertmanager.wolfcode.cn
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: alertmanager-main
            port:
              number: 9093
```
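The two registry substitutions listed earlier (`quay.io` → `quay.mirrors.ustc.edu.cn`, `k8s.gcr.io` → `lank8s.cn`) can be applied to all manifests at once instead of by hand. A sketch, assuming it runs from the kube-prometheus checkout and that the mirror hosts still serve these images; `use_mirror_registries` is a hypothetical name:

```shell
# Hypothetical helper: rewrite image registries in every *.yaml under a directory.
# Only occurrences of "image: quay.io/" and "image: k8s.gcr.io/" are changed.
use_mirror_registries() {
  local dir="${1:-./manifests}"
  find "$dir" -type f -name '*.yaml' | while IFS= read -r f; do
    # -i.bak is accepted by both GNU sed and macOS/BSD sed; the backup is deleted on success.
    sed -i.bak \
      -e 's#image: quay\.io/#image: quay.mirrors.ustc.edu.cn/#' \
      -e 's#image: k8s\.gcr\.io/#image: lank8s.cn/#' \
      "$f" && rm -f "$f.bak"
  done
}
```

Registry references that are not on an `image:` line (for example an image passed as a container argument) are left untouched, so it is worth running `grep -rnE 'quay\.io|k8s\.gcr\.io' manifests/` afterwards.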

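Editing the hosts file

Adjust this to your own environment: the three hostnames must point at a node IP where ingress-nginx can be reached (192.168.10.102 in my setup).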
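Instead of editing with vim as shown below, the three entries can also be appended by a small function that skips hostnames already present, so running it twice does no harm. A sketch; `add_hosts_entries` is a hypothetical name and 192.168.10.102 is only the IP from my setup:

```shell
# Hypothetical helper: append "IP HOST" lines to a hosts file, skipping hosts already listed.
add_hosts_entries() {
  local file="$1" ip="$2" host
  shift 2
  # If the file does not end with a newline, add one so the first new entry starts on its own line.
  if [ -s "$file" ] && [ -n "$(tail -c 1 "$file")" ]; then
    printf '\n' >> "$file"
  fi
  for host in "$@"; do
    # awk exits 0 if a non-comment line already lists exactly this hostname.
    if awk -v h="$host" '$1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == h) found = 1 }
        END { exit !found }' "$file"; then
      continue
    fi
    printf '%s %s\n' "$ip" "$host" >> "$file"
  done
}
```

Writing `/etc/hosts` needs root, and `sudo` cannot call a shell function directly; one way is `sudo bash -c "$(declare -f add_hosts_entries); add_hosts_entries /etc/hosts 192.168.10.102 grafana.wolfcode.cn prometheus.wolfcode.cn alertmanager.wolfcode.cn"`.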

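On macOS:

```shell
sudo vim /etc/hosts
```

Add these lines:

```text
192.168.10.102 grafana.wolfcode.cn
192.168.10.102 prometheus.wolfcode.cn
192.168.10.102 alertmanager.wolfcode.cn
```

Create `setup` first: some of its resources are so large that `kubectl apply` errors out on them, so use `kubectl create`: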

```shell
kubectl create -f ./manifests/setup/

# Then create everything else
kubectl apply -f ./manifests/
```
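Why `apply` fails here: client-side `kubectl apply` stores the whole object in the `kubectl.kubernetes.io/last-applied-configuration` annotation, and annotations are limited to 262144 bytes in total, which the larger CRDs in `setup/` exceed. `kubectl create` does not write that annotation. Server-side apply (`kubectl apply --server-side`) does not write it either and can be re-run safely. The sketch below uses server-side apply instead of the `kubectl create` above for the first step and waits until the CRDs are registered before applying the rest. The function name and the 120s timeout are my own assumptions:

```shell
# Hypothetical helper: install kube-prometheus from a manifests directory.
install_kube_prometheus() {
  local dir="${1:-./manifests}"
  # 1. Namespace and CRDs. Server-side apply does not write the oversized
  #    last-applied-configuration annotation, so large CRDs go through.
  kubectl apply --server-side -f "$dir/setup/" || return 1
  # 2. Wait until the API server reports every CRD as Established.
  kubectl wait --for=condition=Established --all customresourcedefinitions --timeout=120s || return 1
  # 3. Everything else (top-level files only; -f on a directory is not recursive).
  kubectl apply -f "$dir/"
}
```

Usage: `install_kube_prometheus ./manifests`, then check with `kubectl get all -n monitoring`.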

```shell
# Check the result
kubectl get all -n monitoring
```

Result. Everything is up except `prometheus-k8s-1`, which is stuck in `ImagePullBackOff` (so `statefulset.apps/prometheus-k8s` shows 1/2):

```text
NAME READY STATUS RESTARTS AGE
pod/alertmanager-main-0 2/2 Running 0 7m53s
pod/alertmanager-main-1 2/2 Running 0 7m53s
pod/alertmanager-main-2 2/2 Running 0 7m53s
pod/blackbox-exporter-746c64fd88-kdvhp 3/3 Running 0 47m
pod/grafana-5fc7f9f55d-fnhlg 1/1 Running 0 47m
pod/kube-state-metrics-84f49c948f-k8g4g 3/3 Running 0 29m
pod/node-exporter-284jk 2/2 Running 0 47m
pod/node-exporter-p6prn 2/2 Running 0 47m
pod/node-exporter-v7cmd 2/2 Running 0 47m
pod/node-exporter-z6j9g 2/2 Running 0 47m
pod/prometheus-adapter-6bbcf4b845-22gbn 1/1 Running 0 9m49s
pod/prometheus-adapter-6bbcf4b845-n5bnq 1/1 Running 0 9m49s
pod/prometheus-k8s-0 2/2 Running 0 6m28s
pod/prometheus-k8s-1 1/2 ImagePullBackOff 0 5m20s
pod/prometheus-operator-f59c8b954-h6q4g 2/2 Running 0 8m11s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
service/alertmanager-main ClusterIP 10.99.85.3 <none> 9093/TCP,8080/TCP 47m
service/alertmanager-operated ClusterIP None <none> 9093/TCP,9094/TCP,9094/UDP 7m53s
service/blackbox-exporter ClusterIP 10.107.220.197 <none> 9115/TCP,19115/TCP 47m
service/grafana ClusterIP 10.100.1.202 <none> 3000/TCP 47m
service/kube-state-metrics ClusterIP None <none> 8443/TCP,9443/TCP 47m
service/node-exporter ClusterIP None <none> 9100/TCP 47m
service/prometheus-adapter ClusterIP 10.100.12.96 <none> 443/TCP 47m
service/prometheus-k8s ClusterIP 10.100.245.37 <none> 9090/TCP,8080/TCP 47m
service/prometheus-operated ClusterIP None <none> 9090/TCP 7m51s
service/prometheus-operator ClusterIP None <none> 8443/TCP 46m

NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE
daemonset.apps/node-exporter 4 4 4 4 4 kubernetes.io/os=linux 47m

NAME READY UP-TO-DATE AVAILABLE AGE
deployment.apps/blackbox-exporter 1/1 1 1 47m
deployment.apps/grafana 1/1 1 1 47m
deployment.apps/kube-state-metrics 1/1 1 1 47m
deployment.apps/prometheus-adapter 2/2 2 2 9m49s
deployment.apps/prometheus-operator 1/1 1 1 47m

NAME DESIRED CURRENT READY AGE
replicaset.apps/blackbox-exporter-746c64fd88 1 1 1 47m
replicaset.apps/grafana-5fc7f9f55d 1 1 1 47m
replicaset.apps/kube-state-metrics-6c8846558c 0 0 0 47m
replicaset.apps/kube-state-metrics-84f49c948f 1 1 1 29m
replicaset.apps/prometheus-adapter-6bbcf4b845 2 2 2 9m49s
replicaset.apps/prometheus-operator-f59c8b954 1 1 1 47m

NAME READY AGE
statefulset.apps/alertmanager-main 3/3 7m53s
statefulset.apps/prometheus-k8s 1/2 7m51s
```
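In a long `get all` listing it is easy to miss the one pod that is not ready (here `prometheus-k8s-1`, 1/2 in `ImagePullBackOff`). A small filter over `kubectl get pods` output prints only pods whose STATUS is not `Running` or whose READY count is incomplete. A sketch; `list_unready_pods` is a hypothetical name, and pods of finished Jobs (`Completed`) would also be listed:

```shell
# Hypothetical filter: read `kubectl get pods` output on stdin and print NAME READY STATUS
# for every pod that is not Running or has fewer ready containers than total.
list_unready_pods() {
  awk 'NR > 1 {
    split($2, ready, "/")   # "1/2" -> ready[1] = 1, ready[2] = 2
    if ($3 != "Running" || ready[1] != ready[2]) print $1, $2, $3
  }'
}
```

Usage: `kubectl get pods -n monitoring | list_unready_pods`. The Events section of `kubectl describe pod prometheus-k8s-1 -n monitoring` then shows which image could not be pulled.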

The actual steps:

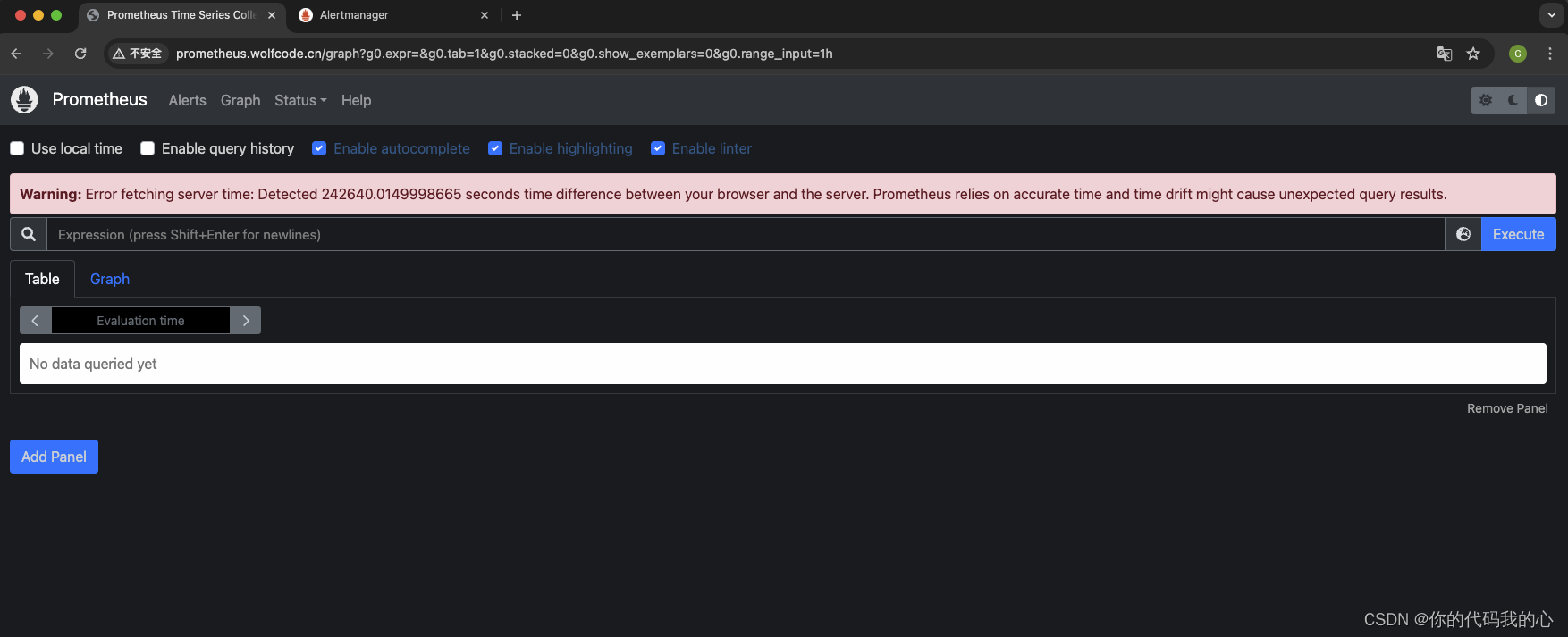

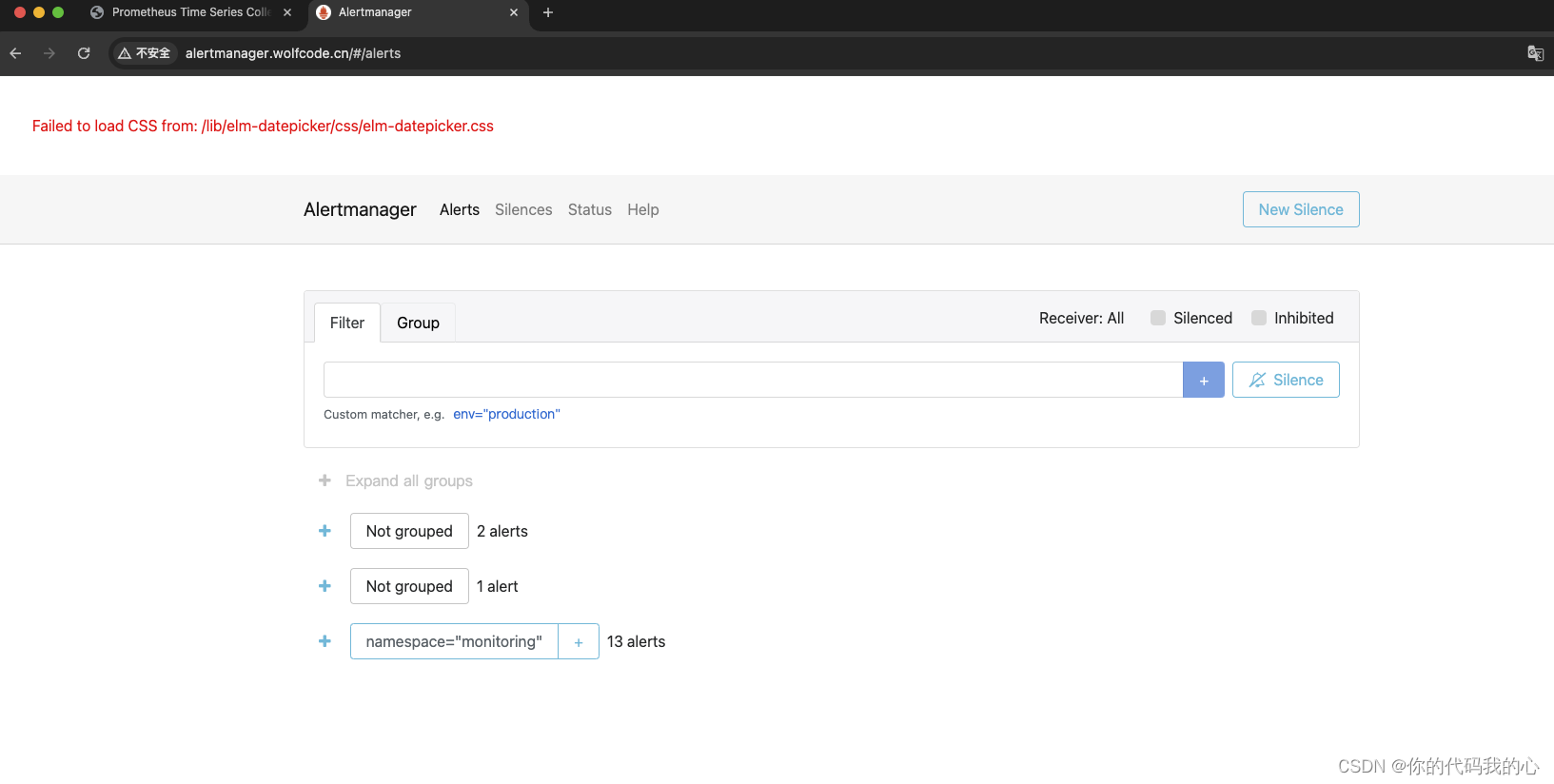

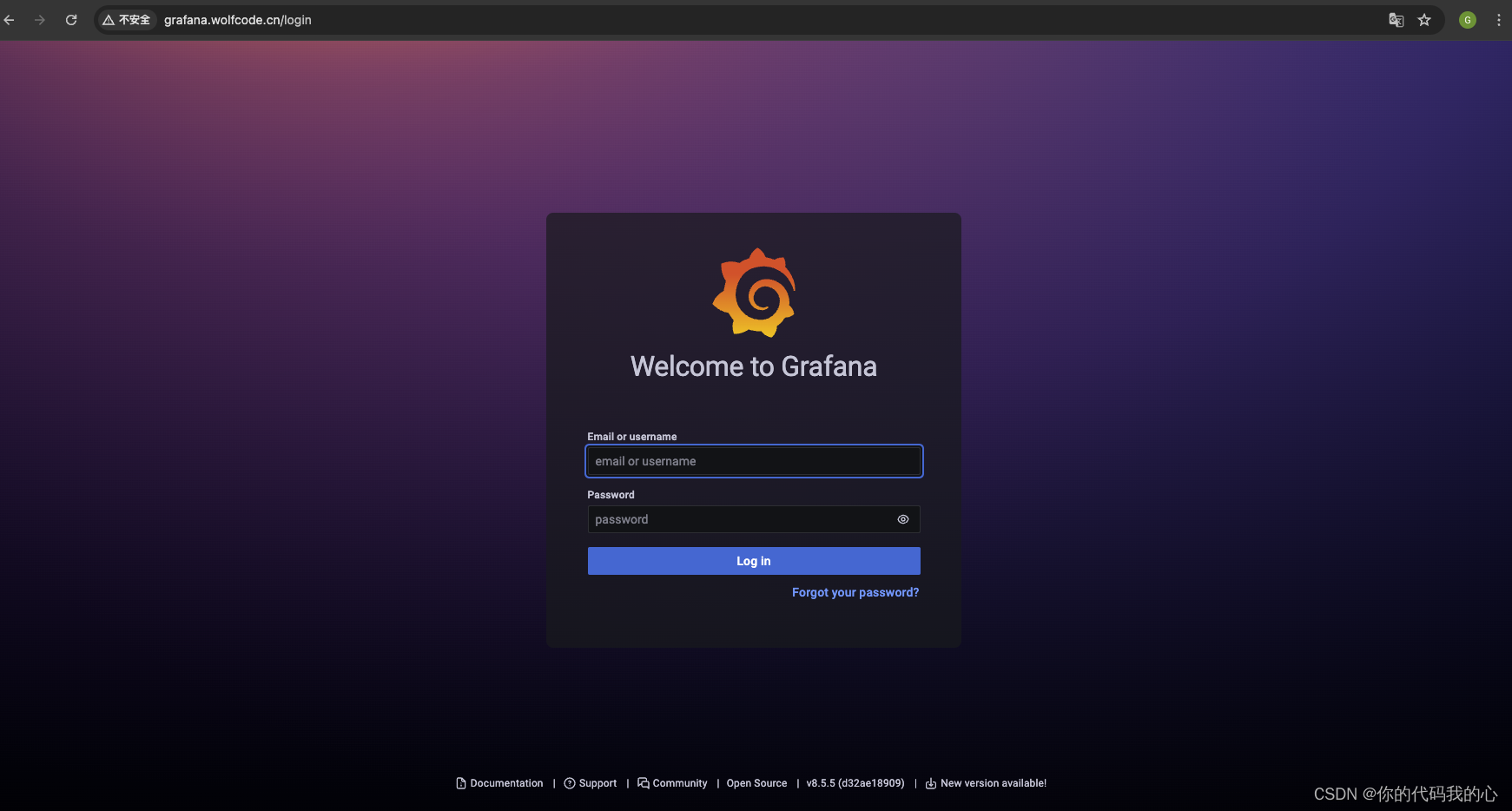

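Next, open the Ingress hosts defined above.

They could not be reached: because I had reinstalled the cluster, ingress-nginx was no longer deployed, so nothing was serving the Ingress.

Ingress installation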

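Even after all that, the sites still would not load in the browser! And yet, from a terminal,

```shell
curl -H "host: alertmanager.wolfcode.cn" 192.168.10.102
```

printed the page content just fine. After asking an AI, I switched off my proxy, and the problem that had bugged me for days was finally solved.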
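A likely explanation, not verified beyond what happened above: the browser sent the `*.wolfcode.cn` requests through the local proxy, which presumably resolved them without `/etc/hosts`, while `curl` in the terminal connected directly. To test all three hosts while explicitly bypassing any proxy set in the environment, here is a sketch; `check_ingress_hosts` is a hypothetical name and 192.168.10.102 is this post's example node:

```shell
# Hypothetical helper: request each Ingress host through ingress-nginx at the given node IP,
# ignoring proxy settings, and print "<host> <HTTP status>".
check_ingress_hosts() {
  local ip="$1" host code
  shift
  for host in "$@"; do
    # --noproxy '*' makes curl ignore http_proxy / https_proxy / ALL_PROXY for this request;
    # -w '%{http_code}' prints only the status code (000 when no response was received).
    code=$(curl --noproxy '*' -s -o /dev/null -w '%{http_code}' \
      -H "Host: ${host}" "http://${ip}/" || true)
    printf '%s %s\n' "$host" "$code"
  done
}
```

Usage: `check_ingress_hosts 192.168.10.102 grafana.wolfcode.cn prometheus.wolfcode.cn alertmanager.wolfcode.cn`. A 2xx or 3xx code means ingress-nginx routed the request (Grafana, for example, usually redirects to its login page); `000` means the connection failed. Rather than switching the proxy off completely, adding these hostnames to the proxy's bypass list should also work.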

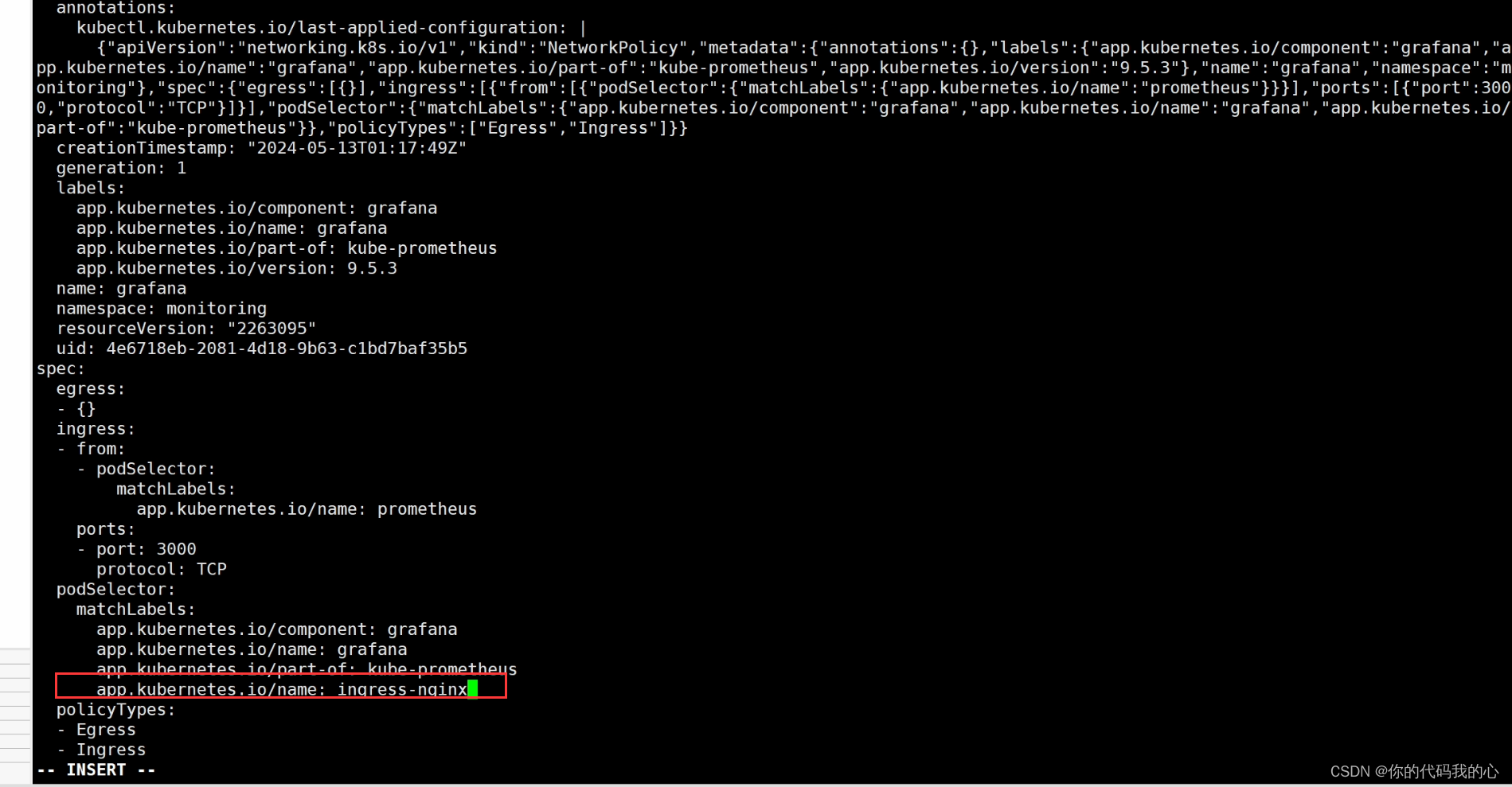

Only Grafana was still unreachable. The fix was in its NetworkPolicy:

```shell
kubectl edit networkPolicy -n monitoring grafana
```
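The post does not show what was changed in the editor. One non-interactive way to get a similar effect is to append an ingress rule that also admits traffic from the ingress-nginx namespace on Grafana's port 3000. This sketch makes three assumptions. First, the controller runs in a namespace named `ingress-nginx`. Second, that namespace carries the automatic `kubernetes.io/metadata.name` label (Kubernetes has set it since v1.21, and this cluster runs v1.23.6). Third, the existing policy already has a `spec.ingress` list, because a JSON-patch `add` to `/spec/ingress/-` fails otherwise. `allow_ingress_nginx_to_grafana` is a hypothetical name:

```shell
# Hypothetical helper: let pods in the ingress controller's namespace reach Grafana on 3000/TCP.
allow_ingress_nginx_to_grafana() {
  local ns="${1:-ingress-nginx}"
  local patch
  # JSON patch: append one rule to spec.ingress of the "grafana" NetworkPolicy.
  patch=$(printf '[{"op":"add","path":"/spec/ingress/-","value":{"from":[{"namespaceSelector":{"matchLabels":{"kubernetes.io/metadata.name":"%s"}}}],"ports":[{"port":3000,"protocol":"TCP"}]}}]' "$ns")
  kubectl -n monitoring patch networkpolicy grafana --type=json -p "$patch"
}
```

Check the result with `kubectl -n monitoring get networkpolicy grafana -o yaml`.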

Then the VMs ran out of memory. After giving the VM more memory and rebooting, the master started reporting errors.

Reinstalling

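First check whether `kubectl` commands still work. If they do not, clean up the node:

```shell
cd ~                       # go to the home directory
ll -a                      # check whether a .kube directory exists
rm -rf .kube/              # delete it
systemctl restart docker   # restart docker
systemctl restart kubelet  # restart kubelet
kubeadm reset              # reset the node
rm -rf /etc/cni/           # delete the CNI configuration
```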
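The cleanup above can be collected into one function. This sketch mirrors those steps, except that it calls `kubeadm reset -f` to skip the confirmation prompt. `run`, `reset_kubeadm_node` and the `DRY_RUN` switch are my own additions, so the command list can be reviewed before anything is deleted:

```shell
# Hypothetical helpers. With DRY_RUN=1, run() only prints each command instead of executing it.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    printf '+ %s\n' "$*"
  else
    "$@"
  fi
}

# Same cleanup as the manual steps above; run as root on the broken node.
reset_kubeadm_node() {
  run rm -rf "$HOME/.kube"     # remove the old kubeconfig
  run systemctl restart docker
  run systemctl restart kubelet
  run kubeadm reset -f         # -f: do not ask for confirmation
  run rm -rf /etc/cni/         # remove the old CNI configuration
}
```

`DRY_RUN=1 reset_kubeadm_node` only prints the commands; run it as root without `DRY_RUN` to execute them.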

```shell
# Step 2: install kubeadm, kubelet and kubectl
yum install -y kubelet-1.23.6 kubeadm-1.23.6 kubectl-1.23.6

# Start kubelet on boot
systemctl enable kubelet

# ================ Run on k8s-master ================

# Step 1: initialize the master node
kubeadm init \
  --apiserver-advertise-address=192.168.10.100 \
  --image-repository registry.aliyuncs.com/google_containers \
  --kubernetes-version v1.23.6 \
  --service-cidr=10.96.0.0/12 \
  --pod-network-cidr=10.244.0.0/16

# Step 2: after a successful init, copy the kubeconfig as follows
mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config

# Step 3: check that initialization succeeded
kubectl get nodes

# For everything else, see https://blog.csdn.net/weixin_41104307/article/details/137704714?spm=1001.2014.3001.5501

# ================ Run on both k8s-node1 and k8s-node2 ================

# Copy the join command below from the master's console output after a successful init
# Step 1: join the node to the master
kubeadm join 192.168.10.100:6443 --token ce9w0v.48oku10ufudimvy3 \
  --discovery-token-ca-cert-hash sha256:9ef74dbe219fb48ad72d776be94149b77616b8d9e52911c94fe689b01e47621c
```

Handling the other nodes (they still hold state from the previous cluster, so clean them up before joining again):

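```shell
kubeadm reset
# Leftovers from the previous join; kubeadm join's preflight checks fail if they exist
sudo rm /etc/kubernetes/kubelet.conf
sudo rm /etc/kubernetes/pki/ca.crt
# Make sure nothing is still listening on the kubelet port 10250
sudo netstat -tuln | grep 10250
reboot
kubeadm join 192.168.10.100:6443 --token 3prl7s.pplcxwsvql7i1s1d \
  --discovery-token-ca-cert-hash sha256:9ef74dbe219fb48ad72d776be94149b77616b8d9e52911c94fe689b01e47621c
```

k8s certificate expiration: reference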

Token expiry: solutions (including how to generate a new token)
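On the master, `kubeadm` can print a complete join command with a freshly created token, which avoids copying the token and CA hash by hand. A sketch; the wrapper name `print_join_command` is hypothetical, while the two `kubeadm` subcommands are standard:

```shell
# Hypothetical wrapper around two standard kubeadm subcommands; run on k8s-master.
print_join_command() {
  # List existing bootstrap tokens with their TTL/expiry, to confirm the old one has expired.
  kubeadm token list
  # Create a new token and print the full "kubeadm join ..." command for it,
  # including --discovery-token-ca-cert-hash.
  kubeadm token create --print-join-command
}
```

Run it on k8s-master and paste the printed `kubeadm join ...` line on each node. New tokens expire after 24 hours by default.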

The main problem was this image:

Handling image-pull failures in k8s: ImagePullBackOff, ErrImageNeverPull
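To see which image a pod is failing on, the container statuses can be printed with a JSONPath template. A sketch; `show_pod_images` is a hypothetical name and the namespace defaults to `monitoring`:

```shell
# Hypothetical helper: print name, image and waiting reason for every container of a pod.
show_pod_images() {
  local pod="$1" ns="${2:-monitoring}"
  kubectl -n "$ns" get pod "$pod" -o \
    jsonpath='{range .status.containerStatuses[*]}{.name}{"\t"}{.image}{"\t"}{.state.waiting.reason}{"\n"}{end}'
}
```

`show_pod_images prometheus-k8s-1` prints one line per container: name, image and waiting reason (empty for a running container). The image shown can then be checked against the mirror substitutions made earlier, or pulled manually on the node.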
