- 🍨 This post is a learning log from the 🔗365天深度學習訓練營 (365-Day Deep Learning Training Camp)

- 🍖 Original author: K同學啊

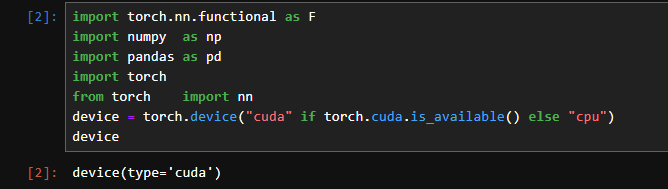

1. Preparation

1.1 Importing the data

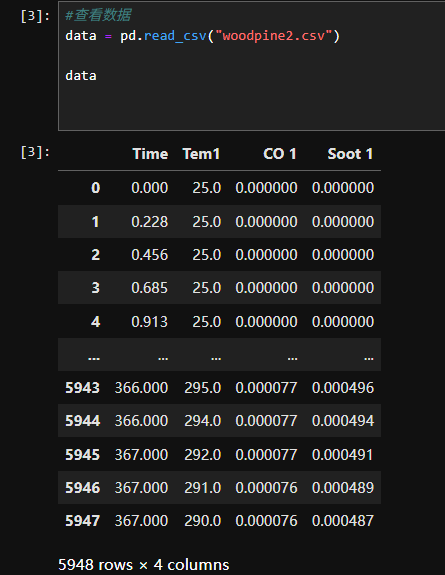

1.2 Visualizing the dataset

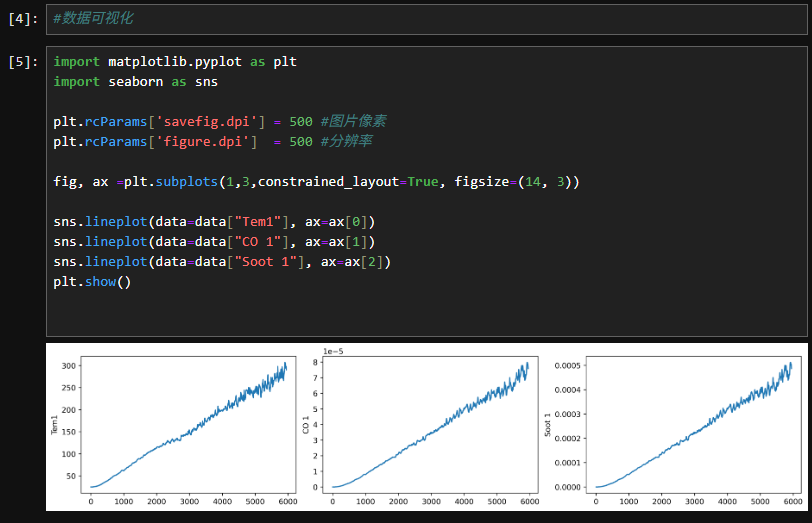

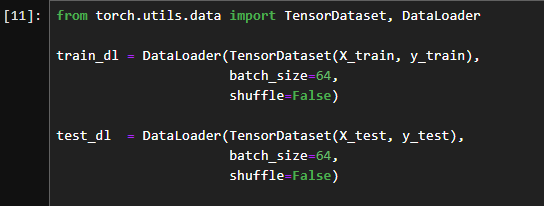

2. Building the dataset

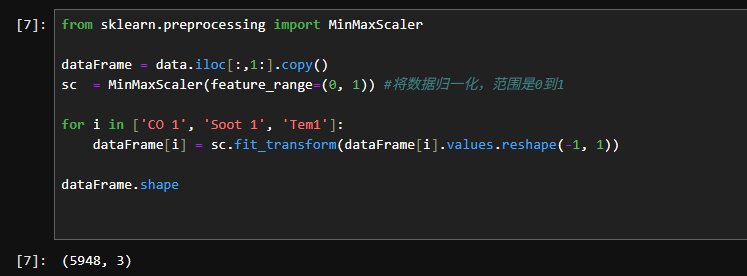

2.1 Preprocessing the dataset

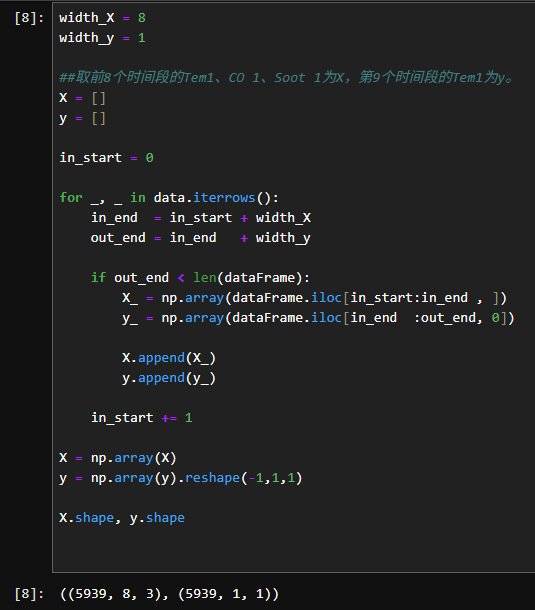

2.2 Setting X and Y

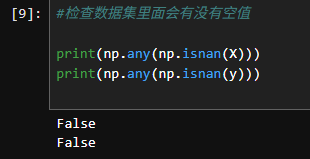
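The code for this step is only available as an image. As a rough illustration of what setting X and Y usually means for an LSTM forecaster, the sketch below slices a series into fixed-width input windows and the values that immediately follow each window. The function name `make_windows` and the parameters `width_x` and `width_y` are hypothetical and are not taken from the original code.

```python
def make_windows(series, width_x, width_y=1):
    """Split a sequence into (input window, target window) pairs.

    Hypothetical helper: each X entry holds `width_x` consecutive values,
    and the matching y entry holds the `width_y` values that follow it.
    """
    X, y = [], []
    last_start = len(series) - width_x - width_y
    for start in range(last_start + 1):
        X.append(series[start:start + width_x])
        y.append(series[start + width_x:start + width_x + width_y])
    return X, y


# Toy temperature readings (illustrative values, not from the dataset)
temps = [20.0, 21.0, 23.0, 26.0, 30.0, 35.0]
X, y = make_windows(temps, width_x=3)
print(X[0], y[0])  # [20.0, 21.0, 23.0] [26.0]
print(len(X))      # 3
```

In a real pipeline the resulting lists would be converted to tensors of shape (samples, window length, features) before being wrapped in a `DataLoader`.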

2.3 Checking the dataset for null values

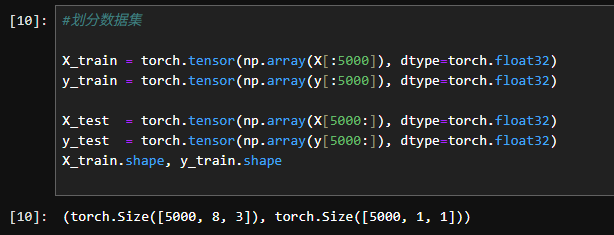

2.4 Splitting the dataset

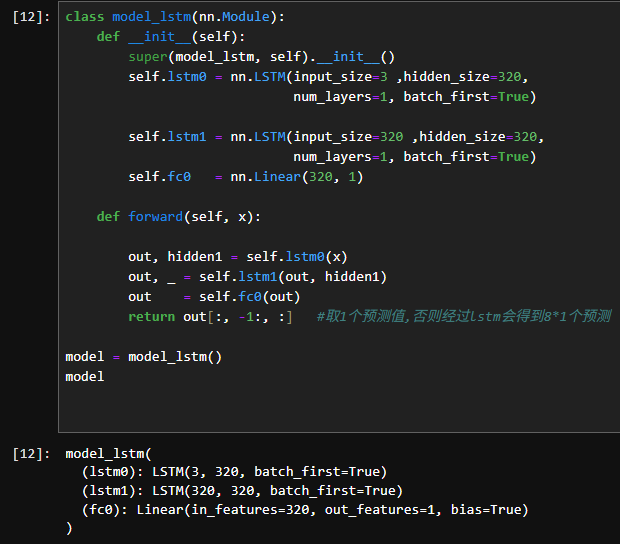

3. Building the model

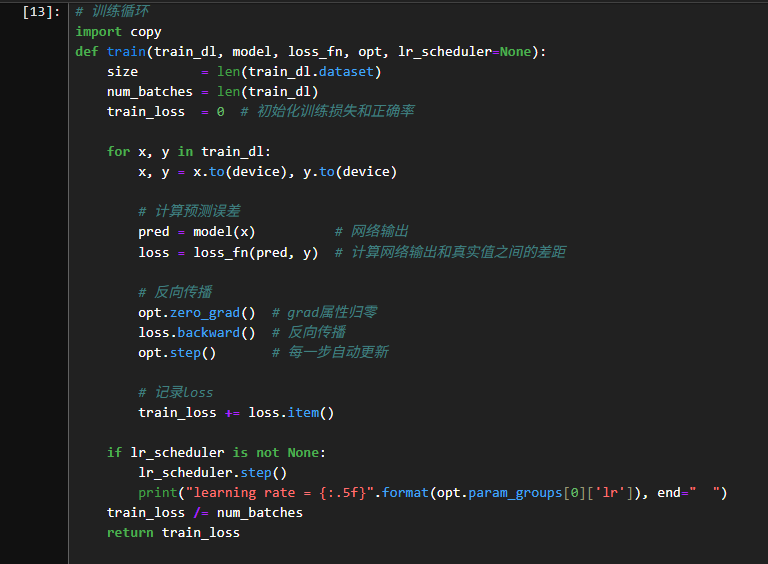

3.1 Defining the training function

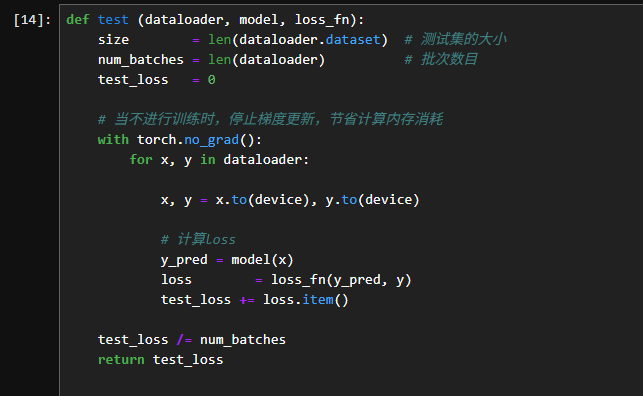

3.2 Defining the test function

4. Training the model

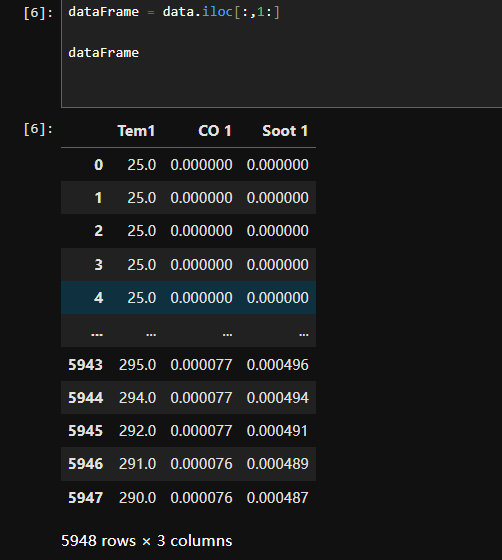

    # Train the model.
    # model_lstm, device, train_dl, test_dl, train() and test() come from the earlier sections.
    model = model_lstm()
    model = model.to(device)
    loss_fn = nn.MSELoss()  # loss function
    learn_rate = 1e-1       # initial learning rate
    opt = torch.optim.SGD(model.parameters(), lr=learn_rate, weight_decay=1e-4)
    epochs = 50
    train_loss = []
    test_loss = []
    # Cosine annealing of the learning rate over `epochs` epochs
    lr_scheduler = torch.optim.lr_scheduler.CosineAnnealingLR(opt, epochs, last_epoch=-1)

    for epoch in range(epochs):
        model.train()
        epoch_train_loss = train(train_dl, model, loss_fn, opt, lr_scheduler)

        model.eval()
        epoch_test_loss = test(test_dl, model, loss_fn)

        train_loss.append(epoch_train_loss)
        test_loss.append(epoch_test_loss)

        template = ('Epoch:{:2d}, Train_loss:{:.5f}, Test_loss:{:.5f}')
        print(template.format(epoch+1, epoch_train_loss, epoch_test_loss))

    print("="*20, 'Done', "="*20)

Output

learning rate = 0.09990 Epoch: 1, Train_loss:0.00120, Test_loss:0.01197

learning rate = 0.09961 Epoch: 2, Train_loss:0.01372, Test_loss:0.01150

learning rate = 0.09911 Epoch: 3, Train_loss:0.01330, Test_loss:0.01102

learning rate = 0.09843 Epoch: 4, Train_loss:0.01282, Test_loss:0.01050

learning rate = 0.09755 Epoch: 5, Train_loss:0.01228, Test_loss:0.00993

learning rate = 0.09649 Epoch: 6, Train_loss:0.01166, Test_loss:0.00931

learning rate = 0.09524 Epoch: 7, Train_loss:0.01094, Test_loss:0.00863

learning rate = 0.09382 Epoch: 8, Train_loss:0.01013, Test_loss:0.00790

learning rate = 0.09222 Epoch: 9, Train_loss:0.00922, Test_loss:0.00712

learning rate = 0.09045 Epoch:10, Train_loss:0.00823, Test_loss:0.00631

learning rate = 0.08853 Epoch:11, Train_loss:0.00718, Test_loss:0.00550

learning rate = 0.08645 Epoch:12, Train_loss:0.00611, Test_loss:0.00471

learning rate = 0.08423 Epoch:13, Train_loss:0.00507, Test_loss:0.00397

learning rate = 0.08187 Epoch:14, Train_loss:0.00409, Test_loss:0.00330

learning rate = 0.07939 Epoch:15, Train_loss:0.00322, Test_loss:0.00272

learning rate = 0.07679 Epoch:16, Train_loss:0.00247, Test_loss:0.00223

learning rate = 0.07409 Epoch:17, Train_loss:0.00185, Test_loss:0.00183

learning rate = 0.07129 Epoch:18, Train_loss:0.00137, Test_loss:0.00152

learning rate = 0.06841 Epoch:19, Train_loss:0.00100, Test_loss:0.00128

learning rate = 0.06545 Epoch:20, Train_loss:0.00073, Test_loss:0.00110

learning rate = 0.06243 Epoch:21, Train_loss:0.00054, Test_loss:0.00097

learning rate = 0.05937 Epoch:22, Train_loss:0.00040, Test_loss:0.00087

learning rate = 0.05627 Epoch:23, Train_loss:0.00031, Test_loss:0.00079

learning rate = 0.05314 Epoch:24, Train_loss:0.00024, Test_loss:0.00073

learning rate = 0.05000 Epoch:25, Train_loss:0.00020, Test_loss:0.00069

learning rate = 0.04686 Epoch:26, Train_loss:0.00017, Test_loss:0.00066

learning rate = 0.04373 Epoch:27, Train_loss:0.00015, Test_loss:0.00063

learning rate = 0.04063 Epoch:28, Train_loss:0.00013, Test_loss:0.00061

learning rate = 0.03757 Epoch:29, Train_loss:0.00012, Test_loss:0.00059

learning rate = 0.03455 Epoch:30, Train_loss:0.00012, Test_loss:0.00058

learning rate = 0.03159 Epoch:31, Train_loss:0.00011, Test_loss:0.00057

learning rate = 0.02871 Epoch:32, Train_loss:0.00011, Test_loss:0.00056

learning rate = 0.02591 Epoch:33, Train_loss:0.00011, Test_loss:0.00055

learning rate = 0.02321 Epoch:34, Train_loss:0.00011, Test_loss:0.00055

learning rate = 0.02061 Epoch:35, Train_loss:0.00011, Test_loss:0.00055

learning rate = 0.01813 Epoch:36, Train_loss:0.00011, Test_loss:0.00055

learning rate = 0.01577 Epoch:37, Train_loss:0.00011, Test_loss:0.00055

learning rate = 0.01355 Epoch:38, Train_loss:0.00012, Test_loss:0.00056

learning rate = 0.01147 Epoch:39, Train_loss:0.00012, Test_loss:0.00056

learning rate = 0.00955 Epoch:40, Train_loss:0.00012, Test_loss:0.00057

learning rate = 0.00778 Epoch:41, Train_loss:0.00013, Test_loss:0.00058

learning rate = 0.00618 Epoch:42, Train_loss:0.00013, Test_loss:0.00059

learning rate = 0.00476 Epoch:43, Train_loss:0.00013, Test_loss:0.00060

learning rate = 0.00351 Epoch:44, Train_loss:0.00014, Test_loss:0.00060

learning rate = 0.00245 Epoch:45, Train_loss:0.00014, Test_loss:0.00061

learning rate = 0.00157 Epoch:46, Train_loss:0.00014, Test_loss:0.00061

learning rate = 0.00089 Epoch:47, Train_loss:0.00014, Test_loss:0.00061

learning rate = 0.00039 Epoch:48, Train_loss:0.00014, Test_loss:0.00061

learning rate = 0.00010 Epoch:49, Train_loss:0.00014, Test_loss:0.00061

learning rate = 0.00000 Epoch:50, Train_loss:0.00014, Test_loss:0.00061

==================== Done ====================
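The learning rates in the log can be checked by hand. With `eta_min = 0` (the default), `CosineAnnealingLR` follows the closed form lr_t = lr_0 · (1 + cos(π·t / T_max)) / 2 when it is stepped once per epoch. The sketch below evaluates that formula with lr_0 = 0.1 and T_max = 50 from the training code. It assumes the scheduler is stepped once per epoch inside `train`, which the log suggests but the text does not show; the helper name `cosine_lr` is illustrative.

```python
import math


def cosine_lr(step, base_lr=0.1, t_max=50, eta_min=0.0):
    """Closed-form cosine annealing schedule, evaluated at a given step."""
    return eta_min + (base_lr - eta_min) * (1 + math.cos(math.pi * step / t_max)) / 2


for step in (1, 2, 25, 50):
    print(f"epoch {step:2d}: lr = {cosine_lr(step):.5f}")
# epoch  1: lr = 0.09990
# epoch  2: lr = 0.09961
# epoch 25: lr = 0.05000
# epoch 50: lr = 0.00000
```

These values match the `learning rate` entries printed for epochs 1, 2, 25, and 50, so the schedule falls from 0.1 to 0 over the whole run.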

4.1 Loss plot

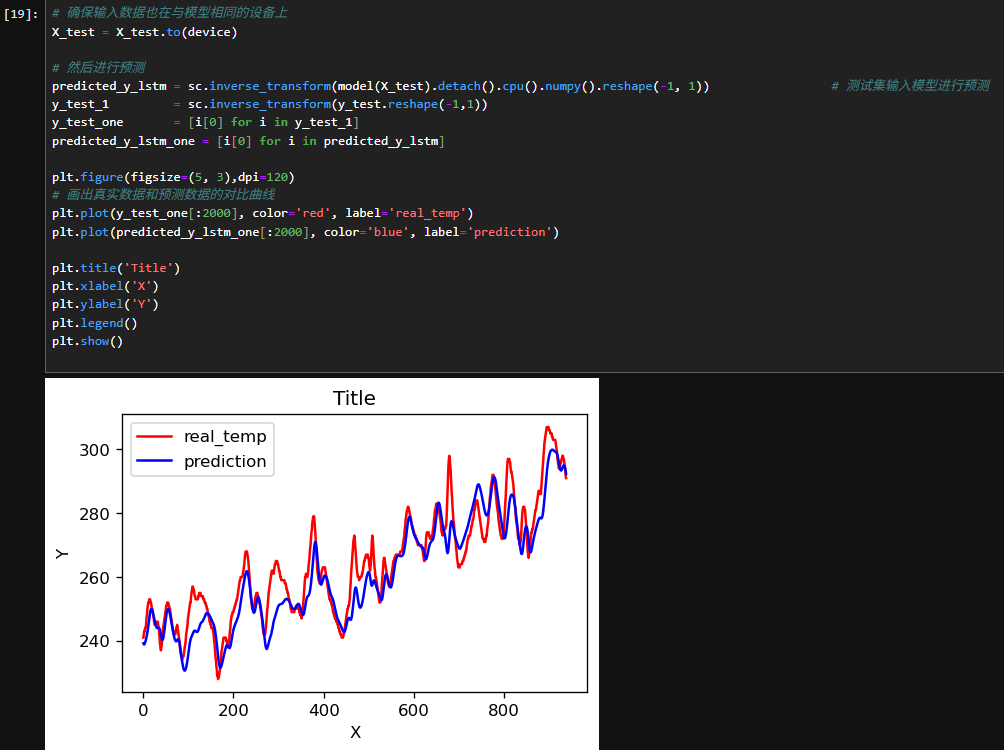

4.2 Using the model for prediction

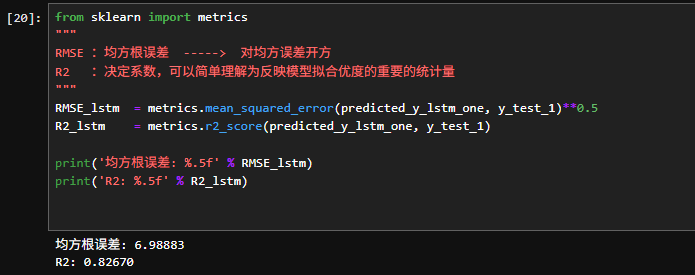

4.3 R² evaluation
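No code is shown for this step. For reference, the coefficient of determination is R² = 1 − SS_res / SS_tot, and RMSE is the square root of the mean squared error. The plain-Python sketch below computes both. The function names and the toy values are illustrative and are not results from this experiment.

```python
import math


def rmse(y_true, y_pred):
    """Root mean squared error between two equal-length sequences."""
    n = len(y_true)
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / n)


def r2_score(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_true = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    return 1 - ss_res / ss_tot


# Toy values: only the last prediction is off, by 1
y_true = [1.0, 2.0, 3.0, 4.0]
y_pred = [1.0, 2.0, 3.0, 5.0]
print(rmse(y_true, y_pred))      # 0.5
print(r2_score(y_true, y_pred))  # 0.8
```

An R² of 1 means perfect predictions, and 0 means the model does no better than always predicting the mean of the targets. In practice `sklearn.metrics.r2_score` computes the same quantity.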

5. Summary

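- Core strengths of the LSTM model

Modeling time-series data: LSTM (long short-term memory) networks are designed to learn long-term dependencies in sequence data. In a time-series problem such as fire temperature prediction, where the temperature depends strongly on its recent history, an LSTM can capture the relationship between past and future temperatures.

Mitigating vanishing gradients: a plain RNN is prone to vanishing gradients on long sequences. LSTM mitigates this with a memory cell (cell state) and gating mechanisms, which let it retain information from far back in the sequence.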

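- Using PyTorch

Flexibility and ease of use: PyTorch offers a concise API, and when implementing an LSTM it is easy to adjust the network structure, the training parameters, and the optimization method.

Debugging and visualization: PyTorch's dynamic computation graph makes debugging more intuitive. With complex deep learning models in particular, intermediate results such as model outputs and gradients can be inspected step by step.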

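- Training and evaluation

Importance of preprocessing: preprocessing the time series, especially standardization or normalization, can noticeably improve LSTM training. Temperature data may fluctuate irregularly, so denoising and handling missing values also matter for the model's success.

Model evaluation: for a regression task such as fire temperature prediction, common metrics are mean squared error (MSE), root mean squared error (RMSE), and the coefficient of determination (R²). They quantify prediction accuracy and guide further tuning of hyperparameters and architecture.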

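- Model performance

Training and test error: watching both errors during training may reveal a low training error alongside a higher test error, which suggests overfitting. Cross-validation helps detect it, and regularization helps reduce it.

Temporal dependence: LSTM can capture relationships over long time spans, but some data is dominated by short-term dependencies. In that case the number of layers, the hidden size, and similar parameters may need adjusting.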
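For reference, the memory cell and gating mechanism mentioned in the summary are usually written as follows. This is the standard LSTM formulation, with $\sigma$ the logistic sigmoid and $\odot$ element-wise multiplication; the notation is not taken from the original post.

```latex
\begin{aligned}
f_t &= \sigma(W_f x_t + U_f h_{t-1} + b_f) && \text{(forget gate)} \\
i_t &= \sigma(W_i x_t + U_i h_{t-1} + b_i) && \text{(input gate)} \\
o_t &= \sigma(W_o x_t + U_o h_{t-1} + b_o) && \text{(output gate)} \\
\tilde{c}_t &= \tanh(W_c x_t + U_c h_{t-1} + b_c) && \text{(candidate cell state)} \\
c_t &= f_t \odot c_{t-1} + i_t \odot \tilde{c}_t && \text{(cell state update)} \\
h_t &= o_t \odot \tanh(c_t) && \text{(hidden state)}
\end{aligned}
```

Because $c_t$ is updated additively, gradients can flow through the cell state across many time steps when $f_t$ stays close to 1, which is why LSTM is less prone to vanishing gradients than a plain RNN.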