Deploying a highly available k8s cluster on Ubuntu with kubeasz

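- Test environment

- Host list

- Software list

- Deploying highly available Kubernetes with kubeasz

- Configure package sources

- Configure the hosts file

- Install ansible and set up passwordless SSH login

- Download the kubeasz project and components

- Deploy the cluster

- Deploy the components

- Start the installation

- Modify the config configuration file

- Add a master node

- Add a kube_node node

- Log in to the dashboard

- Check which node and port the dashboard container is running on

- Access the dashboard from a browser

- Generate a token to log in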

Test environment

kubeasz and K8S version compatibility

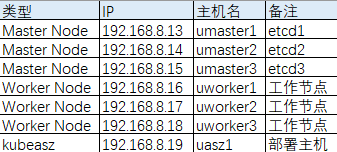

Host list

Software list

Operating system: Ubuntu Server 25.04

k8s version: 1.32.3

calico 3.28.3

kubeasz 3.6.6

etcd v3.5.20

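Deploying highly available Kubernetes with kubeasz

Basic configuration of the deployment node

The deployment node here is 192.168.8.19. It is responsible for the following:

1. Downloading installation resources from the internet

2. Optionally re-tagging some images and pushing them to the company's internal image registry

3. Initializing the master nodes

4. Initializing the worker nodes

5. Ongoing cluster maintenance, including adding and removing master nodes, adding and removing worker nodes, and backing up and restoring etcd data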

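Configure package sources

1. Configure the local CD-ROM source

Insert the system installation disc into the optical drive and mount it at /mnt: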

mount /dev/cdrom /mnt

echo "deb file:///mnt plucky main restricted" >/etc/apt/sources.list.d/cdrom.list

2. Configure the Aliyun mirror

sudo cp /etc/apt/sources.list.d/ubuntu.sources /etc/apt/sources.list.d/ubuntu.sources.bak

cat > /etc/apt/sources.list.d/ubuntu.sources << EOF

Types: deb

URIs: http://mirrors.aliyun.com/ubuntu/

Suites: plucky plucky-updates plucky-security

Components: main restricted universe multiverse

Signed-By: /usr/share/keyrings/ubuntu-archive-keyring.gpg

EOF
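The suite names have to match the installed release (plucky for Ubuntu 25.04). As a minimal sketch, not part of the original steps, the stanza can be generated from the release codename instead of typed by hand. The function names `render_ubuntu_sources` and `codename_from_os_release` are hypothetical; the mirror URL and keyring path are the ones used above.

```shell
#!/bin/sh
# Hypothetical helper: print a deb822 stanza for a given codename and mirror.
render_ubuntu_sources() {
  codename="$1"
  mirror="${2:-http://mirrors.aliyun.com/ubuntu/}"
  printf 'Types: deb\n'
  printf 'URIs: %s\n' "$mirror"
  printf 'Suites: %s %s-updates %s-security\n' "$codename" "$codename" "$codename"
  printf 'Components: main restricted universe multiverse\n'
  printf 'Signed-By: /usr/share/keyrings/ubuntu-archive-keyring.gpg\n'
}

# Hypothetical helper: read VERSION_CODENAME from an os-release style file.
# The subshell keeps the sourced variables out of the calling shell.
codename_from_os_release() {
  file="${1:-/etc/os-release}"
  ( . "$file" 2>/dev/null && printf '%s\n' "$VERSION_CODENAME" )
}

# Preview only: prints the stanza for plucky to stdout and changes nothing.
render_ubuntu_sources plucky
```

To apply it, one would run something like `render_ubuntu_sources "$(codename_from_os_release)" > /etc/apt/sources.list.d/ubuntu.sources` as root and then `apt update`, after checking the preview.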

Configure the hosts file

root@uasz1:~# cat /etc/hosts

127.0.0.1 localhost

# The following lines are desirable for IPv6 capable hosts

::1 ip6-localhost ip6-loopback

fe00::0 ip6-localnet

ff00::0 ip6-mcastprefix

ff02::1 ip6-allnodes

ff02::2 ip6-allrouters

192.168.8.13 umaster1.meng.com umaster1

192.168.8.14 umaster2.meng.com umaster2

192.168.8.15 umaster3.meng.com umaster3

192.168.8.16 uworker1.meng.com uworker1

192.168.8.17 uworker2.meng.com uworker2

192.168.8.18 uworker3.meng.com uworker3

192.168.8.19 uasz1.meng.com uasz1
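The same entries are needed on every machine, so it can help to add them idempotently rather than by hand. The following is a sketch under that assumption; `ensure_hosts_line` is a hypothetical helper, and the demo writes to a scratch file rather than to /etc/hosts.

```shell
#!/bin/sh
# Hypothetical helper: append "IP FQDN SHORTNAME" to a hosts file
# unless a line whose first field is exactly that IP already exists.
ensure_hosts_line() {
  file="$1"; ip="$2"; fqdn="$3"; short="$4"
  if awk -v ip="$ip" '$1 == ip { found = 1 } END { exit !found }' "$file" 2>/dev/null; then
    return 0
  fi
  printf '%s %s %s\n' "$ip" "$fqdn" "$short" >> "$file"
}

# Demo against a scratch file: the second call adds nothing.
preview="$(mktemp)"
ensure_hosts_line "$preview" 192.168.8.13 umaster1.meng.com umaster1
ensure_hosts_line "$preview" 192.168.8.13 umaster1.meng.com umaster1
cat "$preview"
rm -f "$preview"
```

Pointing the first argument at /etc/hosts (as root) would apply the entry for real. The match is on the IP only, so an existing line with a different name for that IP is left untouched.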

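Install ansible and set up passwordless SSH login:

Install ansible with apt, then copy the deployment node's public key to the master, node, and etcd hosts so that the deployment node can log in to them without a password.

Note: run the following on the deployment node.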

root@uasz1:~# apt update && apt install ansible -y

root@uasz1:~# ssh-keygen # generate a key pair; press Enter at every prompt

root@uasz1:~# apt install sshpass -y # skip if already installed

#!/bin/bash

# Target host list
IP="
192.168.8.13
192.168.8.14
192.168.8.15
192.168.8.16
192.168.8.17
192.168.8.18
"
REMOTE_PORT="22"
REMOTE_USER="root"
REMOTE_PASS="root"

for REMOTE_HOST in ${IP}; do
  REMOTE_CMD="echo ${REMOTE_HOST} is successfully!"
  # Add the remote host's public key to known_hosts to avoid the interactive prompt
  ssh-keyscan -p "${REMOTE_PORT}" "${REMOTE_HOST}" >> ~/.ssh/known_hosts
  sshpass -p "${REMOTE_PASS}" ssh-copy-id "${REMOTE_USER}@${REMOTE_HOST}"
  if [ $? -eq 0 ]; then
    echo "${REMOTE_HOST}: passwordless login configured!"
    # Provide a "python" command on the remote host
    ssh "${REMOTE_HOST}" ln -sv /usr/bin/python3 /usr/bin/python
  else
    echo "${REMOTE_HOST}: passwordless login setup failed!"
  fi
done

# Verify passwordless login

ssh 192.168.8.13
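Checking one host by hand does not show whether the key reached all six machines. A minimal sketch of a batch check follows, assuming the root account used in the script above. `check_passwordless` and the `SSH_CMD` / `SSH_USER` override variables are hypothetical names; `BatchMode=yes` makes ssh fail instead of prompting for a password.

```shell
#!/bin/sh
# Hypothetical helper: report, per host, whether key-based login works.
# SSH_CMD can be overridden (for example for a dry run); it defaults to ssh.
check_passwordless() {
  ssh_cmd="${SSH_CMD:-ssh}"
  user="${SSH_USER:-root}"
  failed=0
  for host in "$@"; do
    # BatchMode=yes disables password prompts; ConnectTimeout bounds the wait.
    if "$ssh_cmd" -o BatchMode=yes -o ConnectTimeout=5 "${user}@${host}" true; then
      echo "${host}: ok"
    else
      echo "${host}: FAILED"
      failed=1
    fi
  done
  return "$failed"
}

# Usage on the deployment node (not executed here):
#   check_passwordless 192.168.8.13 192.168.8.14 192.168.8.15 \
#                      192.168.8.16 192.168.8.17 192.168.8.18
```

The function returns non-zero if any host fails, so it can gate the later ezctl steps in a wrapper script.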

Download the kubeasz project and components

root@uasz1:~# mkdir -p /data/kubeasz

root@uasz1:~# cd /data/kubeasz

root@uasz1:/data/kubeasz# wget https://github.com/easzlab/kubeasz/releases/download/3.6.6/ezdown

root@uasz1:/data/kubeasz# chmod +x ezdown

# Download the kubeasz code, binaries, and default container images; this also starts a registry container and pushes the downloaded images to it

root@uasz1:/data/kubeasz# ./ezdown -D

root@uasz1:/data/kubeasz# ll /etc/kubeasz/ # all kubeasz files and configuration live under this path

total 148

drwxrwxr-x 13 root root 4096 May 19 21:47 ./

drwxr-xr-x 112 root root 4096 May 19 22:24 ../

-rw-rw-r-- 1 root root 20304 Mar 23 20:24 ansible.cfg

drwxr-xr-x 5 root root 4096 May 19 21:14 bin/

drwxr-xr-x 3 root root 4096 May 19 21:47 clusters/

drwxrwxr-x 8 root root 4096 Mar 23 20:30 docs/

drwxr-xr-x 3 root root 4096 May 19 21:27 down/

drwxrwxr-x 2 root root 4096 Mar 23 20:30 example/

-rwxrwxr-x 1 root root 25868 Mar 23 20:24 ezctl*

-rwxrwxr-x 1 root root 32772 Mar 23 20:24 ezdown*

drwxrwxr-x 4 root root 4096 Mar 23 20:30 .github/

-rw-rw-r-- 1 root root 301 Mar 23 20:24 .gitignore

drwxrwxr-x 10 root root 4096 Mar 23 20:30 manifests/

drwxrwxr-x 2 root root 4096 Mar 23 20:30 pics/

drwxrwxr-x 2 root root 4096 Mar 23 20:30 playbooks/

-rw-rw-r-- 1 root root 6404 Mar 23 20:24 README.md

drwxrwxr-x 22 root root 4096 Mar 23 20:30 roles/

drwxrwxr-x 2 root root 4096 Mar 23 20:30 tools/

Deploy the cluster

root@uasz1:/data/kubeasz# cd /etc/kubeasz/

root@uasz1:/etc/kubeasz# ./ezctl new k8s-cluster01 # create a new managed cluster

2025-05-19 21:47:03 [ezctl:145] DEBUG generate custom cluster files in /etc/kubeasz/clusters/k8s-cluster01 # path of this cluster's configuration files

2025-05-19 21:47:04 [ezctl:151] DEBUG set versions

2025-05-19 21:47:04 [ezctl:179] DEBUG cluster k8s-cluster01: files successfully created.

2025-05-19 21:47:04 [ezctl:180] INFO next steps 1: to config '/etc/kubeasz/clusters/k8s-cluster01/hosts'

2025-05-19 21:47:04 [ezctl:181] INFO next steps 2: to config '/etc/kubeasz/clusters/k8s-cluster01/config.yml'

Configure the ansible hosts file used for cluster management

root@uasz1:/etc/kubeasz/clusters/k8s-cluster01# cat hosts

# 'etcd' cluster should have odd member(s) (1,3,5,...)

[etcd]

192.168.8.13

192.168.8.14

192.168.8.15

# master node(s), set unique 'k8s_nodename' for each node

# CAUTION: 'k8s_nodename' must consist of lower case alphanumeric characters, '-' or '.',

# and must start and end with an alphanumeric character

[kube_master]

192.168.8.13 k8s_nodename='umaster1'

192.168.8.14 k8s_nodename='umaster2'

192.168.8.15 k8s_nodename='umaster3'

# work node(s), set unique 'k8s_nodename' for each node

# CAUTION: 'k8s_nodename' must consist of lower case alphanumeric characters, '-' or '.',

# and must start and end with an alphanumeric character

[kube_node]

192.168.8.16 k8s_nodename='uworker1'

192.168.8.17 k8s_nodename='uworker2'

192.168.8.18 k8s_nodename='uworker3'

# [optional] harbor server, a private docker registry

# 'NEW_INSTALL': 'true' to install a harbor server; 'false' to integrate with existed one

[harbor]

#192.168.1.8 NEW_INSTALL=false

# [optional] loadbalance for accessing k8s from outside

[ex_lb]

#192.168.1.6 LB_ROLE=backup EX_APISERVER_VIP=192.168.1.250 EX_APISERVER_PORT=8443

#192.168.1.7 LB_ROLE=master EX_APISERVER_VIP=192.168.1.250 EX_APISERVER_PORT=8443

# [optional] ntp server for the cluster

[chrony]

#192.168.1.1

[all:vars]

# --------- Main Variables ---------------

# Secure port for apiservers

SECURE_PORT="6443"

# Cluster container-runtime supported: docker, containerd

# if k8s version >= 1.24, docker is not supported

CONTAINER_RUNTIME="containerd"

# Network plugins supported: calico, flannel, kube-router, cilium, kube-ovn

CLUSTER_NETWORK="calico"

# Service proxy mode of kube-proxy: 'iptables' or 'ipvs'

PROXY_MODE="ipvs"

# K8S Service CIDR, not overlap with node(host) networking

SERVICE_CIDR="10.68.0.0/16"

# Cluster CIDR (Pod CIDR), not overlap with node(host) networking

CLUSTER_CIDR="172.20.0.0/16"

# NodePort Range

NODE_PORT_RANGE="30000-32767"

# Cluster DNS Domain

CLUSTER_DNS_DOMAIN=
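The comments in the hosts file above state two rules that are easy to break when editing by hand: the etcd group should have an odd number of members, and each `k8s_nodename` must be lowercase alphanumeric with `-` or `.`, starting and ending with an alphanumeric character. As a rough sketch, these can be checked before running any playbook. The function names are hypothetical and this is not something kubeasz itself provides.

```shell
#!/bin/sh
# Hypothetical helper: succeed if the name follows the k8s_nodename rule
# quoted in the inventory comments.
valid_nodename() {
  printf '%s\n' "$1" | LC_ALL=C grep -Eq '^[a-z0-9]([a-z0-9.-]*[a-z0-9])?$'
}

# Hypothetical helper: count host lines (non-empty, not commented out)
# under the [group] header of an ansible INI inventory.
count_group_hosts() {
  awk -v g="[$2]" '
    /^\[/ { in_group = ($1 == g); next }
    in_group && NF && $1 !~ /^#/ { n++ }
    END { print n + 0 }
  ' "$1"
}

# Hypothetical helper: succeed if the [etcd] group has an odd member count.
etcd_count_is_odd() {
  n="$(count_group_hosts "$1" etcd)"
  [ $((n % 2)) -eq 1 ]
}

# Usage on the deployment node (not executed here):
#   etcd_count_is_odd /etc/kubeasz/clusters/k8s-cluster01/hosts || echo "etcd count is even"
#   valid_nodename umaster1 || echo "bad node name"
```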
