Preface

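The previous article, "Virtual LAN (VLAN)", described what virtual NICs (veth) and virtual bridges do, and used iptables to connect that virtual LAN to the internet. Having got that far, the natural next thought is today's mainstream container technology, Docker, so this article looks at how Docker's bridge networking compares.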

Hypotheses

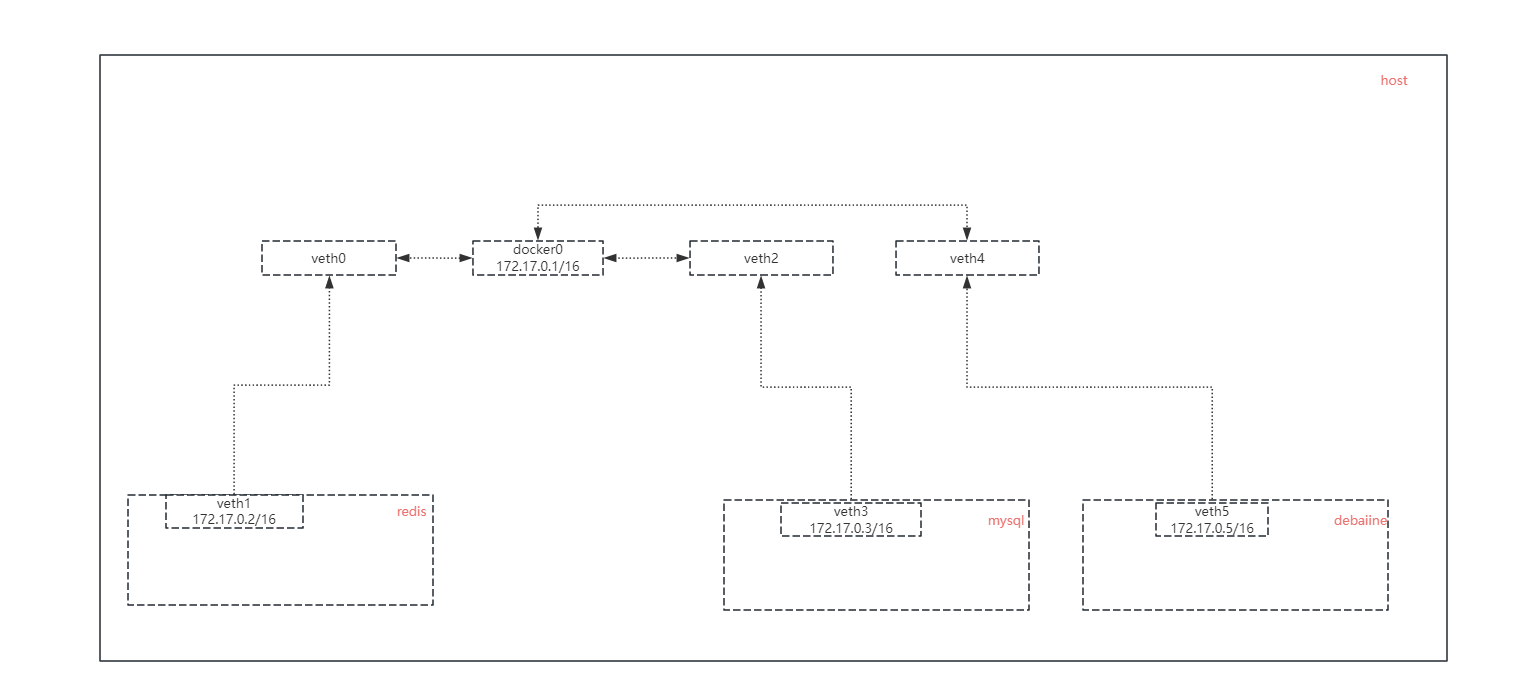

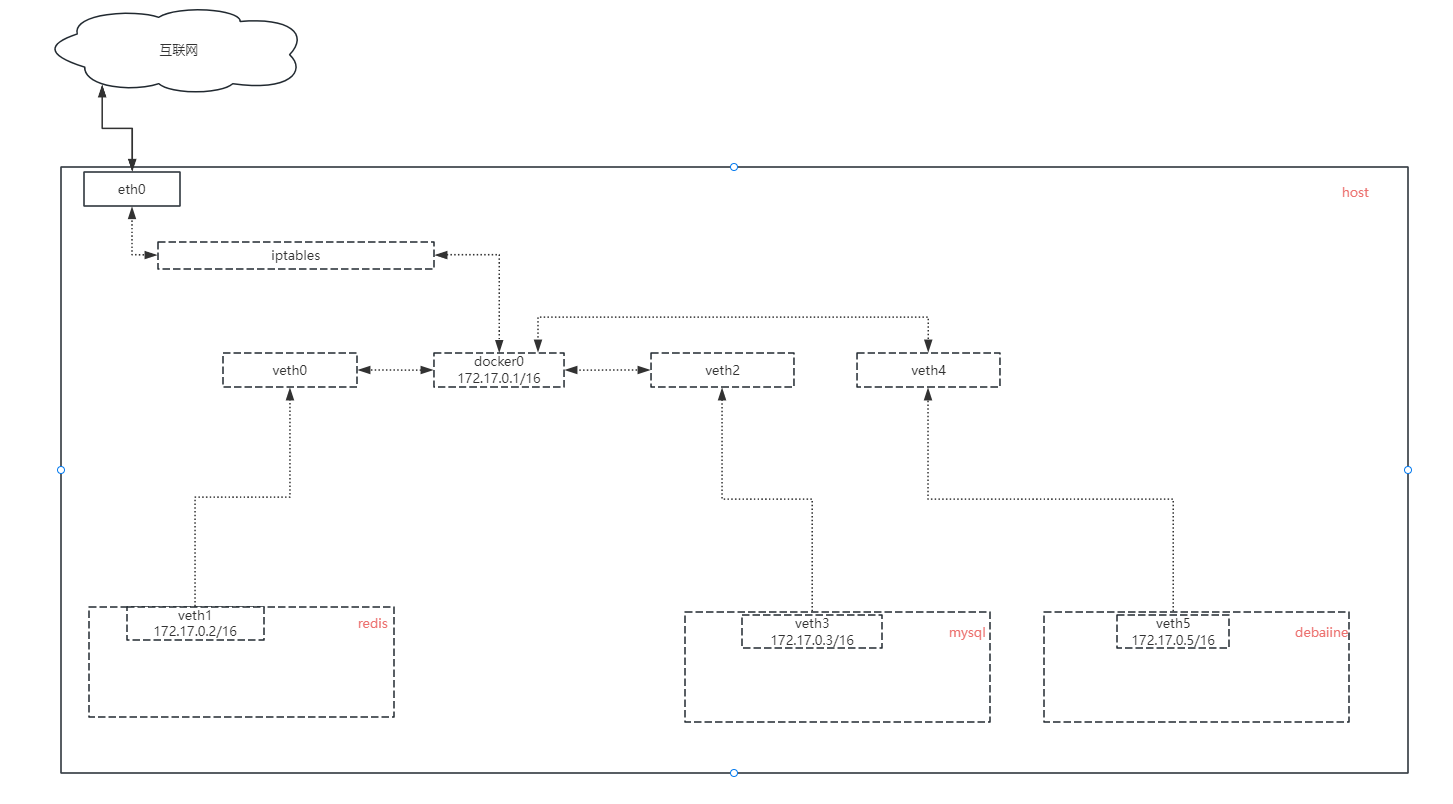

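Docker's most familiar single-host network modes are host, bridge, and none; only bridge mode is analyzed here. After the previous article the bridge concept should be familiar: a bridge is a virtual switch that forwards frames at the data link layer based on MAC addresses.

So we can make a bold guess: Docker builds its internal network communication on the same model.

- Hypothesis 1: when the Docker engine creates a container, it automatically creates a veth pair for it and assigns a private IP, then attaches one end of the pair to the docker0 bridge and places the other end inside the container's network namespace.

- Hypothesis 2: Docker likewise uses the NAT capability of iptables to forward container traffic to the internet.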
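To make the two hypotheses concrete, here is a minimal sketch of the commands that would build such a setup by hand. The bridge name br-demo, the namespace demo, the veth names, and the 172.30.0.0/16 range are all invented for illustration; this is not Docker's own code. The script only prints the commands unless DRY_RUN=0 is set.

```shell
#!/bin/sh
# Sketch only: bridge, namespace, veth names and the 172.30.0.0/16 range are
# made up for illustration and are not what Docker itself would pick.

# Dry-run by default: print each command instead of executing it.
# With DRY_RUN=0 the commands really run and need root.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        printf '+ %s\n' "$*"
    else
        "$@"
    fi
}

# $1 bridge name, $2 namespace, $3 gateway CIDR, $4 container CIDR, $5 subnet
plan_container_net() {
    br=$1; ns=$2; gw=$3; addr=$4; subnet=$5

    # Hypothesis 1: a bridge acting as gateway, plus one veth pair per container.
    run ip link add "$br" type bridge
    run ip addr add "$gw" dev "$br"
    run ip link set "$br" up
    run ip netns add "$ns"
    run ip link add veth-host type veth peer name veth-ctr
    run ip link set veth-host master "$br"
    run ip link set veth-host up
    run ip link set veth-ctr netns "$ns"
    run ip netns exec "$ns" ip addr add "$addr" dev veth-ctr
    run ip netns exec "$ns" ip link set veth-ctr up
    run ip netns exec "$ns" ip route add default via "${gw%/*}"

    # Hypothesis 2: masquerade traffic that leaves the subnet through any
    # interface other than the bridge itself.
    run iptables -t nat -A POSTROUTING -s "$subnet" ! -o "$br" -j MASQUERADE
}

plan_container_net br-demo demo 172.30.0.1/16 172.30.0.2/16 172.30.0.0/16
```

If the commands are applied for real, forwarding between the bridge and the uplink also requires net.ipv4.ip_forward=1 on the host.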

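Verification

Inspecting the host's network interfaces

List the Docker containers and the host's network interfaces, and check whether a Docker bridge and veth devices exist.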

shell

# List the docker containers running on this host (mysql, redis, halo, debian)

[root@VM-8-10-centos ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

56ffaf39316a debian "bash" 23 hours ago Up 7 minutes debian

c8a273ce122e halohub/halo:1.5.3 "/bin/sh -c 'java -X…" 5 months ago Up 47 hours 0.0.0.0:8090->8090/tcp, :::8090->8090/tcp halo

d09fcfa7de0f redis "docker-entrypoint.s…" 12 months ago Up 5 weeks 0.0.0.0:8805->6379/tcp, :::8805->6379/tcp redis

87a2192f6db4 mysql:5.7 "docker-entrypoint.s…" 2 years ago Up 5 weeks 0.0.0.0:3306->3306/tcp, :::3306->3306/tcp, 33060/tcp mysql

# List the host's network interfaces (confirm that docker0 and veth devices exist)

[root@VM-12-15-centos ~]# ip link

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN mode DEFAULT group default qlen 1000 link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP mode DEFAULT group default qlen 1000 link/ether 52:54:00:b3:6f:20 brd ff:ff:ff:ff:ff:ff altname enp0s5 altname ens5

3: br-67cf5bfe7a5c: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP mode DEFAULT group default link/ether 02:42:c5:07:22:c7 brd ff:ff:ff:ff:ff:ff

4: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN mode DEFAULT group default link/ether 02:42:38:d6:1b:ea brd ff:ff:ff:ff:ff:ff

5: br-9fd151a807e7: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP mode DEFAULT group default link/ether 02:42:35:7f:ed:76 brd ff:ff:ff:ff:ff:ff

315: vethf2afb37@if314: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master br-67cf5bfe7a5c state UP mode DEFAULT group default link/ether 3a:06:f0:8d:06:f6 brd ff:ff:ff:ff:ff:ff link-netnsid 12

317: veth1ec30f9@if316: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master br-9fd151a807e7 state UP mode DEFAULT group default link/ether 4a:ad:1a:b0:5a:5f brd ff:ff:ff:ff:ff:ff link-netnsid 0

319: vethc408286@if318: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master br-67cf5bfe7a5c state UP mode DEFAULT group default link/ether 26:b0:3c:f4:c5:5b brd ff:ff:ff:ff:ff:ff link-netnsid 1

321: veth68fb8c6@if320: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master br-67cf5bfe7a5c state UP mode DEFAULT group default link/ether 96:ca:a9:42:f8:a8 brd ff:ff:ff:ff:ff:ff link-netnsid 9

323: veth6dba394@if322: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master br-67cf5bfe7a5c state UP mode DEFAULT group default link/ether 92:1c:5e:9c:a2:b3 brd ff:ff:ff:ff:ff:ff link-netnsid 4

325: veth1509ed0@if324: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master br-67cf5bfe7a5c state UP mode DEFAULT group default link/ether fa:22:33:da:12:e0 brd ff:ff:ff:ff:ff:ff link-netnsid 11

329: vethef1dbac@if328: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master br-67cf5bfe7a5c state UP mode DEFAULT group default link/ether aa:db:d2:10:36:60 brd ff:ff:ff:ff:ff:ff link-netnsid 3

331: veth69d3e7d@if330: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master br-67cf5bfe7a5c state UP mode DEFAULT group default link/ether 86:45:d0:0e:6b:a7 brd ff:ff:ff:ff:ff:ff link-netnsid 5

335: veth98588ae@if334: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master br-67cf5bfe7a5c state UP mode DEFAULT group default link/ether 86:59:55:39:17:ad brd ff:ff:ff:ff:ff:ff link-netnsid 7

349: vetha84d717@if348: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master br-67cf5bfe7a5c state UP mode DEFAULT group default link/ether ee:7f:d2:27:15:83 brd ff:ff:ff:ff:ff:ff link-netnsid 6

354: veth1@if355: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master br-mybridge state UP mode DEFAULT group default qlen 1000 link/ether 72:c8:9e:24:a6:a3 brd ff:ff:ff:ff:ff:ff link-netns n1

356: br-mybridge: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP mode DEFAULT group default qlen 1000 link/ether 72:c8:9e:24:a6:a3 brd ff:ff:ff:ff:ff:ff

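ip link shows that the host has a virtual bridge named docker0. On the host that runs the four containers, the expectation is four veth devices with master docker0, one each for debian, halo, redis, and mysql. Note, however, that the listing above appears to come from a different machine (the prompt reads VM-12-15-centos rather than VM-8-10-centos): there docker0 is in state DOWN with NO-CARRIER, and every veth device is attached to br-67cf5bfe7a5c, br-9fd151a807e7, or br-mybridge rather than to docker0. The docker network inspect bridge output in the next step is what actually confirms that the four containers sit on docker0.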
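Reading the master field off a long ip link listing by eye is error-prone. As a small aid, the sketch below counts veth devices per bridge from ip -o link output; the function name veth_by_bridge is made up here, and the sample input is three lines taken from the listing above.

```shell
#!/bin/sh
# Reads `ip -o link` output on stdin and prints "<bridge> <veth count>" per line.
veth_by_bridge() {
    awk '
        {
            name = $2
            sub(/:$/, "", name)      # strip the trailing colon
            sub(/@.*$/, "", name)    # strip the "@ifNNN" peer suffix
            if (name !~ /^veth/) next
            master = "(no bridge)"
            for (i = 3; i < NF; i++)
                if ($i == "master") master = $(i + 1)
            count[master]++
        }
        END { for (m in count) print m, count[m] }
    ' | LC_ALL=C sort
}

# Three lines from the listing above: docker0 is skipped, each bridge gets one veth.
veth_by_bridge <<'EOF'
4: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN mode DEFAULT group default link/ether 02:42:38:d6:1b:ea brd ff:ff:ff:ff:ff:ff
315: vethf2afb37@if314: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master br-67cf5bfe7a5c state UP mode DEFAULT group default link/ether 3a:06:f0:8d:06:f6 brd ff:ff:ff:ff:ff:ff link-netnsid 12
317: veth1ec30f9@if316: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master br-9fd151a807e7 state UP mode DEFAULT group default link/ether 4a:ad:1a:b0:5a:5f brd ff:ff:ff:ff:ff:ff link-netnsid 0
EOF
```

On a live host the same function can be fed with ip -o link show type veth, which is read-only and needs no root.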

Inspecting the bridge IP and communication between Docker containers

shell

# The default docker0 bridge has the IP address 172.17.0.1/16

[root@VM-8-10-centos ~]# ip addr show docker0

3: docker0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default
    link/ether 02:42:6f:d7:19:7e brd ff:ff:ff:ff:ff:ff
    inet 172.17.0.1/16 scope global docker0
       valid_lft forever preferred_lft forever
    inet6 fe80::42:6fff:fed7:197e/64 scope link
       valid_lft forever preferred_lft forever

# Check the IP addresses of the containers on the bridge network (172.17.0.2/16, 172.17.0.3/16, 172.17.0.4/16, 172.17.0.5/16)

[root@VM-8-10-centos ~]# docker network inspect bridge

[
    {
        "Name": "bridge",
        "Id": "2dc75e446719be8cad37e1ea9ae7d1385fcc728b8177646a3c62929c2b289e94",
        "Created": "2024-04-24T09:46:14.399901891+08:00",
        "Scope": "local",
        "Driver": "bridge",
        "EnableIPv6": false,
        "IPAM": {
            "Driver": "default",
            "Options": null,
            "Config": [
                {
                    "Subnet": "172.17.0.0/16",
                    "Gateway": "172.17.0.1"
                }
            ]
        },
        "Internal": false,
        "Attachable": false,
        "Ingress": false,
        "ConfigFrom": {
            "Network": ""
        },
        "ConfigOnly": false,
        "Containers": {
            "56ffaf39316ac9f776c6b3e2a8a79e9f42dfab42aa1f7de7525bd26c686defaa": {
                "Name": "debian",
                "EndpointID": "47dd9441d4a4c8b09afea3bca23652b80ba35e6baa13d44ec21ec89522e722a6",
                "MacAddress": "02:42:ac:11:00:05",
                "IPv4Address": "172.17.0.5/16",
                "IPv6Address": ""
            },
            "87a2192f6db48c9bf2996bf25c79d4c18c3ae2975cac9d55e7fdfdcec03f896b": {
                "Name": "mysql",
                "EndpointID": "00b93de23c5abf2ed1349bac1c2ec93bf7ed516370dabf23348b980f19cfaa9c",
                "MacAddress": "02:42:ac:11:00:02",
                "IPv4Address": "172.17.0.2/16",
                "IPv6Address": ""
            },
            "c8a273ce122ef5479583908f40898141a90933a3c41c8028dc7966b9af4c465d": {
                "Name": "halo",
                "EndpointID": "ba8ef83c80f3edb6e7987c95ae6d56816a1fc00d07e8bb2bfbb0f19ef543badf",
                "MacAddress": "02:42:ac:11:00:04",
                "IPv4Address": "172.17.0.4/16",
                "IPv6Address": ""
            },
            "d09fcfa7de0f2a7b3ef7927a7e53a8a53fb93021b119b1376fe4616381c5a57c": {
                "Name": "redis",
                "EndpointID": "afbc9128f7d27becfbf64e843a92d36ce23800cd42c131e550abea7afb6a131e",
                "MacAddress": "02:42:ac:11:00:03",
                "IPv4Address": "172.17.0.3/16",
                "IPv6Address": ""
            }
        },
        "Options": {
            "com.docker.network.bridge.default_bridge": "true",
            "com.docker.network.bridge.enable_icc": "true",
            "com.docker.network.bridge.enable_ip_masquerade": "true",
            "com.docker.network.bridge.host_binding_ipv4": "0.0.0.0",
            "com.docker.network.bridge.name": "docker0",
            "com.docker.network.driver.mtu": "1500"
        },
        "Labels": {}
    }
]

# Enter the debian container and test internal and internet connectivity

[root@VM-8-10-centos ~]# docker exec -it debian /bin/bash

root@56ffaf39316a:/# ping 172.17.0.1

PING 172.17.0.1 (172.17.0.1) 56(84) bytes of data.

64 bytes from 172.17.0.1: icmp_seq=1 ttl=64 time=0.071 ms

64 bytes from 172.17.0.1: icmp_seq=2 ttl=64 time=0.036 ms

--- 172.17.0.1 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 999ms

rtt min/avg/max/mdev = 0.036/0.053/0.071/0.017 ms

root@56ffaf39316a:/# ping 172.17.0.3

PING 172.17.0.3 (172.17.0.3) 56(84) bytes of data.

64 bytes from 172.17.0.3: icmp_seq=1 ttl=64 time=0.067 ms

64 bytes from 172.17.0.3: icmp_seq=2 ttl=64 time=0.047 ms

--- 172.17.0.3 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1000ms

rtt min/avg/max/mdev = 0.047/0.057/0.067/0.010 ms

root@56ffaf39316a:/# ping baidu.com

PING baidu.com (39.156.66.10) 56(84) bytes of data.

64 bytes from 39.156.66.10 (39.156.66.10): icmp_seq=1 ttl=247 time=59.0 ms

64 bytes from 39.156.66.10 (39.156.66.10): icmp_seq=2 ttl=247 time=55.4 ms

--- baidu.com ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1001ms

rtt min/avg/max/mdev = 55.400/57.221/59.043/1.821 ms

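Summary

The shell output shows that docker0 has the IP 172.17.0.1/16, that the containers on the docker0 subnet can reach the bridge and each other, and that ping baidu.com confirms internet access. Docker's bridge mode therefore matches the virtual LAN setup from the previous article: both use a virtual bridge for internal communication.

How Docker containers communicate with the internet

The previous article accidentally left a loose end. firewalld installs many built-in rules into iptables, which makes traffic analysis awkward, so I simply stopped firewalld. That turned out to have a side effect: once firewalld stops, the iptables rules are flushed as well (as the empty listings below show, this includes whatever rules Docker had installed). It seemed harmless at the time, but on reflection the virtual LAN could reach the internet largely thanks to the NAT capability of iptables. Flushing iptables removes the NAT rules, so anything that depends on them loses its network connection. The shell commands below reproduce and analyze this.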

shell

# Stop firewalld

[root@VM-8-10-centos ~]# systemctl stop firewalld

# Check iptables

[root@VM-8-10-centos ~]# iptables -nvL

Chain INPUT (policy ACCEPT 0 packets, 0 bytes)
 pkts bytes target prot opt in out source destination

Chain FORWARD (policy ACCEPT 0 packets, 0 bytes)
 pkts bytes target prot opt in out source destination

Chain OUTPUT (policy ACCEPT 0 packets, 0 bytes)
 pkts bytes target prot opt in out source destination

[root@VM-8-10-centos ~]# iptables -t nat -nvL

Chain PREROUTING (policy ACCEPT 0 packets, 0 bytes)
 pkts bytes target prot opt in out source destination

Chain INPUT (policy ACCEPT 0 packets, 0 bytes)
 pkts bytes target prot opt in out source destination

Chain OUTPUT (policy ACCEPT 0 packets, 0 bytes)
 pkts bytes target prot opt in out source destination

Chain POSTROUTING (policy ACCEPT 0 packets, 0 bytes)
 pkts bytes target prot opt in out source destination

# Check the debian container's internet connectivity

[root@VM-8-10-centos ~]# docker exec -it debian /bin/bash

root@56ffaf39316a:/# ping baidu.com

PING baidu.com (110.242.68.66) 56(84) bytes of data.

--- baidu.com ping statistics ---

5 packets transmitted, 0 received, 100% packet loss, time 4000ms

# Check internal network connectivity

root@56ffaf39316a:/# ping 172.17.0.1

PING 172.17.0.1 (172.17.0.1) 56(84) bytes of data.

64 bytes from 172.17.0.1: icmp_seq=1 ttl=64 time=0.041 ms

64 bytes from 172.17.0.1: icmp_seq=2 ttl=64 time=0.046 ms

--- 172.17.0.1 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1000ms

rtt min/avg/max/mdev = 0.041/0.043/0.046/0.002 ms

root@56ffaf39316a:/# ping 172.17.0.2

PING 172.17.0.2 (172.17.0.2) 56(84) bytes of data.

64 bytes from 172.17.0.2: icmp_seq=1 ttl=64 time=0.081 ms

64 bytes from 172.17.0.2: icmp_seq=2 ttl=64 time=0.055 ms

--- 172.17.0.2 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1001ms

rtt min/avg/max/mdev = 0.055/0.068/0.081/0.013 ms

With iptables flushed, the container did lose its internet connection, while communication on the internal network was unaffected.
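One way to confirm that the missing piece is source NAT is to look for a MASQUERADE or SNAT target in the nat table's POSTROUTING chain. The sketch below does this on the text produced by iptables -t nat -S POSTROUTING (the rule-spec form of the -nvL listing used above); the helper name has_source_nat is made up for this example.

```shell
#!/bin/sh
# Reads `iptables -t nat -S POSTROUTING` output on stdin.
# Exit status 0 if any appended rule jumps to MASQUERADE or SNAT, 1 otherwise.
has_source_nat() {
    awk '
        $1 == "-A" {
            for (i = 2; i < NF; i++)
                if ($i == "-j" && ($(i + 1) == "MASQUERADE" || $(i + 1) == "SNAT"))
                    found = 1
        }
        END { exit !found }
    '
}

# A flushed chain lists nothing but its policy, as in the output above.
if printf '%s\n' '-P POSTROUTING ACCEPT' | has_source_nat; then
    echo "source NAT present"
else
    echo "no source NAT: packets leave with a private 172.17.0.x source address"
fi

# On a live host (needs root):
#   iptables -t nat -S POSTROUTING | has_source_nat && echo "source NAT present"
```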

Manually adding a NAT rule to restore the container's internet access

# Add a SNAT (masquerade) rule

[root@VM-8-10-centos ~]# iptables -t nat -A POSTROUTING -o eth0 -j MASQUERADE

# Check the debian container's internet connectivity

[root@VM-8-10-centos ~]# docker exec -it debian /bin/bash

root@56ffaf39316a:/# ping baidu.com

PING baidu.com (39.156.66.10) 56(84) bytes of data.

64 bytes from 39.156.66.10 (39.156.66.10): icmp_seq=1 ttl=247 time=55.8 ms

64 bytes from 39.156.66.10 (39.156.66.10): icmp_seq=2 ttl=247 time=55.4 ms

--- baidu.com ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1002ms

rtt min/avg/max/mdev = 55.386/55.610/55.834/0.224 ms

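Personal summary: a Docker container's internet access does depend on iptables, and the behavior is almost identical to the virtual LAN from the previous article (veth pairs on a Linux bridge, not 802.1Q tagging). I therefore see Docker bridge networking as a higher-level application of virtual LAN plus iptables.

Further questions

Is communication between docker containers also switched at layer 2?

From the earlier study of virtual LANs, a bridge is a virtual switch that works at the "data link layer" and forwards frames by MAC address. Working at layer 2 means it has no notion of IP while switching; it simply forwards frames according to their MAC addresses. If so, it should keep switching frames even after its IP address and the associated routes are removed.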

[root@VM-8-10-centos ~]# ip addr del 172.17.0.1/16 dev docker0

[root@VM-8-10-centos ~]# ip addr show docker0

3: docker0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default link/ether 02:42:6f:d7:19:7e brd ff:ff:ff:ff:ff:ffinet6 fe80::42:6fff:fed7:197e/64 scope link valid_lft forever preferred_lft forever

[root@VM-8-10-centos ~]# docker exec -it debian /bin/bash

root@56ffaf39316a:/# ping 172.17.0.3

PING 172.17.0.3 (172.17.0.3) 56(84) bytes of data.

64 bytes from 172.17.0.3: icmp_seq=1 ttl=64 time=0.066 ms

64 bytes from 172.17.0.3: icmp_seq=2 ttl=64 time=0.049 ms

--- 172.17.0.3 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1000ms

rtt min/avg/max/mdev = 0.049/0.057/0.066/0.008 ms
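The ping still succeeds because 172.17.0.5 and 172.17.0.3 sit in the same /16: the sender resolves the peer's MAC address with ARP and the bridge only has to switch the frame, so the address that was removed from docker0 is never involved. The on-link decision can be sketched as follows; same_subnet is a hypothetical helper written for this example, not part of any tool.

```shell
#!/bin/sh
# $1 and $2 are IPv4 addresses, $3 is the prefix length.
# Exit status 0 when both addresses fall in the same subnet.
same_subnet() {
    awk -v a="$1" -v b="$2" -v p="$3" '
        function to_int(ip,    o) {
            split(ip, o, ".")
            return ((o[1] * 256 + o[2]) * 256 + o[3]) * 256 + o[4]
        }
        BEGIN {
            block = 2 ^ (32 - p)          # number of addresses per subnet
            same = (int(to_int(a) / block) == int(to_int(b) / block))
            exit !same
        }
    '
}

for dst in 172.17.0.3 39.156.66.10; do
    if same_subnet 172.17.0.5 "$dst" 16; then
        echo "$dst: on-link, resolved by ARP and switched by the bridge"
    else
        echo "$dst: off-link, sent to the gateway 172.17.0.1"
    fi
done
```

With the bridge address removed, the off-link case is the one that would be expected to break, since nothing answers for the gateway 172.17.0.1 any more; the experiment above did not test this.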

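How do iptables and the routing table relate and differ? Which one decides the egress interface?

One question lingered from studying virtual LANs: **when a virtual bridge talks to the internet, who decides that traffic entering the bridge is forwarded to the egress interface?** Setting up NAT there required FORWARD and NAT rules in iptables, so it was natural to assume that iptables did it. But then what is the routing table for? **So is it iptables that forwards the traffic, or the routing table (ip route)?** More concretely: who moves traffic from the docker0 interface to the eth0 interface?

The full answer requires a deeper look at how iptables works, which is not repeated here; what follows is a personal conclusion, offered for reference only.

Personal conclusion: the routing table does not modify traffic; it only determines a packet's egress interface. iptables can filter packets and rewrite them (NAT, for example), and its FORWARD chain decides whether a routed packet is allowed through, but the forwarding itself is done by the kernel's IP forwarding, and the egress interface is ultimately chosen by the routing table. A DNAT rule in PREROUTING can change the destination before the routing lookup and so influence the result, yet the lookup is still the routing table's.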
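The routing decision itself is a longest-prefix match over the routing table. The sketch below imitates it on text in ip route format; the three routes (including the 10.0.8.x addresses) are invented sample data, and egress_dev is a hypothetical helper, not how the kernel is implemented.

```shell
#!/bin/sh
# $1 = destination IPv4 address; stdin = routes in `ip route` text format.
# Prints the device of the most specific matching route (longest prefix match).
egress_dev() {
    awk -v dst="$1" '
        function to_int(ip,    o) {
            split(ip, o, ".")
            return ((o[1] * 256 + o[2]) * 256 + o[3]) * 256 + o[4]
        }
        BEGIN { best = -1 }
        {
            net = $1; len = 32
            if (net == "default") { net = "0.0.0.0"; len = 0 }
            else if (split(net, parts, "/") == 2) { net = parts[1]; len = parts[2] + 0 }

            dev = ""
            for (i = 2; i < NF; i++)
                if ($i == "dev") dev = $(i + 1)

            block = 2 ^ (32 - len)
            if (dev != "" && len > best &&
                int(to_int(dst) / block) == int(to_int(net) / block)) {
                best = len; chosen = dev
            }
        }
        END { if (best < 0) exit 1; print chosen }
    '
}

# Assumed sample table; a real one comes from `ip route`.
routes='default via 10.0.8.1 dev eth0
10.0.8.0/22 dev eth0 proto kernel scope link src 10.0.8.10
172.17.0.0/16 dev docker0 proto kernel scope link src 172.17.0.1'

printf '%s\n' "$routes" | egress_dev 39.156.66.10   # only the default route matches
printf '%s\n' "$routes" | egress_dev 172.17.0.3     # the /16 is more specific
```

On a real host, ip route get 39.156.66.10 reports the kernel's actual choice, which makes it easy to check that no iptables rule is needed for eth0 to be selected.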

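Without SNAT, shouldn't packets still be able to reach the other network?

Every packet sent over IP carries a source address and a destination address. The destination address determines how the packet is delivered to the remote host; the source address tells the remote host who sent it. SNAT works by rewriting the source address. Does that mean that without SNAT a packet can still reach the remote network, and only the reply cannot find its way back?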

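# Suppose there are two cloud servers with public IPv4 addresses, xxx.xxx.xxx.xx1 and xxx.xxx.xxx.xx2, and another host x10 on xx1's LAN

# On host xx1

# Use SNAT to rewrite the source IP from xx1 to x10

[root@VM-8-10-centos ~]# iptables -t nat -A POSTROUTING -s xx1 -o eth0 -j SNAT --to-source xxx.xxx.xxx.x10

# Capture ICMP packets on eth0

[root@VM-8-10-centos ~]# tcpdump -i eth0 -p icmp -nv | grep x10

# On host xx2

# Capture ICMP packets on eth0

[root@VM-8-10-centos ~]# tcpdump -i eth0 -p icmp -nv

The tcpdump capture on xx1 shows that it did send packets with source address x10, but the capture on xx2 shows no packets from either xx1 or x10. Perhaps the packets were dropped somewhere along the route, or perhaps my understanding is wrong. One plausible explanation (an assumption, not verified here) is source address validation: cloud networks and ISPs commonly drop packets whose source address does not belong to the sender, so a packet with a rewritten source may never leave the provider's network.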
