Clustering based on word-frequency statistics (k-means)

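The dataset consists of three government report documents. They are plain .txt files, so no detailed description is given here.

The experiment has the following steps:

1. Read the data and filter out stop words

2. Count word frequencies

3. Hierarchical clustering based on the word frequencies of the three documents

4. K-means based on the word frequencies of the three documents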
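Steps 1 and 2 can be sketched on toy data as follows. This is only an illustration, not the script's exact code: the documents are assumed to be tokenized already (the real script uses `jieba.lcut` for that), and the names `count_terms` and `build_matrix` as well as the sample tokens are hypothetical.

```python
from collections import Counter

def count_terms(tokens, stop_words):
    """Count tokens, skipping stop words and whitespace-only tokens."""
    return Counter(t for t in tokens if t.strip() and t not in stop_words)

def build_matrix(docs, stop_words):
    """Map each term to a list holding its count in every document."""
    counts = [count_terms(tokens, stop_words) for tokens in docs]
    vocab = sorted(set().union(*counts))
    return {term: [c[term] for c in counts] for term in vocab}

# Toy, pre-tokenized documents (hypothetical data).
docs = [
    ["economy", "the", "growth", "economy"],
    ["the", "growth", "reform"],
    ["reform", "reform", "the", "economy"],
]
stop_words = {"the"}

matrix = build_matrix(docs, stop_words)
for term, row in matrix.items():
    print(term, row)
```

Each term ends up as a three-element vector, one count per document. Steps 3 and 4 cluster exactly this kind of vector.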

The code is as follows:

# Word-frequency module
import jieba
import pandas as pd

########## File 1 ##########
# Read the text
with open(r"D:\dataset\文件1.txt", "r", encoding="utf-8") as f:
    text = f.read()

# Read the stop-word list (assumed to hold one word per line)
with open(r"D:\dataset\中文停用詞表.txt", "r", encoding="utf-8") as f_stop:
    stop = set(f_stop.read().split())

# Segment the text, then drop stop words and whitespace-only tokens.
# Looping over the raw file contents would strip single characters, not words.
words = [w for w in jieba.lcut(text) if w.strip() and w not in stop]

# Count each word in one pass instead of calling list.count() per token
counts = {}
for w in words:
    counts[w] = counts.get(w, 0) + 1

# final_dict maps each word to its counts in files 1, 2 and 3
final_dict = {}
for w, n in counts.items():
    final_dict.setdefault(w, [0, 0, 0])[0] = n

d = sorted(counts.items(), key=lambda item: item[1], reverse=True)  # sort by count
cnt = sum(n for _, n in d)
print("Total word count of this document:", cnt)
print("Frequencies of the 20 most common words:")
for word, n in d[:20]:
    print(word, ":", n, "/", cnt)

# Save as .csv
pd.DataFrame(data=d).to_csv('count1.csv', encoding='utf-8')

########## File 2 ##########
# Read the text
with open(r"D:\dataset\文件2.txt", "r", encoding="utf-8") as f:
    text = f.read()

# Segment the text, then drop stop words and whitespace-only tokens
words = [w for w in jieba.lcut(text) if w.strip() and w not in stop]

# Count each word in one pass
counts = {}
for w in words:
    counts[w] = counts.get(w, 0) + 1

# Slot 1 of each final_dict entry holds the count for file 2
for w, n in counts.items():
    final_dict.setdefault(w, [0, 0, 0])[1] = n

d = sorted(counts.items(), key=lambda item: item[1], reverse=True)  # sort by count
cnt = sum(n for _, n in d)
print("Total word count of this document:", cnt)
print("Frequencies of the 20 most common words:")
for word, n in d[:20]:
    print(word, ":", n, "/", cnt)

# Save as .csv
pd.DataFrame(data=d).to_csv('count2.csv', encoding='utf-8')

########## File 3 ##########

# Read the text
with open(r"D:\dataset\文件3.txt", "r", encoding="utf-8") as f:
    text = f.read()

# Segment the text, then drop stop words and whitespace-only tokens
words = [w for w in jieba.lcut(text) if w.strip() and w not in stop]

# Count each word in one pass
counts = {}
for w in words:
    counts[w] = counts.get(w, 0) + 1

# Slot 2 of each final_dict entry holds the count for file 3
for w, n in counts.items():
    final_dict.setdefault(w, [0, 0, 0])[2] = n

d = sorted(counts.items(), key=lambda item: item[1], reverse=True)  # sort by count
cnt = sum(n for _, n in d)
print("Total word count of this document:", cnt)
print("Frequencies of the 20 most common words:")
for word, n in d[:20]:
    print(word, ":", n, "/", cnt)
pd.DataFrame(data=d).to_csv('count3.csv', encoding='utf-8')

# Sort all words by their total count across the three files
f_dict = sorted(final_dict.items(), key=lambda item: sum(item[1]), reverse=True)

# Copy the inner lists as well; dict.copy() is shallow, so later edits to
# final_dict would otherwise leak into the copy used by k-means
final_dict_cpy = {w: c[:] for w, c in final_dict.items()}

# Save as .csv
pd.DataFrame(data=f_dict).to_csv('count_whole.csv', encoding='utf-8')
pd.DataFrame(data=final_dict).to_csv('all_data.csv', encoding='utf-8')

# The 20 words with the highest total count
print("Top 20 words by total count:")
for word, per_file in f_dict[:20]:
    print(word, ":", per_file)
print(final_dict)

# Hierarchical clustering module
import numpy as np
import pandas as pd
from matplotlib import pyplot as plt
from scipy.cluster.hierarchy import dendrogram, linkage
from sklearn import preprocessing
from sklearn.cluster import AgglomerativeClustering

# Data preparation
# all_data.csv has one column per word and one row per document.
# Reading it directly avoids the .xlsx round trip: xlrd 2.x no longer opens
# .xlsx files and pandas 2.x removed ExcelWriter.save().
df_new = pd.read_csv('all_data.csv', index_col=0)

# Build the data matrix: one row per word, one column per document
stats = pd.DataFrame(df_new.T.to_numpy())

# Standardize each column. The standardized frame is only printed for
# inspection; the clustering further down runs on the raw counts in stats.
stats_frame = pd.DataFrame(stats)
normalizer = preprocessing.scale(stats_frame)
stats_frame_nomalized = pd.DataFrame(normalizer)
print(stats_frame)
print(stats_frame_nomalized)
# End of the data matrix output
print("_____________")

# z = linkage(stats, "average", metric='euclidean', optimal_ordering=True)
# print(z)
# print("_____________")
## Linkage methods: average = group average, ward = Ward's sum of squared deviations,
## sin = single (nearest neighbor), com = complete (farthest neighbor), med = median,
## cen = centroid, fle = flexible group average.
## scipy spells the names out ('single', 'complete', 'median', 'centroid') and has no flexible method.
# fig, ax = plt.subplots(figsize=(20, 20))
# dendrogram(z, leaf_font_size=1)  # draw the dendrogram
## plt.axhline(y=4)  # draw a cut line
## plt.show()
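The script calls `preprocessing.scale`, which standardizes each column to zero mean and unit variance. A minimal pure-Python sketch of that computation, assuming the population standard deviation and leaving constant columns unscaled; `scale_columns` and the sample rows are hypothetical and not part of the script:

```python
import statistics

def scale_columns(rows):
    """Standardize each column to zero mean and unit (population) variance."""
    columns = list(zip(*rows))
    means = [statistics.fmean(col) for col in columns]
    # A constant column has zero deviation; divide by 1.0 instead of 0.
    stds = [statistics.pstdev(col) or 1.0 for col in columns]
    return [[(v - m) / s for v, m, s in zip(row, means, stds)] for row in rows]

# Hypothetical counts: three words (rows) in three documents (columns).
rows = [[2, 0, 1], [4, 0, 3], [6, 0, 5]]
scaled = scale_columns(rows)
for row in scaled:
    print([round(v, 3) for v in row])
```

Scaling matters when one document is much longer than the others, because its raw counts would otherwise dominate the Euclidean distances.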

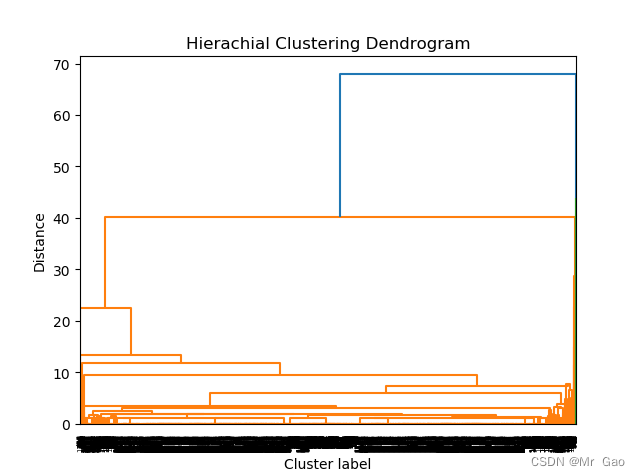

## Visualization
print(stats)
Z = linkage(stats, method='median', metric='euclidean')
p = dendrogram(Z)
plt.title("Hierarchical Clustering Dendrogram")
plt.xlabel("Cluster label")
plt.ylabel("Distance")
plt.show()

# The linkage mode and n_clusters can be adjusted.
# Euclidean distance is the default, so the distance argument is omitted;
# its keyword was renamed from affinity to metric in newer scikit-learn releases.
cluster = AgglomerativeClustering(n_clusters=3, linkage='average')
res = cluster.fit_predict(stats)  # fit once and reuse the labels
print(res)
for i in res:
    print(i, end=",")

plt.figure(figsize=(10, 7))
plt.scatter(stats_frame[0], stats_frame[1], c=res)
plt.show()

# Save the results: each word's three counts followed by its cluster label.
# New lists are built so that final_dict and final_dict_cpy stay unchanged.
result = {word: per_file[:3] + [int(label)] for (word, per_file), label in zip(final_dict.items(), res)}
pd.DataFrame(data=result).to_csv('result_hierarchicalClustering.csv', encoding='utf-8')
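To make the average-linkage setting used above concrete, here is a naive pure-Python sketch of agglomerative clustering. It illustrates the idea and is not how scikit-learn implements it; the function `average_linkage` and the sample vectors are hypothetical.

```python
import math

def average_linkage(points, n_clusters):
    """Naive agglomerative clustering with average linkage (cubic time)."""
    clusters = [[i] for i in range(len(points))]  # start with singletons

    def avg_dist(a, b):
        # Mean Euclidean distance over all cross-cluster point pairs.
        total = sum(math.dist(points[i], points[j]) for i in a for j in b)
        return total / (len(a) * len(b))

    while len(clusters) > n_clusters:
        # Find the closest pair of clusters and merge it.
        _, i, j = min(
            (avg_dist(clusters[i], clusters[j]), i, j)
            for i in range(len(clusters))
            for j in range(i + 1, len(clusters))
        )
        clusters[i] = clusters[i] + clusters[j]
        del clusters[j]

    labels = [0] * len(points)
    for label, members in enumerate(clusters):
        for idx in members:
            labels[idx] = label
    return labels

# Hypothetical term vectors: counts of five words in three documents.
vectors = [[9, 8, 9], [8, 9, 8], [1, 0, 1], [0, 1, 0], [5, 0, 0]]
labels = average_linkage(vectors, n_clusters=3)
print(labels)
```

Words that are frequent in all three documents end up together, rare words form a second group, and the word that is frequent in only one document stays on its own.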

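# K-means module
import numpy as np
import pandas as pd
import matplotlib.pyplot as plt
from mpl_toolkits.mplot3d import Axes3D  # registers the 3D projection on older Matplotlib
from sklearn.cluster import KMeans
from sklearn.metrics import silhouette_score

colo = ['b', 'g', 'r', 'c', 'm', 'y', 'k']

# One row per word; keep only the three per-document counts
x = np.array([per_file[:3] for per_file in final_dict_cpy.values()])
# print(x)

fig = plt.figure(figsize=(12, 8))
# Axes3D(fig) no longer attaches itself to the figure in newer Matplotlib releases
ax = fig.add_subplot(projection='3d')
ax.view_init(elev=30, azim=20)
shape = x.shape
sse = []
score = []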

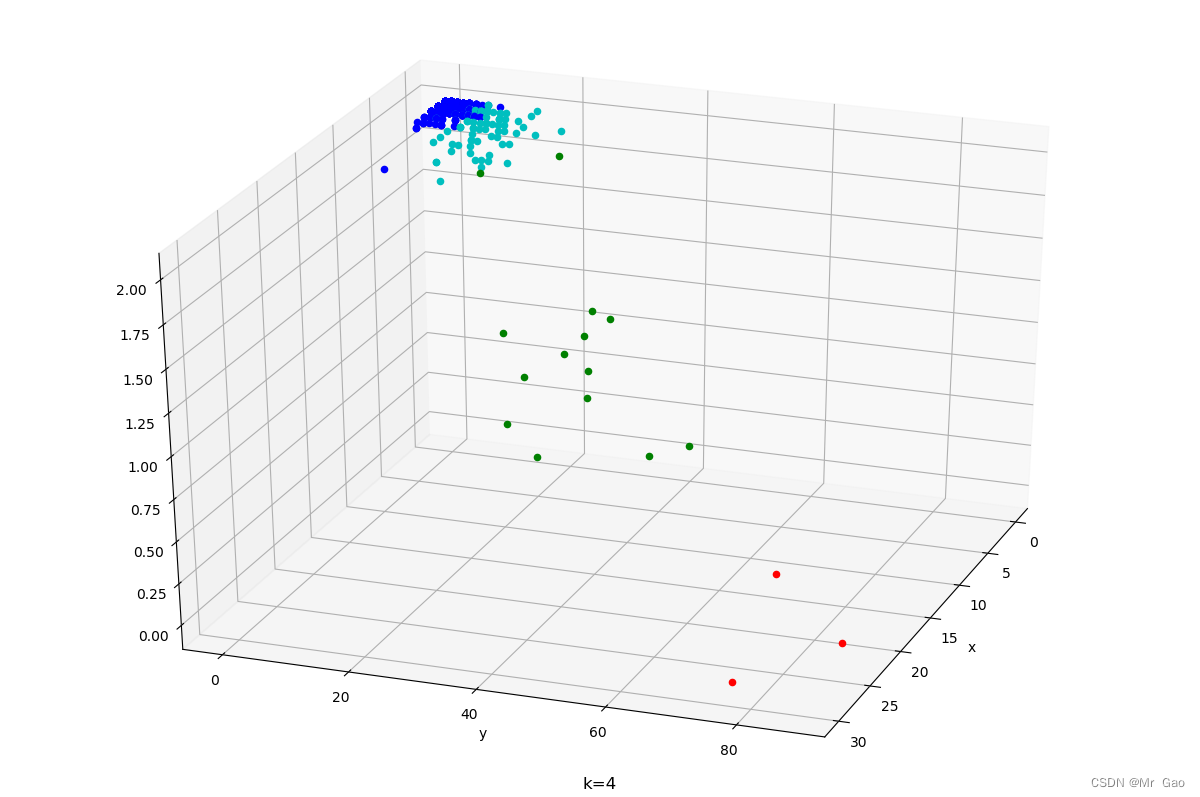

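K = 4  # number of clusters
for k in [K]:
    # n_init=10 pins the number of restarts, whose default differs between scikit-learn versions
    clf = KMeans(n_clusters=k, n_init=10)
    lab = clf.fit_predict(x)  # fit once and reuse the labels
    sse.append(clf.inertia_)
    score.append(silhouette_score(x, lab, metric='euclidean'))
    # Plot all words at once, colored by cluster
    ax.scatter(x[:, 0], x[:, 1], x[:, 2], c=[colo[l] for l in lab])
    ax.set_xlabel('x')
    ax.set_ylabel('y')
    ax.set_title('k=' + str(k))
    plt.show()

# Save the results: each word's three counts followed by its cluster label.
# Refitting here could yield different labels than the ones plotted, so lab is reused.
result = {word: list(per_file[:3]) + [int(label)] for (word, per_file), label in zip(final_dict_cpy.items(), lab)}
pd.DataFrame(data=result).to_csv('result_k-means.csv', encoding='utf-8')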
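For readers who want to see what `KMeans` does internally, the following is a minimal pure-Python sketch of Lloyd's algorithm together with the sum of squared errors that scikit-learn exposes as `inertia_`. It makes one simplifying assumption: the first k points seed the centroids, whereas scikit-learn uses k-means++ initialization with restarts. The function `kmeans` and the sample points are hypothetical.

```python
import math

def kmeans(points, k, n_iter=100):
    """Lloyd's algorithm; the first k points seed the centroids."""
    centroids = [list(p) for p in points[:k]]
    labels = [0] * len(points)
    for _ in range(n_iter):
        # Assignment step: attach each point to its nearest centroid.
        new_labels = [
            min(range(k), key=lambda c: math.dist(p, centroids[c]))
            for p in points
        ]
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, l in zip(points, new_labels) if l == c]
            if members:  # keep the old centroid if a cluster is empty
                centroids[c] = [sum(col) / len(members) for col in zip(*members)]
        if new_labels == labels:  # assignments stopped changing
            break
        labels = new_labels
    # Sum of squared distances from each point to its centroid.
    sse = sum(math.dist(p, centroids[l]) ** 2 for p, l in zip(points, labels))
    return labels, centroids, sse

# Hypothetical term vectors: six words counted in three documents.
points = [[0, 0, 0], [10, 10, 10], [1, 0, 0], [0, 1, 0], [10, 9, 10], [9, 10, 10]]
labels, centroids, sse = kmeans(points, k=2)
print(labels, round(sse, 3))
```

Running the sketch for several values of k and comparing the resulting SSE values is the usual way to fill a list like `sse` in the script above.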

The results are as follows:
