p;?上篇文章分享了如何使用GPT-SoVITS實現一個HTTP服務器,并通過該服務器提供文本到語音(TTS)服務。今天,我們將進一步探討如何部署另一個強大的TTS模型——f5-tts。這個模型在自然語音生成方面表現出色,具有高度的可定制性和靈活性。通過這篇文章,我們將詳細介紹如何搭建f5-tts模型的環境,進行模型的配置,并通過HTTP服務器提供文本到語音服務,助力用戶更高效地集成到各種應用場景中。

1 部署及啟動F5-TTS服務器

1.1 項目下載及根據來源

這里就不贅述咋下載F5-TTS這個項目了,如果有不知道的兄弟可以看我上一篇文章:

TTS語音合成|盤點兩款主流TTS模型,F5-TTS和GPT-SoVITS

需要注意的是,這里f5-tts官方并沒有給我們實現api接口的http服務器,需要基于另一個項目去實現HTTP服務器的搭建,另一個項目地址在:f5-tts-api

可以看到,f5-tts-api這個項目提供了三種部署方式,實際上只有兩種部署方式。前兩種方式都使用了整合包里面的Python環境和模型,而第三種則是使用f5-tts官網的Python環境和模型。我嘗試過這三種方式,發現由于模型版本的差異,前兩種方式合成的語音中會出現雜音,而使用官網提供的環境和模型合成的語音則非常清晰,沒有雜音。這可能是由于整合包中的模型版本與官網最新版本存在一定的差異,影響了語音的質量,所以這里重點介紹第三種部署方式。

1.2 需要文件及代碼

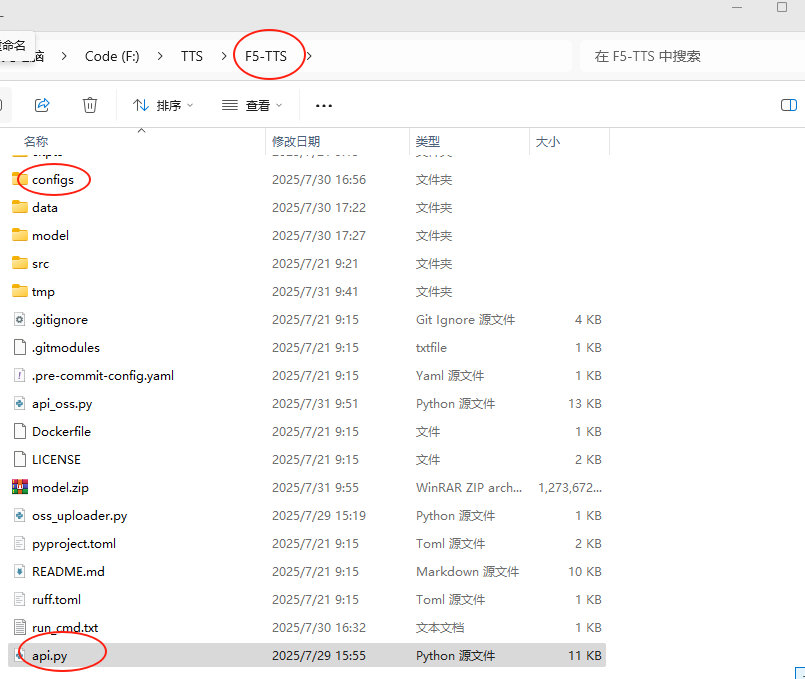

這里的項目F5-TTS是官網項目,configs和api.py是f5-tts-api這個項目中的文件。

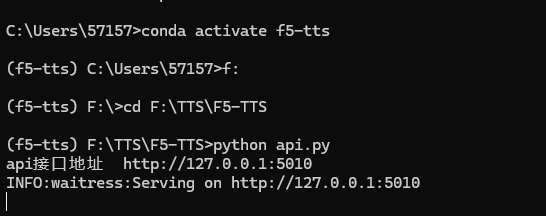

1.3 啟動服務命令

# 這個環境是f5-tts官網環境

conda activate f5-tts

pip install flask waitress

pip install Flask-Cors

cd F:\TTS\F5-TTS

python api.py

啟動起來大概是這樣的:

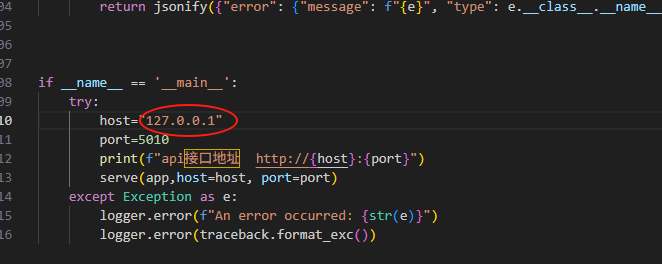

可以看到IP是127.0.0.1,只允許本地訪問,如果想要局域網內訪問這個服務,或者映射出去,需要到api.py里面修改ip,如下圖所示:

將ip改為0.0.0.0即可。

2 客戶端請求部署的TTS服務器

關于請求,f5-tts-api這個項目給了兩種請求方式,分別是API 使用示例接口和 兼容openai tts接口

2.1 API 使用示例

import requestsres=requests.post('http://127.0.0.1:5010/api',data={"ref_text": '這里填寫 1.wav 中對應的文字內容',"gen_text": '''這里填寫要生成的文本。''',"model": 'f5-tts'

},files={"audio":open('./1.wav','rb')})if res.status_code!=200:print(res.text)exit()with open("ceshi.wav",'wb') as f:f.write(res.content)

2.2 兼容openai tts接口

import requests

import json

import os

import base64

import structfrom openai import OpenAIclient = OpenAI(api_key='12314', base_url='http://127.0.0.1:5010/v1')

with client.audio.speech.with_streaming_response.create(model='f5-tts',voice='1.wav###你說四大皆空,卻為何緊閉雙眼,若你睜開眼睛看看我,我不相信你,兩眼空空。',input='你好啊,親愛的朋友們',speed=1.0) as response:with open('./test.wav', 'wb') as f:for chunk in response.iter_bytes():f.write(chunk)2.3 測試

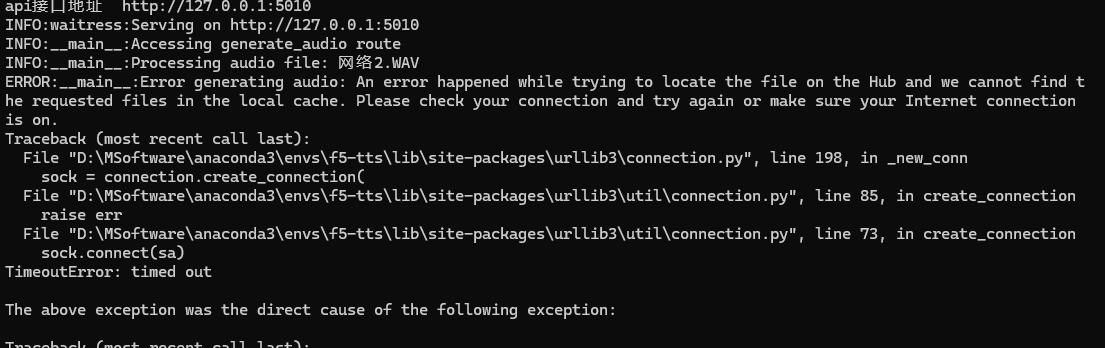

使用API 使用示例,可以看到,不出所料的報錯了😴:

原因很簡單,你沒科學上網,Hugging Face被墻了,繼續往下看解決辦法。

3 下載模型及修改api.py

3.1 查看需要模型

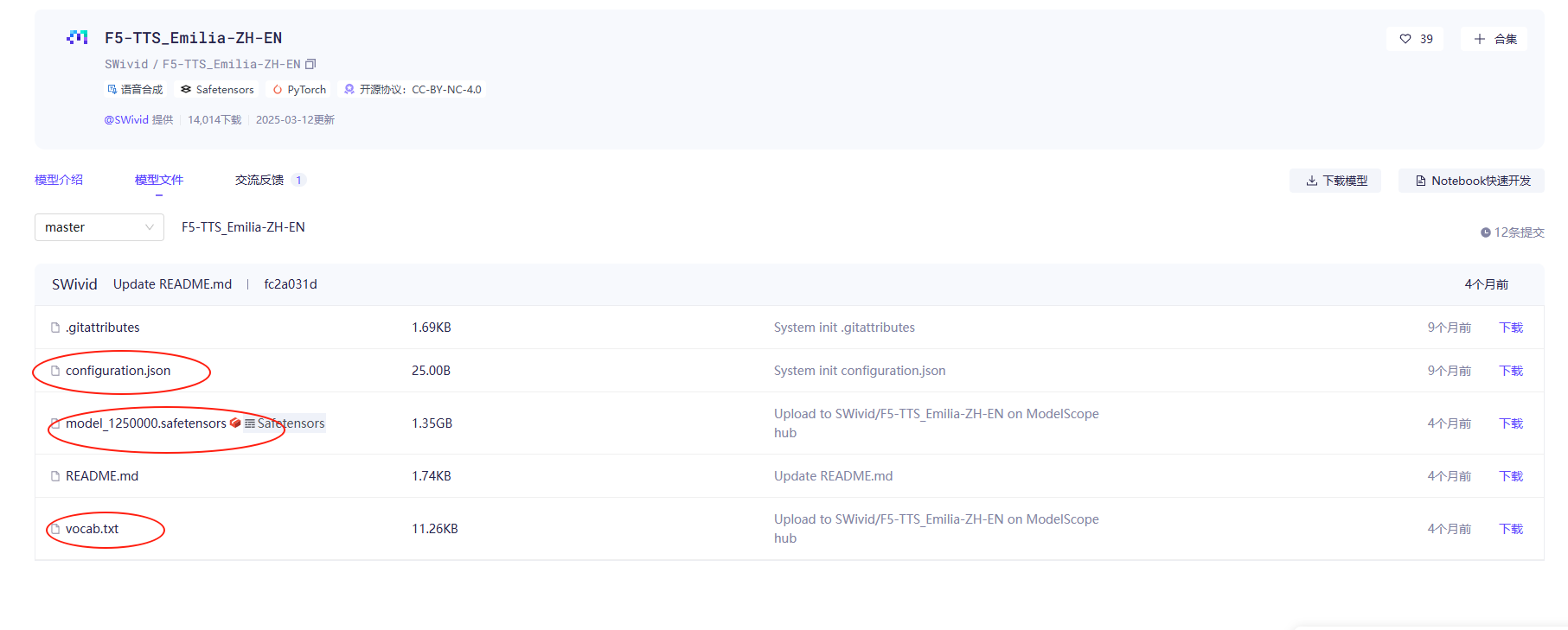

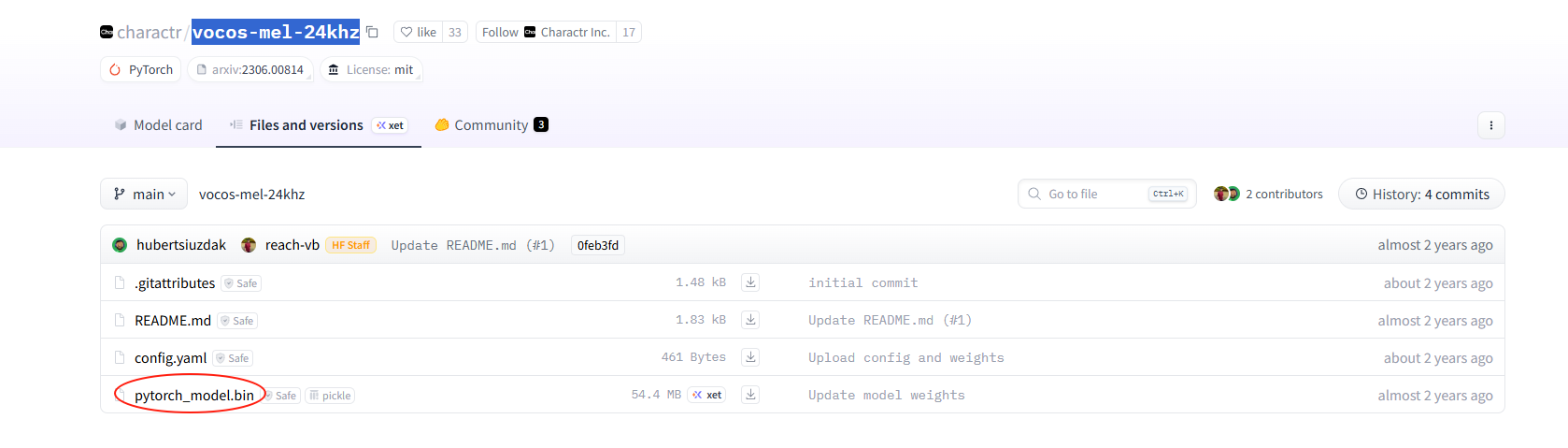

這里直接給你掠過,有興趣的兄弟們可以自行查看api.py,直接說結論,需要兩個模型,一個是TTS模型(F5-TTS_Emilia-ZH-EN

),一個是頻譜合成模型(vocos-mel-24khz)。

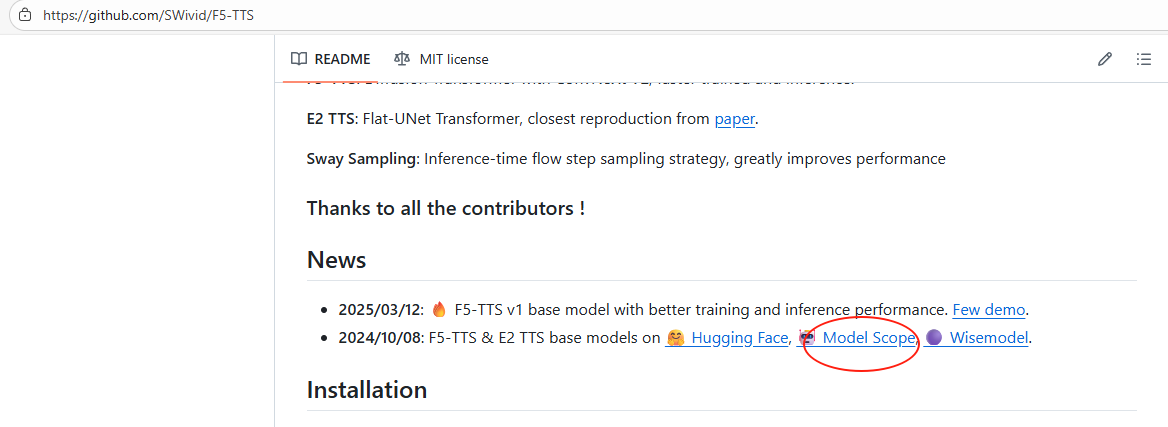

F5-TTS_Emilia-ZH-EN下載位置在:

*vocos-mel-24khz我在modelscope沒有找到,Hugging Face上倒是有:

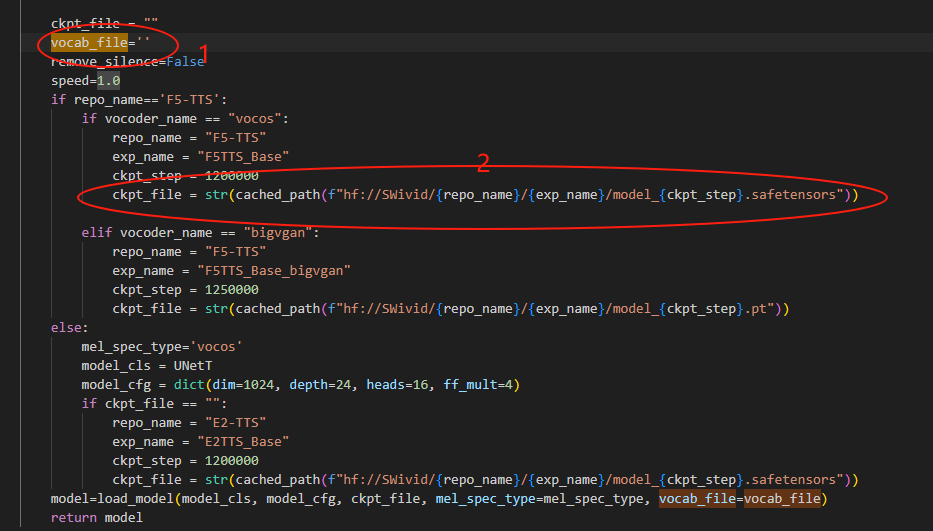

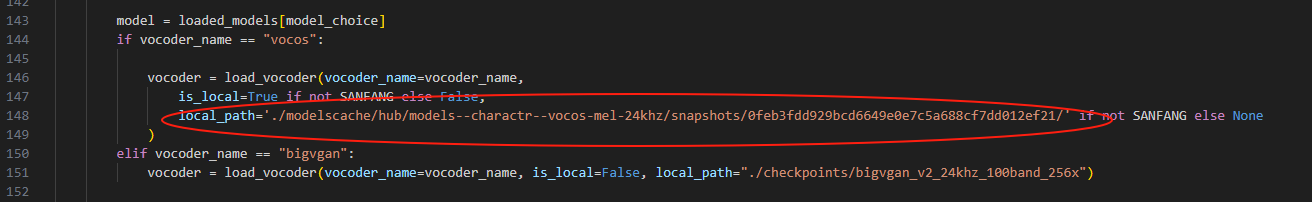

3.2 api.py代碼修改位置

上圖位置1改成vocab.txt位置,位置2改成F5-TTS_Emilia-ZH-EN模型位置。

上圖改成vocos-mel-24khz模型位置。

4 代碼優化

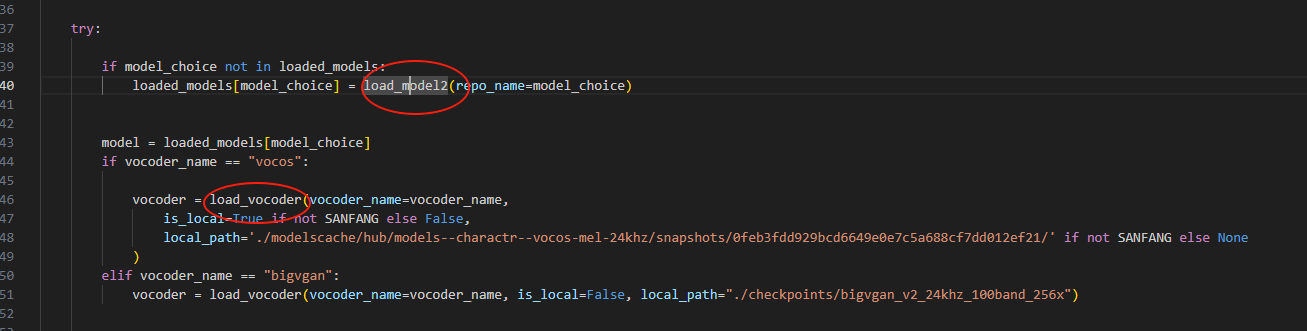

我發現每次推理時,都需要加載一遍模型,可能是為了節省資源:

我這里先把模型加載進來,避免每次都加載一遍模型,并且將參考音頻和參考文字都放在了服務端,并且將生成的音頻上傳到oss服務器,做成網絡音頻流。

具體代碼實現:

import os,time,sys

from pathlib import Path

ROOT_DIR=Path(__file__).parent.as_posix()# ffmpeg

if sys.platform == 'win32':os.environ['PATH'] = ROOT_DIR + f';{ROOT_DIR}\\ffmpeg;' + os.environ['PATH']

else:os.environ['PATH'] = ROOT_DIR + f':{ROOT_DIR}/ffmpeg:' + os.environ['PATH']SANFANG=True

if Path(f"{ROOT_DIR}/modelscache").exists():SANFANG=Falseos.environ['HF_HOME']=Path(f"{ROOT_DIR}/modelscache").as_posix()import re

import torch

from torch.backends import cudnn

import torchaudio

import numpy as np

from flask import Flask, request, jsonify, send_file, render_template

from flask_cors import CORS

from einops import rearrange

from vocos import Vocos

from pydub import AudioSegment, silencefrom cached_path import cached_pathimport soundfile as sf

import io

import tempfile

import logging

import traceback

from waitress import serve

from importlib.resources import files

from omegaconf import OmegaConffrom f5_tts.infer.utils_infer import (infer_process,load_model,load_vocoder,preprocess_ref_audio_text,remove_silence_for_generated_wav,

)

from f5_tts.model import DiT, UNetT

from oss_uploader import upload_to_oss

import requests

TMPDIR=(Path(__file__).parent/'tmp').as_posix()

Path(TMPDIR).mkdir(exist_ok=True)# Set up logging

logging.basicConfig(level=logging.INFO)

logger = logging.getLogger(__name__)app = Flask(__name__, template_folder='templates')

CORS(app)# --------------------- Settings -------------------- #audio_info = { # 字典定義26: {"audio_name": "data/cqgyq.wav", "ref_text": "111" ,"audio_speed": 1.3},25: {"audio_name": "data/shwd.wav", "ref_text": "222","audio_speed": 1.1},24: {"audio_name": "data/network8.wav", "ref_text": "333","audio_speed": 1},23: {"audio_name": "data/network4.wav", "ref_text": "444","audio_speed": 1.1},22: {"audio_name": "data/network2.wav", "ref_text": "555","audio_speed": 0.9},

}def get_audio_info(audio_id):# 判斷 audio_id 是否是整數if not isinstance(audio_id, int):try:# 嘗試將 audio_id 轉換為整數audio_id = int(audio_id)except ValueError:# 如果轉換失敗,輸出錯誤信息并返回默認值print(f"音頻ID {audio_id} 不是有效的整數,無法轉換。")return None, None, None# 判斷 audio_id 是否在字典中if audio_id in audio_info:audio_data = audio_info[audio_id]return audio_data["audio_name"], audio_data["ref_text"], audio_data["audio_speed"]else:print(f"音頻ID {audio_id} 在字典中沒有找到。")return None, None, None# Add this near the top of the file, after other imports

UPLOAD_FOLDER = 'data'

if not os.path.exists(UPLOAD_FOLDER):os.makedirs(UPLOAD_FOLDER)def get_model_and_vocoder(model_dir, vocab_dir, vcoder_dir):# mel_spec_type = vocoder_namemodel_cfg = f"{ROOT_DIR}/configs/F5TTS_Base_train.yaml"model_cfg = OmegaConf.load(model_cfg).model.archmodel = load_model(DiT, model_cfg, model_dir, mel_spec_type='vocos', vocab_file=vocab_dir)vocoder = load_vocoder(vocoder_name='vocos',is_local=True,local_path=vcoder_dir)return model, vocodermodel, vocoder = get_model_and_vocoder(f"{ROOT_DIR}/model/model_1250000.safetensors", f'{ROOT_DIR}/model/vocab.txt',f'{ROOT_DIR}/model/models--charactr--vocos-mel-24khz/snapshots/0feb3fdd929bcd6649e0e7c5a688cf7dd012ef21/')@app.route('/api', methods=['POST'])

def api():logger.info("Accessing generate_audio route")# 打印所有請求數據if request.is_json:data = request.get_json()gen_text = data['text']voice_id = data['anchor_id']ref_audio_path = data['ref_audio_path']logger.info("Received JSON data: %s", data)else:data = request.form.to_dict() logger.info("Received form data: %s", data)gen_text = request.form.get('text')voice_id = request.form.get('anchor_id')ref_audio_path = request.form.get('ref_audio_path')remove_silence = int(request.form.get('remove_silence',0))# 自定義speed# speed = float(request.form.get('speed',1.0))print("===========voice_id: ", voice_id, type(voice_id))audio_name, ref_text, audio_speed = get_audio_info(voice_id)print("================get audio_name: ",audio_name)print("================get ref_text :",ref_text)print("================get audio_speed :",audio_speed)print("================get ref_audio_path :",ref_audio_path)if not all([audio_name, ref_text, gen_text]): # Include audio_filename in the checkreturn jsonify({"error": "Missing required parameters"}), 400speed = audio_speed# 調用接口查詢是否存在記錄check_url = "xxx/existVoice"try:response = requests.post(check_url, data={"voice_text": gen_text, 'anchor_id': voice_id})if response.status_code == 200:result = response.json()if result.get("code") == 200 and result.get("data"):# 如果接口返回 code 為 200 且 data 有值,表示有記錄return {"code": 200, "oss_url": result.get("data")}except Exception as e:return {"code": 400, "message": "Request error", "Exception": str(e)}print("=======================================Check Success!!!")try:main_voice = {"ref_audio": audio_name, "ref_text": ref_text}voices = {"main": main_voice}for voice in voices:voices[voice]["ref_audio"], voices[voice]["ref_text"] = preprocess_ref_audio_text(voices[voice]["ref_audio"], voices[voice]["ref_text"])print("Voice:", voice)print("Ref_audio:", voices[voice]["ref_audio"])print("Ref_text:", voices[voice]["ref_text"])generated_audio_segments = []reg1 = r"(?=\[\w+\])"chunks = re.split(reg1, gen_text)reg2 = r"\[(\w+)\]"for text in chunks:if not text.strip():continuematch = re.match(reg2, text)if match:voice = match[1]else:print("No voice tag found, using main.")voice = "main"if voice not in voices:print(f"Voice {voice} not found, using main.")voice = "main"text = re.sub(reg2, "", text)gen_text = text.strip()ref_audio = voices[voice]["ref_audio"]ref_text = voices[voice]["ref_text"]print(f"Voice: {voice}")# 語音生成audio, final_sample_rate, spectragram = infer_process(ref_audio, ref_text, gen_text, model, vocoder, mel_spec_type='vocos', speed=speed)generated_audio_segments.append(audio)# if generated_audio_segments:final_wave = np.concatenate(generated_audio_segments)# 使用BytesIO在內存中處理音頻文件with io.BytesIO() as audio_buffer:# 將音頻寫入內存緩沖區sf.write(audio_buffer, final_wave, final_sample_rate, format='wav')if remove_silence == 1:# 如果需要去除靜音,需要先將數據加載到pydub中處理audio_buffer.seek(0) # 回到緩沖區開頭sound = AudioSegment.from_file(audio_buffer, format="wav")# 去除靜音sound = remove_silence_from_audio(sound) # 假設有這個函數# 將處理后的音頻重新寫入緩沖區audio_buffer.seek(0)audio_buffer.truncate()sound.export(audio_buffer, format="wav")# 上傳到OSSaudio_buffer.seek(0) # 回到緩沖區開頭以便讀取oss_url = upload_to_oss(audio_buffer.read(), "wav") # 上傳到 OSSprint(f"Uploaded to OSS: {oss_url}")# 將返回的 oss_url 插入數據庫insert_url = "xxx/insertVoice"try:insert_response = requests.post(insert_url, data={"voice_text": gen_text,"voice_type": 1,"oss_url": oss_url,"anchor_id": voice_id,"type": 1})if insert_response.status_code == 200:# 插入成功,返回生成的 oss_urlreturn {"code": 200, "oss_url": oss_url}else:# 如果插入失敗,返回錯誤信息return {"code": 400, "message": "Failed to insert oss_url into database"}except Exception as e:return {"code": 500, "message": "Error during TTS processing", "Exception": str(e)}# 返回 OSS URL# return {"code": 200, "oss_url": oss_url}# print("==========audio_file.filename: ",audio_file.filename)# return send_file(wave_path, mimetype="audio/wav", as_attachment=True, download_name="aaa.wav")except Exception as e:logger.error(f"Error generating audio: {str(e)}", exc_info=True)return jsonify({"error": str(e)}), 500 if __name__ == '__main__':try:# host="127.0.0.1"host="0.0.0.0"port=5010print(f"api接口地址 http://{host}:{port}")serve(app,host=host, port=port)except Exception as e:logger.error(f"An error occurred: {str(e)}")logger.error(traceback.format_exc())

總結

??本篇文章主要分享了如何使用F5-TTS實現一個HTTP服務器,并通過該服務器提供文本到語音(TTS)服務。通過搭建這個服務器,用戶可以方便地通過API接口進行文本轉語音的請求。文章詳細介紹了如何配置和運行該服務器,如何處理API請求,并展示了如何利用該服務將文本轉換為自然流暢的語音輸出。此外,文中還探討了如何優化服務器的性能,確保高效的文本轉語音處理,同時提供了相關的錯誤處理機制,確保用戶體驗的穩定性與可靠性。通過實現這個TTS服務,用戶能夠輕松將文本信息轉化為語音形式,廣泛應用于語音助手、自動化客戶服務以及各類語音交互系統中。

)

:Memory)

:組合數據類型——元組類型:創建元組)

)

網絡協議封裝)

)