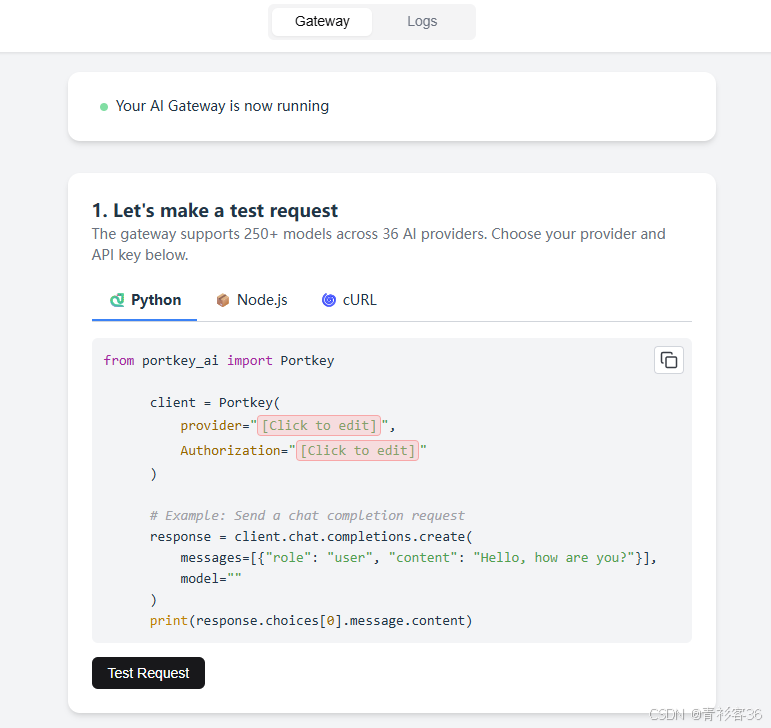

I recently set up the Portkey AI Gateway (a model-routing gateway) locally and then tested it the way the documentation describes.

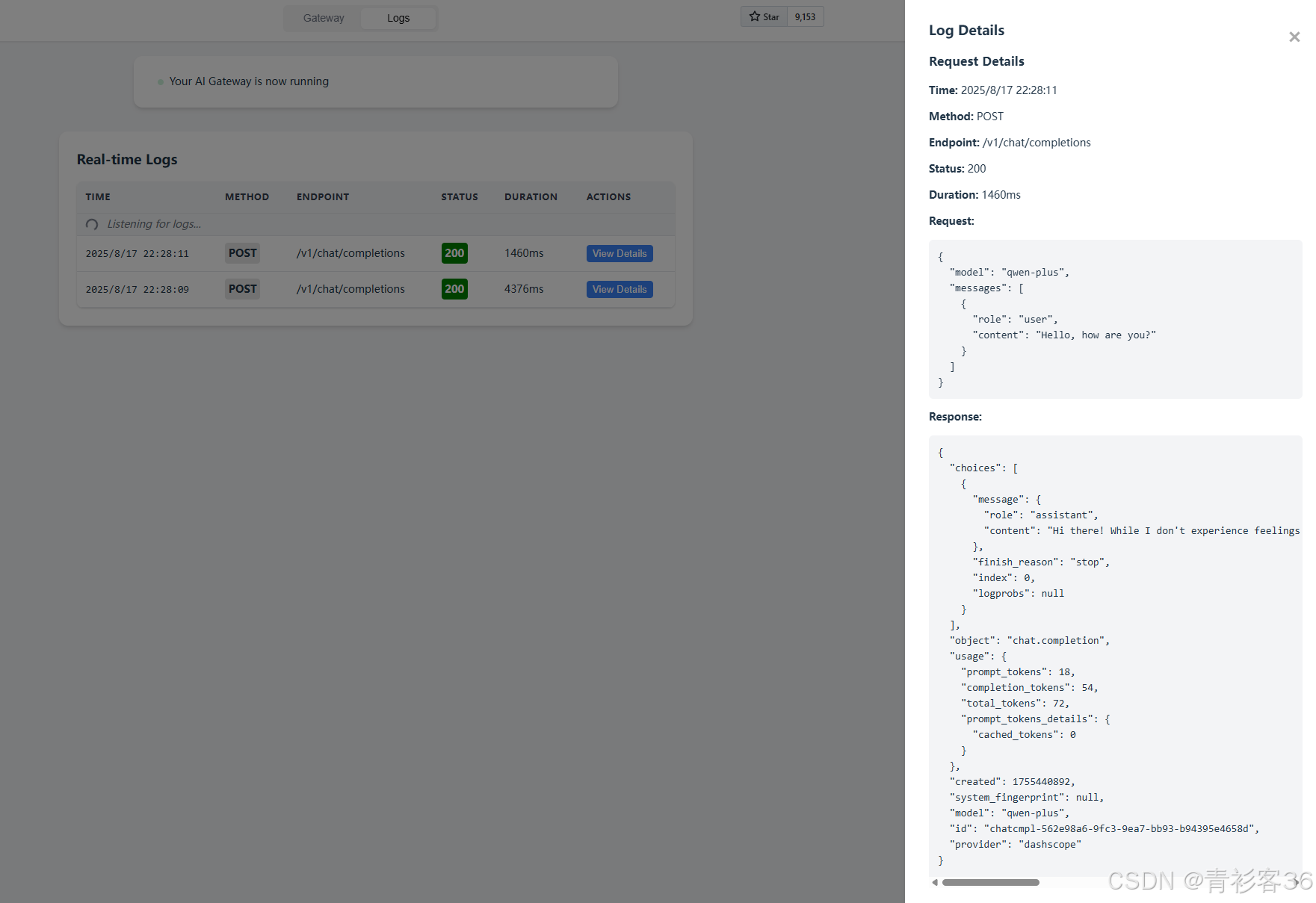

The gateway did receive the request, but the Python test program failed with an error.

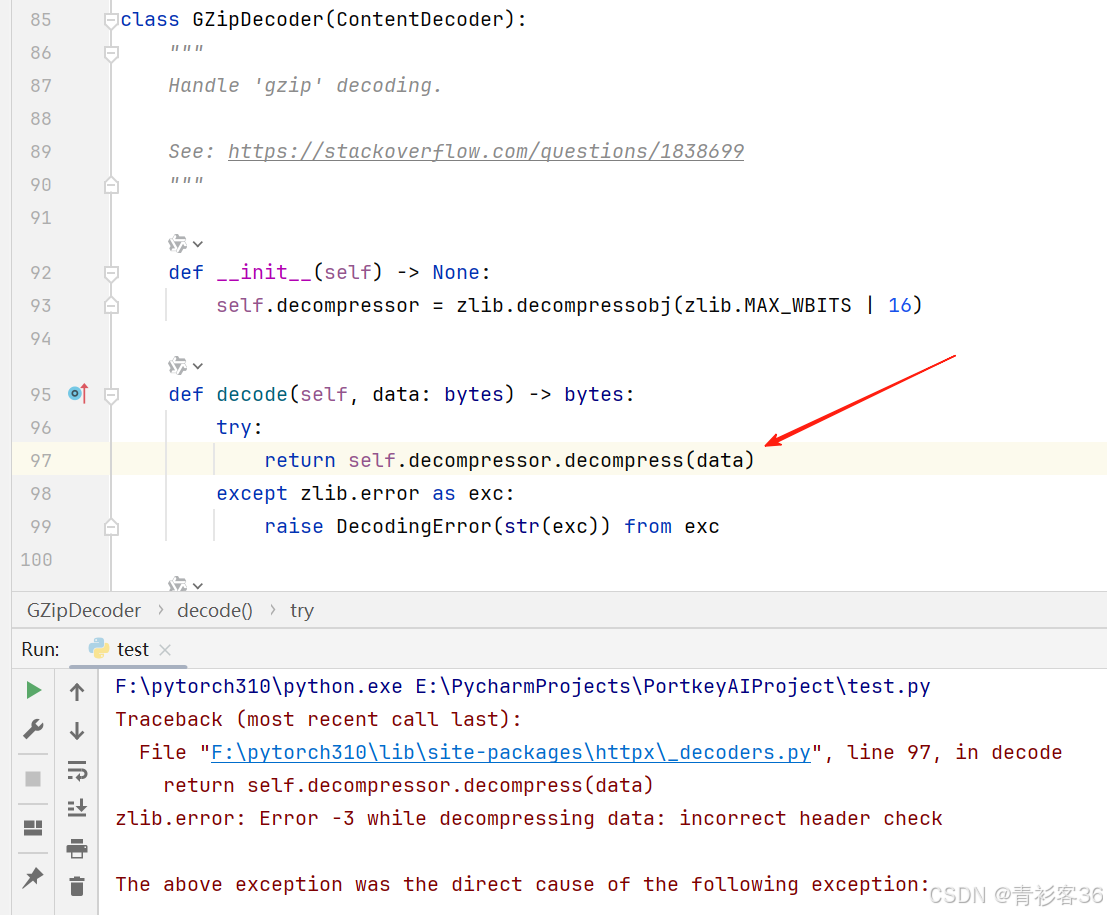

The Python error output was:

```
Traceback (most recent call last):
  File "F:\pytorch310\lib\site-packages\httpx\_decoders.py", line 97, in decode
    return self.decompressor.decompress(data)
zlib.error: Error -3 while decompressing data: incorrect header check

The above exception was the direct cause of the following exception:

Traceback (most recent call last):
  File "F:\pytorch310\lib\site-packages\portkey_ai\_vendor\openai\_base_client.py", line 989, in request
    response = self._client.send(
  File "F:\pytorch310\lib\site-packages\httpx\_client.py", line 928, in send
    raise exc
  File "F:\pytorch310\lib\site-packages\httpx\_client.py", line 922, in send
    response.read()
  File "F:\pytorch310\lib\site-packages\httpx\_models.py", line 881, in read
    self._content = b"".join(self.iter_bytes())
  File "F:\pytorch310\lib\site-packages\httpx\_models.py", line 898, in iter_bytes
    decoded = decoder.decode(raw_bytes)
  File "F:\pytorch310\lib\site-packages\httpx\_decoders.py", line 99, in decode
    raise DecodingError(str(exc)) from exc
httpx.DecodingError: Error -3 while decompressing data: incorrect header check

The above exception was the direct cause of the following exception:

Traceback (most recent call last):
  File "E:\PycharmProjects\PortkeyAIProject\test.py", line 10, in <module>
    response = client.chat.completions.create(
  File "F:\pytorch310\lib\site-packages\portkey_ai\api_resources\apis\chat_complete.py", line 183, in create
    return self.normal_create(
  File "F:\pytorch310\lib\site-packages\portkey_ai\api_resources\apis\chat_complete.py", line 126, in normal_create
    response = self.openai_client.with_raw_response.chat.completions.create(
  File "F:\pytorch310\lib\site-packages\portkey_ai\_vendor\openai\_legacy_response.py", line 364, in wrapped
    return cast(LegacyAPIResponse[R], func(*args, **kwargs))
  File "F:\pytorch310\lib\site-packages\portkey_ai\_vendor\openai\_utils\_utils.py", line 287, in wrapper
    return func(*args, **kwargs)
  File "F:\pytorch310\lib\site-packages\portkey_ai\_vendor\openai\resources\chat\completions\completions.py", line 925, in create
    return self._post(
  File "F:\pytorch310\lib\site-packages\portkey_ai\_vendor\openai\_base_client.py", line 1259, in post
    return cast(ResponseT, self.request(cast_to, opts, stream=stream, stream_cls=stream_cls))
  File "F:\pytorch310\lib\site-packages\portkey_ai\_vendor\openai\_base_client.py", line 1021, in request
    raise APIConnectionError(request=request) from err
openai.APIConnectionError: Connection error.
```

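I then traced the error to its source and found that the failure happened during gzip decoding.

The symptom is `incorrect header check`. The cause turned out to be that the gateway's response carried a `Content-Encoding: gzip` header while the body was actually plaintext JSON (a "fake compression header"). Any client that decompresses automatically (httpx, the Portkey SDK, and so on) fails while reading the response.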

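Fixing this at the root means making the gateway or reverse proxy keep header and body consistent: if the body is not compressed, remove `Content-Encoding`; if it is compressed, compress exactly once and set the correct header. But this gateway is an open-source project that I was simply running as-is, so I fell back on a temporary workaround: have the client read the raw bytes with `httpx.stream(...).iter_raw()` and parse them manually.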
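As a rough illustration of the client-side workaround, the sketch below parses a body without trusting `Content-Encoding`. It assumes the raw bytes have already been collected (for example from `iter_raw()`); the helper name `decode_json_body` and the magic-byte check are my own illustrative choices, not part of httpx or the Portkey SDK.

```python
import gzip
import json

GZIP_MAGIC = b"\x1f\x8b"  # the first two bytes of every gzip stream


def decode_json_body(raw: bytes) -> dict:
    """Parse a JSON body, decompressing only if the bytes really are gzip.

    Hypothetical helper: the Content-Encoding header is deliberately
    ignored, because in this scenario it cannot be trusted.
    """
    if raw[:2] == GZIP_MAGIC:
        raw = gzip.decompress(raw)
    return json.loads(raw.decode("utf-8"))


if __name__ == "__main__":
    plain = b'{"object": "chat.completion"}'
    # Both the plaintext body and a genuinely gzipped body parse the same way.
    print(decode_json_body(plain))
    print(decode_json_body(gzip.compress(plain)))
```

Sniffing the bytes instead of reading the header is only reasonable as a stopgap; it hides the server's inconsistency rather than fixing it.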

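Reproduction and first round of attempts

1) First attempt: connecting to the provider directly with the Portkey SDK

```python
from portkey_ai import Portkey

client = Portkey(provider="dashscope", Authorization="Bearer <key>")

client.chat.completions.create(model="qwen-plus", messages=[...])
```

Symptom: `httpx.DecodingError`.

Hunch: the failure is in the decompression stage, so the compression-related headers are the first thing to check.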

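2) Switching to the local gateway via base_url

```python
Portkey(base_url="http://localhost:8787/v1")
```

In this setup the SDK's `provider=` argument is not automatically turned into a routing header that the gateway recognizes, so the gateway returns:

```
400 {'status': 'failure', 'message': 'Either x-portkey-config or x-portkey-provider header is required'}
```

Conclusion: when going through a self-hosted gateway, the route must be specified with a request header (`x-portkey-provider`).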
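A minimal sketch of building those headers explicitly is shown here. The helper names `build_gateway_headers` and `has_routing_header` are hypothetical; the header names come from the 400 message above, and the check only mirrors the wording of that message, not the gateway's actual implementation.

```python
ROUTING_HEADERS = ("x-portkey-config", "x-portkey-provider")


def build_gateway_headers(provider: str, api_key: str) -> dict:
    """Build request headers for a self-hosted gateway (hypothetical helper)."""
    if not provider:
        raise ValueError("provider must not be empty")
    if not api_key:
        raise ValueError("api_key must not be empty")
    return {
        "x-portkey-provider": provider,
        "Authorization": f"Bearer {api_key}",
        "Content-Type": "application/json",
    }


def has_routing_header(headers: dict) -> bool:
    """Return True if at least one routing header named in the 400 error is set."""
    present = {name.lower() for name in headers}
    return any(name in present for name in ROUTING_HEADERS)


if __name__ == "__main__":
    headers = build_gateway_headers("dashscope", "<your_secret_key>")
    print(has_routing_header(headers))
    print(has_routing_header({"Authorization": "Bearer <your_secret_key>"}))
```

Running a check like this before sending makes a missing routing header fail locally instead of as a 400 from the gateway.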

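After adding the header, I still hit `DecodingError`. So the routing header was not the only problem; something was also wrong with compression and its headers.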

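The breakthrough: skip automatic decompression and look at the raw bytes on the wire

To rule out interference from the client's automatic behavior, use `httpx.stream(...).iter_raw()` directly: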

```python
import gzip
import io

import httpx

GATEWAY = "http://localhost:8787/v1"

payload = {"model": "qwen-plus", "messages": [{"role": "user", "content": "Hello"}]}

headers = {
    "x-portkey-provider": "dashscope",
    "Authorization": "Bearer <your_secret_key>",
    "Accept-Encoding": "identity",
    "Content-Type": "application/json",
}

with httpx.stream("POST", f"{GATEWAY}/chat/completions", json=payload, headers=headers, timeout=30) as r:
    print("status =", r.status_code)
    print("Content-Encoding =", r.headers.get("content-encoding"))
    raw = b"".join(r.iter_raw())  # no decompression: the raw bytes on the wire
    print("raw len =", len(raw))
    print("raw head =", raw[:80])
    # Case 1: the server sent plaintext JSON (but wrongly set Content-Encoding)
    try:
        print("as text ->", raw[:200].decode("utf-8"))
    except Exception:
        # Case 2: the body really is gzip, so decompress it manually
        try:
            txt = gzip.GzipFile(fileobj=io.BytesIO(raw)).read().decode("utf-8")
            print("as gzip json ->", txt[:200])
        except Exception as e:
            print("manual decode failed:", e)
```

Actual output (the key evidence):

```
status = 200
Content-Encoding = gzip
raw len = 448
raw head = b'{"choices":[{"message":{"role":"assistant","content":"Hi there! \xd9\xa9(\xe2\x97\x95\xe2\x80\xbf\xe2\x97\x95\xef\xbd\xa1)'
as text -> {"choices":[{"message":{"role":"assistant","content":"Hi there! ?(???。)? How can I assist you today?"},"finish_reason":"stop","index":0,"logprobs":null}],"object":"chat.completion","usage":{
```

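Conclusion: the response header claims gzip, but the body is actually plaintext JSON.

This is the "fake compression header." When httpx or the SDK sees `Content-Encoding: gzip`, it tries to decompress the body, and the result is, unsurprisingly, `incorrect header check`.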
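The error can be reproduced without any network call. The sketch below feeds plaintext JSON to a zlib decompressor configured for gzip; the traceback shows httpx's decoder raising `zlib.error`, but the exact `wbits` value used here (`zlib.MAX_WBITS | 16`, gzip-only mode) and the helper name `try_gzip_decode` are my assumptions for illustration.

```python
import gzip
import zlib


def try_gzip_decode(data: bytes):
    """Attempt a gzip decode; return (decoded_bytes, error_message)."""
    # wbits = MAX_WBITS | 16 tells zlib to expect a gzip header and trailer.
    decompressor = zlib.decompressobj(zlib.MAX_WBITS | 16)
    try:
        return decompressor.decompress(data) + decompressor.flush(), None
    except zlib.error as exc:
        return None, str(exc)


if __name__ == "__main__":
    body = b'{"choices":[{"message":{"role":"assistant","content":"Hi"}}]}'
    # Plaintext labelled as gzip: decoding fails at the header check.
    print(try_gzip_decode(body))
    # A genuinely gzipped body decodes back to the original bytes.
    print(try_gzip_decode(gzip.compress(body)))
```

The plaintext starts with `{` rather than the gzip magic bytes, so the decoder rejects it before reading any payload.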

Why does a "fake compression header" appear?

A few common failure chains:

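- The upstream response was gzip, but an intermediate layer decompressed the body and forgot to remove the `Content-Encoding` header;
- The upstream response was plaintext, but an intermediate layer "accidentally" added `Content-Encoding: gzip`;
- Double compression: the upstream response was already gzip, and the gateway compressed the same body a second time (so the header no longer matches the number of compression layers).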
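From the client side, the first two chains look identical (gzip header, plaintext body), while double compression looks like gzip wrapped in gzip. A small classifier along these lines can tell them apart; the function name `classify_response` and its labels are hypothetical and exist only for this sketch.

```python
import gzip
import zlib

GZIP_MAGIC = b"\x1f\x8b"  # the first two bytes of every gzip stream


def classify_response(content_encoding, raw: bytes) -> str:
    """Compare the declared encoding with what the body bytes look like."""
    declared = (content_encoding or "").strip().lower() == "gzip"
    looks_gzip = raw[:2] == GZIP_MAGIC
    if declared and not looks_gzip:
        return "fake gzip header"  # header says gzip, body is not gzip
    if not declared:
        return "unlabelled gzip" if looks_gzip else "consistent plaintext"
    try:
        inner = gzip.decompress(raw)
    except (OSError, EOFError, zlib.error):
        return "corrupt gzip"
    # A second gzip layer under a single declared layer means double compression.
    return "double gzip" if inner[:2] == GZIP_MAGIC else "consistent gzip"


if __name__ == "__main__":
    body = b'{"object": "chat.completion"}'
    print(classify_response("gzip", body))
    print(classify_response("gzip", gzip.compress(body)))
    print(classify_response("gzip", gzip.compress(gzip.compress(body))))
```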

Errors of this kind must be fixed on the server or gateway side. Changing only the client code treats the symptom, not the cause.
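What a server-side fix might look like, in very reduced form: before forwarding a response, an intermediate layer checks that the header matches the body and drops `Content-Encoding` when it does not. This is a sketch under my own assumptions (the function name `normalize_encoding_header` is hypothetical, and header names are lowercased for simplicity); it is not how the Portkey gateway is implemented.

```python
GZIP_MAGIC = b"\x1f\x8b"  # the first two bytes of every gzip stream


def normalize_encoding_header(headers: dict, body: bytes) -> dict:
    """Return a copy of headers whose Content-Encoding matches the body.

    Header names are lowercased in the returned dict.
    """
    fixed = {name.lower(): value for name, value in headers.items()}
    claims_gzip = fixed.get("content-encoding", "").strip().lower() == "gzip"
    if claims_gzip and body[:2] != GZIP_MAGIC:
        # The body is not gzip, so the header must not say it is.
        del fixed["content-encoding"]
    return fixed


if __name__ == "__main__":
    upstream_headers = {
        "Content-Type": "application/json",
        "Content-Encoding": "gzip",
    }
    print(normalize_encoding_header(upstream_headers, b'{"ok": true}'))
```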

Checklist: what to check the next time you hit a similar decompression error

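- Compare the two key headers: `Accept-Encoding` (request) vs `Content-Encoding` (response)
- Capture the raw bytes: `iter_raw()` → check whether the response is "plaintext + gzip header"
- Remove automatic decompression from the picture: temporarily switch the client to manual parsing
- Fix the server: if the body is not compressed, remove the header; if it is, compress exactly once and keep header and body consistent
- Self-hosted Portkey: when going through `base_url`, always send the `x-portkey-*` routing headers, and do not leave authentication empty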

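At this point the root cause has been identified and a temporary workaround is in place. The next step is to dig into the source code and see what is actually going on.

If you are interested, you can also read the project's source code (https://github.com/Portkey-AI/gateway) to see how it is implemented.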
