
AI Model API

Portable Model API across AI providers for Chat, Text to Image, Audio Transcription, Text to Speech, and Embedding models. Both synchronous and streaming API options are supported. Dropping down to access model-specific features is also supported.

AI models from OpenAI, Microsoft, Amazon (including Amazon Bedrock), Google, Hugging Face, and others are supported.

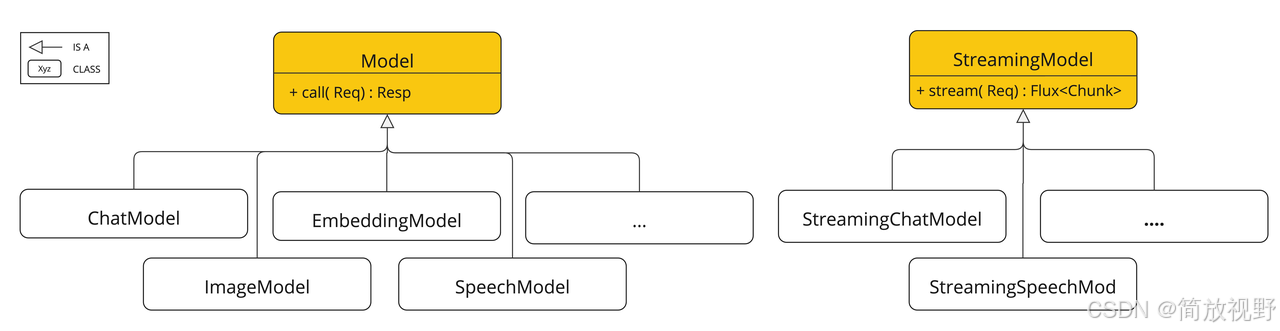

AI models are organized in three layers:

- Domain abstraction: Model, StreamingModel

- Capability abstraction: ChatModel, EmbeddingModel

- Provider implementation: OpenAiChatModel, VertexAiGeminiChatModel, AnthropicChatModel
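
The layering can be sketched with simplified stand-in types. This is a minimal illustration, not Spring AI's actual source: `SketchModel`, `SketchChatModel`, `EchoChatModel`, and `LayersDemo` are hypothetical names, and plain `String` stands in for the real request and response types.

```java
// Domain layer: a generic contract for "send a request, get a response".
interface SketchModel<TReq, TRes> {
    TRes call(TReq request);
}

// Capability layer: fixes the generic types for one kind of model (chat).
interface SketchChatModel extends SketchModel<String, String> {
}

// Provider layer: a concrete implementation. A real provider class would
// call a remote API here; this hypothetical one just echoes its input.
class EchoChatModel implements SketchChatModel {
    @Override
    public String call(String request) {
        return "echo: " + request;
    }
}

class LayersDemo {
    // Application code depends only on the capability interface,
    // so the provider can be swapped without touching this method.
    static String ask(SketchChatModel model, String question) {
        return model.call(question);
    }

    public static void main(String[] args) {
        System.out.println(ask(new EchoChatModel(), "hello")); // prints "echo: hello"
    }
}
```

Because `ask` only knows about the capability interface, replacing `EchoChatModel` with another provider implementation would not require changing the calling code.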

AI Model Domain Abstractions

Model

The API for calling an AI model.

    public interface Model<TReq extends ModelRequest<?>, TRes extends ModelResponse<?>> {

        /**
         * Executes a method call to the AI model.
         */
        TRes call(TReq request);

    }

StreamingModel

The API for calling an AI model with a streaming response.

    public interface StreamingModel<TReq extends ModelRequest<?>, TResChunk extends ModelResponse<?>> {

        /**
         * Executes a method call to the AI model.
         */
        Flux<TResChunk> stream(TReq request);

    }

ModelRequest

The input to an AI model.

    public interface ModelRequest<T> {

        /**
         * Retrieves the instructions or input required by the AI model.
         */
        T getInstructions(); // required input

        ModelOptions getOptions();

    }

ModelResponse

The response from an AI model.

    public interface ModelResponse<T extends ModelResult<?>> {

        /**
         * Retrieves the result of the AI model.
         */
        T getResult();

        List<T> getResults();

        ResponseMetadata getMetadata();

    }

ModelResult

The output of an AI model.

    public interface ModelResult<T> {

        /**
         * Retrieves the output generated by the AI model.
         */
        T getOutput();

        ResultMetadata getMetadata();

    }
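
How a request, a response, and its results fit together can be sketched with plain records. Everything here is a hypothetical stand-in (`TextRequest`, `TextResult`, `TextResponse`, `RequestResponseDemo`), and the sketch assumes that the single-result accessor is a shortcut for the first entry of the result list and may be null when the list is empty, which is what the null check in `ChatModel.call(String)` suggests.

```java
import java.util.ArrayList;
import java.util.List;

// Stand-in for ModelRequest: the instructions plus one trivial option.
record TextRequest(String instructions, int maxResults) {}

// Stand-in for ModelResult: a single generated output.
record TextResult(String output) {}

// Stand-in for ModelResponse: all results, with a shortcut to the first.
record TextResponse(List<TextResult> results) {
    // Assumption: "the result" means the first result, or null if none.
    TextResult result() {
        return results.isEmpty() ? null : results.get(0);
    }
}

class RequestResponseDemo {
    // A fake model: returns maxResults numbered, upper-cased variants.
    static TextResponse call(TextRequest request) {
        List<TextResult> results = new ArrayList<>();
        for (int i = 1; i <= request.maxResults(); i++) {
            results.add(new TextResult(request.instructions().toUpperCase() + " #" + i));
        }
        return new TextResponse(List.copyOf(results));
    }

    public static void main(String[] args) {
        TextResponse response = call(new TextRequest("hi", 2));
        System.out.println(response.result().output());  // first result only
        System.out.println(response.results().size());   // all results
    }
}
```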

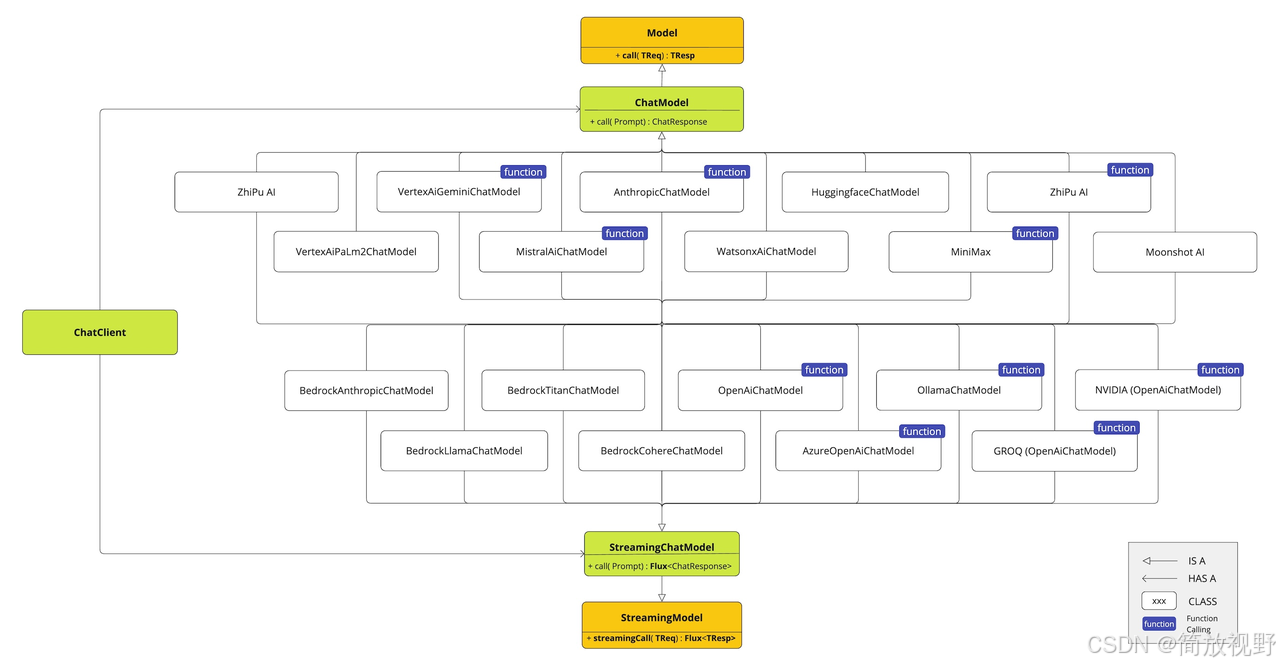

AI Model Capability Abstractions

ChatModel

A chat model: a model for text-based conversational interaction.

It works by taking a Prompt or a partial conversation as input and sending it to the backing large model, which generates a conversational response based on its training data and its understanding of natural language. The application can then present the response to the user or use it for further processing.

    public interface ChatModel extends Model<Prompt, ChatResponse>, StreamingChatModel {

        default String call(String message) {
            Prompt prompt = new Prompt(new UserMessage(message));
            Generation generation = call(prompt).getResult();
            return (generation != null) ? generation.getOutput().getText() : "";
        }

        default String call(Message... messages) {
            Prompt prompt = new Prompt(Arrays.asList(messages));
            Generation generation = call(prompt).getResult();
            return (generation != null) ? generation.getOutput().getText() : "";
        }

        @Override
        ChatResponse call(Prompt prompt);

        default ChatOptions getDefaultOptions() {
            return ChatOptions.builder().build();
        }

        @Override
        default Flux<ChatResponse> stream(Prompt prompt) {
            throw new UnsupportedOperationException("streaming is not supported");
        }

    }
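
The interface above leaves only `call(Prompt)` abstract; the `String` overload is derived from it and falls back to an empty string when there is no result. A minimal sketch of that default-method pattern, using hypothetical stand-ins (`SketchPrompt`, `SketchResponse`, `MiniChatModel`, `DefaultMethodDemo`) rather than the real Spring AI types:

```java
import java.util.List;

// Stand-in for Prompt: just a list of message texts.
record SketchPrompt(List<String> messages) {}

// Stand-in for ChatResponse: zero or more generated texts.
record SketchResponse(List<String> generations) {
    String result() {
        return generations.isEmpty() ? null : generations.get(0);
    }
}

interface MiniChatModel {
    // The only method an implementation has to provide.
    SketchResponse call(SketchPrompt prompt);

    // Convenience overload: wrap the text in a prompt, unwrap the first
    // result, and return "" instead of null when nothing was generated.
    default String call(String message) {
        String text = call(new SketchPrompt(List.of(message))).result();
        return (text != null) ? text : "";
    }
}

class DefaultMethodDemo {
    // Two toy implementations, each written as a single lambda.
    static final MiniChatModel GREETER =
        prompt -> new SketchResponse(List.of("Hi, you said: " + prompt.messages().get(0)));
    static final MiniChatModel SILENT =
        prompt -> new SketchResponse(List.of());

    public static void main(String[] args) {
        System.out.println(GREETER.call("hello"));
        System.out.println("[" + SILENT.call("hello") + "]");
    }
}
```

The design keeps provider implementations small: they implement one method, and every caller gets the simpler overloads for free.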

StreamingChatModel

The streaming-response API for chat models.

    @FunctionalInterface
    public interface StreamingChatModel extends StreamingModel<Prompt, ChatResponse> {

        default Flux<String> stream(String message) {
            Prompt prompt = new Prompt(message);
            return stream(prompt).map(response -> (response.getResult() == null
                    || response.getResult().getOutput() == null
                    || response.getResult().getOutput().getText() == null) ? ""
                            : response.getResult().getOutput().getText());
        }

        default Flux<String> stream(Message... messages) {
            Prompt prompt = new Prompt(Arrays.asList(messages));
            return stream(prompt).map(response -> (response.getResult() == null
                    || response.getResult().getOutput() == null
                    || response.getResult().getOutput().getText() == null) ? ""
                            : response.getResult().getOutput().getText());
        }

        @Override
        Flux<ChatResponse> stream(Prompt prompt);

    }
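
The null-safe mapping in the `stream(String)` default can be illustrated without Reactor. The sketch below swaps in `java.util.stream.Stream` for `Flux`, which is a deliberate simplification: a `Stream` is pulled synchronously, whereas a `Flux` delivers chunks asynchronously. `Chunk`, `MiniStreamingChatModel`, and `StreamingDemo` are hypothetical names.

```java
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.Stream;

// Stand-in for one streamed response chunk; its text may be null.
record Chunk(String text) {}

interface MiniStreamingChatModel {
    // The one abstract method: produce chunks for a list of messages.
    Stream<Chunk> stream(List<String> messages);

    // Convenience overload: map every chunk to its text, replacing
    // missing text with "" so consumers never see a null.
    default Stream<String> stream(String message) {
        return stream(List.of(message))
            .map(chunk -> (chunk == null || chunk.text() == null) ? "" : chunk.text());
    }
}

class StreamingDemo {
    // Toy model: always answers in three chunks, the middle one empty.
    static final MiniStreamingChatModel MODEL =
        messages -> Stream.of(new Chunk("Hel"), new Chunk(null), new Chunk("lo"));

    // Joins the streamed text pieces into the full answer.
    static String collect(String message) {
        return MODEL.stream(message).collect(Collectors.joining());
    }

    public static void main(String[] args) {
        System.out.println(collect("say hello")); // prints "Hello"
    }
}
```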

EmbeddingModel

An embedding model.

Embedding works by converting text, images, and video into arrays of floating-point numbers, called vectors.

An embedding model (EmbeddingModel) is the model used in the embedding process.

    public interface EmbeddingModel extends Model<EmbeddingRequest, EmbeddingResponse> {

        @Override
        EmbeddingResponse call(EmbeddingRequest request);

        // Vector embedding
        default float[] embed(String text) {
            Assert.notNull(text, "Text must not be null");
            List<float[]> response = this.embed(List.of(text));
            return response.iterator().next();
        }

        /**
         * Embeds the given document's content into a vector.
         * @param document the document to embed.
         * @return the embedded vector.
         */
        float[] embed(Document document);

        default List<float[]> embed(List<String> texts) {
            Assert.notNull(texts, "Texts must not be null");
            return this.call(new EmbeddingRequest(texts, EmbeddingOptionsBuilder.builder().build()))
                .getResults()
                .stream()
                .map(Embedding::getOutput)
                .toList();
        }

        default List<float[]> embed(List<Document> documents, EmbeddingOptions options,
                BatchingStrategy batchingStrategy) {
            Assert.notNull(documents, "Documents must not be null");
            List<float[]> embeddings = new ArrayList<>(documents.size());
            List<List<Document>> batch = batchingStrategy.batch(documents);
            for (List<Document> subBatch : batch) {
                List<String> texts = subBatch.stream().map(Document::getText).toList();
                EmbeddingRequest request = new EmbeddingRequest(texts, options);
                EmbeddingResponse response = this.call(request);
                for (int i = 0; i < subBatch.size(); i++) {
                    embeddings.add(response.getResults().get(i).getOutput());
                }
            }
            Assert.isTrue(embeddings.size() == documents.size(),
                    "Embeddings must have the same number as that of the documents");
            return embeddings;
        }

        default EmbeddingResponse embedForResponse(List<String> texts) {
            Assert.notNull(texts, "Texts must not be null");
            return this.call(new EmbeddingRequest(texts, EmbeddingOptionsBuilder.builder().build()));
        }

        default int dimensions() {
            return embed("Test String").length;
        }

    }
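
Three ideas from the interface above can be shown in a small, self-contained sketch: the single-text `embed` delegates to the batch version, `dimensions()` is discovered by embedding a probe string, and batched embedding preserves input order. All names (`MiniEmbeddingModel`, `BucketEmbeddingModel`, `EmbeddingDemo`, `embedAll`, `embedInBatches`) are hypothetical, the "embedding" is a toy character-bucket count rather than a real model, and fixed-size chunks stand in for a `BatchingStrategy`.

```java
import java.util.ArrayList;
import java.util.List;

interface MiniEmbeddingModel {
    // The one method a provider must supply: embed a batch of texts.
    List<float[]> embedAll(List<String> texts);

    // Single-text convenience: wrap in a list, unwrap the only vector.
    default float[] embed(String text) {
        return embedAll(List.of(text)).get(0);
    }

    // Vector size, discovered by embedding a probe string.
    default int dimensions() {
        return embed("Test String").length;
    }
}

// Toy provider: counts characters into SIZE buckets by character code.
class BucketEmbeddingModel implements MiniEmbeddingModel {
    static final int SIZE = 4;

    @Override
    public List<float[]> embedAll(List<String> texts) {
        List<float[]> vectors = new ArrayList<>(texts.size());
        for (String text : texts) {
            float[] vector = new float[SIZE];
            for (char c : text.toCharArray()) {
                vector[c % SIZE] += 1.0f;
            }
            vectors.add(vector);
        }
        return vectors;
    }
}

class EmbeddingDemo {
    // Splits the input into sub-batches, embeds each one, and appends
    // the vectors in order so output i corresponds to input i.
    static List<float[]> embedInBatches(MiniEmbeddingModel model, List<String> texts, int batchSize) {
        if (batchSize <= 0) {
            throw new IllegalArgumentException("batchSize must be positive");
        }
        List<float[]> embeddings = new ArrayList<>(texts.size());
        for (int start = 0; start < texts.size(); start += batchSize) {
            int end = Math.min(start + batchSize, texts.size());
            embeddings.addAll(model.embedAll(texts.subList(start, end)));
        }
        if (embeddings.size() != texts.size()) {
            throw new IllegalStateException("Embeddings must match the number of texts");
        }
        return embeddings;
    }

    public static void main(String[] args) {
        MiniEmbeddingModel model = new BucketEmbeddingModel();
        System.out.println(model.dimensions());
        System.out.println(embedInBatches(model, List.of("a", "b", "c"), 2).size());
    }
}
```

Probing with a test string, as `dimensions()` does, costs one model call; with a remote provider that is a network round trip, so caching the value may be worthwhile.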
