The Universal Approximation Theorem for Neural Networks: a Python/PyTorch Implementation That Can Fit Arbitrary Curves

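I have spent the past few days on this curve-fitting experiment. There is plenty of material on the topic online, but the code I tried only works acceptably on simple curves and does poorly on anything slightly more complex. After nearly a full day of work, I finally got my best result so far: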

Code:

import numpy as np
import matplotlib.pyplot as plt
import torch as t


class BP(t.nn.Module):
    def __init__(self):
        super(BP, self).__init__()
        self.linear1 = t.nn.Linear(1, 100)
        self.linear2 = t.nn.Linear(100, 10)
        self.linear3 = t.nn.Linear(10, 1)
        self.tanh = t.nn.Tanh()
        self.Dropout = t.nn.Dropout(p=0.1)  # defined but disabled in forward()
        self.criterion = t.nn.MSELoss()
        self.opt = t.optim.SGD(self.parameters(), lr=0.01)

    def forward(self, input):
        y = self.linear1(input)
        y = self.tanh(y)
        # y = self.Dropout(y)
        y = self.linear2(y)
        y = self.tanh(y)
        # y = self.Dropout(y)
        y = self.linear3(y)
        y = self.tanh(y)  # output lies in (-1, 1); the targets are scaled to [0, 1]
        return y


class BackPropagationEx:
    def __init__(self):
        self.model = None

    def curve_fitm(self, x, y, epoch):
        # Min-max scale inputs and targets to [0, 1]; both become column vectors.
        xs = np.asarray(x, dtype=np.float32).reshape(-1, 1)
        xs = (xs - xs.min()) / (xs.max() - xs.min())
        ys = np.asarray(y, dtype=np.float32).reshape(-1, 1)
        ys = (ys - ys.min()) / (ys.max() - ys.min())
        xs = t.from_numpy(xs)
        ys = t.from_numpy(ys)
        model = BP()
        for e in range(epoch):
            ls = 0.0
            # One SGD step per sample.
            for x_i, y_i in zip(xs, ys):
                y_pre = model(x_i)
                loss = model.criterion(y_pre, y_i)
                ls += loss.item()  # accumulate a plain float, not a graph-carrying tensor
                model.opt.zero_grad()  # zero gradients
                loss.backward()  # perform backward pass
                model.opt.step()  # update weights
            if e % 2 == 0:
                print(e, ls)
        self.model = model
        # Evaluate the trained network on the whole (scaled) data set.
        with t.no_grad():
            ys_pre = model(xs)
            loss = model.criterion(ys_pre, ys)
        print(loss)
        plt.title("curve")
        plt.plot(xs.numpy(), ys.numpy(), label="ys")
        plt.plot(xs.numpy(), ys_pre.numpy(), label="ys_pre")
        plt.legend()
        plt.show()

    def predict(self, x):
        # x must already be scaled to [0, 1] the same way as in curve_fitm.
        x = t.from_numpy(np.asarray(x, dtype=np.float32).reshape(-1, 1))
        with t.no_grad():
            return self.model(x).numpy()

    def plot(self, x, y, predict):
        plt.plot(x, y, 'bo')  # original data points
        plt.plot(x, predict, 'r-')  # fitted curve
        plt.title("BP neural network")
        plt.show()

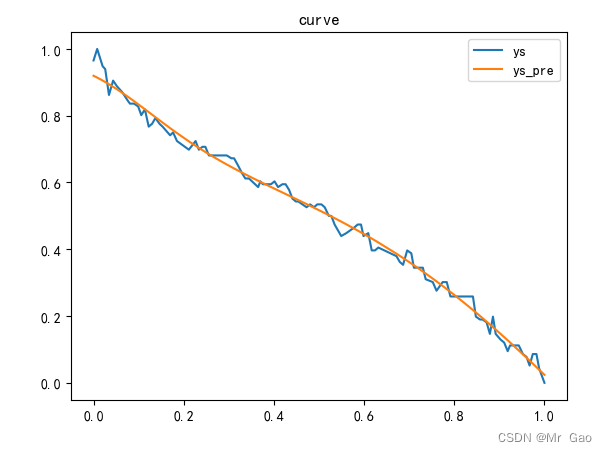

The plot above shows the result.

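You might think this fit looks only so-so, but that is not the case: my problem is very hard, and nearly all the code I found online fits it poorly. If you are interested in the data, send me a private message and I can share it with you.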

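Reproducing this result is hard. After a large number of experiments, I found that implementing the universal approximation theorem in the strict sense takes a lot of care: the theorem says that a sufficiently wide network can approximate a continuous function on a bounded interval, but not that training will actually find such a network.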

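Also, as the number of training epochs and neurons grows, the fit keeps improving, so in practice the network gets closer and closer to what the universal approximation theorem promises.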
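The effect of adding neurons can be checked with a small experiment. The sketch below is only an illustration under stated assumptions: the target is a synthetic sine curve (not the data from this post), the helper name `fit_width` is hypothetical, and full-batch Adam is swapped in for the per-sample SGD used above so that it finishes in a few seconds.

```python
import math

import torch
from torch import nn


def fit_width(hidden, steps=500, seed=0):
    """Train a one-hidden-layer tanh network and return its final MSE."""
    torch.manual_seed(seed)
    # Synthetic target (an assumption, not the post's data): one period of a
    # sine, with inputs and targets both lying in [0, 1].
    x = torch.linspace(0.0, 1.0, 200).reshape(-1, 1)
    y = 0.5 * (torch.sin(2 * math.pi * x) + 1.0)

    model = nn.Sequential(nn.Linear(1, hidden), nn.Tanh(), nn.Linear(hidden, 1))
    criterion = nn.MSELoss()
    # Full-batch Adam is used here for speed; the post trains with per-sample SGD.
    optimizer = torch.optim.Adam(model.parameters(), lr=0.01)

    for _ in range(steps):
        optimizer.zero_grad()
        loss = criterion(model(x), y)
        loss.backward()
        optimizer.step()

    with torch.no_grad():
        return criterion(model(x), y).item()


if __name__ == "__main__":
    # Compare the final error for a few hidden-layer widths.
    for hidden in (2, 10, 100):
        print(f"hidden={hidden:3d}  final MSE={fit_width(hidden):.5f}")
```

Increasing `steps` plays the role of more training epochs. The exact numbers depend on the seed and the optimizer settings, so treat the output as a rough comparison rather than a benchmark.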
