Papers on model compression

- Learning both Weights and Connections for Efficient Neural Networks (NIPS2015)

- Deep Compression: Compressing Deep Neural Networks with Pruning, Trained Quantization and Huffman Coding (ICLR2016)

Learning both Weights and Connections for Efficient Neural Networks (NIPS2015)

Goal of the paper:

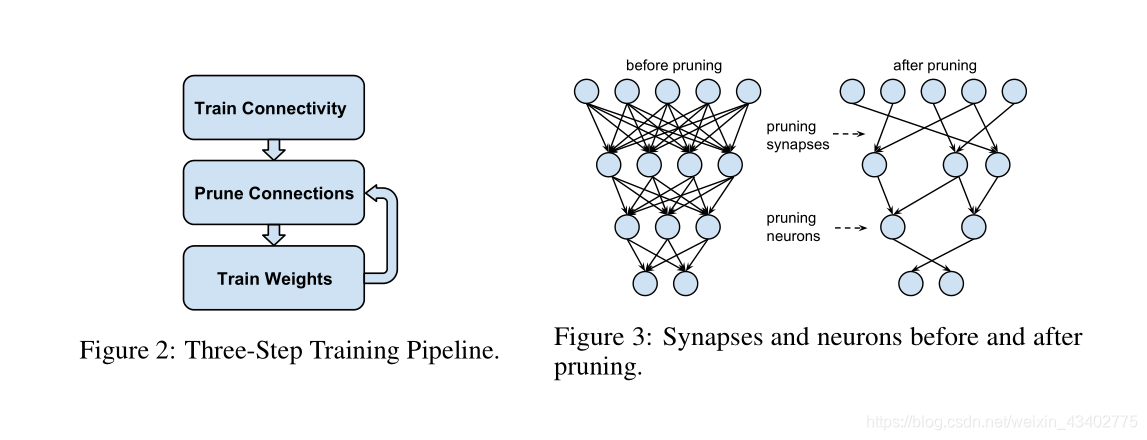

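During training, the network learns not only the weight values but also which connections are important, and the unimportant connections are removed.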
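A minimal sketch of the core idea, magnitude-based connection pruning, in plain Python. The threshold rule (a hypothetical `quality` factor multiplied by the standard deviation of the layer's weights) and the helper name `prune_by_magnitude` are assumptions for illustration; the returned mask records which connections survive.

```python
import statistics


def prune_by_magnitude(weights, quality=1.0):
    """Remove connections whose |w| is below quality * std(weights).

    `quality` is a hypothetical knob: larger values prune more connections.
    Returns (pruned_weights, mask) where mask[i] == 0 means connection i was removed.
    """
    threshold = quality * statistics.pstdev(weights)
    mask = [1 if abs(w) >= threshold else 0 for w in weights]
    pruned = [w * m for w, m in zip(weights, mask)]  # removed connections become 0
    return pruned, mask


# Usage: small-magnitude weights are dropped, large ones survive.
pruned, mask = prune_by_magnitude([0.8, -0.05, 0.3, -0.9, 0.01, 0.02], quality=0.5)
print(mask)
```

Keeping an explicit mask (rather than only zeroing weights) matters later: during retraining the mask is what keeps removed connections at zero.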

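Key points:

1. Use L2 regularization.

2. Adjust the dropout ratio: after pruning, a layer has fewer connections, so the dropout rate used during retraining should be reduced accordingly.

3. Parameter co-adaptation: when retraining after pruning, keeping the surviving parameters' original values works better than re-initializing them.

4. Prune connections iteratively: prune → retrain → prune → retrain.

5. Prune dead neurons: after connections are pruned, neurons left with zero input or zero output connections can be removed entirely.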
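The five points above can be sketched together on a toy problem. This is a hedged illustration, not the paper's implementation: the "network" is a single linear layer fitted by SGD (a hypothetical stand-in), all helper names and hyperparameters are made up, and each round prunes a fixed fraction of the surviving weights instead of using a threshold, simply to keep the example deterministic. The dropout rule follows the paper's form D_r = D_o · sqrt(C_ir / C_io), where C is the number of connections in the layer before (o) and after (r) pruning.

```python
import math
import random


def train(w, mask, data, lr=0.05, l2=1e-3, epochs=200):
    """Toy (re)training: SGD on squared error plus an L2 penalty (point 1).
    Pruned connections (mask == 0) get no updates and stay at zero;
    surviving weights continue from their current values, no re-init (point 3)."""
    for _ in range(epochs):
        for x, y in data:
            err = sum(wi * xi for wi, xi in zip(w, x)) - y
            for i in range(len(w)):
                if mask[i]:
                    w[i] -= lr * (err * x[i] + l2 * w[i])
    return w


def prune_step(w, mask, fraction):
    """Remove `fraction` of the currently surviving weights with the smallest |w|."""
    alive = sorted((abs(w[i]), i) for i in range(len(w)) if mask[i])
    for _, i in alive[: int(len(alive) * fraction)]:
        mask[i] = 0
        w[i] = 0.0
    return w, mask


def iterative_pruning(data, n, rounds=2, fraction=0.5, seed=0):
    """Train dense, then repeat prune -> retrain (point 4)."""
    rng = random.Random(seed)
    w = [rng.uniform(-0.1, 0.1) for _ in range(n)]
    mask = [1] * n
    w = train(w, mask, data)  # dense training first
    for _ in range(rounds):
        w, mask = prune_step(w, mask, fraction)
        w = train(w, mask, data)
    return w, mask


def adjusted_dropout(d_orig, conns_orig, conns_retrain):
    """Point 2: D_r = D_o * sqrt(C_ir / C_io)."""
    return d_orig * math.sqrt(conns_retrain / conns_orig)


def dead_neurons(mask_in, mask_out):
    """Point 5: indices of hidden neurons with no inputs or no outputs left.
    mask_in[j][k]: input k -> hidden j; mask_out[o][j]: hidden j -> output o."""
    return [j for j in range(len(mask_in))
            if not any(mask_in[j]) or not any(row[j] for row in mask_out)]
```

On data where only a few input features actually matter, `iterative_pruning` should typically end with only those connections unmasked. For the dropout rule, pruning a layer from 8 to 2 connections gives `adjusted_dropout(0.5, 8, 2) == 0.25`.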

Deep Compression: Compressing Deep Neural Networks with Pruning, Trained Quantization and Huffman Coding (ICLR2016)

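Key points:

The network is compressed in three stages: pruning, reducing parameter precision (trained quantization), and Huffman coding.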
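The quantization and Huffman stages can be sketched as follows, assuming the weights have already been pruned and only the surviving ones are quantized. Weight sharing is done with 1-D k-means using linear centroid initialization (evenly spaced between the minimum and maximum weight); each weight is then stored as a small cluster index, and those indices are Huffman-coded. The paper also fine-tunes the shared centroids during retraining by summing the gradients of all weights in a cluster; that step is omitted here. The helper names and the toy weights are hypothetical.

```python
import heapq
from collections import Counter


def kmeans_1d(weights, k, iters=20):
    """Weight sharing: cluster scalar weights into k shared values.
    Centroids start evenly spaced between min and max weight (linear init)."""
    lo, hi = min(weights), max(weights)
    centroids = [lo + (hi - lo) * i / (k - 1) for i in range(k)]
    for _ in range(iters):
        idx = [min(range(k), key=lambda c: abs(w - centroids[c])) for w in weights]
        for c in range(k):
            members = [w for w, j in zip(weights, idx) if j == c]
            if members:  # leave empty clusters where they are
                centroids[c] = sum(members) / len(members)
    idx = [min(range(k), key=lambda c: abs(w - centroids[c])) for w in weights]
    return centroids, idx


def huffman_code_lengths(symbols):
    """Return {symbol: code length in bits} for a Huffman code over `symbols`."""
    freq = Counter(symbols)
    if len(freq) == 1:
        return {next(iter(freq)): 1}
    # Heap entries: (count, unique tie-breaker, {symbol: depth in tree})
    heap = [(n, i, {s: 0}) for i, (s, n) in enumerate(freq.items())]
    heapq.heapify(heap)
    tie = len(heap)
    while len(heap) > 1:
        n1, _, d1 = heapq.heappop(heap)  # two least frequent subtrees
        n2, _, d2 = heapq.heappop(heap)
        merged = {s: d + 1 for s, d in {**d1, **d2}.items()}  # one level deeper
        heapq.heappush(heap, (n1 + n2, tie, merged))
        tie += 1
    return heap[0][2]
```

For the ten weights `[-1.0, -0.9, -1.1, 0.1, 0.15, 0.05, 0.1, 0.12, 1.0, 1.1]` with `k=3`, the cluster indices are `[0, 0, 0, 1, 1, 1, 1, 1, 2, 2]`. Huffman coding gives the most frequent index a 1-bit code, so the indices take 15 bits instead of the 20 needed with fixed 2-bit codes.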
