Unity Tools: A Quick Summary of Streaming Audio Data from Azure (Microsoft) SSML Text-to-Speech (TTS)

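Contents

Unity Tools: A Quick Summary of Streaming Audio Data from Azure (Microsoft) SSML Text-to-Speech (TTS)

I. Introduction

II. How It Works

III. Implementation Steps

IV. Key Code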

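I. Introduction

The Unity Tools series collects modules I have put together that may come in handy in game development. Each module can be used on its own.

This post is a short summary of speech synthesis with Microsoft Azure, here using SSML input and retrieving the audio data as a stream. It is only a brief write-up; if you know a better approach, feel free to leave a comment.

Speech Synthesis Markup Language (SSML) is an XML-based markup language for fine-tuning text-to-speech output attributes such as pitch, pronunciation, speaking rate, and volume. Compared with plain-text input, it gives you more control and flexibility.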

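You can use SSML to:

- Define the structure of the input text, which determines the structure, content, and other characteristics of the text-to-speech output. For example, SSML can define paragraphs, sentences, breaks/pauses, or silence. You can wrap text in event tags (such as bookmarks or visemes) that your application can process later.

- Choose the voice, language, name, style, and role. A single SSML document can use multiple voices. You can adjust emphasis, speaking rate, pitch, and volume, and you can insert prerecorded audio such as sound effects or musical notes.

- Control the pronunciation of the output audio. For example, SSML can be combined with phonemes and a custom lexicon to improve pronunciation, and it can define how a specific word or mathematical expression is pronounced.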

The following is a subset of the basic structure and syntax of an SSML document:

    <speak version="1.0" xmlns="http://www.w3.org/2001/10/synthesis" xmlns:mstts="https://www.w3.org/2001/mstts" xml:lang="string">
        <mstts:backgroundaudio src="string" volume="string" fadein="string" fadeout="string"/>
        <voice name="string" effect="string">
            <audio src="string"></audio>
            <bookmark mark="string"/>
            <break strength="string" time="string" />
            <emphasis level="value"></emphasis>
            <lang xml:lang="string"></lang>
            <lexicon uri="string"/>
            <math xmlns="http://www.w3.org/1998/Math/MathML"></math>
            <mstts:audioduration value="string"/>
            <mstts:express-as style="string" styledegree="value" role="string"></mstts:express-as>
            <mstts:silence type="string" value="string"/>
            <mstts:viseme type="string"/>
            <p></p>
            <phoneme alphabet="string" ph="string"></phoneme>
            <prosody pitch="value" contour="value" range="value" rate="value" volume="value"></prosody>
            <s></s>
            <say-as interpret-as="string" format="string" detail="string"></say-as>
            <sub alias="string"></sub>
        </voice>
    </speak>

SSML voice and sound:

Voice and sound with Speech Synthesis Markup Language (SSML) - Speech service - Azure AI services | Microsoft Learn
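To make the structure above more concrete, here is a minimal sketch that fills a few of those elements (`voice`, `mstts:express-as`, `prosody`, `break`) with real values using `System.Xml.Linq`. The class name `SsmlSketch`, the method `Build`, and its parameters are hypothetical names chosen for illustration, and the namespace URIs are copied from the listing above. It is not the code used later in this post, which builds its SSML with `XmlDocument`.

```csharp
using System;
using System.Xml.Linq;

public static class SsmlSketch
{
    // Namespace URIs copied from the structure listing above.
    private static readonly XNamespace Synthesis = "http://www.w3.org/2001/10/synthesis";
    private static readonly XNamespace Mstts = "https://www.w3.org/2001/mstts";

    // Builds: speak > voice > mstts:express-as > (prosody + break).
    // All parameter names are illustrative, not part of any SDK.
    public static string Build(string text, string lang, string voice,
                               string style, string rate, string pause)
    {
        var speak = new XElement(Synthesis + "speak",
            new XAttribute("version", "1.0"),
            new XAttribute(XNamespace.Xmlns + "mstts", Mstts.NamespaceName),
            new XAttribute(XNamespace.Xml + "lang", lang),
            new XElement(Synthesis + "voice",
                new XAttribute("name", voice),
                new XElement(Mstts + "express-as",
                    new XAttribute("style", style),
                    // The text is added as a text node, so characters
                    // such as & and < are escaped on serialization.
                    new XElement(Synthesis + "prosody",
                        new XAttribute("rate", rate),
                        text),
                    new XElement(Synthesis + "break",
                        new XAttribute("time", pause)))));

        return speak.ToString(SaveOptions.DisableFormatting);
    }
}
```

A call such as `SsmlSketch.Build("你好", "zh-CN", "zh-CN-XiaoxiaoNeural", "chat", "+10%", "300ms")` returns a single-line SSML string. Building the document through an XML API rather than string concatenation keeps it well-formed even when the text contains reserved characters.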

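Official sign-up:

Azure for Students - Free Account Credit | Microsoft Azure

Official technical documentation:

Technical documentation | Microsoft Learn

Official TTS documentation:

Text to speech quickstart - Speech service - Azure Cognitive Services | Microsoft Learn

Azure Unity SDK package, official page:

Install the Speech SDK - Azure Cognitive Services | Microsoft Learn

Direct SDK link:

https://aka.ms/csspeech/unitypackage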

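II. How It Works

1. Apply on the official site to obtain the SPEECH_KEY and SPEECH_REGION for speech synthesis.

2. Configure the language and the voice you want to use.

3. Synthesize from SSML and retrieve the audio data as a stream, then play it through an audio source or save it to a file. A sample: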

    public static async Task SynthesizeAudioAsync()
    {
        var speechConfig = SpeechConfig.FromSubscription("YourSpeechKey", "YourSpeechRegion");
        using var speechSynthesizer = new SpeechSynthesizer(speechConfig, null);

        var ssml = File.ReadAllText("./ssml.xml");
        var result = await speechSynthesizer.SpeakSsmlAsync(ssml);

        using var stream = AudioDataStream.FromResult(result);
        await stream.SaveToWaveFileAsync("path/to/write/file.wav");
    }

III. Implementation Steps

For the basic environment setup, see: Unity Tools: A Quick Summary of Azure (Microsoft) Speech Synthesis, Standard and Streaming Audio Retrieval

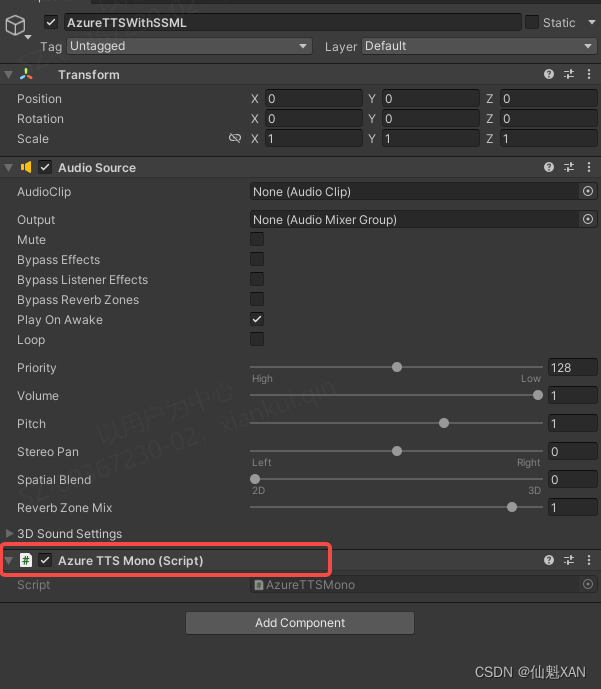

1. Write the scripts and attach the corresponding script to an object in the scene.

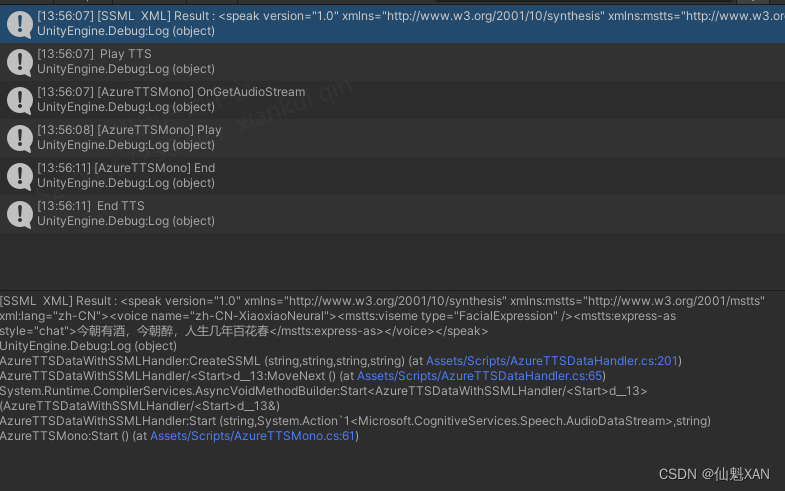

2. Run the scene. It synthesizes TTS from SSML and plays the result.

IV. Key Code

1. AzureTTSDataWithSSMLHandler

    using Microsoft.CognitiveServices.Speech;
    using System;
    using System.Threading;
    using System.Threading.Tasks;
    using System.Xml;
    using UnityEngine;

    /// <summary>
    /// Speech synthesis using SSML
    /// </summary>
    public class AzureTTSDataWithSSMLHandler
    {
        /// <summary>
        /// Data required for Azure TTS synthesis
        /// </summary>
        private const string SPEECH_KEY = "YOUR_SPEECH_KEY";
        private const string SPEECH_REGION = "YOUR_SPEECH_REGION";
        private const string SPEECH_RECOGNITION_LANGUAGE = "zh-CN";
        private string SPEECH_VOICE_NAME = "zh-CN-XiaoxiaoNeural";

        /// <summary>
        /// Objects used by the TTS session
        /// </summary>
        private CancellationTokenSource m_CancellationTokenSource;
        private AudioDataStream m_AudioDataStream;
        private Connection m_Connection;
        private SpeechConfig m_Config;
        private SpeechSynthesizer m_Synthesizer;

        /// <summary>
        /// Callback that receives the audio stream
        /// </summary>
        private Action<AudioDataStream> m_AudioStream;

        /// <summary>
        /// Callback raised when TTS playback starts
        /// </summary>
        private Action m_StartTTSPlayAction;

        /// <summary>
        /// Callback raised when TTS playback stops
        /// </summary>
        private Action m_StartTTSStopAction;

        /// <summary>
        /// Initialization
        /// </summary>
        public void Initialized()
        {
            m_Config = SpeechConfig.FromSubscription(SPEECH_KEY, SPEECH_REGION);
            m_Synthesizer = new SpeechSynthesizer(m_Config, null);
            m_Connection = Connection.FromSpeechSynthesizer(m_Synthesizer);
            m_Connection.Open(true);
        }

        /// <summary>
        /// Start speech synthesis
        /// </summary>
        /// <param name="msg">Text to synthesize</param>
        /// <param name="stream">Callback that receives the audio stream</param>
        /// <param name="style">Speaking style</param>
        public async void Start(string msg, Action<AudioDataStream> stream, string style = "chat")
        {
            this.m_AudioStream = stream;
            await SynthesizeAudioAsync(CreateSSML(msg, SPEECH_RECOGNITION_LANGUAGE, SPEECH_VOICE_NAME, style));
        }

        /// <summary>
        /// Stop speech synthesis and release the SDK objects
        /// </summary>
        public void Stop()
        {
            m_StartTTSStopAction?.Invoke();

            if (m_AudioDataStream != null)
            {
                m_AudioDataStream.Dispose();
                m_AudioDataStream = null;
            }

            if (m_CancellationTokenSource != null)
            {
                m_CancellationTokenSource.Cancel();
            }

            if (m_Synthesizer != null)
            {
                m_Synthesizer.Dispose();
                m_Synthesizer = null;
            }

            if (m_Connection != null)
            {
                m_Connection.Dispose();
                m_Connection = null;
            }
        }

        /// <summary>
        /// Set the callback raised when TTS playback starts
        /// </summary>
        /// <param name="onStartAction"></param>
        public void SetStartTTSPlayAction(Action onStartAction)
        {
            if (onStartAction != null)
            {
                m_StartTTSPlayAction = onStartAction;
            }
        }

        /// <summary>
        /// Set the callback raised when speech synthesis stops
        /// </summary>
        /// <param name="onAudioStopAction"></param>
        public void SetStartTTSStopAction(Action onAudioStopAction)
        {
            if (onAudioStopAction != null)
            {
                m_StartTTSStopAction = onAudioStopAction;
            }
        }

        /// <summary>
        /// Asynchronously request TTS synthesis
        /// </summary>
        /// <param name="speakMsg">SSML document to synthesize</param>
        /// <returns></returns>
        private async Task SynthesizeAudioAsync(string speakMsg)
        {
            Cancel();
            m_CancellationTokenSource = new CancellationTokenSource();

            // StartSpeakingSsmlAsync completes once synthesis has started,
            // so the stream can be read while audio is still arriving.
            var result = await m_Synthesizer.StartSpeakingSsmlAsync(speakMsg);

            m_StartTTSPlayAction?.Invoke();
            m_AudioDataStream = AudioDataStream.FromResult(result);
            m_AudioStream?.Invoke(m_AudioDataStream);
        }

        private void Cancel()
        {
            if (m_AudioDataStream != null)
            {
                m_AudioDataStream.Dispose();
                m_AudioDataStream = null;
            }

            if (m_CancellationTokenSource != null)
            {
                m_CancellationTokenSource.Cancel();
            }
        }

        /// <summary>
        /// Build the required SSML XML
        /// (the format is not fixed; add or remove elements as needed)
        /// </summary>
        /// <param name="msg">Text to synthesize</param>
        /// <param name="language">Synthesis language</param>
        /// <param name="voiceName">Voice used for synthesis</param>
        /// <param name="style">Speaking style used for synthesis</param>
        /// <returns>SSML XML</returns>
        private string CreateSSML(string msg, string language, string voiceName, string style = "chat")
        {
            // XmlDocument
            XmlDocument xmlDoc = new XmlDocument();

            // Root speak element
            XmlElement speakElem = xmlDoc.CreateElement("speak");
            speakElem.SetAttribute("version", "1.0");
            speakElem.SetAttribute("xmlns", "http://www.w3.org/2001/10/synthesis");
            speakElem.SetAttribute("xmlns:mstts", "http://www.w3.org/2001/mstts");
            speakElem.SetAttribute("xml:lang", language);

            // voice element
            XmlElement voiceElem = xmlDoc.CreateElement("voice");
            voiceElem.SetAttribute("name", voiceName);

            // mstts:viseme element
            XmlElement visemeElem = xmlDoc.CreateElement("mstts", "viseme", "http://www.w3.org/2001/mstts");
            visemeElem.SetAttribute("type", "FacialExpression");

            // Speaking style element
            XmlElement styleElem = xmlDoc.CreateElement("mstts", "express-as", "http://www.w3.org/2001/mstts");
            styleElem.SetAttribute("style", style.Replace("_", "-"));

            // Text node that holds the text to speak
            XmlNode textNode = xmlDoc.CreateTextNode(msg);

            // Assemble the elements into the document
            voiceElem.AppendChild(visemeElem);
            styleElem.AppendChild(textNode);
            voiceElem.AppendChild(styleElem);
            speakElem.AppendChild(voiceElem);
            xmlDoc.AppendChild(speakElem);

            Debug.Log("[SSML XML] Result : " + xmlDoc.OuterXml);
            return xmlDoc.OuterXml;
        }
    }

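The handler above keeps `SPEECH_KEY` and `SPEECH_REGION` as constants in source code. As one possible alternative during development, the following sketch reads both values from environment variables instead. The class name `SpeechCredentials` and the method `Load` are hypothetical, and the variable names `SPEECH_KEY` and `SPEECH_REGION` are simply reused from step 1 of section II. This suits an editor or development machine; on a player's device these variables will normally not be set, so a shipped build needs a different source for the values.

```csharp
using System;

public static class SpeechCredentials
{
    // Reads the key and region from environment variables.
    // Throws if either one is missing, so a misconfigured
    // machine fails early instead of at the first TTS request.
    public static (string Key, string Region) Load()
    {
        string key = Environment.GetEnvironmentVariable("SPEECH_KEY");
        string region = Environment.GetEnvironmentVariable("SPEECH_REGION");

        if (string.IsNullOrWhiteSpace(key) || string.IsNullOrWhiteSpace(region))
        {
            throw new InvalidOperationException(
                "Set SPEECH_KEY and SPEECH_REGION before starting.");
        }

        return (key, region);
    }
}
```

With this in place, `Initialized()` could call `SpeechCredentials.Load()` and pass the two values to `SpeechConfig.FromSubscription` in place of the constants.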
2. AzureTTSMono

    using Microsoft.CognitiveServices.Speech;
    using System;
    using System.Collections.Concurrent;
    using System.IO;
    using UnityEngine;

    [RequireComponent(typeof(AudioSource))]
    public class AzureTTSMono : MonoBehaviour
    {
        private AzureTTSDataWithSSMLHandler m_AzureTTSDataWithSSMLHandler;

        /// <summary>
        /// Audio source and audio clip
        /// </summary>
        private AudioSource m_AudioSource;
        private AudioClip m_AudioClip;

        /// <summary>
        /// Streamed audio data
        /// </summary>
        private ConcurrentQueue<float[]> m_AudioDataQueue = new ConcurrentQueue<float[]>();
        private AudioDataStream m_AudioDataStream;

        /// <summary>
        /// Callback raised when audio playback finishes
        /// </summary>
        private Action m_AudioEndAction;

        /// <summary>
        /// Playback state flags
        /// </summary>
        private bool m_NeedPlay = false;
        private bool m_StreamReadEnd = false;

        private const int m_SampleRate = 16000;
        // Supports at most 60 s of audio
        private const int m_BufferSize = m_SampleRate * 60;
        // Sampling capacity
        private const int m_UpdateSize = m_SampleRate;
        // Number of samples already set on the AudioClip
        private int m_TotalCount = 0;
        private int m_DataIndex = 0;

        #region Lifecycle function

        private void Awake()
        {
            m_AudioSource = GetComponent<AudioSource>();
            m_AzureTTSDataWithSSMLHandler = new AzureTTSDataWithSSMLHandler();
            m_AzureTTSDataWithSSMLHandler.SetStartTTSPlayAction(() => { Debug.Log(" Play TTS "); });
            m_AzureTTSDataWithSSMLHandler.SetStartTTSStopAction(() => { Debug.Log(" Stop TTS "); AudioPlayEndEvent(); });
            m_AudioEndAction = () => { Debug.Log(" End TTS "); };
            m_AzureTTSDataWithSSMLHandler.Initialized();
        }

        // Start is called before the first frame update
        void Start()
        {
            m_AzureTTSDataWithSSMLHandler.Start("今朝有酒,今朝醉,人生幾年百花春", OnGetAudioStream);
        }

        // Update is called once per frame
        private void Update()
        {
            UpdateAudio();
        }

        #endregion

        #region Audio handler

        /// <summary>
        /// Set the callback raised when TTS playback ends
        /// </summary>
        /// <param name="act"></param>
        public void SetAudioEndAction(Action act)
        {
            this.m_AudioEndAction = act;
        }

        /// <summary>
        /// Handle the streamed TTS data
        /// </summary>
        /// <param name="stream">Audio data stream</param>
        public async void OnGetAudioStream(AudioDataStream stream)
        {
            m_StreamReadEnd = false;
            m_NeedPlay = true;
            m_AudioDataStream = stream;
            Debug.Log("[AzureTTSMono] OnGetAudioStream");

            MemoryStream memStream = new MemoryStream();
            byte[] buffer = new byte[m_UpdateSize * 2];
            uint bytesRead;
            m_DataIndex = 0;
            m_TotalCount = 0;
            m_AudioDataQueue.Clear();

            // Switch back to the main thread for the Unity API calls.
            // Loom is a main-thread dispatcher helper that is not part of this listing.
            Loom.QueueOnMainThread(() =>
            {
                m_AudioSource.Stop();
                m_AudioSource.clip = null;
                m_AudioClip = AudioClip.Create("SynthesizedAudio", m_BufferSize, 1, m_SampleRate, false);
                m_AudioSource.clip = m_AudioClip;
            });

            do
            {
                bytesRead = await System.Threading.Tasks.Task.Run(() => m_AudioDataStream.ReadData(buffer));
                if (bytesRead == 0)
                {
                    break;
                }

                // Copy the bytes that were read
                memStream.Write(buffer, 0, (int)bytesRead);

                // Convert 16-bit little-endian PCM to floats
                var tempData = memStream.ToArray();
                var audioData = new float[memStream.Length / 2];
                for (int i = 0; i < audioData.Length; ++i)
                {
                    audioData[i] = (short)(tempData[i * 2 + 1] << 8 | tempData[i * 2]) / 32768.0F;
                }

                try
                {
                    m_TotalCount += audioData.Length;
                    // Add the samples to the queue
                    m_AudioDataQueue.Enqueue(audioData);
                    // Start a fresh stream for the next chunk
                    memStream = new MemoryStream();
                }
                catch (Exception e)
                {
                    Debug.LogError(e.ToString());
                }
            } while (bytesRead > 0);

            m_StreamReadEnd = true;
        }

        /// <summary>
        /// Feed and play audio from Update
        /// </summary>
        private void UpdateAudio()
        {
            if (!m_NeedPlay) return;

            // Write the next queued chunk into the clip
            if (m_AudioDataQueue.TryDequeue(out float[] audioData))
            {
                m_AudioClip.SetData(audioData, m_DataIndex);
                m_DataIndex = (m_DataIndex + audioData.Length) % m_BufferSize;
            }

            // Check whether playback has reached the end
            if (m_StreamReadEnd && m_AudioSource.timeSamples >= m_TotalCount)
            {
                AudioPlayEndEvent();
            }

            if (!m_NeedPlay) return;

            // Because of network latency some data may not have arrived yet,
            // so pause when the playhead catches up with the written data
            if (m_AudioSource.timeSamples >= m_DataIndex && m_AudioSource.isPlaying)
            {
                m_AudioSource.timeSamples = m_DataIndex;
                // Pause
                Debug.Log("[AzureTTSMono] Pause");
                m_AudioSource.Pause();
            }

            // Data may arrive late, so resume once more data is available
            if (m_AudioSource.timeSamples < m_DataIndex && !m_AudioSource.isPlaying)
            {
                // Play
                Debug.Log("[AzureTTSMono] Play");
                m_AudioSource.Play();
            }
        }

        /// <summary>
        /// Called when TTS playback ends
        /// </summary>
        private void AudioPlayEndEvent()
        {
            Debug.Log("[AzureTTSMono] End");
            m_NeedPlay = false;
            m_AudioSource.timeSamples = 0;
            m_AudioSource.Stop();
            m_AudioEndAction?.Invoke();
        }

        #endregion
    }
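The conversion loop inside `OnGetAudioStream` is the step that turns the bytes read from the stream into the `float` samples that `AudioClip.SetData` expects. The sketch below isolates that step as a small standalone helper so it can be checked without Unity or the Speech SDK. The class name `Pcm16` and the method `ToFloats` are hypothetical names, and the code assumes the bytes are raw 16-bit little-endian mono PCM; any container header at the start of the stream would need to be skipped before calling it.

```csharp
using System;

public static class Pcm16
{
    // Converts 16-bit little-endian PCM bytes to floats in [-1, 1).
    // byteCount is the number of valid bytes in data; a trailing
    // odd byte is ignored because it cannot form a full sample.
    public static float[] ToFloats(byte[] data, int byteCount)
    {
        int sampleCount = byteCount / 2;
        var samples = new float[sampleCount];

        for (int i = 0; i < sampleCount; i++)
        {
            // Low byte first, then high byte shifted into place.
            short value = (short)(data[i * 2] | (data[i * 2 + 1] << 8));
            samples[i] = value / 32768f;
        }

        return samples;
    }
}
```

Passing the number of bytes actually read, rather than the full buffer length, avoids converting stale bytes left over from a previous, larger read.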
