Preface

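ViewFs builds on HDFS Federation: it lets clients reach multiple namespaces through a single path. It is paired with a client-side mount table, which holds the concrete mapping rules. These rules can be placed in core-site.xml or in a separate mountTable.xml.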
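As a rough sketch of what such a mount table can look like, the fragment below maps two client-visible directories onto the two NameNodes used later in this post (hadoop:9000 and hadoop1:9000). The mount table name `cluster` and the mount points `/data` and `/logs` are assumptions made up for illustration; they do not come from the original setup.

```xml
<configuration>
  <!-- Assumption: the mount table is named "cluster". Any name works as long
       as it matches the authority part of fs.defaultFS. -->
  <property>
    <name>fs.defaultFS</name>
    <value>viewfs://cluster</value>
  </property>
  <!-- Hypothetical mount point: /data is served by the first NameNode. -->
  <property>
    <name>fs.viewfs.mounttable.cluster.link./data</name>
    <value>hdfs://hadoop:9000/data</value>
  </property>
  <!-- Hypothetical mount point: /logs is served by the second NameNode. -->
  <property>
    <name>fs.viewfs.mounttable.cluster.link./logs</name>
    <value>hdfs://hadoop1:9000/logs</value>
  </property>
</configuration>
```

With a table like this on the client, a path such as /data/file would resolve to the first NameNode and /logs/file to the second, without the client naming either NameNode explicitly.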

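In short, ViewFs is a middle layer that connects to the different NameNodes and returns the results to the client program. ViewFs therefore has to implement all of the HDFS interfaces in order to forward and manage requests. This brings problems of its own: for example, issues caused by NameNodes running different versions cannot be resolved at this layer.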

Federation Of HDFS

Setting up a plain federation is actually fairly simple.

core-site.xml

<?xml version="1.0" encoding="UTF-8"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<!--
  Licensed under the Apache License, Version 2.0 (the "License");
  you may not use this file except in compliance with the License.
  You may obtain a copy of the License at

    http://www.apache.org/licenses/LICENSE-2.0

  Unless required by applicable law or agreed to in writing, software
  distributed under the License is distributed on an "AS IS" BASIS,
  WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
  See the License for the specific language governing permissions and
  limitations under the License. See accompanying LICENSE file.
-->
<!-- Put site-specific property overrides in this file. -->
<configuration>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>/soft/hadoop/data_hadoop</value>
  </property>
  <!-- Change this address to that of the corresponding NameNode -->
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://hadoop:9000/</value>
  </property>
</configuration>

hdfs-site.xml

<?xml version="1.0" encoding="UTF-8"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<!--
  Licensed under the Apache License, Version 2.0 (the "License");
  you may not use this file except in compliance with the License.
  You may obtain a copy of the License at

    http://www.apache.org/licenses/LICENSE-2.0

  Unless required by applicable law or agreed to in writing, software
  distributed under the License is distributed on an "AS IS" BASIS,
  WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
  See the License for the specific language governing permissions and
  limitations under the License. See accompanying LICENSE file.
-->
<!-- Put site-specific property overrides in this file. -->
<configuration>
  <property>
    <name>dfs.replication</name>
    <value>2</value>
  </property>
  <!-- Local directory for NameNode metadata -->
  <property>
    <name>dfs.namenode.name.dir</name>
    <value>file:/soft/hadoop/data_hadoop/namenode</value>
  </property>
  <!-- Local directory for DataNode block storage -->
  <property>
    <name>dfs.datanode.data.dir</name>
    <value>file:/soft/hadoop/data_hadoop/datanode</value>
  </property>
  <property>
    <name>dfs.permissions</name>
    <value>false</value>
  </property>
  <!-- The two namespaces that make up the federation -->
  <property>
    <name>dfs.nameservices</name>
    <value>ns1,ns2</value>
  </property>
  <!-- ns1 is served by the NameNode on host "hadoop" -->
  <property>
    <name>dfs.namenode.rpc-address.ns1</name>
    <value>hadoop:9000</value>
  </property>
  <property>
    <name>dfs.namenode.http-address.ns1</name>
    <value>hadoop:50070</value>
  </property>
  <property>
    <name>dfs.namenode.secondary.http-address.ns1</name>
    <value>hadoop:50090</value>
  </property>
  <!-- ns2 is served by the NameNode on host "hadoop1" -->
  <property>
    <name>dfs.namenode.rpc-address.ns2</name>
    <value>hadoop1:9000</value>
  </property>
  <property>
    <name>dfs.namenode.http-address.ns2</name>
    <value>hadoop1:50070</value>
  </property>
  <property>
    <name>dfs.namenode.secondary.http-address.ns2</name>
    <value>hadoop1:50090</value>
  </property>
</configuration>

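Startup

First, format the NameNode. The usual command is hdfs namenode -format

For a federation, a cluster ID must also be set, and every NameNode in the federation has to be formatted with the same ID, so pass it when formatting: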

hdfs namenode -format -clusterId cui

Verification


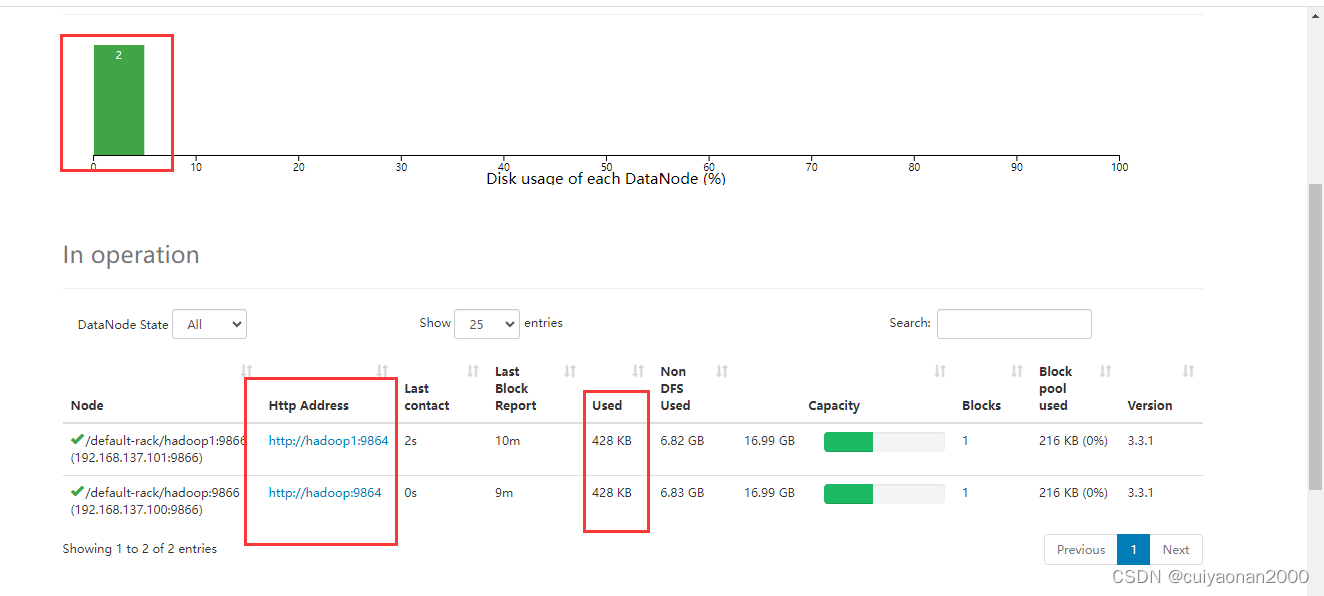

The key point is that the NameNodes share the same DataNodes, i.e. both of them show identical DataNode information.
