Lost in Machine Translation

Machine translation doesn’t generate as much excitement as other emerging areas in NLP these days, in part because consumer-facing services like Google Translate have been around since April 2006.

But recent advances, particularly Hugging Face’s work in making transformer models more accessible and easier to use, have opened up interesting new possibilities for those looking to translate more than just piecemeal sentences or articles.

For one, small-batch translation in multiple languages can now be run fairly efficiently on a desktop or laptop, without subscribing to an expensive service. Translations produced by neural machine translation models are certainly not (yet) as artful or precise as those by a skilled human translator, but in my view they get 60% or more of the job done.

This could be a huge time saver for workplaces that run on short deadlines, such as newsrooms, especially given the scarcity of skilled human translators.

Over three short notebooks, I’ll outline a simple workflow for using Hugging Face’s version of MarianMT to batch translate:

English speeches of varying lengths to Chinese

English news stories on Covid-19 (under 500 words) to Chinese

Chinese speeches of varying lengths to English.

1. DATASETS AND TRANSLATED OUTPUT

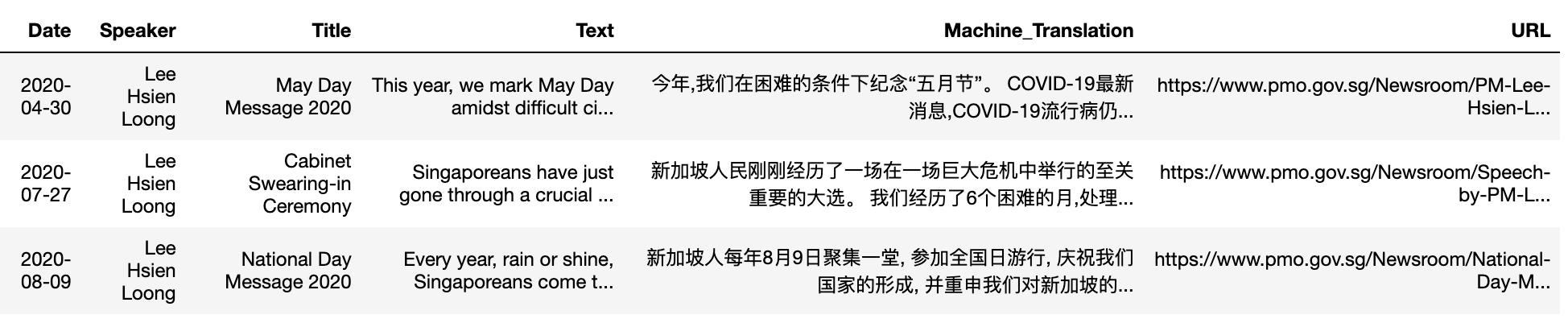

There are two datasets for this post. The first comprises 11 speeches in four languages (English, Malay, Chinese, and Tamil) taken from the website of the Singapore Prime Minister’s Office. The second consists of five random English news stories on Covid-19 published on the website of Singapore news outlet CNA in March 2020.

The output CSV files, which contain the machine-translated text alongside the original copy, can be downloaded here.

At the time of writing, you can tap more than 1,300 open-source machine translation models on Hugging Face’s model hub. As no MarianMT models for English-Malay or English-Tamil (and vice versa) have been released to date, this series of notebooks will not deal with these two languages for now. I’ll revisit them as and when models become available.

I won’t dive into the technical details behind machine translation. Broad details of Hugging Face’s version of MarianMT can be found here, while those curious about the history of machine translation can start with this fairly recent article.

2A. ENGLISH-TO-CHINESE MACHINE TRANSLATION OF 3 SPEECHES

The three English speeches I picked for machine translation into Chinese range from 1,352 to 1,750 words. They are not highly technical, but they cover a wide enough range of topics (from Covid-19 to Singapore politics and domestic concerns) to stretch the model’s capabilities.

The text was lightly cleaned. For best results, the sentences were translated one at a time. You’ll notice a significant drop in translation quality if you run an entire speech through the model without first splitting it into sentences. The notebook took just minutes to run on my late-2015 iMac (32GB RAM), though run times will vary with your hardware.

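Here is a minimal sketch of the sentence-by-sentence approach described above. It assumes the `transformers` library, with `torch` and `sentencepiece` installed, and a Helsinki-NLP OPUS-MT checkpoint named `Helsinki-NLP/opus-mt-{src}-{tgt}`. The post doesn't name the exact checkpoint, so `opus-mt-en-zh` is an assumption.

The regex splitter is a simplified stand-in for NLTK's `sent_tokenize`. All function names are illustrative and not taken from the author's notebooks. Model loading is wrapped in a function so that the splitting logic can be tried with a stub translator.

```python
import re
from typing import Callable, List

# A translator maps a batch of source sentences to a batch of translations.
Translator = Callable[[List[str]], List[str]]


def split_english_sentences(text: str) -> List[str]:
    """Naive splitter: break after '.', '!' or '?' followed by whitespace.

    A simplified stand-in for nltk.sent_tokenize; it will mis-split
    abbreviations such as "Mr." or "U.S.".
    """
    parts = re.split(r"(?<=[.!?])\s+", text.strip())
    return [p for p in parts if p]


def translate_by_sentence(text: str, translate: Translator, joiner: str = "") -> str:
    """Translate a long text one sentence at a time, then stitch it back together.

    joiner="" suits Chinese output, which doesn't put spaces between sentences.
    """
    sentences = split_english_sentences(text)
    translated = [translate([s])[0] for s in sentences]
    return joiner.join(translated)


def make_marian_translator(src: str = "en", tgt: str = "zh") -> Translator:
    """Load a MarianMT checkpoint (assumed name: Helsinki-NLP/opus-mt-{src}-{tgt})."""
    # Imported lazily so the helpers above work without transformers installed.
    from transformers import MarianMTModel, MarianTokenizer

    model_name = f"Helsinki-NLP/opus-mt-{src}-{tgt}"
    tokenizer = MarianTokenizer.from_pretrained(model_name)
    model = MarianMTModel.from_pretrained(model_name)

    def translate(sentences: List[str]) -> List[str]:
        # truncation=True clips over-long inputs to the model's maximum length.
        batch = tokenizer(sentences, return_tensors="pt", padding=True, truncation=True)
        generated = model.generate(**batch)
        return tokenizer.batch_decode(generated, skip_special_tokens=True)

    return translate
```

With the model downloaded, `translate_by_sentence(speech_text, make_marian_translator("en", "zh"))` would return a Chinese draft of a speech. Feeding the whole speech to the model in a single call skips the sentence split the post recommends, and with `truncation=True` a long input would also be clipped.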

Some of the familiar problems with machine translation are apparent right away, particularly with the literal translation of certain terms, phrases and idioms.

May Day, for instance, was translated as “五月節” (literally “May Festival”) instead of 勞動節 (Labour Day). A reference to “rugged people” was translated as “崎嶇不平的人”, which literally means “uneven people”. Clearly, the model confused the sense of “rugged” that describes terrain with the sense that describes people or a society.

Here’s a comparison with Google Translate, using a snippet of the second speech. In my view, the results from Hugging Face’s MarianMT model held up pretty well against Google’s translation:

To be sure, neither version can be used without correcting some obvious errors. Google, for instance, translated the phrase “called the election” as “打電話給這次選舉”, which literally means making a telephone call to the election. The phrasing of the translated Chinese sentences is also awkward in many instances.

A skilled human translator would definitely do a better job at this point. But I think it is fair to say that even a highly experienced translator won’t be able to translate all three speeches within minutes.

The draw of machine translation, for now, appears to be scale and relative speed rather than precision. Let’s try the same model on a different genre of writing to see how it performs.

2B. ENGLISH-TO-CHINESE MACHINE TRANSLATION OF 5 NEWS ARTICLES

Speeches are more conversational, so I picked five random news articles on Covid-19 (published in March 2020) to see how the model performs against a more formal style of writing. To keep things simple, I selected articles that are under 500 words.

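Here is a minimal, standard-library-only sketch of preparing the news dataset. The author's notebooks work on a pandas dataframe instead. The sketch:

1. Keeps articles under 500 words.
2. Applies a couple of cleaning rules.
3. Translates each headline and body separately.
4. Writes one CSV row per article.

The cleaning rules, column names and function names are hypothetical, since the post doesn't list them. `translate` stands for any text-to-text translator, ideally one that splits the body into sentences before translating.

```python
import csv
import io
import re
from typing import Callable, Dict, List, TextIO

Article = Dict[str, str]           # expects "headline" and "body" keys
Translator = Callable[[str], str]  # any str -> str translator (assumed)


def clean_text(text: str) -> str:
    """Hypothetical cleaning rules: straighten curly quotes, collapse whitespace."""
    text = text.replace("\u2019", "'").replace("\u201c", '"').replace("\u201d", '"')
    text = re.sub(r"\s+", " ", text)  # newlines, tabs, non-breaking spaces -> one space
    return text.strip()


def word_count(text: str) -> int:
    return len(text.split())


def select_short_articles(articles: List[Article], max_words: int = 500) -> List[Article]:
    """Keep only articles whose body is under max_words words."""
    return [a for a in articles if word_count(a["body"]) < max_words]


def write_translations(articles: List[Article], translate: Translator, out: TextIO) -> None:
    """Write original and translated headline/body side by side as CSV."""
    fields = ["headline", "headline_translated", "body", "body_translated"]
    writer = csv.DictWriter(out, fieldnames=fields)
    writer.writeheader()
    for article in articles:
        headline = clean_text(article["headline"])
        body = clean_text(article["body"])
        writer.writerow({
            "headline": headline,
            "headline_translated": translate(headline),
            "body": body,
            "body_translated": translate(body),
        })


if __name__ == "__main__":
    # str.upper stands in for a real translator in this demo.
    demo = [{"headline": "Shops  close\nearly", "body": "Tourists stay away."}]
    buffer = io.StringIO()
    write_translations(select_short_articles(demo), str.upper, buffer)
    print(buffer.getvalue())
```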

The workflow in this second trial is identical to the first, except for additional text cleaning rules and the translation of the articles’ headlines alongside the body text. Here’s what the output CSV file looks like:

Let’s compare one of the examples with the results from Google Translate:

Both Google and MarianMT tripped up on the opening paragraph, which was fairly long and convoluted. The two models performed slightly better on the shorter sentences/paragraphs, but the awkward literal translations of simple phrases continue to be a problem.

For instance, the phrase “as tourists stay away” was translated by the MarianMT model as “游客離家出走” or “tourists ran away from home”, while Google translated it as “游客遠離了當地” or “tourists kept away from the area”.

These issues could result in misinterpretations of factual matters, and cause confusion. I haven’t conducted a full-fledged test, but based on the trials I’ve done so far, both MarianMT and Google Translate appear to do better with text that’s more conversational in nature, as opposed to more formal forms of writing.

2C. DASH APP

Plotly has released a good number of sample interactive apps for transformer-based NLP tasks, including one that works with Hugging Face’s version of MarianMT. Try it out on Colab, or via Github (I edited the app’s headline in the demo gif below).

3. CHINESE-TO-ENGLISH MACHINE TRANSLATION OF 3 SPEECHES

Machine translation of Chinese text into English tends to be a trickier task in general, as most NLP libraries and tools are built around English. There isn’t a straightforward Chinese equivalent of NLTK’s sentence tokenizer, for instance.

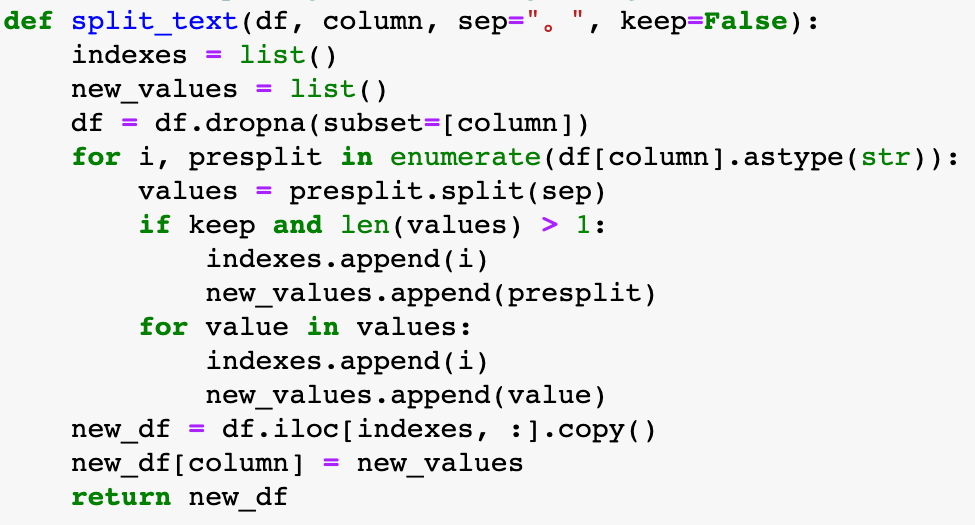

I experimented with jieba and hanlp but didn’t get very far. As a temporary workaround, I adapted a function to split the Chinese text in the dataframe column into individual sentences prior to running them via the Chinese-to-English MarianMT model.

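The post doesn't show the adapted splitting function, so what follows is only a minimal regex-based sketch of the idea. It breaks Chinese text after sentence-final punctuation (。！？ and their ASCII counterparts) and keeps any closing quotation marks with the sentence they end. It won't handle every case; ellipses, for example, are not treated as sentence boundaries. `split_chinese_sentences` is a hypothetical name.

```python
import re
from typing import List

# Sentence-final punctuation, optionally followed by closing quotes or brackets.
# The capturing group makes re.split keep the punctuation in its output.
_SENTENCE_END = re.compile(r"([。！？!?]+[”」』）)]*)")


def split_chinese_sentences(text: str) -> List[str]:
    """Split Chinese text into sentences, keeping end punctuation attached."""
    pieces = _SENTENCE_END.split(text.strip())
    sentences = []
    # re.split with one capturing group alternates: body, punct, body, punct, ..., tail
    for i in range(0, len(pieces), 2):
        body = pieces[i]
        punct = pieces[i + 1] if i + 1 < len(pieces) else ""
        sentence = (body + punct).strip()
        if sentence:
            sentences.append(sentence)
    return sentences
```

Each sentence would then be passed to a Chinese-to-English MarianMT checkpoint. `Helsinki-NLP/opus-mt-zh-en` is one candidate, though that is an assumption rather than something the post confirms. On a pandas dataframe, `df["text"].apply(split_chinese_sentences)` would give one list of sentences per row; the column name is illustrative.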

But even with the somewhat clumsy workaround, the batch translation of the three Chinese speeches took just about 5 minutes. Do note that these three speeches are the official Chinese versions of the three earlier English speeches. The two sets of speeches cover the same ground but there are some slight variations in the content of the Chinese speeches, which aren’t direct, word-for-word translations of the English versions.

You can download the output CSV file here. Let’s see how the results for the third speech compare with Google Translate:

The Google Translate version reads much better, and didn’t make the glaring error of translating “National Day” as “Fourth of July”. Overall, the results of Chinese-to-English machine translation appear (to me at least) considerably better than those for English-to-Chinese translation. One possible reason, though, is that the Chinese speeches in my sample were more simply written and did not push the neural machine translation models as hard.

4. FOOD FOR THOUGHT

While Hugging Face has made machine translation more accessible and easier to implement, some challenges remain. One obvious technical issue is the fine-tuning of neural translation models for specific markets.

For instance, China, Singapore and Taiwan differ quite significantly in their usage of written Chinese. Likewise, Bahasa Melayu and Bahasa Indonesia have noticeable differences, even if they look and sound identical to non-speakers. Assembling the right datasets for such fine-tuning won’t be a trivial task.

In terms of the results achievable with the publicly available models, I would argue that the machine-translated English-to-Chinese and Chinese-to-English texts aren’t ready for publication unless a skilled translator is on hand to check and correct them. But that’s just one use case. Where the translated text is part of a bigger internal workflow that doesn’t require publication, machine translation could come in very handy.

For instance, if I’m tasked with tracking social media disinformation campaigns by Chinese or Russian state actors, it just wouldn’t make sense to translate the torrent of tweets and Facebook posts manually. Batch machine translation of these short sentences or paragraphs would be a far more efficient way to get a broad sense of the messages being peddled by the automated accounts.

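Here is a minimal sketch of translating a large pile of short posts in fixed-size batches. It assumes `translate_batch` is any function that maps a list of strings to a list of translations, for instance a wrapper around a MarianMT tokenizer and `model.generate`. The batch size of 16 is an arbitrary assumption that would in practice be tuned to available memory, and the function names are hypothetical.

```python
from typing import Callable, Iterator, List, Sequence

BatchTranslator = Callable[[List[str]], List[str]]


def chunked(items: Sequence[str], size: int) -> Iterator[List[str]]:
    """Yield consecutive slices of at most `size` items."""
    if size < 1:
        raise ValueError("size must be >= 1")
    for start in range(0, len(items), size):
        yield list(items[start:start + size])


def translate_posts(posts: Sequence[str], translate_batch: BatchTranslator,
                    batch_size: int = 16) -> List[str]:
    """Translate short posts batch by batch, preserving input order."""
    results: List[str] = []
    for batch in chunked(posts, batch_size):
        translated = translate_batch(batch)
        # Guard against a translator that drops or merges items.
        if len(translated) != len(batch):
            raise RuntimeError("translator returned a different number of outputs")
        results.extend(translated)
    return results
```

Batching keeps memory use bounded, whereas sending thousands of posts to the model in one call could exhaust RAM. The length check catches silent misalignment between posts and their translations.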

Likewise, if you are tracking social media feedback for a new product or political campaign in a multi-lingual market, batch machine translation will likely be a far more efficient way of monitoring non-English comments collected from Instagram, Twitter or Facebook.

Ultimately, the growing use of machine translation will be driven by the broad decline of language skills, even in nominally multi-lingual societies like Singapore. You may scoff at some of the machine translated text above, but most working adults who have gone through Singapore’s bilingual education system are unlikely to do much better if asked to translate the text on their own.

The Github repo for this post can be found here. This is the fourth in a series on practical applications of new NLP tools. The earlier posts/notebooks focused on:

sentiment analysis of political speeches

text summarisation

fine tuning your own chat bot.

If you spot mistakes in this or any of my earlier posts, ping me at:

Twitter: Chua Chin Hon

LinkedIn: www.linkedin.com/in/chuachinhon

Source: https://towardsdatascience.com/lost-in-machine-translation-3b05615d68e7
